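Cinder overview

The OpenStack Block Storage service (Cinder) adds persistent storage to cloud instances. It provides the infrastructure for managing volumes and interacts with the OpenStack Compute service to supply volumes to instances. The service also enables management of volume snapshots and volume types.

Cinder components

- Cinder-API

Receives API requests and calls Cinder-Volume to carry them out.

- Cinder-Volume

The service that manages volumes. It works with the Volume Provider to manage the volume life cycle. A node running the Cinder-Volume service is called a storage node.

- Cinder-Scheduler

Selects the best storage provider node on which to create a volume. Its role is similar to that of the Nova-Scheduler component.

- Volume Provider

The storage device that holds the data and provides the physical storage space for volumes. Cinder-Volume supports many Volume Providers, each of which works with Cinder-Volume through its own driver.

- Cinder-Backup

Backs up volumes of any type to a backup storage provider. Like Cinder-Volume, it interacts with a variety of storage providers through a driver architecture.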

Cinder workflow

Install and configure the controller node

Installing Cinder

- Create the database, service credentials, and API endpoints

MariaDB [(none)]> create database cinder;

Query OK, 1 row affected (0.01 sec)

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| cinder |

| glance |

| information_schema |

| keystone |

| mysql |

| neutron |

| nova |

| nova_api |

| performance_schema |

+--------------------+

9 rows in set (0.00 sec)

MariaDB [(none)]> grant all on cinder.* to 'cinder'@'localhost' identified by 'cinder';

Query OK, 0 rows affected (0.02 sec)

MariaDB [(none)]> grant all on cinder.* to 'cinder'@'%' identified by 'cinder';

Query OK, 0 rows affected (0.00 sec)
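The statements entered interactively above can also be kept as a small repeatable script. The following is a minimal sketch, not the method used in this walkthrough: the function name `cinder_db_sql` is hypothetical, and the `IF NOT EXISTS` guard is an addition so the statements can be re-run without an error. The function only prints SQL and does not connect to MariaDB.

```shell
#!/bin/sh
# Print the SQL that creates the cinder database and grants access to it.
# cinder_db_sql is a hypothetical helper; nothing is executed against MariaDB here.
cinder_db_sql() {
    db_user=$1
    db_pass=$2
    cat <<EOF
CREATE DATABASE IF NOT EXISTS cinder;
GRANT ALL ON cinder.* TO '${db_user}'@'localhost' IDENTIFIED BY '${db_pass}';
GRANT ALL ON cinder.* TO '${db_user}'@'%' IDENTIFIED BY '${db_pass}';
EOF
}

# Same user name and password as in the session above.
cinder_db_sql cinder cinder
```

To apply the statements, the output could be piped to the client, for example `cinder_db_sql cinder cinder | mysql -uroot -p`.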

MariaDB [(none)]> exit

Bye

- Obtain admin credentials to gain access to admin-only CLI commands

[root@linux-node1 ~]# source admin-openrc

- To create the service credentials, complete these steps

Create the cinder user

[root@linux-node1 ~]# openstack user create --domain default --password-prompt cinder

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 9f17f5f2dd844ab1baf9eb894f832f50 |

| name | cinder |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the cinder user

[root@linux-node1 ~]# openstack role add --project service --user cinder admin

Create the cinder and cinderv2 service entities

[root@linux-node1 ~]# openstack service create --name cinder \

--description "OpenStack Block Storage" volume

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 67e95c224a554275bda7abea0c480d8e |

| name | cinder |

| type | volume |

+-------------+----------------------------------+

[root@linux-node1 ~]# openstack service create --name cinderv2 \

--description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | f5e92c14055647e5b2fc66e83d979109 |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

Create the Block Storage service API endpoints

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volume public http://192.168.56.11:8776/v1/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 93bc480ca65b41ca9138c2aef10e57da |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 67e95c224a554275bda7abea0c480d8e |

| service_name | cinder |

| service_type | volume |

| url | http://192.168.56.11:8776/v1/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volume internal http://192.168.56.11:8776/v1/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 25d0f74d972543fa93c137a19885796f |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 67e95c224a554275bda7abea0c480d8e |

| service_name | cinder |

| service_type | volume |

| url | http://192.168.56.11:8776/v1/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volume admin http://192.168.56.11:8776/v1/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 2459b96271ca4143ba56df8b3fca198d |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 67e95c224a554275bda7abea0c480d8e |

| service_name | cinder |

| service_type | volume |

| url | http://192.168.56.11:8776/v1/%(tenant_id)s |

+--------------+--------------------------------------------+
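The endpoint commands in this step differ only in service type, API version, and interface, so they lend themselves to a loop. The sketch below prints the commands rather than running them; `CONTROLLER` and `print_endpoint_cmds` are hypothetical names introduced for illustration, and the loop also covers the volumev2 service type used in this walkthrough.

```shell
#!/bin/sh
# Print (not run) the six endpoint-creation commands used in this section.
# CONTROLLER and print_endpoint_cmds are hypothetical names for illustration.
CONTROLLER=192.168.56.11

print_endpoint_cmds() {
    for pair in volume:v1 volumev2:v2; do
        service=${pair%%:*}   # service type, e.g. volume
        version=${pair##*:}   # API version in the URL path, e.g. v1
        for iface in public internal admin; do
            # The URL is passed as an argument, so its % is not a printf directive.
            printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
                "$service" "$iface" "http://${CONTROLLER}:8776/${version}/%\\(tenant_id\\)s"
        done
    done
}

print_endpoint_cmds
```

Reviewing the printed commands first and then piping them to `sh` (with the admin credentials loaded) keeps the step easy to audit.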

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volumev2 public http://192.168.56.11:8776/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 41e2ae3a41924287b52bb784e2d8c121 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f5e92c14055647e5b2fc66e83d979109 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://192.168.56.11:8776/v2/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volumev2 internal http://192.168.56.11:8776/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 2fdf3b33b61348328f5a112ae9ceac42 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f5e92c14055647e5b2fc66e83d979109 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://192.168.56.11:8776/v2/%(tenant_id)s |

+--------------+--------------------------------------------+

[root@linux-node1 ~]# openstack endpoint create --region RegionOne \

volumev2 admin http://192.168.56.11:8776/v2/%\(tenant_id\)s

+--------------+--------------------------------------------+

| Field | Value |

+--------------+--------------------------------------------+

| enabled | True |

| id | 99669c5db47c4fcc890f976fce07886b |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f5e92c14055647e5b2fc66e83d979109 |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://192.168.56.11:8776/v2/%(tenant_id)s |

+--------------+--------------------------------------------+

Install the Cinder packages

[root@linux-node1 ~]# yum -y install openstack-cinder

[root@linux-node1 ~]# rpm -qa openstack-cinder

openstack-cinder-9.1.4-1.el7.noarch

Configuring Cinder

- Edit /etc/cinder/cinder.conf and complete the following actions

[root@linux-node1 ~]# cp -a /etc/cinder/cinder.conf /etc/cinder/cinder.conf_$(date +%F)

[root@linux-node1 ~]# vim /etc/cinder/cinder.conf

In the [database] section, configure database access

[database]

......

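connection = mysql+pymysql://cinder:cinder@192.168.56.11/cinder

In the [DEFAULT] section, configure RabbitMQ message queue access (RABBIT_PASS stands for the password of the openstack RabbitMQ user)

[DEFAULT]

......

transport_url = rabbit://openstack:RABBIT_PASS@192.168.56.11

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

......

auth_strategy = keystone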

[keystone_authtoken]

auth_uri = http://192.168.56.11:5000

auth_url = http://192.168.56.11:35357

memcached_servers = 192.168.56.11:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = cinder

password = cinder

In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

......

lock_path = /var/lib/cinder/tmp

- Populate the Block Storage database; warning messages in the output can be ignored

[root@linux-node1 ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

[root@linux-node1 ~]# mysql -ucinder -pcinder -e "use cinder;show tables;"|wc -l

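34

- Configure Compute to use Block Storage

Edit /etc/nova/nova.conf and add the following to the [cinder] section

[root@linux-node1 ~]# vim /etc/nova/nova.conf

os_region_name = RegionOne

Finalizing the Cinder installation

- Restart the Compute API service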

[root@linux-node1 ~]# systemctl restart openstack-nova-api.service

[root@linux-node1 ~]# systemctl status openstack-nova-api.service

- Start the Block Storage services and configure them to start at boot

[root@linux-node1 ~]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

[root@linux-node1 ~]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

[root@linux-node1 ~]# systemctl status openstack-cinder-api.service openstack-cinder-scheduler.service

Install and configure the storage node

Installing the Cinder storage node (iSCSI)

- Install the supporting packages

Install LVM

[root@linux-node2 ~]# yum -y install lvm2

[root@linux-node2 ~]# rpm -qa lvm2

lvm2-2.02.171-8.el7.x86_64

Start the LVM metadata service and enable it at boot

[root@linux-node2 ~]# systemctl enable lvm2-lvmetad.service

[root@linux-node2 ~]# systemctl start lvm2-lvmetad.service

[root@linux-node2 ~]# systemctl status lvm2-lvmetad.service

- Create the LVM physical volume /dev/sdb (an additional disk must be added to the node first)

[root@linux-node2 ~]# fdisk -l /dev/sdb

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@linux-node2 ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@linux-node2 ~]# vgcreate cinder-volumes /dev/sdb

Volume group "cinder-volumes" successfully created
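Because `pvcreate` and `vgcreate` act on a raw disk, it can be worth guarding them. The sketch below is one possible wrapper, not part of the original procedure: `ensure_vg` is a hypothetical helper that assumes the lvm2 tools are installed, checks that the device exists, and skips creation when the volume group is already present.

```shell
#!/bin/sh
# Create the volume group only when the disk exists and the group is missing.
# ensure_vg is a hypothetical helper; it assumes the lvm2 tools are installed.
ensure_vg() {
    device=$1
    vg_name=$2
    if [ ! -b "$device" ]; then
        echo "error: $device is not a block device" >&2
        return 1
    fi
    # vgs exits non-zero when the named volume group does not exist.
    if vgs "$vg_name" >/dev/null 2>&1; then
        echo "volume group $vg_name already exists, nothing to do"
        return 0
    fi
    pvcreate "$device" && vgcreate "$vg_name" "$device"
}

# Example call, matching the device and group used above:
# ensure_vg /dev/sdb cinder-volumes
```

The example call is left commented out so that sourcing the file has no side effects.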

[root@linux-node2 ~]# vgdisplay

--- Volume group ---

VG Name cinder-volumes

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <20.00 GiB

PE Size 4.00 MiB

Total PE 5119

Alloc PE / Size 0 / 0

Free PE / Size 5119 / <20.00 GiB

VG UUID KDglRs-1MjO-9ow0-umIx-5x02-eWzk-FAFpme

- Edit /etc/lvm/lvm.conf and complete the following actions

[root@linux-node2 ~]# cp -a /etc/lvm/lvm.conf /etc/lvm/lvm.conf_$(date +%F)

[root@linux-node2 ~]# vim /etc/lvm/lvm.conf

In the devices section, add a filter that accepts the /dev/sdb device and rejects all other devices

devices {

filter = [ "a/sdb/", "r/.*/"]

}

- Install the Cinder packages

[root@linux-node2 ~]# yum -y install openstack-cinder targetcli python-keystone

[root@linux-node2 ~]# rpm -qa openstack-cinder targetcli python-keystone

python-keystone-10.0.3-1.el7.noarch

openstack-cinder-9.1.4-1.el7.noarch

targetcli-2.1.fb46-1.el7.noarch

Configuring the Cinder storage node (iSCSI)

- Edit /etc/cinder/cinder.conf and complete the following actions

[root@linux-node2 ~]# cp -a /etc/cinder/cinder.conf /etc/cinder/cinder.conf_$(date +%F)

[root@linux-node2 ~]# vim /etc/cinder/cinder.conf

In the [database] section, configure database access

[database]

......

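connection = mysql+pymysql://cinder:cinder@192.168.56.11/cinder

In the [DEFAULT] section, configure RabbitMQ message queue access (RABBIT_PASS stands for the password of the openstack RabbitMQ user)

[DEFAULT]

......

transport_url = rabbit://openstack:RABBIT_PASS@192.168.56.11

In the [DEFAULT] section, set the iscsi_ip_address option to the IP address of the storage node

[DEFAULT]

......

iscsi_ip_address = 192.168.56.12

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

......

auth_strategy = keystone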

[keystone_authtoken]

auth_uri = http://192.168.56.11:5000

auth_url = http://192.168.56.11:35357

memcached_servers = 192.168.56.11:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = cinder

password = cinder

In the [lvm] section, configure the LVM backend with the LVM driver, the cinder-volumes volume group, the iSCSI protocol, and the appropriate iSCSI helper. If the [lvm] section does not exist, create it

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

volume_backend_name = Iscsi-Storage

In the [DEFAULT] section, enable the LVM backend

[DEFAULT]

......

enabled_backends = lvm

In the [DEFAULT] section, configure the location of the Image service API

[DEFAULT]

......

glance_api_servers = http://192.168.56.11:9292

In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

......

lock_path = /var/lib/cinder/tmp

Finalizing the Cinder storage node (iSCSI) installation
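Before the services are started, the values edited in /etc/cinder/cinder.conf can be read back to catch typos. The following is a rough sketch of such a check: `ini_get` is a hypothetical helper written for this illustration (it is not an OpenStack tool), and it is demonstrated on a throwaway file holding a few of the settings from this section rather than on the real configuration.

```shell
#!/bin/sh
# Read one option from an INI-style file such as /etc/cinder/cinder.conf.
# ini_get is a hypothetical helper written for this sketch, not an OpenStack tool.
ini_get() {
    file=$1 section=$2 key=$3
    awk -v section="$section" -v key="$key" '
        /^[ \t]*[#;]/ { next }    # skip comment lines
        /^\[.*\]/ {               # remember which [section] we are in
            current = substr($0, 2, index($0, "]") - 2)
            next
        }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            name = substr($0, 1, eq - 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            if (name == key) {
                value = substr($0, eq + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value       # first match wins
                exit
            }
        }
    ' "$file"
}

# Demonstration on a temporary file with settings from this section.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[DEFAULT]
enabled_backends = lvm
[lvm]
volume_group = cinder-volumes
iscsi_helper = lioadm
EOF
ini_get "$conf" DEFAULT enabled_backends
ini_get "$conf" lvm volume_group
rm -f "$conf"
```

On the storage node the same call would take the real path, for example `ini_get /etc/cinder/cinder.conf lvm volume_backend_name`.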

- Start the Block Storage volume service and its dependencies, and configure them to start at boot

[root@linux-node2 ~]# systemctl enable openstack-cinder-volume.service target.service

[root@linux-node2 ~]# systemctl start openstack-cinder-volume.service target.service

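[root@linux-node2 ~]# systemctl status openstack-cinder-volume.service target.service

Verifying the Cinder storage node (iSCSI)

- Obtain admin credentials to gain access to admin-only CLI commands

[root@linux-node1 ~]# source admin-openrc

- List the service components to verify that each process started successfully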

[root@linux-node1 ~]# openstack volume service list

+------------------+-----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-----------------+------+---------+-------+----------------------------+

| cinder-scheduler | linux-node1 | nova | enabled | up | 2018-01-26T08:23:54.000000 |

| cinder-volume | linux-node2@lvm | nova | enabled | up | 2018-01-26T08:24:01.000000 |

+------------------+-----------------+------+---------+-------+----------------------------+

- Create a volume type

[root@linux-node1 ~]# cinder type-create ISCSI

+--------------------------------------+-------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+-------+-------------+-----------+

| b5d6024e-7ba7-43ac-a034-beb89182bd1e | ISCSI | - | True |

+--------------------------------------+-------+-------------+-----------+

- Associate the volume type with the storage backend

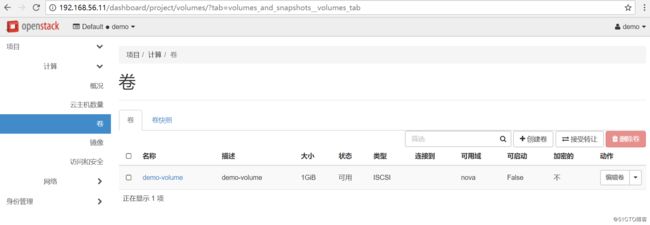

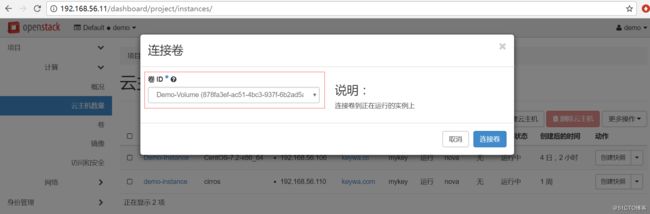

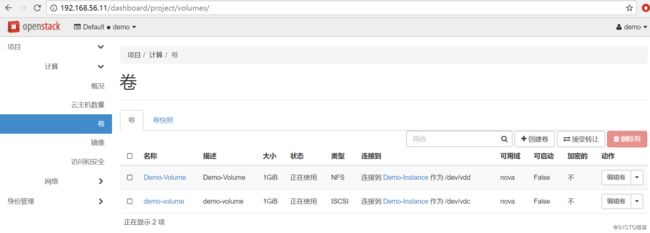

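[root@linux-node1 ~]# cinder type-key ISCSI set volume_backend_name=Iscsi-Storage

- Open 192.168.56.11/dashboard and log in as the demo user. Once a volume of the ISCSI type has been created and attached to the instance (it appears there as /dev/vdc), format and mount it inside the instance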

[root@demo-instance ~]# fdisk -l /dev/vdc

Disk /dev/vdc: 1073 MB, 1073741824 bytes, 2097152 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@demo-instance ~]# mkfs.xfs /dev/vdc

[root@demo-instance ~]# blkid /dev/vdc

/dev/vdc: UUID="803f6ef5-5496-4943-94c3-b9a067cfa2a1" TYPE="xfs"

[root@demo-instance ~]# mkdir /data

[root@demo-instance ~]# ls -ld /data/

drwxr-xr-x 2 root root 6 Jan 26 17:35 /data/

[root@demo-instance ~]# echo "UUID=803f6ef5-5496-4943-94c3-b9a067cfa2a1 /data xfs defaults 0 0" >> /etc/fstab

[root@demo-instance ~]# tail -1 /etc/fstab

UUID=803f6ef5-5496-4943-94c3-b9a067cfa2a1 /data xfs defaults 0 0
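Appending to /etc/fstab with `echo ... >>` adds a duplicate line every time the command is repeated. A small guard avoids that. The sketch below is an illustration only: `add_fstab_entry` is a hypothetical helper, and the fstab path is a parameter so the function can be tried on a copy before it touches /etc/fstab.

```shell
#!/bin/sh
# Append a mount entry to an fstab file only if its UUID is not listed yet.
# add_fstab_entry is a hypothetical helper; the fstab path is a parameter so
# the function can be tried on a copy before touching /etc/fstab.
add_fstab_entry() {
    fstab=$1 uuid=$2 mountpoint=$3 fstype=$4
    if grep -q "^UUID=${uuid}[[:space:]]" "$fstab" 2>/dev/null; then
        return 0    # already present, leave the file unchanged
    fi
    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mountpoint" "$fstype" >> "$fstab"
}

# On the instance, the UUID could be taken from blkid:
# add_fstab_entry /etc/fstab "$(blkid -s UUID -o value /dev/vdc)" /data xfs
```

Calling the function twice with the same UUID leaves a single entry in the file.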

[root@demo-instance ~]# mount -a

[root@demo-instance ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 10G 1.1G 9.0G 11% /

devtmpfs 236M 0 236M 0% /dev

tmpfs 245M 0 245M 0% /dev/shm

tmpfs 245M 13M 233M 6% /run

tmpfs 245M 0 245M 0% /sys/fs/cgroup

tmpfs 49M 0 49M 0% /run/user/0

/dev/vdc 1014M 33M 982M 4% /data

Installing the Cinder storage node (NFS)

- Install the supporting packages

Install nfs-utils and rpcbind

[root@linux-node3 ~]# yum -y install nfs-utils rpcbind

[root@linux-node3 ~]# rpm -qa nfs-utils rpcbind

rpcbind-0.2.0-42.el7.x86_64

nfs-utils-1.3.0-0.48.el7_4.1.x86_64

- Start the NFS services and enable them at boot

[root@linux-node3 ~]# systemctl enable rpcbind nfs

[root@linux-node3 ~]# systemctl start rpcbind

[root@linux-node3 ~]# systemctl start nfs

[root@linux-node3 ~]# systemctl status rpcbind nfs

- Create the shared directory and set its ownership

[root@linux-node3 ~]# mkdir /data/nfs -p

[root@linux-node3 ~]# chown -R nfsnobody:nfsnobody /data/nfs/

[root@linux-node3 ~]# ls -ld /data/nfs/

drwxr-xr-x. 2 nfsnobody nfsnobody 6 Jan 30 14:01 /data/nfs/

[root@linux-node3 ~]# vim /etc/exports

/data/nfs *(rw,sync,all_squash)

[root@linux-node3 ~]# exportfs -rv

exporting *:/data/nfs

[root@linux-node3 ~]# showmount -e localhost

Export list for localhost:

/data/nfs *

- Install the Cinder packages

[root@linux-node3 ~]# yum -y install openstack-cinder targetcli python-keystone

[root@linux-node3 ~]# rpm -qa openstack-cinder targetcli python-keystone

openstack-cinder-9.1.4-1.el7.noarch

python-keystone-10.0.3-1.el7.noarch

targetcli-2.1.fb46-1.el7.noarch

Configuring the Cinder storage node (NFS)

- Edit /etc/cinder/cinder.conf and complete the following actions

[root@linux-node3 ~]# cp -a /etc/cinder/cinder.conf /etc/cinder/cinder.conf_$(date +%F)

[root@linux-node3 ~]# vim /etc/cinder/cinder.conf

In the [database] section, configure database access

[database]

......

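connection = mysql+pymysql://cinder:cinder@192.168.56.11/cinder

In the [DEFAULT] section, configure RabbitMQ message queue access (RABBIT_PASS stands for the password of the openstack RabbitMQ user)

[DEFAULT]

......

transport_url = rabbit://openstack:RABBIT_PASS@192.168.56.11

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access

[DEFAULT]

......

auth_strategy = keystone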

[keystone_authtoken]

auth_uri = http://192.168.56.11:5000

auth_url = http://192.168.56.11:35357

memcached_servers = 192.168.56.11:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = cinder

password = cinder

In the [nfs] section, configure the NFS backend, including the NFS driver. If the [nfs] section does not exist, create it

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

nfs_mount_point_base = $state_path/mnt

volume_backend_name = Nfs-Storage

In the [DEFAULT] section, enable the NFS backend

[DEFAULT]

......

enabled_backends = nfs

In the [DEFAULT] section, configure the location of the Image service API

[DEFAULT]

......

glance_api_servers = http://192.168.56.11:9292

In the [oslo_concurrency] section, configure the lock path

[oslo_concurrency]

......

lock_path = /var/lib/cinder/tmp

- Create the shares file and set its permissions

[root@linux-node3 ~]# echo "192.168.56.13:/data/nfs" >> /etc/cinder/nfs_shares

[root@linux-node3 ~]# tail -1 /etc/cinder/nfs_shares

192.168.56.13:/data/nfs

[root@linux-node3 ~]# chown root:cinder /etc/cinder/nfs_shares

[root@linux-node3 ~]# chmod 640 /etc/cinder/nfs_shares

[root@linux-node3 ~]# ls -l /etc/cinder/nfs_shares
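A quick format check of /etc/cinder/nfs_shares before the volume service is started may save a debugging round. The sketch below assumes each entry begins with HOST:/absolute/path, as the entry above does; `check_nfs_shares` is a hypothetical helper, it ignores blank lines and lines starting with `#`, and it does not handle IPv6 host literals.

```shell
#!/bin/sh
# Report lines in an nfs_shares file whose first field is not HOST:/absolute/path.
# check_nfs_shares is a hypothetical helper; blank lines and # comments are ignored.
check_nfs_shares() {
    awk '
        /^[ \t]*#/ { next }
        /^[ \t]*$/ { next }
        $1 !~ "^[^:/ ]+:/[^ ]*$" {
            printf "line %d looks malformed: %s\n", NR, $0
            bad = 1
        }
        END { exit bad }
    ' "$1"
}

# Example call on the storage node:
# check_nfs_shares /etc/cinder/nfs_shares && echo "nfs_shares looks fine"
```

The function exits non-zero when any line fails the check, so it can gate a service start in a script.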

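-rw-r-----. 1 root cinder 24 Jan 30 13:23 /etc/cinder/nfs_shares

Finalizing the Cinder storage node (NFS) installation

- Start the Block Storage volume service and its dependencies, and configure them to start at boot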

[root@linux-node3 ~]# systemctl enable openstack-cinder-volume.service target.service

[root@linux-node3 ~]# systemctl start openstack-cinder-volume.service target.service

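[root@linux-node3 ~]# systemctl status openstack-cinder-volume.service target.service

Verifying the Cinder storage node (NFS)

- Obtain admin credentials to gain access to admin-only CLI commands

[root@linux-node1 ~]# source admin-openrc

- List the service components to verify that each process started successfully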

[root@linux-node1 ~]# openstack volume service list

+------------------+-----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-----------------+------+---------+-------+----------------------------+

| cinder-scheduler | linux-node1 | nova | enabled | up | 2018-01-30T05:20:43.000000 |

| cinder-volume | linux-node2@lvm | nova | enabled | up | 2018-01-30T05:20:44.000000 |

| cinder-volume | linux-node3@nfs | nova | enabled | down | 2018-01-30T05:15:42.000000 |

+------------------+-----------------+------+---------+-------+----------------------------+

- Create a volume type

[root@linux-node1 ~]# cinder type-create NFS

+--------------------------------------+------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+------+-------------+-----------+

| 5940443d-bb0a-4682-88ca-c4acda79ab41 | NFS | - | True |

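+--------------------------------------+------+-------------+-----------+

- Associate the volume type with the storage backend

[root@linux-node1 ~]# cinder type-key NFS set volume_backend_name=Nfs-Storage

- Open 192.168.56.11/dashboard and log in as the demo user. Once a volume of the NFS type has been created and attached to the instance (it appears there as /dev/vdd), format and mount it inside the instance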

[root@demo-instance ~]# fdisk -l /dev/vdd

Disk /dev/vdd: 1073 MB, 1073741824 bytes, 2097152 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@demo-instance ~]# mkfs.xfs /dev/vdd

[root@demo-instance ~]# blkid /dev/vdd

/dev/vdd: UUID="e01c75ca-fc90-4886-ab5c-0d3947bed1cd" TYPE="xfs"

[root@demo-instance ~]# mkdir /nfs

[root@demo-instance ~]# ls -ld /nfs/

drwxr-xr-x 2 root root 6 Jan 30 14:13 /nfs/

[root@demo-instance ~]# echo "UUID=e01c75ca-fc90-4886-ab5c-0d3947bed1cd /nfs xfs defaults 0 0" >> /etc/fstab

[root@demo-instance ~]# tail -1 /etc/fstab

UUID=e01c75ca-fc90-4886-ab5c-0d3947bed1cd /nfs xfs defaults 0 0

[root@demo-instance ~]# mount -a

[root@demo-instance ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 10G 1.1G 9.0G 11% /

devtmpfs 236M 0 236M 0% /dev

tmpfs 245M 0 245M 0% /dev/shm

tmpfs 245M 13M 233M 6% /run

tmpfs 245M 0 245M 0% /sys/fs/cgroup

/dev/vdc 1014M 33M 982M 4% /data

tmpfs 49M 0 49M 0% /run/user/0

/dev/vdd 1014M 33M 982M 4% /nfs