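Because of network issues in mainland China, it is advisable to work from an offline copy of the mnist.npz dataset. (This is the handwritten-digit dataset that nearly every deep learning and neural network tutorial uses.)

After downloading it, open mnist.py under C:\ProgramData\Anaconda3\Lib\site-packages\keras\datasets in Notepad++ and change the origin path to the actual location of mnist.npz.

1. Importing the data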

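    import numpy as np

    # Load the offline copy of MNIST; adjust the path to where mnist.npz was saved
    path = 'c:/data/mnist.npz'
    f = np.load(path)
    X_train, y_train = f['x_train'], f['y_train']
    X_test, y_test = f['x_test'], f['y_test']
    f.close()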
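As a minimal sketch of the same loading step, the archive can also be opened with a context manager so that it is closed automatically. The helper name `load_mnist_npz` and the small stand-in archive written to a temporary directory are assumptions made only so the example runs without the real download; the four keys are the ones used above.

```python
import os
import tempfile

import numpy as np


def load_mnist_npz(path):
    """Return ((X_train, y_train), (X_test, y_test)) from an mnist.npz-style archive."""
    # The context manager closes the archive even if a key is missing.
    with np.load(path) as f:
        return (f['x_train'], f['y_train']), (f['x_test'], f['y_test'])


# Hypothetical stand-in archive so the sketch runs without the real file.
demo_path = os.path.join(tempfile.mkdtemp(), 'mnist_demo.npz')
np.savez(demo_path,
         x_train=np.zeros((6, 28, 28), dtype=np.uint8),
         y_train=np.arange(6, dtype=np.uint8),
         x_test=np.zeros((2, 28, 28), dtype=np.uint8),
         y_test=np.array([3, 7], dtype=np.uint8))

(X_demo_train, y_demo_train), (X_demo_test, y_demo_test) = load_mnist_npz(demo_path)
print(X_demo_train.shape, y_demo_train.shape)
```

With the real file, the call would simply be `load_mnist_npz('c:/data/mnist.npz')`.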

2. Viewing the images

You can display the first N images; here we choose 9.

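    import matplotlib.pyplot as plt

    # Show the first 9 training images in a 3x3 grid, each titled with its label
    for i in range(9):
        plt.subplot(3, 3, i+1)
        plt.imshow(X_train[i], cmap='gray', interpolation='none')
        plt.title("Class {}".format(y_train[i]))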

3. Checking the number of images

    # Add a trailing channel axis: (N, 28, 28) -> (N, 28, 28, 1)
    X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)
    X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)
    print('X_train shape:', X_train.shape)
    print('X_test shape:', X_test.shape)
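The reshape is needed because convolution layers using the default channels-last layout expect input of shape (batch, height, width, channels), and grayscale images have a single channel. A small sketch on a hypothetical batch of blank images (not the real data) shows the effect, together with `np.expand_dims` as an equivalent alternative:

```python
import numpy as np

# Hypothetical batch of five blank 28x28 grayscale images.
images = np.zeros((5, 28, 28), dtype=np.uint8)

# Same operation as in the tutorial, written for an arbitrary batch.
with_channel = images.reshape(images.shape[0], 28, 28, 1)

# np.expand_dims is an equivalent way to append the channel axis.
also_with_channel = np.expand_dims(images, axis=-1)

print(with_channel.shape)
print(also_with_channel.shape)
```

The first element of the printed shape is the number of images, which is what this step is meant to check.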

4. Inspecting the training data set

Use seaborn to look at how the y values (the digit labels) are distributed in the training set.

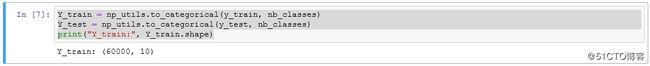
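The plotting call itself is not shown here. As a numeric counterpart to such a bar plot, the per-class counts can be sketched with NumPy alone. The helper name `class_counts` and the ten sample labels are hypothetical; with the real data one would pass `y_train` instead.

```python
import numpy as np


def class_counts(labels, num_classes=10):
    """Count how many samples fall into each class 0..num_classes-1."""
    return np.bincount(np.asarray(labels), minlength=num_classes)


# Hypothetical labels, used only to make the sketch runnable.
demo_labels = np.array([5, 0, 4, 1, 9, 2, 1, 3, 1, 4])
counts = class_counts(demo_labels)
for digit, count in enumerate(counts):
    print(digit, count)
```

Roughly equal counts per digit indicate a balanced training set, so plain accuracy is a reasonable metric.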

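    from keras.utils import np_utils

    nb_classes = 10  # MNIST has ten digit classes, 0-9

    # One-hot encode the integer labels for the softmax output layer
    Y_train = np_utils.to_categorical(y_train, nb_classes)
    Y_test = np_utils.to_categorical(y_test, nb_classes)
    print("Y_train:", Y_train.shape)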

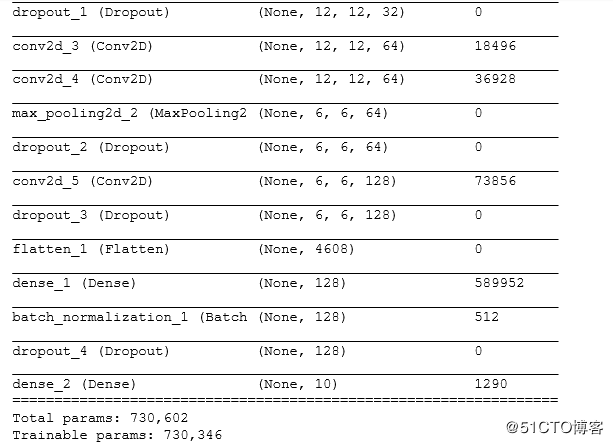
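To make the one-hot step concrete, here is a minimal NumPy sketch of what this kind of encoding produces. The function `one_hot` is a hypothetical stand-in written for illustration, not the Keras implementation.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Turn integer labels into rows with a single 1.0 at the label's index."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.shape[0], num_classes), dtype='float32')
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded


demo = one_hot([3, 0, 9], num_classes=10)
print(demo.shape)
print(demo[0])
```

Each label becomes a row of length 10, which is why the label array gains a second dimension of size `nb_classes`.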

5. Defining the neural network

    import keras
    from keras.models import Sequential
    from keras.layers import Conv2D, MaxPool2D, Dropout, Flatten, Dense, BatchNormalization

    input_shape = (28, 28, 1)  # matches the reshaped images from step 3

    model = Sequential()
    # Block 1: two 3x3 convolutions, then pooling and dropout
    model.add(Conv2D(32, kernel_size=(3,3), activation='relu', kernel_initializer='he_normal', input_shape=input_shape))
    model.add(Conv2D(32, kernel_size=(3,3), activation='relu', kernel_initializer='he_normal'))
    model.add(MaxPool2D(2,2))
    model.add(Dropout(0.2))
    # Block 2: two 3x3 convolutions with 'same' padding, then pooling and dropout
    model.add(Conv2D(64, kernel_size=(3,3), activation='relu', kernel_initializer='he_normal', padding='same'))
    model.add(Conv2D(64, kernel_size=(3,3), activation='relu', kernel_initializer='he_normal', padding='same'))
    model.add(MaxPool2D(2,2))
    model.add(Dropout(0.25))
    # Block 3: one more convolution before the classifier head
    model.add(Conv2D(128, kernel_size=(3,3), activation='relu', kernel_initializer='he_normal', padding='same'))
    model.add(Dropout(0.25))
    # Classifier head
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.25))
    model.add(Dense(nb_classes, activation='softmax'))

    model.compile(loss=keras.losses.categorical_crossentropy,
                  optimizer=keras.optimizers.Adam(),
                  metrics=['accuracy'])
    model.summary()
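To see where the size of the flattened layer comes from, the spatial dimensions can be traced by hand. The sketch below is plain Python and assumes stride-1 convolutions, 'valid' padding where none is specified, and 2x2 non-overlapping pooling; the helper names are hypothetical. Dropout layers are omitted because they do not change the shape.

```python
def conv_size(size, kernel, padding):
    """Output width/height of a stride-1 convolution."""
    if padding == 'same':
        return size
    return size - kernel + 1  # 'valid' padding


def trace_shapes(size=28):
    """Follow the (height, width, channels) shape through the conv/pool layers."""
    # (layer kind, filters or pool size, padding) in the order they are added.
    layers = [
        ('conv', 32, 'valid'), ('conv', 32, 'valid'), ('pool', 2, None),
        ('conv', 64, 'same'), ('conv', 64, 'same'), ('pool', 2, None),
        ('conv', 128, 'same'),
    ]
    channels = 1
    shapes = []
    for kind, value, padding in layers:
        if kind == 'conv':
            size = conv_size(size, 3, padding)
            channels = value
        else:
            size = size // value  # non-overlapping max pooling
        shapes.append((size, size, channels))
    return shapes


shapes = trace_shapes()
for shape in shapes:
    print(shape)
height, width, channels = shapes[-1]
flattened = height * width * channels
print('Flatten output:', flattened)
```

The per-layer shapes printed here should agree with the output shapes listed by `model.summary()`.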

6. Training the model

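    from keras.callbacks import ModelCheckpoint
    from keras.preprocessing.image import ImageDataGenerator

    # Standardize inputs with the mean and std computed over the training set
    datagen = ImageDataGenerator(featurewise_center=True,
                                 featurewise_std_normalization=True)
    datagen.fit(X_train)

    filepath = 'model.hdf5'
    # monitor: track the accuracy on validation_data after every epoch
    # save_best_only: keep only the best model seen so far
    checkpointer = ModelCheckpoint(filepath, monitor='val_acc',
                                   save_best_only=True, mode='max')

    # steps_per_epoch sets how many batches make up one epoch; with batch_size=500,
    # len(X_train) // 1000 gives 60 batches, i.e. half of the training set per epoch.
    # epochs must be set to the desired number of epochs beforehand.
    h = model.fit_generator(datagen.flow(X_train, Y_train, batch_size=500),
                            steps_per_epoch=len(X_train) // 1000,
                            epochs=epochs,
                            validation_data=datagen.flow(X_test, Y_test, batch_size=len(X_test)),
                            validation_steps=1,
                            callbacks=[checkpointer])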

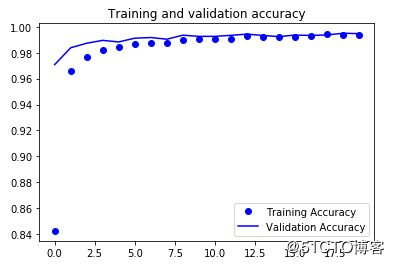
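The two `featurewise_*` options amount to subtracting a dataset-wide mean and dividing by a dataset-wide standard deviation. A rough NumPy sketch of that idea follows. The function names, the random stand-in images and the small `eps` constant are assumptions made for illustration, and the sketch is not the generator's actual implementation.

```python
import numpy as np


def fit_featurewise(x):
    """Per-channel mean and std over all images, rows and columns."""
    x = np.asarray(x, dtype='float32')
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    std = x.std(axis=(0, 1, 2), keepdims=True)
    return mean, std


def standardize(x, mean, std, eps=1e-6):
    """Center and scale a batch with statistics computed on the training set."""
    return (np.asarray(x, dtype='float32') - mean) / (std + eps)


# Hypothetical random "images" standing in for X_train and X_test.
rng = np.random.default_rng(0)
train = rng.integers(0, 256, size=(100, 28, 28, 1)).astype('float32')
test = rng.integers(0, 256, size=(20, 28, 28, 1)).astype('float32')

mean, std = fit_featurewise(train)
train_std = standardize(train, mean, std)
test_std = standardize(test, mean, std)  # reuse the training statistics
print(float(train_std.mean()), float(train_std.std()))
```

The point to carry over is that the statistics come from the training set only and are then reused for any data the model sees later.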

You can use pyplot to plot how the model's accuracy on the training and test sets changes over the epochs.

    history = h.history
    # Older Keras releases store accuracy under 'acc'/'val_acc'; newer ones use 'accuracy'/'val_accuracy'
    accuracy = history['acc']
    val_accuracy = history['val_acc']
    loss = history['loss']
    val_loss = history['val_loss']
    epochs = range(len(accuracy))

    # Dots: training accuracy; solid line: validation accuracy
    plt.plot(epochs, accuracy, 'bo', label='Training Accuracy')
    plt.plot(epochs, val_accuracy, 'b', label='Validation Accuracy')
    plt.title('Training and validation accuracy')
    plt.legend()
    plt.show()

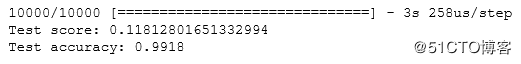
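The same history dictionary also tells you which epoch the checkpoint saved with `save_best_only` and `mode='max'` corresponds to: the first epoch that reached the highest monitored value. A small sketch in plain Python, where `best_epoch` is a hypothetical helper and the numbers are made up rather than results from the model above:

```python
def best_epoch(history, key='val_acc'):
    """Return (epoch index, value) of the highest value recorded under key."""
    values = history[key]
    index = max(range(len(values)), key=lambda i: values[i])
    return index, values[index]


# Hypothetical history values, not results from the model above.
demo_history = {'acc': [0.91, 0.97, 0.98, 0.99],
                'val_acc': [0.95, 0.985, 0.981, 0.984]}
epoch, value = best_epoch(demo_history)
print('best epoch (0-based):', epoch, 'val_acc:', value)
```

With the real run, one would pass `h.history` instead of `demo_history`.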

7. Evaluating the model

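    from keras.models import load_model

    # Reload the best checkpoint written during training
    model = load_model('model.hdf5')
    # The network was trained on datagen-standardized inputs, so standardize the test images the same way
    X_test_std = datagen.standardize(X_test.astype('float32'))
    score = model.evaluate(X_test_std, Y_test)
    print('Test score:', score[0])      # loss
    print('Test accuracy:', score[1])   # accuracy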
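The two numbers in `score` are the loss the model was compiled with (categorical cross-entropy) and the accuracy metric. As a hedged illustration of what they measure, both can be computed by hand with NumPy on a tiny made-up example. The function names, the `eps` clipping value and the sample numbers are assumptions for this sketch.

```python
import numpy as np


def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean cross-entropy between one-hot targets and predicted probabilities."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(np.sum(y_true * np.log(y_prob), axis=1)))


def accuracy(y_true, y_prob):
    """Fraction of rows whose most probable class matches the target."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_prob, axis=1)))


# Hypothetical three-class example with two samples.
targets = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
probs = np.array([[0.5, 0.25, 0.25], [0.6, 0.3, 0.1]])
demo_loss = categorical_crossentropy(targets, probs)
demo_acc = accuracy(targets, probs)
print(demo_loss, demo_acc)
```

A lower loss and a higher accuracy both indicate better predictions, but the loss also reflects how confident the model was in each prediction.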

8. Comparing predictions with the true labels

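    # Predict a class for every test image (inputs standardized as in training),
    # then collect the indices of correct and incorrect predictions
    predicted_classes = model.predict_classes(datagen.standardize(X_test.astype('float32')))
    correct_indices = np.nonzero(predicted_classes == y_test)[0]
    incorrect_indices = np.nonzero(predicted_classes != y_test)[0]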

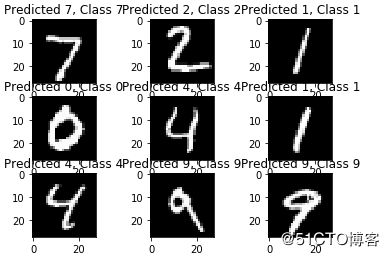
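The index bookkeeping can be tried out without a trained model. The following sketch uses made-up softmax outputs for four samples and takes the most probable class per row, which for a softmax classifier is what a class-prediction call boils down to. All values and variable names here are hypothetical.

```python
import numpy as np

# Hypothetical softmax outputs for four samples and three classes.
probabilities = np.array([[0.8, 0.1, 0.1],
                          [0.2, 0.7, 0.1],
                          [0.3, 0.3, 0.4],
                          [0.6, 0.3, 0.1]])
true_labels = np.array([0, 1, 1, 0])

# Take the most probable class per row.
predicted = np.argmax(probabilities, axis=-1)

# np.nonzero returns a tuple of index arrays, hence the [0].
correct = np.nonzero(predicted == true_labels)[0]
incorrect = np.nonzero(predicted != true_labels)[0]
print(predicted, correct, incorrect)
```

The resulting index arrays can be used directly to pick out the matching images and labels.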

8.1 Plotting correct predictions

    plt.figure()
    for i, correct in enumerate(correct_indices[:9]):
        plt.subplot(3,3,i+1)
        plt.imshow(X_test[correct].reshape(28, 28), cmap='gray', interpolation='none')
        plt.title("Predicted {}, Class {}".format(predicted_classes[correct], y_test[correct]))

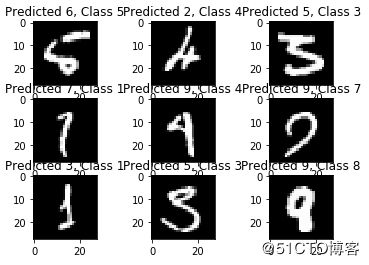

8.2 Plotting incorrect predictions

    plt.figure()
    for i, incorrect in enumerate(incorrect_indices[:9]):
        plt.subplot(3,3,i+1)
        plt.imshow(X_test[incorrect].reshape(28, 28), cmap='gray', interpolation='none')
        plt.title("Predicted {}, Class {}".format(predicted_classes[incorrect], y_test[incorrect]))