I. Introduction to keepalived

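keepalived is software that makes distributed deployments highly available. Used together with LVS (Linux Virtual Server), it removes the single point of failure that a lone machine represents.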

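keepalived is a high-availability solution for IPVS built on the VRRP protocol. In an LVS load-balancing setup, if the front-end scheduler (the director) fails, the back-end real servers can no longer receive or answer requests, so keeping the director highly available is critical. keepalived addresses exactly this kind of single point of failure, such as a failed LVS director. It works as follows: keepalived runs on two servers, one as MASTER and the other as BACKUP. Under normal conditions the MASTER handles all packet forwarding and answers all ARP requests. As soon as the MASTER fails, the BACKUP takes over its work, and the switchover is very fast.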
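To put a rough number on "very fast": in VRRPv2 (RFC 3768), a BACKUP declares the MASTER dead after Master_Down_Interval = 3 × Advertisement_Interval + Skew_Time, where Skew_Time = (256 - Priority) / 256 seconds. The sketch below only evaluates that formula. The function name master_down_interval is made up for illustration, and the sample values (advert_int 1, priority 100) are the ones the BACKUP instances use in the configuration later in this article. Real takeover time also depends on the keepalived version and on how quickly gratuitous ARPs propagate, so treat the result as an estimate.

```shell
#!/bin/sh
# master_down_interval ADVERT_INT PRIORITY
# Prints the VRRPv2 master-down interval in seconds (RFC 3768 formula).
# Hypothetical helper for illustration; not part of keepalived.
master_down_interval() {
    awk -v adv="$1" -v prio="$2" 'BEGIN {
        skew = (256 - prio) / 256          # Skew_Time in seconds
        printf "%.3f\n", 3 * adv + skew    # three missed adverts plus skew
    }'
}

master_down_interval 1 100   # prints 3.609
```

With a one-second advertisement interval, a BACKUP at priority 100 should therefore start its transition roughly 3.6 seconds after the last advertisement it received.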

II. Test environment

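The tests below use four virtual machines running CentOS 6.6 x86_64. In LVS NAT mode only the two front-end keepalived directors have public IPs; the back-end real servers have internal IPs only. The roles and IP addresses are as follows: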

| Server role | Public IP | Internal IP |
| --- | --- | --- |
| LVS VIP1 | 192.168.214.70 | 192.168.211.254 |
| LVS VIP2 | 192.168.214.71 | 192.168.211.253 |
| Keepalived host1 | 192.168.214.76 | 192.168.211.76 |
| Keepalived host2 | 192.168.214.77 | 192.168.211.77 |
| Realserver A | | 192.168.211.79 |
| Realserver B | | 192.168.211.83 |

The topology is shown below.

III. Software installation

1. Install ipvsadm, the package LVS requires

yum install -y ipvsadm

ln -s /usr/src/kernels/`uname -r` /usr/src/linux

lsmod |grep ip_vs

# Note: on CentOS 6.x, use ipvsadm 1.26 for LVS, and install its dependencies first: yum install libnl* popt* -y

Run ipvsadm (or modprobe ip_vs) to load the ip_vs module into the kernel:

[root@test85 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

# "IP Virtual Server version 1.2.1" is the version of the ip_vs kernel module
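A plain grep for ip_vs also matches scheduler modules such as ip_vs_rr, so a stricter check can be useful in scripts. The following is a minimal sketch, assuming lsmod-style output on stdin; the helper name ip_vs_loaded is hypothetical.

```shell
#!/bin/sh
# ip_vs_loaded: reads lsmod-style output on stdin and succeeds only if a
# module named exactly "ip_vs" is listed (ip_vs_rr and friends do not count).
# Hypothetical helper for illustration.
ip_vs_loaded() {
    awk '$1 == "ip_vs" { found = 1 } END { exit !found }'
}

# Typical use on a director (modprobe needs root):
#   lsmod | ip_vs_loaded || modprobe ip_vs
```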

2. Install keepalived

yum install -y keepalived

chkconfig keepalived on

Note: on CentOS 7 systems, enable start at boot with systemctl enable keepalived

IV. Enable IP forwarding

In LVS/NAT mode, IP forwarding must be enabled on the two front-end directors, 192.168.211.76 and 192.168.211.77.

vi /etc/sysctl.conf

Change net.ipv4.ip_forward = 0 to net.ipv4.ip_forward = 1

Save and exit, then run sysctl -p to apply the change.
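The manual edit above can also be scripted. The sketch below is one possible approach, assuming GNU sed (as shipped with CentOS); the function name enable_ip_forward is hypothetical. It rewrites an existing net.ipv4.ip_forward line, or appends one if the file has none, so it is safe to run more than once.

```shell
#!/bin/sh
# enable_ip_forward FILE
# Makes sure FILE contains "net.ipv4.ip_forward = 1", rewriting an existing
# setting or appending one if none is present. Hypothetical helper.
enable_ip_forward() {
    conf="$1"
    if grep -q '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=' "$conf"; then
        # Rewrite the existing line in place (GNU sed -i).
        sed -i 's/^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=.*/net.ipv4.ip_forward = 1/' "$conf"
    else
        printf 'net.ipv4.ip_forward = 1\n' >> "$conf"
    fi
}

# On a director, as root:
#   enable_ip_forward /etc/sysctl.conf && sysctl -p
```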

V. keepalived configuration

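First, the keepalived configuration file on 211.76.

According to the topology, 211.76 is the master for vip1 (192.168.214.70) and the backup for vip2 (192.168.214.71).

For a detailed explanation of each parameter, see the previous article, "LVS/DR + keepalived load balancing implementation".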

[root@localhost ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server mail.test.com

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_sync_group VG1 { # LVS vip1 group

group {

VI_1

VI_GATEWAY1

}

}

vrrp_sync_group VG2 { # LVS vip2 group

group {

VI_2

VI_GATEWAY2

}

}

vrrp_instance VI_GATEWAY1 { # configuration for LVS vip1

state MASTER

interface eth1

lvs_sync_daemon_interface eth1

virtual_router_id 51

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.211.254

}

}

vrrp_instance VI_1 {

state MASTER

interface eth0

lvs_sync_daemon_interface eth0

virtual_router_id 52

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.214.70

}

}

vrrp_instance VI_GATEWAY2 { # configuration for LVS vip2

state BACKUP

interface eth1

lvs_sync_daemon_interface eth1

virtual_router_id 53

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.211.253

}

}

vrrp_instance VI_2 {

state BACKUP

interface eth0

lvs_sync_daemon_interface eth0

virtual_router_id 54

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.214.71

}

}

virtual_server 192.168.214.70 80 { #vip1

delay_loop 6

lb_algo rr

lb_kind NAT

#nat_mask 255.255.255.0

persistence_timeout 10

protocol TCP

real_server 192.168.211.79 80 { # back-end real server for vip1

weight 100

TCP_CHECK {

connect_timeout 3

connect_port 80

nb_get_retry 3

delay_before_retry 3

}

}

}

virtual_server 192.168.214.71 8080 { #vip2

delay_loop 6

lb_algo rr

lb_kind NAT

#nat_mask 255.255.255.0

persistence_timeout 10

protocol TCP

real_server 192.168.211.83 8080 { # back-end real server for vip2

weight 100

TCP_CHECK {

connect_timeout 3

connect_port 8080

nb_get_retry 3

delay_before_retry 3

}

}

}

Next, the keepalived configuration file on 211.77.

According to the topology, 211.77 is the backup for vip1 (192.168.214.70) and the master for vip2 (192.168.214.71).

[root@localhost ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

#smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_sync_group VG1 {

group {

VI_1

VI_GATEWAY1

}

}

vrrp_sync_group VG2 {

group {

VI_2

VI_GATEWAY2

}

}

vrrp_instance VI_GATEWAY1 {

state BACKUP

interface eth1

lvs_sync_daemon_interface eth1

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.211.254

}

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

lvs_sync_daemon_interface eth0

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.214.70

}

}

vrrp_instance VI_GATEWAY2 {

state MASTER

interface eth1

lvs_sync_daemon_interface eth1

virtual_router_id 53

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.211.253

}

}

vrrp_instance VI_2 {

state MASTER

interface eth0

lvs_sync_daemon_interface eth0

virtual_router_id 54

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.214.71

}

}

virtual_server 192.168.214.70 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

#nat_mask 255.255.255.0

persistence_timeout 10

protocol TCP

real_server 192.168.211.79 80 {

weight 100

TCP_CHECK {

connect_timeout 3

connect_port 80

nb_get_retry 3

delay_before_retry 3

}

}

}

virtual_server 192.168.214.71 8080 {

delay_loop 6

lb_algo rr

lb_kind NAT

#nat_mask 255.255.255.0

persistence_timeout 10

protocol TCP

real_server 192.168.211.83 8080 {

weight 100

TCP_CHECK {

connect_timeout 3

connect_port 8080

nb_get_retry 3

delay_before_retry 3

}

}

}
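The two files differ only in state and priority; for each instance the virtual_router_id must be identical on both directors. Since a missing space in a keyword is easy to overlook in files this long, a quick summary of each instance can help when comparing the two hosts. The sketch below is one way to do that, assuming the layout used in this article, where priority is listed after state and virtual_router_id. The function name list_vrrp_instances is hypothetical.

```shell
#!/bin/sh
# list_vrrp_instances FILE
# Prints "name state virtual_router_id priority" for each vrrp_instance in a
# keepalived.conf. Assumes priority is listed after state and
# virtual_router_id. A missing or mistyped keyword shows up as "?".
list_vrrp_instances() {
    awk '
        $1 == "vrrp_instance"     { name = $2; state = "?"; vrid = "?" }
        $1 == "state"             { state = $2 }
        $1 == "virtual_router_id" { vrid = $2 }
        $1 == "priority"          { print name, state, vrid, $2 }
    ' "$1"
}

# Usage: list_vrrp_instances /etc/keepalived/keepalived.conf
```

Running it on both directors and comparing the output side by side should show matching router IDs with opposite states and priorities.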

VI. Back-end real server configuration

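The back-end web server 192.168.211.79 must use the internal address of vip1, 192.168.211.254, as its default gateway, and the web server 192.168.211.83 must use the internal address of vip2, 192.168.211.253.

Note: in a production environment, changing the default gateway to the internal VIP address may cut off your connection to the server, so add the necessary static routes beforehand.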
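As a dry-run illustration of the mapping above, the sketch below prints (but does not execute) the iproute2 command that would switch the default route on each real server. The function names gateway_for and route_command are hypothetical, and a route set this way does not survive a reboot; on CentOS 6 the persistent setting would normally go in the GATEWAY= entry of the network configuration.

```shell
#!/bin/sh
# gateway_for REAL_SERVER_IP
# Prints the internal VIP that the given real server should use as its
# default gateway, per the address table in this article.
gateway_for() {
    case "$1" in
        192.168.211.79) echo 192.168.211.254 ;;  # Realserver A -> vip1 gateway
        192.168.211.83) echo 192.168.211.253 ;;  # Realserver B -> vip2 gateway
        *) echo "unknown real server: $1" >&2; return 1 ;;
    esac
}

# route_command REAL_SERVER_IP
# Prints (does not run) the command that would switch the default route.
route_command() {
    gw=$(gateway_for "$1") || return 1
    echo "ip route replace default via $gw"
}

route_command 192.168.211.79   # prints: ip route replace default via 192.168.211.254
```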

VII. Start the keepalived service and check its status

Start the keepalived service on both 211.76 and 211.77.

Check the status on host 211.76.

ip addr shows that the vip1 addresses are bound to eth0 and eth1:

[root@localhost ~]# ip addr

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0:

link/ether 52:54:00:55:b2:d4 brd ff:ff:ff:ff:ff:ff

inet 192.168.214.76/24 brd 192.168.214.255 scope global eth0

inet 192.168.214.70/32 scope global eth0

inet6 fe80::5054:ff:fe55:b2d4/64 scope link

valid_lft forever preferred_lft forever

3: eth1:

link/ether 52:54:00:85:11:95 brd ff:ff:ff:ff:ff:ff

inet 192.168.211.76/24 brd 192.168.211.255 scope global eth1

inet 192.168.211.254/32 scope global eth1

inet6 fe80::5054:ff:fe85:1195/64 scope link

valid_lft forever preferred_lft forever

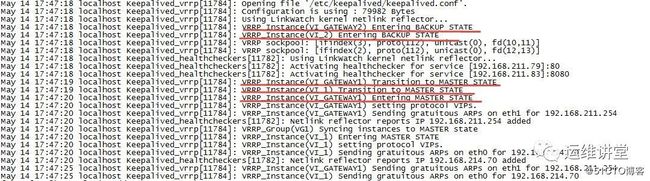

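The logs on 211.76 show that it has entered the master state for the vip1 group and the backup state for the vip2 group.

Check the status on host 211.77.

ip addr shows that the vip2 addresses are bound to eth0 and eth1: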

[root@localhost ~]# ip addr

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0:

link/ether 52:54:00:1b:a2:11 brd ff:ff:ff:ff:ff:ff

inet 192.168.214.77/24 brd 192.168.214.255 scope global eth0

inet 192.168.214.71/32 scope global eth0

inet6 fe80::5054:ff:fe1b:a211/64 scope link

valid_lft forever preferred_lft forever

3: eth1:

link/ether 52:54:00:64:47:7d brd ff:ff:ff:ff:ff:ff

inet 192.168.211.77/24 brd 192.168.211.255 scope global eth1

inet 192.168.211.253/32 scope global eth1

inet6 fe80::5054:ff:fe64:477d/64 scope link

valid_lft forever preferred_lft forever

The logs on 211.77 show that it has entered the backup state for the vip1 group and the master state for the vip2 group.
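Reading ip addr output by eye works for two hosts, but the same check can be scripted. The following is a minimal sketch, assuming ip addr output on stdin; the helper name has_vip is hypothetical. It compares the full address, so 192.168.214.7 does not match 192.168.214.70.

```shell
#!/bin/sh
# has_vip ADDRESS
# Reads `ip addr` output on stdin and succeeds if ADDRESS is bound to any
# interface. Hypothetical helper for illustration.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}

# On a director:
#   ip addr | has_vip 192.168.214.70 && echo "this host holds vip1"
```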

Use ipvsadm -L -n to view the LVS connection information.

On 211.76:

[root@localhost ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.214.70:80 rr persistent 10

-> 192.168.211.79:80 Masq 100 3 5

TCP 192.168.214.71:8080 rr persistent 10

-> 192.168.211.83:8080 Masq 100 0 0

On 211.77:

[root@localhost ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.214.70:80 rr persistent 10

-> 192.168.211.79:80 Masq 100 0 0

TCP 192.168.214.71:8080 rr persistent 10

-> 192.168.211.83:8080 Masq 100 3 4
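In both listings, the active connections appear only on the director that currently holds the corresponding VIP. To pull just that column out of the table, something like the sketch below could be used. It assumes the column layout shown above (ActiveConn is the fifth field of each real-server line), and the helper name active_conns is hypothetical.

```shell
#!/bin/sh
# active_conns: reads `ipvsadm -L -n` output on stdin and prints
# "virtual_service real_server active_connections" for each real server.
# Hypothetical helper for illustration.
active_conns() {
    awk '
        $1 == "TCP" || $1 == "UDP"               { vs = $2 }
        $1 == "->" && $2 != "RemoteAddress:Port" { print vs, $2, $5 }
    '
}

# On a director, as root:
#   ipvsadm -L -n | active_conns
```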

VIII. keepalived testing

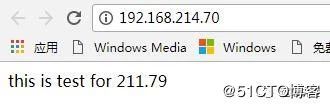

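Accessing vip1 at 192.168.214.70 returns the page served by the back-end web server 192.168.211.79.

Accessing vip2 at 192.168.214.71 returns the page served by the back-end web server 192.168.211.83.

With normal access confirmed, we simulate a failure in the LVS cluster: shut down 211.76, the front-end director that is master for vip1, and see whether 211.77 takes over the vip1 addresses.

On 211.77, ip addr shows that both vip1 addresses have floated over: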

[root@localhost ~]# ip addr

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0:

link/ether 52:54:00:1b:a2:11 brd ff:ff:ff:ff:ff:ff

inet 192.168.214.77/24 brd 192.168.214.255 scope global eth0

inet 192.168.214.71/32 scope global eth0

inet 192.168.214.70/32 scope global eth0

inet6 fe80::5054:ff:fe1b:a211/64 scope link

valid_lft forever preferred_lft forever

3: eth1:

link/ether 52:54:00:64:47:7d brd ff:ff:ff:ff:ff:ff

inet 192.168.211.77/24 brd 192.168.211.255 scope global eth1

inet 192.168.211.253/32 scope global eth1

inet 192.168.211.254/32 scope global eth1

inet6 fe80::5054:ff:fe64:477d/64 scope link

valid_lft forever preferred_lft forever

The logs on 211.77 confirm that the vip1 addresses have floated over and that it is now in the master state:

May 15 14:38:26 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_1) Transition to MASTER STATE

May 15 14:38:26 localhost Keepalived_vrrp[11898]: VRRP_Group(VG1) Syncing instances to MASTER state

May 15 14:38:26 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_GATEWAY1) Transition to MASTER STATE

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_GATEWAY1) Entering MASTER STATE

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_GATEWAY1) setting protocol VIPs.

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_GATEWAY1) Sending gratuitous ARPs on eth1 for 192.168.211.254

May 15 14:38:27 localhost Keepalived_healthcheckers[11897]: Netlink reflector reports IP 192.168.211.254 added

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_1) Entering MASTER STATE

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_1) setting protocol VIPs.

May 15 14:38:27 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.214.70

May 15 14:38:27 localhost Keepalived_healthcheckers[11897]: Netlink reflector reports IP 192.168.214.70 added

May 15 14:38:32 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_GATEWAY1) Sending gratuitous ARPs on eth1 for 192.168.211.254

May 15 14:38:32 localhost Keepalived_vrrp[11898]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.214.70
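When a failover produces many log lines, it can help to reduce them to the instances that actually became master. The sketch below does that by matching the "Entering MASTER STATE" message format seen in the log above; other keepalived versions may word the message differently. The helper name new_masters is hypothetical, and /var/log/messages is assumed to be where syslog writes keepalived output on this system.

```shell
#!/bin/sh
# new_masters: reads keepalived syslog lines on stdin and prints the name of
# every VRRP instance that logged "Entering MASTER STATE", in log order.
# Hypothetical helper for illustration.
new_masters() {
    sed -n 's/.*VRRP_Instance(\([^)]*\)) Entering MASTER STATE.*/\1/p'
}

# On a director:
#   new_masters < /var/log/messages
```

Because VI_1 and VI_GATEWAY1 belong to the same sync group, both names should appear together after a vip1 failover.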
