WebRTC video capture

See vcm_capturer.h and vcm_capturer.cc in the webrtc\src\webrtc\test directory.

If all you need is video capture, you can use this example as a reference or use it directly.

Another reference is webrtc58\src\webrtc\modules\video_capture\test\video_capture_unittest.cc.

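In general, if you only need the raw video data, DirectShow is enough on Windows.

On mobile, the platform SDK APIs are enough.

WebRTC only wraps these platform APIs behind a unified upper-layer interface.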

Usage example:

vcm_capturer.h and vcm_capturer.cc are test code and are not part of the WebRTC library, so you have to add them to your own project yourself.

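```cpp
// Assumes an MFC project (afxwin.h, which pulls in windows.h, is already
// included) and the WebRTC M58 source tree on the include path.
#include <cstring>
#include <vector>
#include "webrtc/test/vcm_capturer.h"

class CWebRtcVideoDevice : public rtc::VideoSinkInterface<webrtc::VideoFrame>
{
public:
    // iVideoDevice is not used: the three-argument VcmCapturer::Create()
    // called here takes no device index.
    CWebRtcVideoDevice(int iVideoDevice, size_t width, size_t height, size_t target_fps)
    {
        m_pVideoDevice = webrtc::test::VcmCapturer::Create(width, height, target_fps);
        if (m_pVideoDevice == nullptr)
            return;  // no camera found, or the capture module failed to start
        m_pVideoDevice->AddOrUpdateSink(this, rtc::VideoSinkWants());
        m_pVideoDevice->Start();
    }

    ~CWebRtcVideoDevice()
    {
        if (m_pVideoDevice == nullptr)
            return;
        m_pVideoDevice->Stop();            // stop delivering frames first
        m_pVideoDevice->RemoveSink(this);
        delete m_pVideoDevice;
        m_pVideoDevice = nullptr;
    }

    // Called on the capture thread for every captured frame (YUV).
    void OnFrame(const webrtc::VideoFrame& frame) override
    {
        const int width = frame.width();
        const int height = frame.height();
        // Only the Y (luma) plane is shown, so the picture is grayscale.
        const uint8_t* pY = frame.video_frame_buffer()->DataY();
        const int strideY = frame.video_frame_buffer()->StrideY();

        // Rows of a 24-bit DIB must be padded to a multiple of 4 bytes.
        const int dibStride = (width * 3 + 3) & ~3;
        m_rgb.assign(static_cast<size_t>(dibStride) * height, 0);
        for (int row = 0; row < height; ++row)
        {
            const uint8_t* src = pY + row * strideY;   // honor the Y stride
            uint8_t* dst = &m_rgb[static_cast<size_t>(row) * dibStride];
            for (int col = 0; col < width; ++col)
            {
                dst[3 * col + 0] = src[col];  // B
                dst[3 * col + 1] = src[col];  // G
                dst[3 * col + 2] = src[col];  // R
            }
        }
        draw_imageBuf_to_wnd(m_rgb.data(), width, height, AfxGetApp()->m_pMainWnd->m_hWnd);
    }

private:
    // Draws a top-down 24-bit RGB buffer into a 200x200 area of the window.
    void draw_imageBuf_to_wnd(unsigned char* pbuf, int width, int height, HWND hWnd)
    {
        HDC hdc = ::GetDC(hWnd);
        BITMAPINFO bmi;
        memset(&bmi, 0, sizeof(bmi));
        bmi.bmiHeader.biSize = sizeof(BITMAPINFOHEADER);
        bmi.bmiHeader.biWidth = width;
        bmi.bmiHeader.biHeight = -height;   // negative height: rows are top-down
        bmi.bmiHeader.biPlanes = 1;
        bmi.bmiHeader.biBitCount = 24;
        bmi.bmiHeader.biCompression = BI_RGB;
        SetStretchBltMode(hdc, COLORONCOLOR);
        ::StretchDIBits(hdc,
                        0, 0, 200, 200,        // destination rectangle
                        0, 0, width, height,   // source rectangle
                        pbuf, &bmi, DIB_RGB_COLORS, SRCCOPY);
        ::ReleaseDC(hWnd, hdc);
    }

    webrtc::test::VcmCapturer* m_pVideoDevice;
    std::vector<uint8_t> m_rgb;  // conversion buffer, reused across frames
};
```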

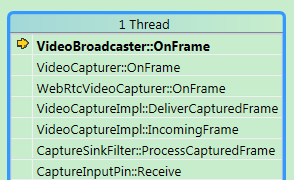
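You can pull the Y-to-RGB conversion in OnFrame out into a plain function and test it without a camera. The sketch below is a hypothetical helper. LumaToGrayDib24 and Dib24Stride are names made up for illustration and are not WebRTC or Win32 APIs. The sketch assumes an 8-bit Y plane whose row stride may be larger than the width. It produces the top-down, DWORD-aligned 24-bit buffer that StretchDIBits reads when biHeight is negative.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Bytes per row of a 24-bit DIB: GDI requires every row to be padded
// to a multiple of 4 bytes (DWORD alignment).
inline int Dib24Stride(int width) {
  return (width * 3 + 3) & ~3;
}

// Hypothetical helper: expands an 8-bit Y (luma) plane into a top-down
// 24-bit grayscale DIB buffer. stride_y is the distance in bytes between
// the starts of two Y rows and may exceed width because of padding.
std::vector<uint8_t> LumaToGrayDib24(const uint8_t* y_plane, int stride_y,
                                     int width, int height) {
  const int dib_stride = Dib24Stride(width);
  // Padding bytes at the end of each row stay zero.
  std::vector<uint8_t> dib(static_cast<size_t>(dib_stride) * height, 0);
  for (int row = 0; row < height; ++row) {
    const uint8_t* src = y_plane + static_cast<size_t>(row) * stride_y;
    uint8_t* dst = dib.data() + static_cast<size_t>(row) * dib_stride;
    for (int col = 0; col < width; ++col) {
      // Equal B, G and R values give a gray pixel.
      dst[3 * col + 0] = src[col];
      dst[3 * col + 1] = src[col];
      dst[3 * col + 2] = src[col];
    }
  }
  return dib;
}
```

Walking rows with stride_y instead of treating the plane as width * height contiguous bytes avoids a skewed image when the capture buffer pads its rows.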

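Here are the class relationships:

```cpp
class VcmCapturer
    : public VideoCapturer,
      public rtc::VideoSinkInterface<VideoFrame>

class VideoCapturer : public rtc::VideoSourceInterface<VideoFrame>
```

In other words, VcmCapturer implements VideoSourceInterface through VideoCapturer. It also implements VideoSinkInterface, because it receives frames from the underlying capture module.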
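The following self-contained sketch makes this dual role concrete, under some simplifying assumptions. Frame, FrameSink and FrameSource are hypothetical stand-ins for webrtc::VideoFrame, rtc::VideoSinkInterface and rtc::VideoSourceInterface, and VideoSinkWants is omitted. The sketch forwards to a single sink. It is not the real VcmCapturer. It only illustrates a class that is a sink toward the capture module and a source toward the application.

```cpp
#include <cstdint>
#include <mutex>

// Hypothetical stand-in for webrtc::VideoFrame.
struct Frame {
  int width = 0;
  int height = 0;
  int64_t timestamp_us = 0;
};

// Simplified shapes of rtc::VideoSinkInterface / rtc::VideoSourceInterface.
class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

class FrameSource {
 public:
  virtual ~FrameSource() = default;
  virtual void AddOrUpdateSink(FrameSink* sink) = 0;
  virtual void RemoveSink(FrameSink* sink) = 0;
};

// Sink for the (platform) capture module, source for the application.
class ForwardingCapturer : public FrameSource, public FrameSink {
 public:
  void Start() { std::lock_guard<std::mutex> lock(mu_); started_ = true; }
  void Stop() { std::lock_guard<std::mutex> lock(mu_); started_ = false; }

  void AddOrUpdateSink(FrameSink* sink) override {
    std::lock_guard<std::mutex> lock(mu_);
    sink_ = sink;
  }
  void RemoveSink(FrameSink* sink) override {
    std::lock_guard<std::mutex> lock(mu_);
    if (sink_ == sink) sink_ = nullptr;
  }

  // Called by the capture module for every captured frame; forwarded
  // to the application sink only while capture is started.
  void OnFrame(const Frame& frame) override {
    std::lock_guard<std::mutex> lock(mu_);
    if (started_ && sink_ != nullptr) sink_->OnFrame(frame);
  }

 private:
  std::mutex mu_;
  bool started_ = false;
  FrameSink* sink_ = nullptr;
};

// Application-side sink that just counts frames.
class CountingSink : public FrameSink {
 public:
  void OnFrame(const Frame&) override { ++count; }
  int count = 0;
};
```

In this sketch, a sink registered before Start() receives nothing until capture is started. This mirrors the AddOrUpdateSink-then-Start order in the example class.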

```cpp
void VideoSendStream::SetSource(
    rtc::VideoSourceInterface<webrtc::VideoFrame>* source,
    const DegradationPreference& degradation_preference)
```

The first parameter is a video source, so a VcmCapturer can be passed in directly.
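Roughly, a consumer with a SetSource() method uses the source like this: it unsubscribes from the old source and subscribes to the new one as a sink. The types here (Frame, FrameSink, FrameSource, TestSource, Consumer) are hypothetical simplifications. The real send stream also passes VideoSinkWants and the degradation preference, and both are left out.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

struct Frame { int64_t timestamp_us = 0; };

class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

class FrameSource {
 public:
  virtual ~FrameSource() = default;
  virtual void AddOrUpdateSink(FrameSink* sink) = 0;
  virtual void RemoveSink(FrameSink* sink) = 0;
};

// Minimal source that keeps a list of sinks and pushes frames to them.
class TestSource : public FrameSource {
 public:
  void AddOrUpdateSink(FrameSink* sink) override {
    if (std::find(sinks_.begin(), sinks_.end(), sink) == sinks_.end())
      sinks_.push_back(sink);
  }
  void RemoveSink(FrameSink* sink) override {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink), sinks_.end());
  }
  void Push(const Frame& frame) {
    for (FrameSink* s : sinks_) s->OnFrame(frame);
  }

 private:
  std::vector<FrameSink*> sinks_;
};

// Hypothetical consumer standing in for the send side: SetSource()
// detaches from the previous source and subscribes to the new one.
class Consumer : public FrameSink {
 public:
  void SetSource(FrameSource* source) {
    if (source_ != nullptr) source_->RemoveSink(this);
    source_ = source;
    if (source_ != nullptr) source_->AddOrUpdateSink(this);
  }
  void OnFrame(const Frame& frame) override {
    last_timestamp_us = frame.timestamp_us;
    ++frames;
  }
  int frames = 0;
  int64_t last_timestamp_us = -1;

 private:
  FrameSource* source_ = nullptr;
};
```

The source never has to know who consumes it. Any object that implements the source interface, such as a capturer, can be swapped in.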

Inside WebRTC, video capture is implemented separately for each platform. On Windows, it is implemented with DirectShow.

```cpp
void VideoBroadcaster::OnFrame(const webrtc::VideoFrame& frame) {
  rtc::CritScope cs(&sinks_and_wants_lock_);
  for (auto& sink_pair : sink_pairs()) {
    if (sink_pair.wants.rotation_applied &&
        frame.rotation() != webrtc::kVideoRotation_0) {
      // Calls to OnFrame are not synchronized with changes to the sink wants.
      // When rotation_applied is set to true, one or a few frames may get here
      // with rotation still pending. Protect sinks that don't expect any
      // pending rotation.
      LOG(LS_VERBOSE) << "Discarding frame with unexpected rotation.";
      continue;
    }
    if (sink_pair.wants.black_frames) {
      sink_pair.sink->OnFrame(webrtc::VideoFrame(
          GetBlackFrameBuffer(frame.width(), frame.height()), frame.rotation(),
          frame.timestamp_us()));
    } else {
      sink_pair.sink->OnFrame(frame);
    }
  }
}
```

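The loop delivers each frame to every registered observer (sink). Frames with pending rotation are skipped for sinks that asked for rotation to be applied. A black frame of the same size is sent to sinks that requested black frames.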
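You can reproduce the filtering rules of VideoBroadcaster::OnFrame in a small standalone model. In this sketch, Rotation, Frame, SinkWants, Broadcaster and RecordingSink are simplified, hypothetical types. The black flag on Frame stands in for the real GetBlackFrameBuffer() call, and a std::mutex replaces rtc::CritScope.

```cpp
#include <cstdint>
#include <mutex>
#include <utility>
#include <vector>

enum class Rotation { k0, k90, k180, k270 };

struct Frame {
  int width = 0;
  int height = 0;
  Rotation rotation = Rotation::k0;
  bool black = false;  // true when the pixels were replaced with black
};

// Simplified rtc::VideoSinkWants: only the two flags checked below.
struct SinkWants {
  bool rotation_applied = false;
  bool black_frames = false;
};

class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

class Broadcaster {
 public:
  // Registers a sink, or updates its wants if it is already registered.
  void AddOrUpdateSink(FrameSink* sink, const SinkWants& wants) {
    std::lock_guard<std::mutex> lock(mu_);
    for (auto& p : sinks_) {
      if (p.first == sink) { p.second = wants; return; }
    }
    sinks_.emplace_back(sink, wants);
  }

  void OnFrame(const Frame& frame) {
    std::lock_guard<std::mutex> lock(mu_);
    for (auto& p : sinks_) {
      // The sink expects already-rotated frames: drop this one.
      if (p.second.rotation_applied && frame.rotation != Rotation::k0) continue;
      if (p.second.black_frames) {
        Frame black = frame;  // same size and rotation
        black.black = true;   // stands in for GetBlackFrameBuffer()
        p.first->OnFrame(black);
      } else {
        p.first->OnFrame(frame);
      }
    }
  }

 private:
  std::mutex mu_;
  std::vector<std::pair<FrameSink*, SinkWants>> sinks_;
};

// Test sink that records every frame it receives.
class RecordingSink : public FrameSink {
 public:
  void OnFrame(const Frame& frame) override { received.push_back(frame); }
  std::vector<Frame> received;
};
```

Each sink states its requirements once through its wants. The broadcaster then decides per frame whether to pass it through, replace it with black, or drop it, so a single capture source can feed sinks with different needs.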