Kubernetes Series, Part 13: Multi-NIC Pods with multus-cni, Composing Pod Networks Freely with CRDs

1. Introduction

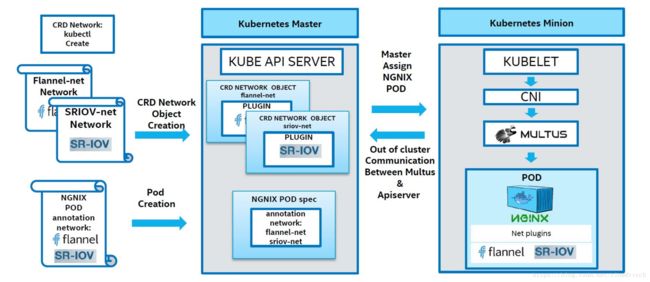

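In the article "Kubernetes POD multi-NIC solution multus-cni, Part 1: Overview", we saw that configuring multus gives a pod multiple network interfaces. According to the multus project page, Kubernetes CRDs (custom resource definitions) can now be used to define network objects that pods select from. In a pod's definition you specify which of the multus-managed networks, one or several, it should attach to, so each pod gets exactly the combination of networks its role requires.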

Reposted from https://blog.csdn.net/cloudvtech

2. Test Environment Setup

2.1 Kubernetes (1 master, 2 workers)

```
[root@k8s-master CRD]# kubectl get nodes
NAME         STATUS    ROLES     AGE       VERSION
k8s-master   Ready     master    23d       v1.10.0
k8s-node1    Ready     <none>    23d       v1.10.0
k8s-node2    Ready     <none>    23d       v1.10.0
[root@k8s-master CRD]# kubectl version
Client Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.0", GitCommit:"fc32d2f3698e36b93322a3465f63a14e9f0eaead", GitTreeState:"clean", BuildDate:"2018-03-26T16:55:54Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.0", GitCommit:"fc32d2f3698e36b93322a3465f63a14e9f0eaead", GitTreeState:"clean", BuildDate:"2018-03-26T16:44:10Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
```

2.2 Run the flannel CNI plugin

kube-flannel.yml

```yaml
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
```
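With `--kube-subnet-mgr`, flanneld takes each node's pod subnet from the Kubernetes API, while net-conf.json sets the cluster-wide pod network to 10.244.0.0/16. The sketch below uses Python's standard `ipaddress` module to show how that range divides into per-node subnets. The /24-per-node prefix is an assumption here, chosen to match the /24 addresses seen inside the pods in section 4; the function names are illustrative only.

```python
import ipaddress

# Cluster-wide pod network from net-conf.json ("Network": "10.244.0.0/16").
CLUSTER_NET = ipaddress.ip_network("10.244.0.0/16")

# Assumption: each node receives a /24 slice of the cluster network.
NODE_PREFIX = 24


def node_subnets(cluster=CLUSTER_NET, prefix=NODE_PREFIX):
    """Return every per-node subnet that fits inside the cluster network."""
    return list(cluster.subnets(new_prefix=prefix))


def subnet_of(pod_ip, cluster=CLUSTER_NET, prefix=NODE_PREFIX):
    """Return the per-node subnet containing pod_ip, or None if outside."""
    addr = ipaddress.ip_address(pod_ip)
    if addr not in cluster:
        return None
    # strict=False zeroes the host bits, yielding the enclosing /24.
    return ipaddress.ip_network(f"{addr}/{prefix}", strict=False)


print(len(node_subnets()))      # 256 possible node subnets
print(subnet_of("10.244.1.3"))  # 10.244.1.0/24
```

An address such as 10.244.1.3 therefore identifies the node subnet it was allocated from, which is how the flannel addresses in section 4 can be traced back to a node.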

```
kubectl apply -f kube-flannel.yml
```

```
[root@k8s-master CRD]# kubectl get pod -n kube-system | grep flannel
kube-flannel-ds-gmkg8   1/1       Running   1          1h
kube-flannel-ds-kjnbw   1/1       Running   3          1h
kube-flannel-ds-rb997   1/1       Running   3          1h
```

2.3 Install multus

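See the article "Kubernetes POD multi-NIC solution multus-cni, Part 1: Overview".

2.4 Configure multus

First, remove the existing CNI configuration:

```
rm -f /etc/cni/net.d/*
```

Then place the following multus configuration in /etc/cni/net.d/. The `kubeconfig` entry gives multus the kubelet's credentials, which it uses to read the Network objects from the API server:

```json
{
  "name": "multus-cni-network",
  "type": "multus",
  "kubeconfig": "/etc/kubernetes/kubelet.conf"
}
```

Restart kubelet:

```
systemctl restart kubelet
```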
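The cleanup matters because the kubelet's CNI support scans /etc/cni/net.d for files ending in .conf, .conflist or .json, sorts them by name and uses the first one it can load, so a leftover 10-flannel.conflist would shadow the multus configuration. The Python model below is a simplification (it does not parse file contents), and the multus filename in it is hypothetical, since the article does not name that file.

```python
import os

# Extensions the kubelet's CNI code considers when scanning the config dir.
CNI_EXTENSIONS = (".conf", ".conflist", ".json")


def pick_default_cni_config(filenames):
    """Return the config file the kubelet would try first, or None.

    Simplified model: filter by extension, sort by name, take the first.
    The real kubelet also skips files it fails to load.
    """
    candidates = sorted(
        name for name in filenames
        if os.path.splitext(name)[1] in CNI_EXTENSIONS
    )
    return candidates[0] if candidates else None


# Hypothetical directory contents before and after the cleanup.
before = ["10-flannel.conflist", "multus-cni.conf"]
after = ["multus-cni.conf"]
print(pick_default_cni_config(before))  # 10-flannel.conflist: flannel wins
print(pick_default_cni_config(after))   # multus-cni.conf
```

Because "1" sorts before "m", any numbered flannel file left behind would be picked ahead of multus, which is why the directory is emptied first.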

3. Configure the multus CRD

3.1 Grant permissions

clusterrole.yml

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: multus-crd-overpowered
rules:
- apiGroups:
  - '*'
  resources:
  - '*'
  verbs:
  - '*'
- nonResourceURLs:
  - '*'
  verbs:
  - '*'
```

As its name suggests, this role allows every verb on every resource. Apply it, then bind it to each node's kubelet identity (`system:node:<node-name>`), the user multus authenticates as through /etc/kubernetes/kubelet.conf:

```
kubectl apply -f clusterrole.yml
kubectl create clusterrolebinding multus-node-k8s-node1 --clusterrole=multus-crd-overpowered --user=system:node:k8s-node1
kubectl create clusterrolebinding multus-node-k8s-node2 --clusterrole=multus-crd-overpowered --user=system:node:k8s-node2
kubectl create clusterrolebinding multus-node-k8s-master --clusterrole=multus-crd-overpowered --user=system:node:k8s-master
```

3.2 Create the network CRD

crdnetwork.yaml

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  # name must match the spec fields below, and be in the form: <plural>.<group>
  name: networks.kubernetes.com
spec:
  # group name to use for REST API: /apis/<group>/<version>
  group: kubernetes.com
  # version name to use for REST API: /apis/<group>/<version>
  version: v1
  # either Namespaced or Cluster
  scope: Namespaced
  names:
    # plural name to be used in the URL: /apis/<group>/<version>/<plural>
    plural: networks
    # singular name to be used as an alias on the CLI and for display
    singular: network
    # kind is normally the CamelCased singular type. Your resource manifests use this.
    kind: Network
    # shortNames allow shorter string to match your resource on the CLI
    shortNames:
    - net
```

```
kubectl apply -f crdnetwork.yaml
```

3.3 Create the network resource objects

flannel network object, flannel-network.yaml. In each Network object, `plugin` names the CNI plugin to invoke and `args` carries that plugin's configuration as a JSON string:

```yaml
apiVersion: "kubernetes.com/v1"
kind: Network
metadata:
  name: flannel-networkobj
plugin: flannel
args: '[
  {
    "delegate": {
      "isDefaultGateway": true
    }
  }
]'
```

macvlan network object, macvlan-network.yaml:

```yaml
apiVersion: "kubernetes.com/v1"
kind: Network
metadata:
  name: macvlan-networkobj
plugin: macvlan
args: '[
  {
    "master": "ens33",
    "mode": "vepa",
    "ipam": {
      "type": "host-local",
      "subnet": "192.168.166.0/24",
      "rangeStart": "192.168.166.21",
      "rangeEnd": "192.168.166.29",
      "routes": [
        { "dst": "0.0.0.0/0" }
      ],
      "gateway": "192.168.166.2"
    }
  }
]'
```

Deploy the two network objects:

```
kubectl apply -f flannel-network.yaml
kubectl apply -f macvlan-network.yaml
```

Check the results:

```
[root@k8s-master CRD]# kubectl get CustomResourceDefinition
NAME                      AGE
networks.kubernetes.com   2h
[root@k8s-master CRD]# kubectl get networks
NAME                 AGE
flannel-networkobj   1h
macvlan-networkobj   58m
[root@k8s-master CRD]# kubectl describe networks
Name:         flannel-networkobj
Namespace:    default
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"kubernetes.com/v1","args":"[ { \"delegate\": { \"isDefaultGateway\": true } } ]","kind":"Network","metadata":{"annotations":{},"name":"f...
API Version:  kubernetes.com/v1
Args:         [ { "delegate": { "isDefaultGateway": true } } ]
Kind:         Network
Metadata:
  Cluster Name:
  Creation Timestamp:  2018-05-08T01:32:50Z
  Generation:          1
  Resource Version:    314203
  Self Link:           /apis/kubernetes.com/v1/namespaces/default/networks/flannel-networkobj
  UID:                 b85192fa-525f-11e8-a891-000c29d3e746
Plugin:                flannel
Events:                <none>

Name:         macvlan-networkobj
Namespace:    default
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"kubernetes.com/v1","args":"[ { \"master\": \"ens33\", \"mode\": \"vepa\", \"ipam\": { \"type\": \"host-local\", \"subnet\": \"192.168.16...
API Version:  kubernetes.com/v1
Args:         [ { "master": "ens33", "mode": "vepa", "ipam": { "type": "host-local", "subnet": "192.168.166.0/24", "rangeStart": "192.168.166.21", "rangeEnd": "192.168.166.29", "routes": [ { "dst": "0.0.0.0/0" } ], "gateway": "192.168.166.2" } } ]
Kind:         Network
Metadata:
  Cluster Name:
  Creation Timestamp:  2018-05-08T02:18:54Z
  Generation:          1
  Resource Version:    318342
  Self Link:           /apis/kubernetes.com/v1/namespaces/default/networks/macvlan-networkobj
  UID:                 27f24729-5266-11e8-9ff5-000c29d3e746
Plugin:                macvlan
Events:                <none>
```
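The Self Link values above follow the REST layout described in the comments of crdnetwork.yaml: /apis/<group>/<version>, and, because the CRD is Namespaced, /namespaces/<namespace>/<plural>/<name>. A small Python helper that rebuilds those paths from the CRD fields; the function name is hypothetical and it is only a sketch of the URL pattern, not a Kubernetes client.

```python
def network_object_path(name, namespace="default",
                        group="kubernetes.com", version="v1",
                        plural="networks"):
    """Build the REST path of a namespaced custom resource.

    Defaults mirror crdnetwork.yaml; the helper itself is illustrative.
    """
    return f"/apis/{group}/{version}/namespaces/{namespace}/{plural}/{name}"


print(network_object_path("flannel-networkobj"))
# /apis/kubernetes.com/v1/namespaces/default/networks/flannel-networkobj
print(network_object_path("macvlan-networkobj"))
# /apis/kubernetes.com/v1/namespaces/default/networks/macvlan-networkobj
```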


4. Deploying Pods on Different Networks

4.1 A pod using flannel

nginx_pod_flannel.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-flannel
  annotations:
    networks: '[
      { "name": "flannel-networkobj" }
    ]'
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
```

Inside the pod:

```
[root@k8s-node1 net.d]# docker exec -it db bash
root@nginx-flannel:/# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
3: eth0@if12: mtu 1450 qdisc noqueue state UP
    link/ether 0a:58:0a:f4:01:03 brd ff:ff:ff:ff:ff:ff
    inet 10.244.1.3/24 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::744c:23ff:fe2c:11ce/64 scope link
       valid_lft forever preferred_lft forever
```

The pod has a single interface, eth0, on the flannel network. Access it from another node:

```
[root@k8s-node2 ~]# curl 10.244.1.3
Welcome to nginx!
......
......
```
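The `networks` annotation is a JSON array stored as a string, and each element names a Network object to attach. A Python sketch of just the parsing step; the helper name is hypothetical and this is not multus's own code.

```python
import json


def network_names(annotation_value):
    """Return the network object names listed in a pod's `networks` annotation.

    The annotation is a JSON array of objects, each with a "name" key,
    as in nginx_pod_flannel.yaml.
    """
    entries = json.loads(annotation_value)
    if not isinstance(entries, list):
        raise ValueError("networks annotation must be a JSON array")
    names = []
    for entry in entries:
        if not isinstance(entry, dict) or "name" not in entry:
            raise ValueError(f"entry without a name: {entry!r}")
        names.append(entry["name"])
    return names


# YAML folds the multi-line single-quoted value onto one line like this.
flannel_only = '[ { "name": "flannel-networkobj" } ]'
print(network_names(flannel_only))  # ['flannel-networkobj']
```

Keeping the value valid JSON matters: a missing comma or quote inside the single-quoted YAML string still produces valid YAML, so the mistake only surfaces when the string itself is parsed.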

4.2 A pod using macvlan

The pod for this test, nginx-macvlan, attaches to the macvlan network object defined in section 3.3, macvlan-network.yaml:

```yaml
apiVersion: "kubernetes.com/v1"
kind: Network
metadata:
  name: macvlan-networkobj
plugin: macvlan
args: '[
  {
    "master": "ens33",
    "mode": "vepa",
    "ipam": {
      "type": "host-local",
      "subnet": "192.168.166.0/24",
      "rangeStart": "192.168.166.21",
      "rangeEnd": "192.168.166.29",
      "routes": [
        { "dst": "0.0.0.0/0" }
      ],
      "gateway": "192.168.166.2"
    }
  }
]'
```

Inside the pod:

```
[root@k8s-node2 ~]# docker exec -it bf bash
root@nginx-macvlan:/# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: mtu 1500 qdisc noqueue state UNKNOWN
    link/ether 06:a1:c9:b2:ff:c4 brd ff:ff:ff:ff:ff:ff
    inet 192.168.166.23/24 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::4a1:c9ff:feb2:ffc4/64 scope link
       valid_lft forever preferred_lft forever
```

Access it from another node:

```
[root@k8s-node2 ~]# curl 192.168.166.23
Welcome to nginx!
......
......
```
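The macvlan object's `args` is also a JSON string, and its host-local IPAM block only hands out addresses from rangeStart to rangeEnd. The Python sketch below parses the same `args` value (as YAML folds it, matching the Args line in the describe output) and checks the pool. The function name and the sanity checks are illustrative assumptions, not something multus or host-local runs in this form.

```python
import ipaddress
import json

# The args string from macvlan-network.yaml, folded onto one line by YAML.
MACVLAN_ARGS = (
    '[ { "master": "ens33", "mode": "vepa", "ipam": { "type": "host-local", '
    '"subnet": "192.168.166.0/24", "rangeStart": "192.168.166.21", '
    '"rangeEnd": "192.168.166.29", "routes": [ { "dst": "0.0.0.0/0" } ], '
    '"gateway": "192.168.166.2" } } ]'
)


def ipam_pool(args):
    """Return (subnet, first, last, gateway) from the first entry's ipam block."""
    ipam = json.loads(args)[0]["ipam"]
    subnet = ipaddress.ip_network(ipam["subnet"])
    first = ipaddress.ip_address(ipam["rangeStart"])
    last = ipaddress.ip_address(ipam["rangeEnd"])
    gateway = ipaddress.ip_address(ipam["gateway"])
    # Illustrative sanity checks on the configuration.
    if not (first in subnet and last in subnet and first <= last):
        raise ValueError("rangeStart/rangeEnd must lie in the subnet, in order")
    if first <= gateway <= last:
        raise ValueError("gateway falls inside the pod address range")
    return subnet, first, last, gateway


subnet, first, last, gateway = ipam_pool(MACVLAN_ARGS)
pool_size = int(last) - int(first) + 1
print(pool_size)  # 9 addresses: .21 through .29
print(first <= ipaddress.ip_address("192.168.166.23") <= last)  # True
```

With only nine addresses in the pool, at most nine pods on this node can attach to the macvlan network at once; host-local keeps its allocations per node, so the same range configured on several nodes could hand out duplicate addresses.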

4.3 A pod using both flannel and macvlan

nginx_pod_both.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-both
  annotations:
    networks: '[
      { "name": "flannel-networkobj" },
      { "name": "macvlan-networkobj" }
    ]'
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
```

Inside the pod:

```
[root@k8s-node1 net.d]# docker exec -it 82 bash
root@nginx-both:/# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
3: eth0@if13: mtu 1450 qdisc noqueue state UP
    link/ether 0a:58:0a:f4:01:04 brd ff:ff:ff:ff:ff:ff
    inet 10.244.1.4/24 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::287c:8dff:fefd:4f88/64 scope link
       valid_lft forever preferred_lft forever
4: net0@if2: mtu 1500 qdisc noqueue state UNKNOWN
    link/ether de:59:61:70:cf:a9 brd ff:ff:ff:ff:ff:ff
    inet 192.168.166.21/24 scope global net0
       valid_lft forever preferred_lft forever
    inet6 fe80::dc59:61ff:fe70:cfa9/64 scope link
       valid_lft forever preferred_lft forever
```

The pod now has two interfaces: eth0 on the flannel network (10.244.1.4) and net0 on the macvlan network (192.168.166.21). Access both addresses from another node:

```
[root@k8s-node2 ~]# curl 10.244.1.4
Welcome to nginx!
......
......
[root@k8s-node2 ~]# curl 192.168.166.21
Welcome to nginx!
......
......
```
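The outputs in 4.1 to 4.3 suggest a simple naming pattern: the first network listed in the annotation becomes eth0, and each further one becomes net0, net1, and so on (in 4.3 flannel is eth0 and macvlan is net0; in 4.2 macvlan alone is eth0). The Python sketch below encodes that pattern as observed in this test only; it is an inference from these outputs, not multus's documented behavior, and the function name is hypothetical.

```python
def interface_names(network_names):
    """Map each network, in annotation order, to the interface name it gets.

    Pattern observed in this article: first -> eth0, then net0, net1, ...
    """
    mapping = {}
    for index, name in enumerate(network_names):
        mapping[name] = "eth0" if index == 0 else f"net{index - 1}"
    return mapping


print(interface_names(["flannel-networkobj", "macvlan-networkobj"]))
# {'flannel-networkobj': 'eth0', 'macvlan-networkobj': 'net0'}
print(interface_names(["macvlan-networkobj"]))
# {'macvlan-networkobj': 'eth0'}
```

Under this pattern the order of entries in the annotation decides which network owns eth0, so listing flannel first keeps the pod's primary interface on the cluster network.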