Kubernetes Series, Part 15: Using Kubernetes CRDs in multus-cni and prometheus-operator

1. Introduction

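The previous article, "Kubernetes Series, Part 14: An Overview of Kubernetes CRD (CustomResourceDefinition)", showed that CRDs let users of the Kubernetes platform define their own resources, and the way those resources behave, according to the needs of a particular application, so that a simple pattern is enough to extend the platform's capabilities. This article looks at two Kubernetes extensions, multus-cni and prometheus-operator, to see the different ways in which CRDs can be used.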

Reposted from https://blog.csdn.net/cloudvtech

2. The CRD implementation in multus-cni

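For how multus-cni defines its CRD resource and instances of that resource, see "Kubernetes Series, Part 13: multus-cni, a Multi-NIC Solution for PODs: Using CRDs to Give PODs Freely Combined Networks". This section focuses on the unusual "CRD controller" in multus-cni: in this design the CRD controller is bundled together with the implementation of the CNCF CNI plugin.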

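One feature of multus-cni is that users can choose the networks a POD needs according to the POD's type. These networks are defined in advance as Kubernetes CRD objects, and a POD references them through an annotation.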

The multus-cni code shows that the plugin reads the annotations of the POD being created and uses the network definitions found there to choose the list of plugins for CNI:

// getPodNetworkAnnotation fetches the POD from the API server and returns
// the value of its "networks" annotation.
func getPodNetworkAnnotation(client *kubernetes.Clientset, k8sArgs K8sArgs) (string, error) {
	var annot string
	var err error

	pod, err := client.Pods(string(k8sArgs.K8S_POD_NAMESPACE)).Get(fmt.Sprintf("%s", string(k8sArgs.K8S_POD_NAME)), metav1.GetOptions{})
	if err != nil {
		return annot, fmt.Errorf("getPodNetworkAnnotation: failed to query the pod %v in out of cluster comm", string(k8sArgs.K8S_POD_NAME))
	}

	return pod.Annotations["networks"], nil
}

// parsePodNetworkObject decodes the annotation value, a JSON array of
// objects, into a slice of generic maps.
func parsePodNetworkObject(podnetwork string) ([]map[string]interface{}, error) {
	var podNet []map[string]interface{}

	// Note: the message below says "network", but the key read above is "networks".
	if podnetwork == "" {
		return nil, fmt.Errorf("parsePodNetworkObject: pod annotation not having \"network\" as key, refer Multus README.md for the usage guide")
	}

	if err := json.Unmarshal([]byte(podnetwork), &podNet); err != nil {
		return nil, fmt.Errorf("parsePodNetworkObject: failed to load pod network err: %v | pod network: %v", err, podnetwork)
	}

	return podNet, nil
}
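To make the parsing step concrete, here is a minimal standalone sketch that uses only the Go standard library. It assumes the annotation value is a JSON array of objects that each carry a "name" field; the helper networkNames and the network names in main are hypothetical and are not part of multus-cni.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// networkNames decodes a "networks" annotation value (assumed to be a JSON
// array of objects) and returns the "name" field of each entry, in order.
func networkNames(annotation string) ([]string, error) {
	if annotation == "" {
		return nil, fmt.Errorf("annotation is empty")
	}
	var entries []map[string]interface{}
	if err := json.Unmarshal([]byte(annotation), &entries); err != nil {
		return nil, fmt.Errorf("invalid annotation %q: %v", annotation, err)
	}
	names := make([]string, 0, len(entries))
	for i, e := range entries {
		name, ok := e["name"].(string)
		if !ok {
			return nil, fmt.Errorf("entry %d has no string \"name\" field", i)
		}
		names = append(names, name)
	}
	return names, nil
}

func main() {
	// Hypothetical annotation value; the network names are made up.
	names, err := networkNames(`[{"name": "flannel-conf"}, {"name": "sriov-conf"}]`)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(names)
}
```

Decoding into generic maps, as the excerpt does, keeps the plugin tolerant of extra fields in each entry, at the cost of a type assertion whenever a field is read.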

3. The CRD implementation in prometheus-operator

3.1 prometheus CRD

prometheus.crd.yaml

---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  creationTimestamp: null
  name: prometheuses.monitoring.coreos.com
spec:
  group: monitoring.coreos.com
  names:
    kind: Prometheus
    plural: prometheuses
  scope: Namespaced
  validation:
    openAPIV3Schema:
      description: Prometheus defines a Prometheus deployment.
      properties:
        apiVersion:
        # (the rest of the schema is omitted here)

Including the schema and validation sections, the YAML file runs to more than 2000 lines, which shows how complex this CRD is. The same directory also contains two other CRDs.
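The naming in the excerpt follows the general CRD rule that metadata.name must be "<plural>.<group>". The small sketch below shows how the name, and the API path of the resulting namespaced collection, are derived from those fields. The helper names are hypothetical, and the version "v1" and namespace "monitoring" are assumptions taken from other parts of this article rather than from the truncated YAML.

```go
package main

import "fmt"

// crdName returns the metadata.name a CustomResourceDefinition must use:
// "<plural>.<group>".
func crdName(plural, group string) string {
	return plural + "." + group
}

// collectionPath returns the API path under which namespaced custom
// resources of this kind are served.
func collectionPath(group, version, namespace, plural string) string {
	return fmt.Sprintf("/apis/%s/%s/namespaces/%s/%s", group, version, namespace, plural)
}

func main() {
	fmt.Println(crdName("prometheuses", "monitoring.coreos.com"))
	// "v1" and the "monitoring" namespace are assumptions for illustration.
	fmt.Println(collectionPath("monitoring.coreos.com", "v1", "monitoring", "prometheuses"))
}
```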

3.2 prometheus CRD controller

The entry code of the CRD controller is shown below. This controller is interested in quite a few kinds of events:

// Informer for the Prometheus custom resource.
c.promInf = cache.NewSharedIndexInformer(
	&cache.ListWatch{
		ListFunc:  mclient.MonitoringV1().Prometheuses(c.config.Namespace).List,
		WatchFunc: mclient.MonitoringV1().Prometheuses(c.config.Namespace).Watch,
	},
	&monitoringv1.Prometheus{}, resyncPeriod, cache.Indexers{},
)
c.promInf.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc:    c.handleAddPrometheus,
	DeleteFunc: c.handleDeletePrometheus,
	UpdateFunc: c.handleUpdatePrometheus,
})

// Informer for the ServiceMonitor custom resource.
c.smonInf = cache.NewSharedIndexInformer(
	&cache.ListWatch{
		ListFunc:  mclient.MonitoringV1().ServiceMonitors(c.config.Namespace).List,
		WatchFunc: mclient.MonitoringV1().ServiceMonitors(c.config.Namespace).Watch,
	},
	&monitoringv1.ServiceMonitor{}, resyncPeriod, cache.Indexers{},
)
c.smonInf.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc:    c.handleSmonAdd,
	DeleteFunc: c.handleSmonDelete,
	UpdateFunc: c.handleSmonUpdate,
})

// Informers for the built-in resources the controller depends on.
c.cmapInf = cache.NewSharedIndexInformer(
	cache.NewListWatchFromClient(c.kclient.Core().RESTClient(), "configmaps", c.config.Namespace, fields.Everything()),
	&v1.ConfigMap{}, resyncPeriod, cache.Indexers{},
)
c.cmapInf.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc:    c.handleConfigMapAdd,
	DeleteFunc: c.handleConfigMapDelete,
	UpdateFunc: c.handleConfigMapUpdate,
})

c.secrInf = cache.NewSharedIndexInformer(
	cache.NewListWatchFromClient(c.kclient.Core().RESTClient(), "secrets", c.config.Namespace, fields.Everything()),
	&v1.Secret{}, resyncPeriod, cache.Indexers{},
)
c.secrInf.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc:    c.handleSecretAdd,
	DeleteFunc: c.handleSecretDelete,
	UpdateFunc: c.handleSecretUpdate,
})

c.ssetInf = cache.NewSharedIndexInformer(
	cache.NewListWatchFromClient(c.kclient.AppsV1beta2().RESTClient(), "statefulsets", c.config.Namespace, fields.Everything()),
	&appsv1.StatefulSet{}, resyncPeriod, cache.Indexers{},
)
c.ssetInf.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc:    c.handleAddStatefulSet,
	DeleteFunc: c.handleDeleteStatefulSet,
	UpdateFunc: c.handleUpdateStatefulSet,
})

// Namespaces are watched across the whole cluster.
c.nsInf = cache.NewSharedIndexInformer(
	cache.NewListWatchFromClient(c.kclient.Core().RESTClient(), "namespaces", metav1.NamespaceAll, fields.Everything()),
	&v1.Namespace{}, resyncPeriod, cache.Indexers{},
)

As the code shows, the controller handles add, delete and update events for the following resources:

- Prometheus

- ServiceMonitor

- ConfigMap

- Secret

- StatefulSet
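A common way to structure such handlers in Kubernetes controllers is to have every add, update and delete callback push a "namespace/name" key onto a work queue that a separate loop then reconciles. The sketch below imitates that pattern with the standard library only. The types handlerFuncs, resource and queue are hypothetical stand-ins, not client-go types, and the excerpt above does not show whether the prometheus-operator handlers work exactly this way.

```go
package main

import "fmt"

// handlerFuncs mirrors the shape of the add/update/delete callbacks
// registered in the excerpt; it is a stand-in, not the client-go type.
type handlerFuncs struct {
	AddFunc    func(obj interface{})
	UpdateFunc func(oldObj, newObj interface{})
	DeleteFunc func(obj interface{})
}

// resource is a hypothetical minimal object with a namespace and a name.
type resource struct {
	Namespace, Name string
}

// queue collects the keys of objects that need to be reconciled.
type queue struct {
	keys []string
}

// enqueue records the "namespace/name" key of obj.
func (q *queue) enqueue(obj interface{}) {
	r, ok := obj.(resource)
	if !ok {
		return // ignore objects of an unexpected type
	}
	q.keys = append(q.keys, r.Namespace+"/"+r.Name)
}

// newHandlers returns callbacks that all funnel into the same queue.
func newHandlers(q *queue) handlerFuncs {
	return handlerFuncs{
		AddFunc:    q.enqueue,
		UpdateFunc: func(_, newObj interface{}) { q.enqueue(newObj) },
		DeleteFunc: q.enqueue,
	}
}

func main() {
	q := &queue{}
	h := newHandlers(q)
	p := resource{Namespace: "monitoring", Name: "k8s"} // made-up object
	h.AddFunc(p)
	h.UpdateFunc(p, p)
	h.DeleteFunc(p)
	fmt.Println(q.keys)
}
```

Funnelling every event type into one queue means the reconcile step only needs to compare desired and actual state for a key, regardless of which event triggered it.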

3.3 Deployment model

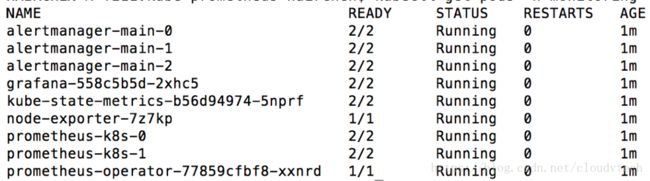

This CRD controller is built into a Docker image. When an instance of the CRD resource is deployed, a prometheus-operator Docker container is started (visible with kubectl get pods -n monitoring).

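This container connects to the Kubernetes master, receives notifications of state changes for the resources it watches through informers, and handles them accordingly, for example: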

- Creating Prometheus instances

- Creating Alertmanager instances

- Creating configuration files, and so on

Prometheus instances run as a StatefulSet, so the following function builds the Spec that Kubernetes uses to deploy this resource:

func makeStatefulSetSpec(p monitoringv1.Prometheus, c *Config, ruleConfigMaps []*v1.ConfigMap) (*appsv1.StatefulSetSpec, error) {
	...
	return &appsv1.StatefulSetSpec{
		ServiceName:         governingServiceName,
		Replicas:            p.Spec.Replicas,
		PodManagementPolicy: appsv1.ParallelPodManagement,
		UpdateStrategy: appsv1.StatefulSetUpdateStrategy{
			Type: appsv1.RollingUpdateStatefulSetStrategyType,
		},
		Selector: &metav1.LabelSelector{
			MatchLabels: finalLabels,
		},
		Template: v1.PodTemplateSpec{
			ObjectMeta: metav1.ObjectMeta{
				Labels:      finalLabels,
				Annotations: podAnnotations,
			},
			Spec: v1.PodSpec{
				// Two built-in containers come first, followed by any
				// containers listed in the Prometheus resource.
				Containers: append([]v1.Container{
					{
						Name:           "prometheus",
						Image:          fmt.Sprintf("%s:%s", p.Spec.BaseImage, p.Spec.Version),
						Ports:          ports,
						Args:           promArgs,
						VolumeMounts:   promVolumeMounts,
						LivenessProbe:  livenessProbe,
						ReadinessProbe: readinessProbe,
						Resources:      p.Spec.Resources,
					}, {
						Name:  "prometheus-config-reloader",
						Image: c.PrometheusConfigReloader,
						Env: []v1.EnvVar{
							{
								Name: "POD_NAME",
								ValueFrom: &v1.EnvVarSource{
									FieldRef: &v1.ObjectFieldSelector{FieldPath: "metadata.name"},
								},
							},
						},
						Args:         configReloadArgs,
						VolumeMounts: configReloadVolumeMounts,
						Resources: v1.ResourceRequirements{
							Limits: v1.ResourceList{
								v1.ResourceCPU:    resource.MustParse("10m"),
								v1.ResourceMemory: resource.MustParse("50Mi"),
							},
						},
					},
				}, p.Spec.Containers...),
				SecurityContext:               securityContext,
				ServiceAccountName:            p.Spec.ServiceAccountName,
				NodeSelector:                  p.Spec.NodeSelector,
				TerminationGracePeriodSeconds: &terminationGracePeriod,
				Volumes:                       volumes,
				Tolerations:                   p.Spec.Tolerations,
				Affinity:                      p.Spec.Affinity,
			},
		},
	}, nil
}
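The way the container list is assembled in this function can be isolated in a small standard-library sketch: the image reference is built from a base image and a version, and user-supplied containers are appended after the two built-in ones. The container type and the buildContainers helper are hypothetical stand-ins for v1.Container and the operator code, and all image names and tags are made-up example values.

```go
package main

import "fmt"

// container is a hypothetical stand-in for v1.Container.
type container struct {
	Name  string
	Image string
}

// buildContainers places the two built-in containers first and appends any
// user-supplied containers after them.
func buildContainers(baseImage, version, reloaderImage string, extra []container) []container {
	builtin := []container{
		{Name: "prometheus", Image: fmt.Sprintf("%s:%s", baseImage, version)},
		{Name: "prometheus-config-reloader", Image: reloaderImage},
	}
	return append(builtin, extra...)
}

func main() {
	// All image names and tags below are made-up example values.
	cs := buildContainers(
		"quay.io/prometheus/prometheus", "v2.2.1",
		"example/config-reloader:v0.0.1",
		[]container{{Name: "sidecar", Image: "example/sidecar:latest"}},
	)
	for _, c := range cs {
		fmt.Printf("%s -> %s\n", c.Name, c.Image)
	}
}
```

Because the built-in containers are always placed first, sidecars declared in the Prometheus resource extend the POD but do not replace the Prometheus or config-reloader containers.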