Bulk-Importing Hive Table Data into HBase with Spark

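First, copy the cluster's hdfs-site.xml and hive-site.xml into the project's resources directory. IntelliJ IDEA is recommended for developing Spark programs and will noticeably shorten your development time. The Scala code goes in the scala directory shown below.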

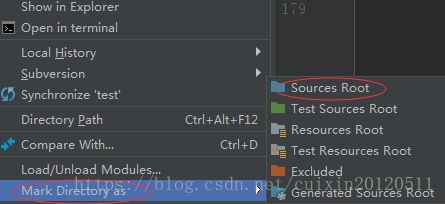

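A newly created project does not have this folder, so create it yourself, then right-click it and use the context menu (Mark Directory as > Sources Root) to turn it into a sources root.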
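If hive-site.xml does not actually end up on the classpath, Spark's Hive support typically falls back to a local Derby metastore and the query later fails with a table-not-found error, which is easy to misread. The sketch below is a small, hypothetical helper (the name `ClasspathCheck` is made up for illustration) that asks the class loader whether the two copied files are visible; run it from IDEA before the real job.

```scala
object ClasspathCheck {
  // Returns the resource names that the class loader cannot find on the classpath.
  def missingResources(names: Seq[String]): Seq[String] = {
    // Prefer the context class loader; fall back to this class's loader if it is unset
    val loader = Option(Thread.currentThread().getContextClassLoader)
      .getOrElse(getClass.getClassLoader)
    names.filter(name => loader.getResource(name) == null)
  }

  def main(args: Array[String]): Unit = {
    // The two files this post copies into src/main/resources
    val missing = missingResources(Seq("hdfs-site.xml", "hive-site.xml"))
    if (missing.isEmpty) println("All cluster config files are on the classpath")
    else println(s"Missing from the classpath: ${missing.mkString(", ")}")
  }
}
```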

Main dependencies and packaging plugins in pom.xml:

Property: `spark.version` = `2.2.1`

Dependencies (groupId:artifactId:version):

- `org.scala-lang:scala-library:2.11.12`
- `org.apache.spark:spark-core_2.11:${spark.version}`
- `org.apache.spark:spark-sql_2.11:${spark.version}`
- `org.apache.spark:spark-hive_2.11:${spark.version}`
- `org.apache.hbase:hbase-common:1.2.6`
- `org.apache.hbase:hbase-server:1.2.6`
- `org.apache.hbase:hbase-client:1.2.6`
- `mysql:mysql-connector-java:5.1.38`
- `org.apache.hadoop:hadoop-client:2.7.3`
- `org.apache.hive:hive-hbase-handler:2.3.2`

Build plugins:

- `net.alchim31.maven:scala-maven-plugin:3.2.2`, with execution `scala-compile-first` (phase `process-resources`, goals `add-source` and `compile`) and execution `scala-test-compile` (phase `process-test-resources`, goal `testCompile`)
- `org.apache.maven.plugins:maven-compiler-plugin:3.5.1`, with one execution in phase `compile` (goal `compile`)
- `org.apache.maven.plugins:maven-shade-plugin:2.4.3`, bound to phase `package` (goal `shade`), with a filter on artifact `*:*` that excludes `META-INF/*.SF`, `META-INF/*.DSA` and `META-INF/*.RSA` (signature files copied from signed dependencies would otherwise make the shaded jar fail with an invalid-signature `SecurityException`)

Complete Spark code

import java.net.URI
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.{FileSystem, Path}
import org.apache.hadoop.hbase.client.ConnectionFactory
import org.apache.hadoop.hbase.io.ImmutableBytesWritable
import org.apache.hadoop.hbase.mapreduce.{HFileOutputFormat2, LoadIncrementalHFiles}
import org.apache.hadoop.hbase.util.Bytes
import org.apache.hadoop.hbase.{HBaseConfiguration, KeyValue, TableName}
import org.apache.hadoop.mapreduce.Job
import org.apache.spark.sql.SparkSession

object ImportGPS {

  // Replace null or empty field values with the literal string "NULL"
  def nullHandle(str: String): String =
    if (str == null || str.isEmpty) "NULL" else str

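  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("import")
      .master("local[*]") // local mode for running inside IDEA; remove this line when submitting with spark-submit, since it overrides --master
      .enableHiveSupport()
      .getOrCreate()

    // Read the data from Hive. The data lives on HDFS and the Hive table here is external; a managed (internal) table works the same way
    val data = spark.sql("select *, ID rowkey from tableName")

    // cf is the column family name; ID, DATA_TYPE and DEVICE_ID are field names.
    // Hive stores column names in lower case, hence toLowerCase; getAs[String] assumes these columns are strings
    val dataRdd = data.rdd.flatMap(row => {
      val rowkey = row.getAs[String]("rowkey")
      Array(
        (rowkey, ("cf", "ID", nullHandle(row.getAs[String]("ID".toLowerCase)))),
        (rowkey, ("cf", "DATA_TYPE", nullHandle(row.getAs[String]("DATA_TYPE".toLowerCase)))),
        (rowkey, ("cf", "DEVICE_ID", nullHandle(row.getAs[String]("DEVICE_ID".toLowerCase))))
      )
    })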

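    // HFiles must receive cells ordered by row key, column family and column name, so sort first and convert afterwards.
    // Rows with a null row key are filtered out to avoid bad data. Sorting before the conversion also avoids
    // shuffling ImmutableBytesWritable/KeyValue, which are not Java-serializable.
    val rdds = dataRdd.filter(x => x._1 != null)
      .sortBy(x => (x._1, x._2._1, x._2._2))
      .map(x => {
        // Convert to the format HFile expects: key = ImmutableBytesWritable, value = KeyValue
        val rowKey = Bytes.toBytes(x._1)
        val family = Bytes.toBytes(x._2._1)
        val column = Bytes.toBytes(x._2._2)
        val value = Bytes.toBytes(x._2._3)
        (new ImmutableBytesWritable(rowKey), new KeyValue(rowKey, family, column, value))
      })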

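    // Temporary HFile directory on HDFS. The path is fully qualified so that the HFiles land on HDFS even if
    // the HBase configuration below does not set fs.defaultFS (only hdfs-site.xml and hive-site.xml are on the classpath)
    val tmpdir = "hdfs://cluster1/tmp/test"
    val hconf = new Configuration()
    hconf.set("fs.defaultFS", "hdfs://cluster1")
    val fs = FileSystem.get(new URI("hdfs://cluster1"), hconf, "hadoop") // "hadoop" is the user name on your servers
    // The HFile output directory must not exist yet, so delete it if a previous run left it behind
    if (fs.exists(new Path(tmpdir))) {
      println("Deleting temporary directory")
      fs.delete(new Path(tmpdir), true)
    }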

    // Create the HBase configuration
    val conf = HBaseConfiguration.create()
    conf.set("hbase.zookeeper.quorum", "192.168.1.107,192.168.1.108,192.168.1.109")
    conf.set("hbase.zookeeper.property.clientPort", "2181")
    // Raise the per-region, per-family HFile limit (default 32) so the bulk load is not rejected when many HFiles are generated
    conf.setInt("hbase.mapreduce.bulkload.max.hfiles.perRegion.perFamily", 5000)

    // HBase table name; the table and its column family cf must already exist
    val tableName = TableName.valueOf("importData")
    // Create the HBase connection from the configuration above (it locates the cluster through ZooKeeper)
    val conn = ConnectionFactory.createConnection(conf)
    val table = conn.getTable(tableName)
    val admin = conn.getAdmin
    try {
      // Region layout of the target table
      val regionLocator = conn.getRegionLocator(tableName)
      // A MapReduce Job is used only to carry the HFileOutputFormat2 settings; any job name will do
      val job = Job.getInstance(conf)
      job.setJobName("test")
      // We are generating HFiles, so the output key is ImmutableBytesWritable and the value is KeyValue
      job.setMapOutputKeyClass(classOf[ImmutableBytesWritable])
      job.setMapOutputValueClass(classOf[KeyValue])
      // Copy the table's per-family settings (compression, bloom filter, block size) into the job configuration.
      // This must run BEFORE the HFiles are written, and job.getConfiguration must be passed to the writer
      HFileOutputFormat2.configureIncrementalLoad(job, table, regionLocator)

      // Write the HFiles into tmpdir
      rdds.saveAsNewAPIHadoopFile(tmpdir,
        classOf[ImmutableBytesWritable],
        classOf[KeyValue],
        classOf[HFileOutputFormat2],
        job.getConfiguration)

      // Move the generated HFiles into HBase; from here on it is plain HBase API
      val load = new LoadIncrementalHFiles(conf)
      load.doBulkLoad(new Path(tmpdir), admin, table, regionLocator)
    } finally {
      admin.close()
      table.close()
      conn.close()
    }

    spark.stop()
  }
}
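The HFile writer throws an error when a cell arrives out of order, and HBase orders keys as unsigned bytes, whereas the job's `sortBy` compares Java Strings. For ASCII keys, and for most Basic Multilingual Plane text including Chinese, the two orders agree; they can diverge for characters outside that plane. The sketch below reproduces the flatten, filter and sort steps on plain Scala collections so the ordering can be checked without a cluster. It rests on assumptions: a `Map` of lower-case column names stands in for a Spark `Row`, the `id` key plays the role of the `rowkey` alias, and `Cell`, `compareBytes` and `cellOrdering` are hypothetical names, with `compareBytes` a hand-written stand-in for HBase's `Bytes.compareTo`.

```scala
import java.nio.charset.StandardCharsets

object CellSortSketch {
  // One HBase cell before conversion to KeyValue (hypothetical stand-in for the job's tuples)
  final case class Cell(rowkey: String, family: String, qualifier: String, value: String)

  // Same rule as nullHandle in the job: null or empty becomes the literal string "NULL"
  def nullHandle(str: String): String =
    if (str == null || str.isEmpty) "NULL" else str

  // Unsigned lexicographic comparison of byte arrays, the order HBase uses for keys
  def compareBytes(a: Array[Byte], b: Array[Byte]): Int = {
    val n = math.min(a.length, b.length)
    var i = 0
    while (i < n) {
      val diff = (a(i) & 0xff) - (b(i) & 0xff)
      if (diff != 0) return diff
      i += 1
    }
    a.length - b.length
  }

  private def utf8(s: String): Array[Byte] = s.getBytes(StandardCharsets.UTF_8)

  // Orders cells by (rowkey, family, qualifier) on their UTF-8 bytes instead of String.compareTo
  val cellOrdering: Ordering[Cell] = new Ordering[Cell] {
    def compare(x: Cell, y: Cell): Int = {
      val byRow = compareBytes(utf8(x.rowkey), utf8(y.rowkey))
      if (byRow != 0) byRow
      else {
        val byFamily = compareBytes(utf8(x.family), utf8(y.family))
        if (byFamily != 0) byFamily
        else compareBytes(utf8(x.qualifier), utf8(y.qualifier))
      }
    }
  }

  // Flatten each row into one cell per column, drop rows without a row key, then sort
  def toSortedCells(rows: Seq[Map[String, String]], family: String, columns: Seq[String]): Seq[Cell] =
    rows
      .flatMap { row =>
        val rowkey = row.getOrElse("id", null)
        columns.map(col => Cell(rowkey, family, col, nullHandle(row.getOrElse(col.toLowerCase, null))))
      }
      .filter(_.rowkey != null)
      .sorted(cellOrdering)

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      Map("id" -> "2", "data_type" -> "gps", "device_id" -> ""),
      Map("id" -> "1", "data_type" -> "gps", "device_id" -> "d1")
    )
    toSortedCells(rows, "cf", Seq("ID", "DATA_TYPE", "DEVICE_ID")).foreach(println)
  }
}
```

Within each row the qualifiers come out as DATA_TYPE, DEVICE_ID, ID, the same order the Spark job produces for these ASCII names. If your row keys may contain characters outside the BMP, an `Ordering` built like `cellOrdering` could be supplied to `sortBy` in place of the default tuple ordering (Scala's `Ordering` is serializable), though the extra UTF-8 encoding per comparison has a cost that is worth measuring first.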