OpenCV: Feature Matching

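1: Overview

In the previous two sections we covered a range of feature detection algorithms: SIFT, SURF, HOG, LBP, Haar, and so on. Each of them gives us feature descriptors for an image. We can now use those descriptors to match one image against another.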

2: FLANN Feature Matching

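FLANN stands for Fast Library for Approximate Nearest Neighbors. It is an open-source library for fast (approximate) nearest neighbor search in high-dimensional data. Besides implementing a collection of search algorithms, it includes a mechanism that automatically selects the fastest algorithm for a given dataset.

For floating-point descriptors such as SURF, FLANN's search relies mainly on k-d trees (OpenCV's FlannBasedMatcher uses a k-d tree index by default). How a k-d tree works, and how it is used for feature matching, is explained clearly in a separate blog post, so I will not repeat it here. This section focuses on putting the algorithm into practice.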
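To make the k-d tree idea concrete, here is a minimal sketch of an exact nearest neighbor search on 2-D points. It is an illustration only, not FLANN's implementation: FLANN searches several randomized trees approximately and works on descriptors with 64 or more dimensions. The names `Point2`, `KdNode`, and `KdTree` are made up for this example.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical 2-D point type; real descriptors have far more dimensions.
using Point2 = std::array<double, 2>;

struct KdNode {
    Point2 point;
    int left = -1;   // index of the left child in the node array, -1 if none
    int right = -1;  // index of the right child, -1 if none
};

class KdTree {
public:
    explicit KdTree(std::vector<Point2> points) {
        nodes_.reserve(points.size());
        root_ = build(points, 0, points.size(), 0);
    }

    // Returns the stored point closest to `query`. The tree must not be empty.
    Point2 nearest(const Point2& query) const {
        int best = -1;
        double bestDist = std::numeric_limits<double>::max();
        search(root_, query, 0, best, bestDist);
        return nodes_[best].point;
    }

private:
    // Recursively splits pts[lo, hi) at the median of the current axis.
    int build(std::vector<Point2>& pts, std::size_t lo, std::size_t hi, int depth) {
        if (lo >= hi) return -1;
        const int axis = depth % 2;
        const std::size_t mid = lo + (hi - lo) / 2;
        std::nth_element(pts.begin() + lo, pts.begin() + mid, pts.begin() + hi,
                         [axis](const Point2& a, const Point2& b) { return a[axis] < b[axis]; });
        KdNode node;
        node.point = pts[mid];
        const int index = static_cast<int>(nodes_.size());
        nodes_.push_back(node);
        const int left = build(pts, lo, mid, depth + 1);
        const int right = build(pts, mid + 1, hi, depth + 1);
        nodes_[index].left = left;
        nodes_[index].right = right;
        return index;
    }

    static double sqDist(const Point2& a, const Point2& b) {
        const double dx = a[0] - b[0];
        const double dy = a[1] - b[1];
        return dx * dx + dy * dy;
    }

    void search(int node, const Point2& q, int depth, int& best, double& bestDist) const {
        if (node < 0) return;
        const KdNode& n = nodes_[node];
        const double d = sqDist(n.point, q);
        if (d < bestDist) { bestDist = d; best = node; }
        const int axis = depth % 2;
        const double diff = q[axis] - n.point[axis];
        const int nearChild = diff < 0 ? n.left : n.right;
        const int farChild = diff < 0 ? n.right : n.left;
        search(nearChild, q, depth + 1, best, bestDist);
        // Cross the splitting plane only if a closer point could lie beyond it.
        if (diff * diff < bestDist) search(farChild, q, depth + 1, best, bestDist);
    }

    std::vector<KdNode> nodes_;
    int root_ = -1;
};
```

With the points (2,3), (5,4), (9,6), (4,7), (8,1), (7,2) in the tree, a query at (9,2) returns (8,1). The pruning test on the far child is what makes the search faster than checking every point. An approximate search goes one step further and stops after visiting a bounded number of nodes.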

Here is the code:

#include

#include

#include

#include

using namespace std;

using namespace cv;

using namespace cv::xfeatures2d;

int main() {

Mat Img1 = imread("D:\\opencv\\img1.jpg");

Mat Img2 = imread("D:\\opencv\\img2.jpg");

Mat img1,img2;

resize(Img1, img1, Size(Img1.cols / 8, Img1.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

resize(Img2, img2, Size(Img2.cols / 8, Img2.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

Mat gray_img1, gray_img2;

imshow("img1:",img1);

imshow("img2:", img2);

cvtColor(img1, gray_img1, CV_BGR2GRAY);

cvtColor(img2, gray_img2, CV_BGR2GRAY);

//SURF特征提取

int minHessian = 400;

Ptr detector = SURF::create(minHessian);

vector keyPoint_obj;

vector keyPoint_scene;

Mat descriptor_obj, descriptor_scene;

detector->detectAndCompute(gray_img1, Mat(), keyPoint_obj, descriptor_obj, false);

detector->detectAndCompute(gray_img2, Mat(), keyPoint_scene, descriptor_scene, false);

//匹配

FlannBasedMatcher Matcher;

vector matches;

Matcher.match(descriptor_obj, descriptor_scene, matches);

//寻找最大最小距离

double minDistance = 1000;

double maxDistance = 0;

for (int i = 0; i < descriptor_obj.rows; i++) {

double dist = matches[i].distance;

if (dist < minDistance)

minDistance = dist;

if (dist > maxDistance)

maxDistance = dist;

}

//选取最优的匹配

vector goodMatch;

for (int i = 0; i < descriptor_obj.rows; i++) {

double dist = matches[i].distance;

if (dist < max(2 * minDistance, 0.02)) {

goodMatch.push_back(matches[i]);

}

}

//将匹配关系表示出来

Mat matchesImg;

drawMatches(img1, keyPoint_obj, img2, keyPoint_scene, goodMatch, matchesImg, Scalar::all(-1), Scalar::all(-1),

vector(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

imshow("matchImg", matchesImg);

waitKey(0);

return(0);

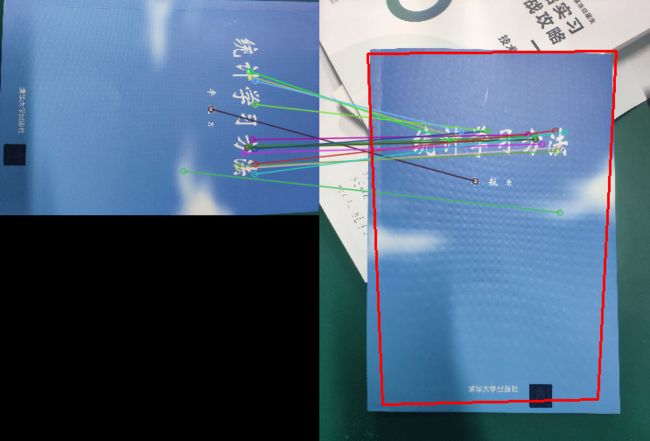

} 执行效果如下所示:
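The filtering step in the listing above can be looked at in isolation. Below is a minimal sketch that needs no OpenCV: `Match` is a hypothetical stand-in for `cv::DMatch`, and `filterByMinDistance` and its `minThreshold` parameter are names invented for this example. The rule is the same as in the listing: keep a match only if its distance is below `max(2 * minDistance, 0.02)`.

```cpp
#include <algorithm>
#include <iterator>
#include <vector>

// Hypothetical stand-in for cv::DMatch with only the fields used here.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keeps the matches whose distance is below max(2 * smallest distance, minThreshold).
// The 0.02 default mirrors the constant used in the listing above.
std::vector<Match> filterByMinDistance(const std::vector<Match>& matches,
                                       double minThreshold = 0.02) {
    std::vector<Match> good;
    if (matches.empty()) return good;

    const auto minIt = std::min_element(
        matches.begin(), matches.end(),
        [](const Match& a, const Match& b) { return a.distance < b.distance; });
    const double threshold = std::max(2.0 * minIt->distance, minThreshold);

    std::copy_if(matches.begin(), matches.end(), std::back_inserter(good),
                 [threshold](const Match& m) { return m.distance < threshold; });
    return good;
}
```

The `minThreshold` floor matters when the best match has a distance of (almost) zero: without it the threshold would collapse to zero and every other match would be rejected. The factor 2 and the 0.02 floor are tuning constants, so expect to adjust them for other descriptors or images.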

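Planar object recognition

Continuing from the steps above: once we have the matches for the object, we can recognize and locate it in the scene. This mainly uses two functions. findHomography estimates the perspective transformation between the two sets of matched points, and perspectiveTransform applies that transformation to a set of points. Their details are covered in a separate blog post; the code below shows how they are used: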

#include

#include

#include

#include

using namespace std;

using namespace cv;

using namespace cv::xfeatures2d;

int main() {

Mat Img1 = imread("D:\\opencv\\img1.jpg");

Mat Img2 = imread("D:\\opencv\\img2.jpg");

Mat img1,img2;

resize(Img1, img1, Size(Img1.cols / 8, Img1.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

resize(Img2, img2, Size(Img2.cols / 8, Img2.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

Mat gray_img1, gray_img2;

imshow("img1:",img1);

imshow("img2:", img2);

cvtColor(img1, gray_img1, CV_BGR2GRAY);

cvtColor(img2, gray_img2, CV_BGR2GRAY);

//SURF特征提取

int minHessian = 400;

Ptr detector = SURF::create(minHessian);

vector keyPoint_obj;

vector keyPoint_scene;

Mat descriptor_obj, descriptor_scene;

detector->detectAndCompute(gray_img1, Mat(), keyPoint_obj, descriptor_obj, false);

detector->detectAndCompute(gray_img2, Mat(), keyPoint_scene, descriptor_scene, false);

//匹配

FlannBasedMatcher Matcher;

vector matches;

Matcher.match(descriptor_obj, descriptor_scene, matches);

//寻找最大最小距离

double minDistance = 1000;

double maxDistance = 0;

for (int i = 0; i < descriptor_obj.rows; i++) {

double dist = matches[i].distance;

if (dist < minDistance)

minDistance = dist;

if (dist > maxDistance)

maxDistance = dist;

}

//选取最优的匹配

vector goodMatch;

for (int i = 0; i < descriptor_obj.rows; i++) {

double dist = matches[i].distance;

if (dist < max(2 * minDistance, 0.02)) {

goodMatch.push_back(matches[i]);

}

}

//将匹配关系表示出来

Mat matchesImg;

drawMatches(img1, keyPoint_obj, img2, keyPoint_scene, goodMatch, matchesImg, Scalar::all(-1), Scalar::all(-1),

vector(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

imshow("matchImg", matchesImg);

vector obj;

vector objInScene;

for (size_t t = 0; t < goodMatch.size(); t++) {

//queryIdx 为第一图的特征点的 ID,也为query描述子的索引

//pt:关键点的点坐标

obj.push_back(keyPoint_obj[goodMatch[t].queryIdx].pt);

objInScene.push_back(keyPoint_scene[goodMatch[t].trainIdx].pt);

}

//获取变换矩阵

Mat H = findHomography(obj, objInScene, RANSAC);

vector obj_corners(4); //原始坐标的角点

vector scene_corners(4); //透视变换后的对象点

obj_corners[0] = Point(0, 0);

obj_corners[1] = Point(img1.cols, 0);

obj_corners[2] = Point(img1.cols, img1.rows);

obj_corners[3] = Point(0, img1.rows);

//获得变换后对象坐标点

perspectiveTransform(obj_corners, scene_corners, H);

// 画线,因为要在新图中画出识别的对象,因此对象坐标要加上第一张图的宽

line(matchesImg, scene_corners[0] + Point2f(img1.cols, 0), scene_corners[1] + Point2f(img1.cols, 0), Scalar(0, 0, 255), 2, 8, 0);

line(matchesImg, scene_corners[1] + Point2f(img1.cols, 0), scene_corners[2] + Point2f(img1.cols, 0), Scalar(0, 0, 255), 2, 8, 0);

line(matchesImg, scene_corners[2] + Point2f(img1.cols, 0), scene_corners[3] + Point2f(img1.cols, 0), Scalar(0, 0, 255), 2, 8, 0);

line(matchesImg, scene_corners[3] + Point2f(img1.cols, 0), scene_corners[0] + Point2f(img1.cols, 0), Scalar(0, 0, 255), 2, 8, 0);

Mat dst;

cvtColor(gray_img2, dst, COLOR_GRAY2BGR);

line(dst, scene_corners[0], scene_corners[1], Scalar(0, 0, 255), 2, 8, 0);

line(dst, scene_corners[1], scene_corners[2], Scalar(0, 0, 255), 2, 8, 0);

line(dst, scene_corners[2], scene_corners[3], Scalar(0, 0, 255), 2, 8, 0);

line(dst, scene_corners[3], scene_corners[0], Scalar(0, 0, 255), 2, 8, 0);

imshow("find known object demo", matchesImg);

imshow("Draw object", dst);

waitKey(0);

return(0);

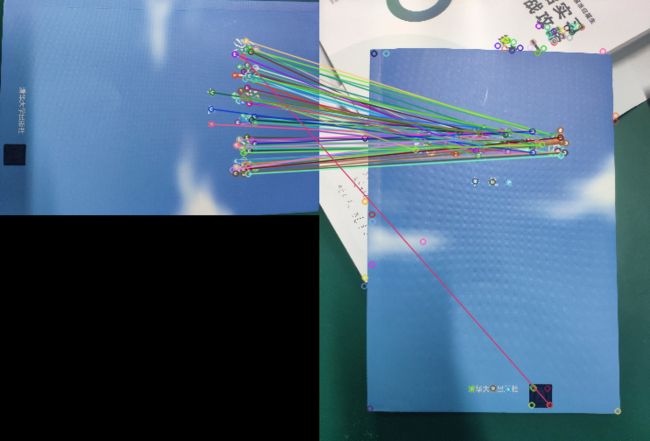

} 测试效果:
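What perspectiveTransform does to each corner is short enough to write out by hand. The sketch below is plain C++ without OpenCV; `Pt` and `applyHomography` are hypothetical names for this example. It assumes the usual homogeneous-coordinate convention: multiply the 3x3 matrix by (x, y, 1), then divide by the third component.

```cpp
// Hypothetical 2-D point type for this sketch.
struct Pt {
    double x;
    double y;
};

// Maps p through the 3x3 homography H:
//   (xh, yh, w) = H * (p.x, p.y, 1), result = (xh / w, yh / w).
// Assumes w != 0; w == 0 would mean the point maps to infinity.
Pt applyHomography(const double (&H)[3][3], const Pt& p) {
    const double xh = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double yh = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w  = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    Pt out;
    out.x = xh / w;
    out.y = yh / w;
    return out;
}
```

For a matrix with rows (2, 0, 10), (0, 2, 5), (0, 0, 1), the point (3, 4) maps to (16, 13): a scale by 2 followed by a shift. When the last row is not (0, 0, 1), the division by `w` produces the perspective effect, which is why the object outline in the scene is a general quadrilateral and not a rectangle.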

3: The KAZE and AKAZE Algorithms

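Blog posts for learning the KAZE algorithm:

- 一点一滴完全突破KAZE特征检测算法(一) [Mastering the KAZE Feature Detection Algorithm Step by Step, Part 1]

- 一点一滴完全突破KAZE特征检测算法(二) [Part 2]

- 一点一滴完全突破KAZE特征检测算法(三) [Part 3]

- 一点一滴完全突破KAZE特征检测算法(四) [Part 4]

A five-part series of notes by another author:

KAZE算法原理与源码剖析 [KAZE: Principles and Source Code Analysis]

Blog posts for learning the AKAZE algorithm:

AKAZE算法分析 [An Analysis of the AKAZE Algorithm]

Code demonstration: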

#include

#include

#include

#include

using namespace std;

using namespace cv;

using namespace cv::xfeatures2d;

int main() {

Mat Img1 = imread("D:\\opencv\\img1.jpg");

Mat Img2 = imread("D:\\opencv\\img2.jpg");

Mat img1,img2;

resize(Img1, img1, Size(Img1.cols / 8, Img1.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

resize(Img2, img2, Size(Img2.cols / 8, Img2.rows / 8), (0, 0), (0, 0), INTER_LINEAR);

Mat gray_img1, gray_img2;

imshow("img1:",img1);

imshow("img2:", img2);

cvtColor(img1, gray_img1, CV_BGR2GRAY);

cvtColor(img2, gray_img2, CV_BGR2GRAY);

// 提取AKAZE特征描述子

Ptr detector = AKAZE::create();

vector keypoints_obj;

vector keypoints_scene;

Mat descriptor_obj, descriptor_scene;

double t1 = getTickCount();

detector->detectAndCompute(gray_img1, Mat(), keypoints_obj, descriptor_obj);

detector->detectAndCompute(gray_img2, Mat(), keypoints_scene, descriptor_scene);

double t2 = getTickCount();

//计算AKAZE算法消耗的时间

double tkaze = 1000 * (t2 - t1) / getTickFrequency();

printf("AKAZE Time consume(ms) : %f\n", tkaze);

//FLANN匹配

FlannBasedMatcher matcher(new flann::LshIndexParams(20, 10, 2));

vector matches;

matcher.match(descriptor_obj, descriptor_scene, matches);

// 将匹配关系表示出来

Mat akazeMatchesImg;

drawMatches(img1, keypoints_obj, img2, keypoints_scene, matches, akazeMatchesImg);

imshow("akaze match result", akazeMatchesImg);

waitKey(0);

return(0);

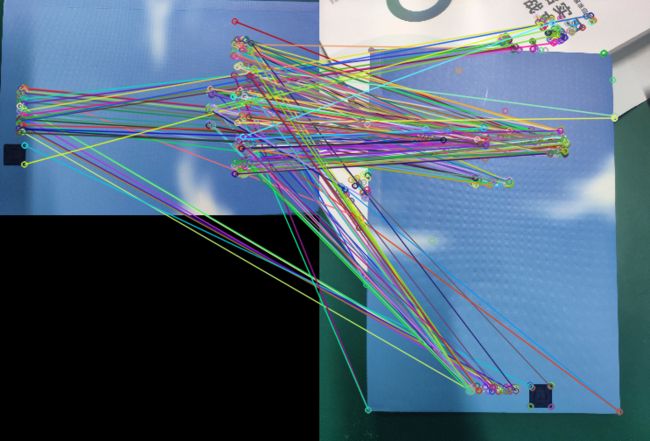

} 效果:

4: The BRISK Algorithm

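Before studying the BRISK feature extraction algorithm, it helps to look at one of its building blocks, the FAST feature detection algorithm. For how FAST works, see:

FAST特征检测算法 [The FAST Feature Detection Algorithm]

FAST is fairly simple. After that we can move on to BRISK. Plenty of people have already explained it clearly, so I will not repeat their work. Here are some blog resources that describe the algorithm:

Brisk特征提取算法 [The BRISK Feature Extraction Algorithm]

Brisk原文翻译 [Translation of the Original BRISK Paper]

If you have read these posts and still do not fully understand the algorithm, I suggest studying it alongside the source code. The rest of this section focuses on using the algorithm in practice.

Code: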

    #include <opencv2/opencv.hpp>
    #include <iostream>

    using namespace cv;
    using namespace std;

    int main(int argc, char** argv) {
        Mat Img1 = imread("D:\\opencv\\img1.jpg");
        Mat Img2 = imread("D:\\opencv\\img2.jpg");
        if (Img1.empty() || Img2.empty()) {
            cerr << "Could not load the input images" << endl;
            return -1;
        }

        // Shrink both images to 1/8 of their original size
        Mat img1, img2;
        resize(Img1, img1, Size(Img1.cols / 8, Img1.rows / 8), 0, 0, INTER_LINEAR);
        resize(Img2, img2, Size(Img2.cols / 8, Img2.rows / 8), 0, 0, INTER_LINEAR);
        imshow("box image", img1);
        imshow("scene image", img2);

        Mat gray_img1, gray_img2;
        cvtColor(img1, gray_img1, COLOR_BGR2GRAY);
        cvtColor(img2, gray_img2, COLOR_BGR2GRAY);

        // Extract BRISK keypoints and descriptors
        Ptr<BRISK> detector = BRISK::create();
        vector<KeyPoint> keypoints_obj;
        vector<KeyPoint> keypoints_scene;
        Mat descriptor_obj, descriptor_scene;
        detector->detectAndCompute(gray_img1, Mat(), keypoints_obj, descriptor_obj);
        detector->detectAndCompute(gray_img2, Mat(), keypoints_scene, descriptor_scene);

        // Brute-force matching. BRISK descriptors are binary strings, so compare
        // them with the Hamming distance (the original code used NORM_L2).
        BFMatcher matcher(NORM_HAMMING);
        vector<DMatch> matches;
        matcher.match(descriptor_obj, descriptor_scene, matches);

        // Draw the matches
        Mat matchesImg;
        drawMatches(img1, keypoints_obj, img2, keypoints_scene, matches, matchesImg);
        imshow("BRISK MATCH RESULT", matchesImg);
        waitKey(0);
        return 0;
    }

Result:
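For intuition about what a brute-force matcher does with binary descriptors such as BRISK's, here is a minimal sketch in plain C++. It is not OpenCV's implementation, and `Descriptor`, `hammingDistance`, and `bruteForceMatch` are names invented for this example. Each descriptor is treated as a byte string, the distance between two descriptors is the number of differing bits, and every query descriptor is compared against every train descriptor.

```cpp
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical descriptor type: one binary descriptor as a sequence of bytes.
using Descriptor = std::vector<std::uint8_t>;

// Number of bits that differ between two descriptors.
// Only the common prefix is compared if the lengths differ.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    int bits = 0;
    for (std::size_t i = 0; i < a.size() && i < b.size(); ++i) {
        bits += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return bits;
}

// For each query descriptor, returns the index of the closest train descriptor,
// or -1 if `train` is empty. Cost is O(query.size() * train.size()).
std::vector<int> bruteForceMatch(const std::vector<Descriptor>& query,
                                 const std::vector<Descriptor>& train) {
    std::vector<int> result;
    result.reserve(query.size());
    for (const Descriptor& q : query) {
        int bestIdx = -1;
        int bestDist = std::numeric_limits<int>::max();
        for (std::size_t j = 0; j < train.size(); ++j) {
            const int d = hammingDistance(q, train[j]);
            if (d < bestDist) {
                bestDist = d;
                bestIdx = static_cast<int>(j);
            }
        }
        result.push_back(bestIdx);
    }
    return result;
}
```

The bytes 0xFF and 0x0F differ in 4 bits, so their Hamming distance is 4. Because every pair is compared, brute-force matching always finds the true nearest descriptor, but its cost grows with the product of the two descriptor counts. That cost is the reason index-based approaches such as FLANN exist.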

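Summary

That is about it for feature detection, extraction, and matching in OpenCV. I may add more to it later. Next up are cascade classifiers and their applications.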