Material detection with TensorFlow/PyTorch (multi-class classification)

TensorFlow

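import tensorflow as tf  # this script uses the TensorFlow 1.x API
import cv2 as cv  # plain `import cv2` works across opencv-python versions
import numpy as np
import matplotlib.pyplot as plt
# The next two imports are used only by the commented-out debugging lines in TrainData.
from tensorflow.python import debug as tf_debug
from tensorboard.plugins.beholder import Beholder
import os
import sys
import time

FLAGS = tf.app.flags.FLAGS
tf.app.flags.DEFINE_string("image", "png/10_100.png", "input image file")

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'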

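theme = ['warm', 'cool', 'neutral', 'light']
color = ['solid color', 'floral pattern', 'geometric pattern', 'animal texture', 'other pattern']

# Maps the two-digit file-name prefix (used as the class label) to a material name.
material = {
    '10': 'wood',
    '11': 'metal',
    '12': 'fabric',
    '13': 'leather',
    '14': 'glass',
    '15': 'bamboo/rattan',
    '16': 'stone',
    '17': 'ceramic',
    '18': 'plastic'
}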

def Img2TrainFile(filename):
    """Pack every PNG under ./png/ into one TFRecord file."""
    writer = tf.python_io.TFRecordWriter(filename)
    for file in os.listdir('./png/'):
        if file.endswith('.png'):
            # Flag -1 (IMREAD_UNCHANGED) keeps the alpha channel.
            img = cv.imdecode(np.fromfile('./png/' + file, dtype=np.uint8), -1)
            # imdecode returns None on failure. The model expects 4 channels,
            # so images without an alpha channel are skipped (an assumption
            # about the data set; the original check was `img != []`).
            if img is not None and img.ndim == 3 and img.shape[2] == 4:
                img = cv.resize(img, (70, 70))
                #src_gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
                # 4. Compute the gradient with the Sobel operator
                # gx = cv.Sobel(src_gray, ddepth=cv.CV_16S, dx=1, dy=0)
                # gy = cv.Sobel(src_gray, ddepth=cv.CV_16S, dx=0, dy=1)
                # gx_abs = cv.convertScaleAbs(gx)
                # gy_abs = cv.convertScaleAbs(gy)
                # grad = cv.addWeighted(src1=gx_abs, alpha=0.5, src2=gy_abs, beta=0.5, gamma=0)
                pic = np.reshape(img, -1).tobytes()
                #test = np.frombuffer(pic,np.uint8)
                #test = np.reshape(test,(70,70))
                #plt.imshow(test)
                #plt.show()
                example = tf.train.Example(features=tf.train.Features(
                    feature={
                        # The first two characters of the file name are the label.
                        'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[int(file[:2])])),
                        'imag_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[pic]))
                    }))
                writer.write(example.SerializeToString())
    writer.close()

def DeepCNN():

#输入

x = tf.placeholder(tf.float32,[None,70,70,4])

y = tf.placeholder(tf.int32,[None,19])

## 第一层卷积操作 ##

with tf.name_scope('conv1'):

W_conv1 = tf.Variable(tf.truncated_normal([5, 5, 4,32], stddev=0.1))

b_conv1 = tf.Variable(tf.truncated_normal([32], stddev=0.1))

h_conv1 = tf.nn.relu(tf.nn.conv2d(x, W_conv1, strides=[1, 1,1, 1], padding='SAME') + b_conv1)

tf.summary.histogram('1/W_conv1',W_conv1)

tf.summary.histogram('1/b_conv1',b_conv1)

with tf.name_scope('pool1'):

h_pool1 = tf.nn.max_pool(h_conv1, ksize=[1, 2, 2, 1],strides=[1, 2, 2, 1], padding='SAME')

## 第二层卷积操作 ##

with tf.name_scope('conv2'):

W_conv2 = tf.Variable(tf.truncated_normal([5, 5, 32, 64],stddev=0.1))

b_conv2 = tf.Variable(tf.truncated_normal([64],stddev=0.1))

h_conv2 = tf.nn.relu(tf.nn.conv2d(h_pool1, W_conv2, strides=[1, 1, 1, 1], padding='SAME') + b_conv2)

tf.summary.histogram('2/W_conv1',W_conv2)

tf.summary.histogram('2/b_conv1',b_conv2)

with tf.name_scope('pool2'):

h_pool2 = tf.nn.max_pool(h_conv2, ksize=[1, 2, 2, 1],strides=[1, 2, 2, 1], padding='SAME')

## 第三层全连接操作 ##

with tf.name_scope('fc1'):

W_fc1 = tf.Variable(tf.truncated_normal([18 * 18 * 64, 1024],stddev=0.1))

b_fc1 = tf.Variable(tf.truncated_normal([1024],stddev=0.1))

h_pool2_flat = tf.reshape(h_pool2, [-1, 18 * 18 * 64])

h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

tf.summary.histogram('3/W_conv1',W_fc1)

tf.summary.histogram('3/b_conv1',b_fc1)

with tf.name_scope('dropout'):

keep_prob = tf.placeholder(tf.float32)

h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

## 第四层输出操作 ##

with tf.name_scope('fc2'):

W_fc2 = tf.Variable(tf.truncated_normal([1024, 19],stddev=0.1))

b_fc2 = tf.Variable(tf.truncated_normal([19],stddev=0.1))

y_conv = tf.matmul(h_fc1_drop, W_fc2) + b_fc2

tf.summary.histogram('4/W_fc2',W_fc2)

tf.summary.histogram('4/b_fc2',b_fc2)

with tf.name_scope('loss'):

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(labels=y,logits=y_conv)

cross_entropy = tf.reduce_mean(cross_entropy)

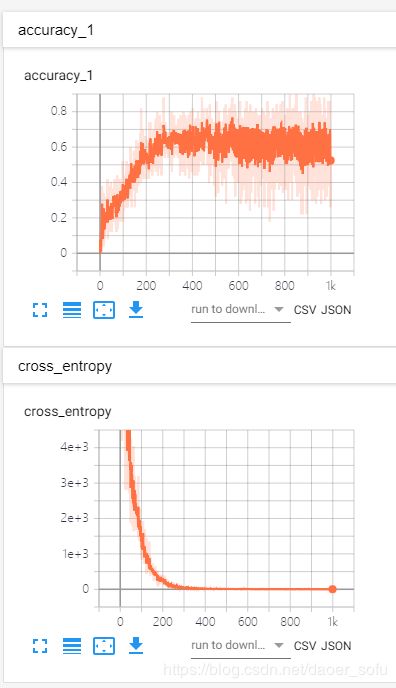

tf.summary.scalar('cross_entropy',cross_entropy)

with tf.name_scope('adam_optimizer'):

train_step = tf.train.AdamOptimizer(0.0001).minimize(cross_entropy)

with tf.name_scope('accuracy'):

correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y, 1))

correct_prediction = tf.cast(correct_prediction, tf.float32)

accuracy = tf.reduce_mean(correct_prediction)

tf.summary.scalar('accuracy',accuracy)

return x,y,y_conv,keep_prob,accuracy,cross_entropy,train_step

def TrainData(filename):
    # Build the model
    x, y, y_conv, keep_prob, accuracy, cross_entropy, train_step = DeepCNN()
    # Create these ops once; building them inside the loop would add new
    # nodes to the graph on every step.
    merged = tf.summary.merge_all()
    prediction = tf.argmax(y_conv, 1)
    # TFRecord input pipeline
    dataset = tf.data.TFRecordDataset(filename)
    dataset = dataset.map(parse_example).repeat().batch(50)
    next_image, next_label = dataset.make_one_shot_iterator().get_next()
    # Session
    with tf.Session() as sess:
        saver = tf.train.Saver(max_to_keep=1)
        sess.run(tf.global_variables_initializer())
        # TensorBoard event files
        logPath = './logs/' + time.strftime('%Y%m%d_%H%M%S', time.localtime())
        logs = tf.summary.FileWriter(logPath, sess.graph)
        #beholder = Beholder(logPath)
        #coord = tf.train.Coordinator()
        #threads = tf.train.start_queue_runners(coord=coord)
        #debug_sess = tf_debug.TensorBoardDebugWrapperSession(sess, "localhost:90")
        for epoch in range(3000):  # each iteration is one training step on one batch
            image, label = sess.run([next_image, next_label])
            label = np.eye(19, dtype=np.float32)[label]  # one-hot encode the labels
            image = np.reshape(image, (-1, 70, 70, 4))
            # plt.imshow(image[0])
            # plt.show()
            #beholder.update(session=sess,arrays=image)
            #debug_sess.run(cross_entropy)
            cos, acc, result, _, yy = sess.run(
                [cross_entropy, accuracy, merged, train_step, prediction],
                feed_dict={x: image, y: label, keep_prob: 0.5})
            #if(epoch % 50 == 0):
            logs.add_summary(result, epoch)
            print('epoch is %d, loss is %f, accuracy is %f' % (epoch, cos, acc))
        # Make sure the checkpoint directory exists before saving.
        os.makedirs('logs/train', exist_ok=True)
        saver.save(sess, 'logs/train/data')
        #coord.request_stop()
        #coord.join(threads)

def parse_example(example):
    feats = tf.parse_single_example(example, features={
        'imag_raw': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([], tf.int64)
    })
    image = tf.decode_raw(feats['imag_raw'], tf.uint8)
    label = feats['label']
    return image, label

def TestData(filename):
    # Build the model
    x, y, y_conv, keep_prob, _, _, _ = DeepCNN()
    img = cv.imdecode(np.fromfile(filename, dtype=np.uint8), -1)
    img = cv.resize(img, (70, 70))
    img = np.reshape(img, (1, 70, 70, 4))
    with tf.Session() as sess:
        # Restore the trained weights, then run the prediction
        saver = tf.train.Saver()
        saver.restore(sess, './logs/train/data')
        label = np.zeros((1, 19))
        ret = sess.run(tf.argmax(y_conv, 1), feed_dict={x: img, y: label, keep_prob: 1})
        print('======================================================')
        # Unknown class ids fall back to an empty material name.
        print('recognized material id is %d, %s' % (ret[0], material.get(str(ret[0]), '')))
        print('======================================================')

def main(_):
    filename = 'train.tfrecords'
    #Img2TrainFile(filename)
    #TrainData(filename)
    TestData(FLAGS.image)

if __name__ == '__main__':
    tf.app.run()
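The `18 * 18 * 64` used in the `fc1` layer follows from applying two stride-2 pooling layers with `padding='SAME'` to a 70x70 input. Below is a minimal sketch of that arithmetic, assuming the usual rule that SAME padding yields ceil(input / stride). The helper `same_pool_size` is a hypothetical name and is not part of the script.

```python
import math


def same_pool_size(size, stride=2):
    """Spatial size after a padding='SAME' pooling layer: ceil(size / stride)."""
    return math.ceil(size / stride)


size = 70
# The stride-1 SAME convolutions leave the spatial size unchanged,
# so only the two pooling layers shrink the feature map.
size = same_pool_size(size)  # pool1: 70 -> 35
size = same_pool_size(size)  # pool2: 35 -> 18
flat_features = size * size * 64  # 64 channels after conv2
print(size, flat_features)
```

If the input size or the number of pooling layers changes, the shapes of `W_fc1` and `h_pool2_flat` have to be updated to match.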

PyTorch

import torch
from torch import nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import transforms, utils
from torch.utils.data import Dataset, DataLoader
import matplotlib.pyplot as plt
from tensorboardX import SummaryWriter
from PIL import Image
import gc
import numpy as np
import tqdm
import os
import sys

fileSave = './module.pth'

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Conv2d arguments: in_channels, out_channels, kernel_size, stride, padding
        self.conv1 = torch.nn.Sequential(
            torch.nn.Conv2d(4, 32, 4, 1, 1),   # 70 -> 69
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2))             # 69 -> 34
        self.conv2 = torch.nn.Sequential(
            torch.nn.Conv2d(32, 64, 4, 1, 1),  # 34 -> 33
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2)              # 33 -> 16
        )
        self.conv3 = torch.nn.Sequential(
            torch.nn.Conv2d(64, 64, 4, 1, 1),  # 16 -> 15
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2)              # 15 -> 7
        )
        self.dense = torch.nn.Sequential(
            torch.nn.Linear(64*7*7, 128),
            torch.nn.Dropout(0.5),
            torch.nn.ReLU(),
            torch.nn.Dropout(0.5),
            # 64 output logits; the labels only go up to 18, so the rest stay unused
            torch.nn.Linear(128, 64)
        )

    def forward(self, x):
        conv1_out = self.conv1(x)
        conv2_out = self.conv2(conv1_out)
        conv3_out = self.conv3(conv2_out)
        # Flatten to (batch, 64*7*7) before the dense layers
        res = conv3_out.view(conv3_out.size(0), -1)
        out = self.dense(res)
        return out

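class MyDataSet(Dataset):
    def __init__(self, path):
        # Build the transform once instead of on every __getitem__ call.
        self.transform = transforms.Compose([
            transforms.Resize((70, 70)),
            transforms.ToTensor(),  # also scales pixel values to [0, 1]
        ])
        self.data = []
        for file in os.listdir(path):
            if file.endswith('.png'):
                # convert('RGBA') loads the pixel data right away and guarantees
                # the 4 channels Net expects (an assumption about the data set).
                img = Image.open(path + file).convert('RGBA')
                # The first two characters of the file name are the label.
                self.data.append((img, int(file[:2])))

    def __getitem__(self, index):
        img, label = self.data[index]
        return self.transform(img), label

    def __len__(self):
        return len(self.data)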

def train():

train_data=MyDataSet('./tensorflow/png/')

data_loader = DataLoader(train_data, batch_size=64,shuffle=True)

unet = Net()

loss_func = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(unet.parameters(), lr = 0.001, momentum=0.99)

writer = SummaryWriter(comment='Net')

for epoch in range(1000):

train_loss,train_acc = 0.,0.

for data,txt in data_loader:

img,label = data,txt

img,label = torch.autograd.Variable(img),torch.autograd.Variable(label)

out = unet(img)

loss = loss_func(out,label)

train_loss += loss.data.item()

pred = torch.max(out, 1)[1]

train_correct = (pred == label).sum()

train_acc += train_correct.data.item()

optimizer.zero_grad()

loss.backward()

optimizer.step()

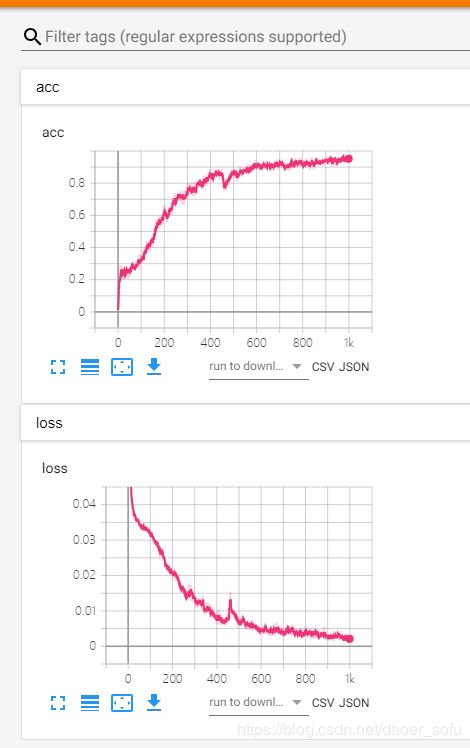

print("epoch:%d,loss:%f,acc:%f"%(epoch,train_loss/len(train_data),train_acc/len(train_data)))

writer.add_scalar('loss',train_loss/len(train_data),epoch)

writer.add_scalar('acc',train_acc/len(train_data),epoch)

gc.collect()

writer.add_graph(unet,(data,))

writer.close()

state = {'net':unet.state_dict(),'optimizer':optimizer.state_dict(), 'epoch':epoch}

torch.save(state, fileSave)

def test(file):
    unet = Net()
    # Only the model weights are needed for inference; the optimizer state and
    # epoch stored in the checkpoint matter only when resuming training.
    checkpoint = torch.load(fileSave)
    unet.load_state_dict(checkpoint['net'])
    unet.eval()  # disable dropout
    transform = transforms.Compose([
        transforms.Resize((70, 70)),
        transforms.ToTensor()])
    img = transform(Image.open(file).convert('RGBA'))
    img = torch.unsqueeze(img, 0)  # add the batch dimension
    with torch.no_grad():
        out = unet(img)
    pred = torch.max(out, 1)[1]
    print('pred is %d' % pred.item())

if __name__ == '__main__':
    test('tensorflow/png/16_166.png')
    #test(sys.argv[1])  # sys.argv[0] is the script path, not the first argument
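The `64*7*7` input size of the first `Linear` layer in `Net` can be checked by tracing the spatial size through the three conv/pool stages. Below is a minimal sketch, assuming the standard output-size rule floor((n + 2p - k) / s) + 1 for `Conv2d` and floor division by the kernel size for `MaxPool2d(2)`. The helpers `conv_out` and `pool_out` are hypothetical names used only for illustration.

```python
def conv_out(size, kernel=4, stride=1, padding=1):
    """Spatial size after Conv2d(kernel_size=4, stride=1, padding=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2):
    """Spatial size after MaxPool2d(2), whose stride defaults to the kernel size."""
    return size // kernel


size = 70
for _ in range(3):  # conv1, conv2, conv3 each end with a pooling layer
    size = pool_out(conv_out(size))  # 70 -> 34 -> 16 -> 7
flat_features = 64 * size * size  # conv3 outputs 64 channels
print(size, flat_features)
```

Because a 4x4 kernel with padding 1 trims one pixel per convolution, a different input size changes this number, and the first `Linear` layer has to be resized accordingly.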

Comparison

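PyTorch offers better encapsulation, and its accuracy here is also higher. The reason is that the PyTorch version feeds normalized data to the network (`transforms.ToTensor()` scales pixel values to [0, 1]), while the TensorFlow code has no corresponding normalization step.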
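The TensorFlow script feeds raw `uint8` pixel values (0 to 255) into the network. A minimal sketch of the missing normalization step follows, written with NumPy so it could be applied to a batch just before it goes into `feed_dict`. The helper name `normalize_batch` is hypothetical, and scaling by 255 is an assumption chosen to mirror what `transforms.ToTensor()` does on the PyTorch side.

```python
import numpy as np


def normalize_batch(raw_batch):
    """Reshape flat uint8 pixels to (N, 70, 70, 4) and scale them to [0, 1]."""
    images = np.reshape(raw_batch, (-1, 70, 70, 4)).astype(np.float32)
    return images / 255.0


# Two fake images of flat uint8 pixels, as decoded from the TFRecord file.
batch = np.full((2, 70 * 70 * 4), 255, dtype=np.uint8)
scaled = normalize_batch(batch)
print(scaled.shape, scaled.dtype, scaled.min(), scaled.max())
```

The same scaling would have to be applied to the image at test time, otherwise the network sees inputs on a different scale than it was trained on.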

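TensorFlow's Keras provides an encapsulation similar to PyTorch's: a model is created with `keras.Model` and tied to a `keras.Input`, and overriding `call` in a `Model` subclass plays the same role as `forward` in PyTorch.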
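As a rough illustration of that correspondence, here is a sketch of how the TensorFlow network might look as a Keras subclass. It assumes TensorFlow 2.x with `tf.keras`; the class name `MaterialNet` and the choice of layers are assumptions that loosely follow `DeepCNN`, not code from the original scripts.

```python
import tensorflow as tf
from tensorflow import keras


class MaterialNet(keras.Model):
    """Hypothetical Keras counterpart of DeepCNN (19 output logits)."""

    def __init__(self, num_classes=19):
        super().__init__()
        self.conv1 = keras.layers.Conv2D(32, 5, padding="same", activation="relu")
        self.pool1 = keras.layers.MaxPooling2D(2, padding="same")
        self.conv2 = keras.layers.Conv2D(64, 5, padding="same", activation="relu")
        self.pool2 = keras.layers.MaxPooling2D(2, padding="same")
        self.flatten = keras.layers.Flatten()
        self.fc1 = keras.layers.Dense(1024, activation="relu")
        self.dropout = keras.layers.Dropout(0.5)
        self.fc2 = keras.layers.Dense(num_classes)  # raw logits, no softmax

    def call(self, inputs, training=False):
        # call() plays the role of forward() in a PyTorch nn.Module.
        x = self.pool1(self.conv1(inputs))
        x = self.pool2(self.conv2(x))
        x = self.fc1(self.flatten(x))
        x = self.dropout(x, training=training)
        return self.fc2(x)


# Functional style: tie a keras.Input to the outputs with keras.Model.
inputs = keras.Input(shape=(70, 70, 4))
outputs = MaterialNet()(inputs)
functional_model = keras.Model(inputs=inputs, outputs=outputs)

logits = functional_model(tf.zeros((2, 70, 70, 4)))
print(logits.shape)
```

With this structure the dropout rate is a layer setting rather than a fed placeholder, and the `training` argument switches dropout on or off much as `train()` and `eval()` do in PyTorch.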