Crawling a novel from 88读书网 with the Scrapy framework and saving it to local files

Using the Scrapy framework to crawl novels from 88读书网

Links:

88读书网

Source code

Tools

Python 3.7

PyCharm

Scrapy framework

Tutorial

spider:

# -*- coding: utf-8 -*-
import scrapy

from dushu.items import DushuItem


class BookSpider(scrapy.Spider):
    name = 'book'
    # allowed_domains = ['xdushu.com']
    start_urls = ['https://www.x88dushu.com/xiaoshuo/111/111516/']

    def parse(self, response):
        if response.url == self.start_urls[0]:
            # Catalogue page: follow every chapter link.
            self.logger.info('Visiting novel catalogue ' + response.url)
            li_list = response.css("div.mulu ul li a")
            for li in li_list:
                link = li.css('a::attr(href)').extract_first()
                if link:
                    # urljoin resolves the href against the page URL,
                    # whether it is relative or absolute.
                    yield scrapy.Request(response.urljoin(link))
        else:
            # Chapter page: extract the title and the body text.
            self.logger.info('Visiting novel chapter ' + response.url)
            novel = response.css('div.novel')
            item = DushuItem()
            item['chapterName'] = novel.css('h1::text').extract_first()
            item['text'] = novel.css('div.yd_text2::text').extract()
            # self.logger.info(item)
            yield item
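Each chapter URL is built from the catalogue URL plus the `href` of a chapter link, so the result depends on what form the `href` takes. The sketch below uses only the standard library to show how `urljoin` resolves different forms against the catalogue URL. The `href` values and the helper name `resolve_chapter_urls` are hypothetical and only serve as illustration; the actual links on the site may look different.

```python
from urllib.parse import urljoin

# The catalogue URL used as start_urls[0] in the spider.
CATALOGUE_URL = 'https://www.x88dushu.com/xiaoshuo/111/111516/'


def resolve_chapter_urls(base_url, hrefs):
    """Resolve each href against base_url, the way a browser would."""
    return [urljoin(base_url, href) for href in hrefs]


if __name__ == '__main__':
    # Hypothetical href values, for illustration only.
    sample_hrefs = [
        '12345.html',                        # relative to the catalogue page
        '/xiaoshuo/111/111516/12346.html',   # path starting from the site root
    ]
    for href, url in zip(sample_hrefs,
                         resolve_chapter_urls(CATALOGUE_URL, sample_hrefs)):
        print(href, '->', url)
```

Plain string concatenation gives the same result only for the first form; for an `href` that starts at the site root it would produce a broken URL, which is why resolving the link is the safer choice.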

items.py:

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class DushuItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()

    # Chapter name
    chapterName = scrapy.Field()
    # Chapter content
    text = scrapy.Field()

pipelines.py:

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import os


class DushuPipeline(object):

    def process_item(self, item, spider):
        # Make sure the output directory exists before writing.
        os.makedirs('mulu', exist_ok=True)
        # One file per chapter, named after the chapter title.
        path = os.path.join('mulu', item['chapterName'] + '.txt')
        with open(path, 'w', encoding='utf-8') as file:
            for text in item['text']:
                file.write(text + '\n')
        return item
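The pipeline uses the chapter title directly as the file name. A title can contain characters that are not valid in file names (for example `/` or `?`), in which case `open` fails. The following is a minimal sketch of one way to guard against that, using only the standard library. The helper names `safe_filename` and `save_chapter` are hypothetical and not part of the original project, and the set of replaced characters is an assumption that covers common Windows and Unix restrictions.

```python
import os
import re
import tempfile


def safe_filename(name, fallback='untitled'):
    """Replace characters that are commonly invalid in file names with '_'."""
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]', '_', name or '').strip()
    return cleaned or fallback


def save_chapter(directory, chapter_name, lines):
    """Write one chapter to <directory>/<chapter_name>.txt and return the path."""
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, safe_filename(chapter_name) + '.txt')
    with open(path, 'w', encoding='utf-8') as f:
        for line in lines:
            line = line.strip()   # drop surrounding whitespace from the page
            if line:              # skip empty text nodes
                f.write(line + '\n')
    return path


if __name__ == '__main__':
    # Write into a temporary directory so the demo leaves the project untouched.
    out_dir = os.path.join(tempfile.mkdtemp(), 'mulu')
    print(save_chapter(out_dir, 'Chapter 1: What/Why?', ['  first line ', '', 'second line']))
```

Inside `process_item`, the body could then be reduced to a call such as `save_chapter('mulu', item['chapterName'], item['text'])` followed by `return item`.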

settings.py:

BOT_NAME = 'dushu'

SPIDER_MODULES = ['dushu.spiders']

NEWSPIDER_MODULE = 'dushu.spiders'

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    'dushu.pipelines.DushuPipeline': 300,
}

Running the program:

URL of the novel to crawl:

start_urls = ['https://www.x88dushu.com/xiaoshuo/111/111516/']

Run in cmd:

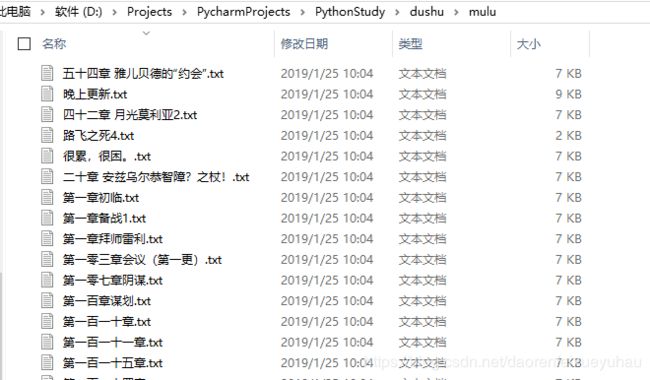

scrapy crawl book

Result: