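[OpenCV Study Notes 025] Stereo Vision Experiments with OpenCV3

1 Stereo Vision

What is stereo vision?

Stereo vision imitates the way human vision works and gives a computer a passive way to perceive distance. An object is observed from two (or more) viewpoints, which produces images taken from different positions. Corresponding pixels are matched between the images, and the offset between each matched pair (the disparity) is measured. Triangulation then converts that disparity into 3D information. Once the depth of the scene is known, you can compute the actual distance from the object to the camera, the object's 3D size, and the real distance between two points. Many research groups also work on 3D object recognition. They use depth to handle occlusion and pose changes that 2D algorithms cannot cope with, and this improves recognition rates.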
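Here is the triangulation step in concrete form. For a rectified pair with parallel optical axes, depth is inversely proportional to disparity: Z = f * B / d. The sketch below assumes such an ideal rectified pair. The function name is hypothetical, and the numbers in the usage note are made-up illustrative values, not measurements from this setup.

```cpp
#include <stdexcept>

// Depth of a point from its disparity in a rectified, horizontally aligned
// stereo pair: Z = f * B / d.
//   focalPx     focal length in pixels (fx after rectification)
//   baseline    distance between the two optical centres (any unit, e.g. mm)
//   disparityPx d = x_left - x_right, in pixels
// The result has the same unit as baseline.
double depthFromDisparity(double focalPx, double baseline, double disparityPx)
{
    if (disparityPx <= 0.0)
        throw std::invalid_argument("disparity must be positive");
    return focalPx * baseline / disparityPx;
}
```

Take f = 700 px and B = 60 mm. A disparity of 35 px gives Z = 1200 mm, and doubling the disparity to 70 px halves the depth to 600 mm. Because of this inverse relation, one pixel of disparity error matters much more for distant objects than for near ones.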

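2 Experimental Setup

VS2013

OpenCV 3.2.0

Stereo camera: either two ordinary USB cameras or a ready-made stereo camera

Calibration board: bought on Taobao or printed yourself

See http://blog.csdn.net/lonelyrains/article/details/46874723

or http://blog.csdn.net/loser__wang/article/details/51811347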

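3 Reading from the Stereo Camera

The code for reading a stereo camera is simple, but depending on the camera it may need some tweaking. If you run into problems, see http://blog.csdn.net/vampireshj/article/details/53535724. Neither of the two methods described there worked with my stereo camera, so below is my modified version. I also changed the resolution of both cameras. Whether that works depends on what your camera supports; on some cameras the setting simply has no effect.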

#include "opencv2/opencv.hpp"

#include "opencv2/highgui/highgui.hpp"

using namespace std;

using namespace cv;

int main(){

Mat frame_l, frame_r;

VideoCapture camera_l, camera_r;

int cont = 0;

while (frame_l.rows < 2){

camera_l.open(0);

camera_l.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_l.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_l.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_l.rows < 2 && cont < 5){

camera_l >> frame_l;

cont++;

}

}

while (frame_r.rows < 2){

camera_r.open(1);

camera_r.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_r.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_r.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_r.rows < 2 && cont < 5){

camera_r >> frame_r;

cont++;

}

}

while (true)

{

camera_l >> frame_l;

camera_r >> frame_r;

imshow("camera_l", frame_l);

imshow("camera_r", frame_r);

if (waitKey(60) == 27) // ESC quits

break;

}

return 0;

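}

The result is shown below:

Notice that the right camera's image appears shifted to the left relative to the left camera's image. Think about why. This is important: if it is not the case, all the work that follows will be wasted, because the matching algorithms rely on this "left shift". A point at column x_l in the left image appears at column x_r = x_l - d in the right image, with disparity d > 0. With minDisparity = 0, as used here, StereoBM and StereoSGBM only search for positive disparities. If the image you call "right" is shifted to the right instead, the cameras are swapped; exchange the indices passed to open().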

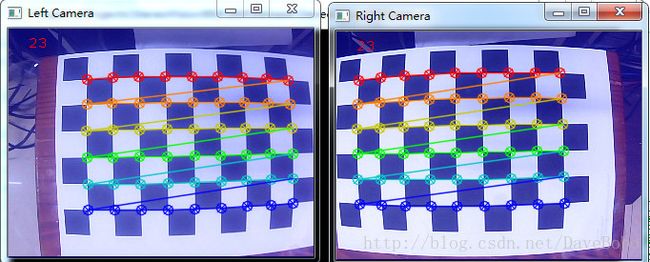
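You can also check the left/right order numerically. For a correctly ordered, roughly parallel pair, corresponding points satisfy x_left > x_right. The sketch below is dependency-free. `Pt`, `meanDisparity` and `camerasLookSwapped` are hypothetical names. It assumes that corner i in both lists is the same physical corner, for example the corners returned by findChessboardCorners for both views.

```cpp
#include <cstddef>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::Point2f

// Mean horizontal disparity x_left - x_right over corresponding points.
// A positive value means the "right" camera really is on the right.
double meanDisparity(const std::vector<Pt>& left, const std::vector<Pt>& right)
{
    std::size_t n = left.size() < right.size() ? left.size() : right.size();
    if (n == 0) return 0.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < n; ++i)
        sum += left[i].x - right[i].x;
    return sum / n;
}

// True if the two camera indices appear to be swapped.
bool camerasLookSwapped(const std::vector<Pt>& left, const std::vector<Pt>& right)
{
    return meanDisparity(left, right) < 0.0;
}
```

If `camerasLookSwapped` returns true, swap the indices passed to `camera_l.open()` and `camera_r.open()` before calibrating.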

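4 Calibration

The basic flow is as follows. Read both cameras and detect the chessboard corners in each image. Whenever a complete set of corners is detected in both images at once, refine the corners to sub-pixel accuracy and store them. Once enough views have been collected (20 to 30), compute the parameters and save them. As before, here is the code, along with notes on some implementation details.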

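ChessboardStable checks whether the chessboard is being held steady.

Option 1: if you hold the stereo camera in your hand, it will shake a little. If you only check whether the corners are present, blurry and unstable frames may get through, which introduces large errors. Checking stability over a queue of recent frames avoids this. I took the quick-and-dirty route and used a vector in place of a queue.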
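The same idea can be written with a real queue (`std::deque`) and a per-corner threshold. Unlike the fixed total of 1000 used in ChessboardStable, a per-corner threshold does not depend on the board size or the history length. This is a sketch, not the author's code. `StabilityChecker`, `Pt` and the threshold values are hypothetical. It tracks one camera only, so you would keep one checker per camera and require both to report stable.

```cpp
#include <cmath>
#include <cstddef>
#include <deque>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::Point2f

// Keeps the last `capacity` corner sets and reports the board as stable when
// every stored set lies within `maxMeanShift` pixels (mean L1 distance per
// corner) of the newest one.
class StabilityChecker {
public:
    StabilityChecker(std::size_t capacity, double maxMeanShift)
        : capacity_(capacity), maxMeanShift_(maxMeanShift) {}

    bool push(const std::vector<Pt>& corners)
    {
        history_.push_back(corners);
        if (history_.size() > capacity_)
            history_.pop_front();          // drop the oldest frame
        if (history_.size() < capacity_)
            return false;                  // not enough frames yet

        for (const auto& old : history_) {
            if (old.size() != corners.size()) return false;
            double shift = 0.0;
            for (std::size_t j = 0; j < corners.size(); ++j)
                shift += std::fabs(corners[j].x - old[j].x) +
                         std::fabs(corners[j].y - old[j].y);
            if (!corners.empty() && shift / corners.size() > maxMeanShift_)
                return false;              // the board moved too much
        }
        history_.clear();  // like ChessboardStable: start over after a capture
        return true;
    }

private:
    std::size_t capacity_;
    double maxMeanShift_;
    std::deque<std::vector<Pt>> history_;
};
```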

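In the rest of the code, note that boardSize and squareSize must match your calibration board. boardSize counts inner corners, not squares. I simply printed a board on A4 paper, and each square measured 26 mm. Set both values according to your own board.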
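squareSize only scales the 3D object points. It therefore fixes the unit of the translation T and of any depth computed later (millimetres here). The sketch below mirrors the objectPoints loop of the calibration program. `Pt3` is a hypothetical stand-in for `cv::Point3f`, and `boardObjectPoints` is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

struct Pt3 { float x, y, z; };  // hypothetical stand-in for cv::Point3f

// 3D coordinates of the inner corners of a planar chessboard, in the order
// findChessboardCorners reports them (row by row), with Z = 0.
// cols x rows is the number of INNER corners, e.g. 9 x 6 for boardSize(9, 6).
std::vector<Pt3> boardObjectPoints(int cols, int rows, float squareSize)
{
    std::vector<Pt3> pts;
    pts.reserve(static_cast<std::size_t>(cols) * rows);
    for (int j = 0; j < rows; ++j)
        for (int k = 0; k < cols; ++k)
            pts.push_back({k * squareSize, j * squareSize, 0.f});
    return pts;
}
```

For boardSize(9, 6) and 26 mm squares, this gives 54 points. The last one lies at (208, 130, 0) mm.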

#include <string>

#include <iostream>

#include <vector>

#include "opencv2/opencv.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

using namespace std;

using namespace cv;

vector<vector<Point2f> > corners_l_array, corners_r_array;

int array_index = 0;

bool ChessboardStable(const vector<Point2f>& corners_l, const vector<Point2f>& corners_r){

if (corners_l_array.size() < 10){

corners_l_array.push_back(corners_l);

corners_r_array.push_back(corners_r);

return false;

}

else{

corners_l_array[array_index % 10] = corners_l;

corners_r_array[array_index % 10] = corners_r;

array_index++;

double error = 0.0;

for (int i = 0; i < corners_l_array.size(); i++){

for (int j = 0; j < corners_l_array[i].size(); j++){

error += abs(corners_l[j].x - corners_l_array[i][j].x) + abs(corners_l[j].y - corners_l_array[i][j].y);

error += abs(corners_r[j].x - corners_r_array[i][j].x) + abs(corners_r[j].y - corners_r_array[i][j].y);

}

}

if (error < 1000)

{

corners_l_array.clear();

corners_r_array.clear();

array_index = 0;

return true;

}

else

return false;

}

}

int main(){

VideoCapture camera_l, camera_r;

Mat frame_l, frame_r;

int cont = 0;

while (frame_l.rows < 2){

camera_l.open(0);

camera_l.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_l.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_l.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_l.rows < 2 && cont < 5){

camera_l >> frame_l;

cont++;

}

}

while (frame_r.rows < 2){

camera_r.open(1);

camera_r.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_r.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_r.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_r.rows < 2 && cont < 5){

camera_r >> frame_r;

cont++;

}

}

Size boardSize(9, 6);

const float squareSize = 26.f; // Set this to your actual square size

vector<vector<Point2f> > imagePoints_l;

vector<vector<Point2f> > imagePoints_r;

int nimages = 0;

while (true)

{

camera_l >> frame_l;

camera_r >> frame_r;

bool found_l = false, found_r = false;

vector<Point2f> corners_l, corners_r;

found_l = findChessboardCorners(frame_l, boardSize, corners_l, CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE);

found_r = findChessboardCorners(frame_r, boardSize, corners_r, CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE);

if (found_l && found_r && ChessboardStable(corners_l, corners_r)) {

Mat viewGray;

cvtColor(frame_l, viewGray, COLOR_BGR2GRAY);

cornerSubPix(viewGray, corners_l, Size(11, 11),

Size(-1, -1), TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 30, 0.1));

cvtColor(frame_r, viewGray, COLOR_BGR2GRAY);

cornerSubPix(viewGray, corners_r, Size(11, 11),

Size(-1, -1), TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 30, 0.1));

imagePoints_l.push_back(corners_l);

imagePoints_r.push_back(corners_r);

++nimages;

frame_l += 100; // brighten both views briefly as visual feedback that this pair was captured

frame_r += 100;

drawChessboardCorners(frame_l, boardSize, corners_l, found_l);

drawChessboardCorners(frame_r, boardSize, corners_r, found_r);

putText(frame_l, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

putText(frame_r, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

imshow("Left Camera", frame_l);

imshow("Right Camera", frame_r);

char c = (char)waitKey(500);

if (c == 27 || c == 'q' || c == 'Q') //Allow ESC to quit

exit(-1);

if (nimages >= 30)

break;

}else{

drawChessboardCorners(frame_l, boardSize, corners_l, found_l);

drawChessboardCorners(frame_r, boardSize, corners_r, found_r);

putText(frame_l, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

putText(frame_r, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

imshow("Left Camera", frame_l);

imshow("Right Camera", frame_r);

char key = waitKey(1);

if (key == 27)

break;

}

}

if (nimages < 20){ cout << "Not enough" << endl; return -1;}

vector<vector<Point2f> > imagePoints[2] = { imagePoints_l, imagePoints_r };

vector<vector<Point3f> > objectPoints;

objectPoints.resize(nimages);

for(int i = 0; i < nimages; i++)

{

for (int j = 0; j < boardSize.height; j++)

for (int k = 0; k < boardSize.width; k++)

objectPoints[i].push_back(Point3f(k*squareSize, j*squareSize, 0));

}

cout << "Running stereo calibration ..." << endl;

Size imageSize(320, 240);

Mat cameraMatrix[2], distCoeffs[2];

cameraMatrix[0] = initCameraMatrix2D(objectPoints, imagePoints_l, imageSize, 0);

cameraMatrix[1] = initCameraMatrix2D(objectPoints, imagePoints_r, imageSize, 0);

Mat R, T, E, F;

double rms = stereoCalibrate(objectPoints, imagePoints_l, imagePoints_r,

cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, E, F, // image size, rotation matrix, translation vector, essential matrix, fundamental matrix

CV_CALIB_FIX_ASPECT_RATIO + // only fy is a free parameter; the ratio fx/fy stays as in the initial cameraMatrix

CV_CALIB_ZERO_TANGENT_DIST + // tangential distortion p1, p2 is set to zero and kept there; well-made lenses have very little tangential distortion, and fitting near-zero values invites noise and numerical instability

CV_CALIB_USE_INTRINSIC_GUESS + // cameraMatrix already holds initial fx, fy, cx, cy (from initCameraMatrix2D) that are refined further; without it, (cx, cy) would start at the image centre

CV_CALIB_SAME_FOCAL_LENGTH + // force both cameras to share the same fx and fy

CV_CALIB_RATIONAL_MODEL + // enable the rational distortion model (adds k4, k5, k6; 8 coefficients in total)

CV_CALIB_FIX_K3 + CV_CALIB_FIX_K4 + CV_CALIB_FIX_K5, // do not optimize k3, k4, k5; they keep their initial value (zero here)

TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 100, 1e-5)); // termination criteria of the iterative optimization

cout << "done with RMS error=" << rms << endl;

double err = 0;

int npoints = 0;

// check the calibration quality with the epipolar constraint

vector<Vec3f> lines[2]; // epipolar lines

for (int i = 0; i < nimages; i++)

{

// number of corners in the i-th left view

int npt = (int)imagePoints_l[i].size();

Mat imgpt[2];

imgpt[0] = Mat(imagePoints_l[i]);

undistortPoints(imgpt[0], imgpt[0], cameraMatrix[0], distCoeffs[0], Mat(), cameraMatrix[0]);

computeCorrespondEpilines(imgpt[0], 0 + 1, F, lines[0]);

imgpt[1] = Mat(imagePoints_r[i]); // corner points of the i-th right view

undistortPoints(imgpt[1], imgpt[1], cameraMatrix[1], distCoeffs[1], Mat(), cameraMatrix[1]); // undistorted corner coordinates

computeCorrespondEpilines(imgpt[1], 1 + 1, F, lines[1]); // epilines in the left image for the right-view points

for (int j = 0; j < npt; j++)

{

// use the undistorted points (imgpt), since the epilines were computed from undistorted points too

Point2f pl = imgpt[0].at<Point2f>(j), pr = imgpt[1].at<Point2f>(j);

double errij = fabs(pl.x*lines[1][j][0] + pl.y*lines[1][j][1] + lines[1][j][2]) +

fabs(pr.x*lines[0][j][0] + pr.y*lines[0][j][1] + lines[0][j][2]);

err += errij;

}

npoints += npt;

}

cout << "average epipolar err = " << err / npoints << endl;

FileStorage fs("intrinsics.yml", FileStorage::WRITE);

if (fs.isOpened())

{

fs << "M1" << cameraMatrix[0] << "D1" << distCoeffs[0] <<

"M2" << cameraMatrix[1] << "D2" << distCoeffs[1];

fs.release();

}

else

cout << "Error: can not save the intrinsic parameters\n";

Mat R1, R2, P1, P2, Q; // rectification outputs

Rect validRoi[2];

stereoRectify(cameraMatrix[0], distCoeffs[0],

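cameraMatrix[1], distCoeffs[1], // right camera matrix, right distortion coefficients

imageSize, R, T, R1, R2, P1, P2, Q,

CALIB_ZERO_DISPARITY, 1, imageSize, &validRoi[0], &validRoi[1]); // R1/R2: rectification rotations, P1/P2: projection matrices, Q: disparity-to-depth matrix; then flags, alpha, new image size, valid ROIs

fs.open("extrinsics.yml", FileStorage::WRITE); // save the extrinsic parameters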

if (fs.isOpened())

{

fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;

fs.release();

}

else

cout << "Error: can not save the extrinsic parameters\n";

return 0;

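}

Note 1: In x64 Debug mode this program triggers an assert error for me, while Release mode works fine. If anyone has solved this, please let me know!

Note 2: When calibrating, present the board at a wide range of orientations, distances and positions in the image.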
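The "average epipolar err" printed by the calibration program is a mean point-to-line distance in pixels. After a good calibration, each corner should lie on the epipolar line induced by its partner in the other view. computeCorrespondEpilines normalizes each line a*x + b*y + c = 0 so that a^2 + b^2 = 1, so |a*x + b*y + c| is already that distance. The dependency-free sketch below spells this out. `Line` is a hypothetical struct; OpenCV itself returns `Vec3f`.

```cpp
#include <cmath>

// A line a*x + b*y + c = 0 (OpenCV returns it as a Vec3f with a^2 + b^2 = 1).
struct Line { double a, b, c; };

// Distance in pixels from point (x, y) to the line. Dividing by hypot(a, b)
// makes it correct even for lines that are not normalized.
double pointLineDistance(const Line& l, double x, double y)
{
    return std::fabs(l.a * x + l.b * y + l.c) / std::hypot(l.a, l.b);
}

// Symmetric epipolar error for one correspondence, as summed in the program:
// left point vs. its epiline from the right view, plus
// right point vs. its epiline from the left view.
double symmetricEpipolarError(const Line& lineInLeft, double xl, double yl,
                              const Line& lineInRight, double xr, double yr)
{
    return pointLineDistance(lineInLeft, xl, yl) +
           pointLineDistance(lineInRight, xr, yr);
}
```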

Key functions

(1) findChessboardCorners(): finds a chessboard pattern of the specified size in an image

Prototype:

//! finds checkerboard pattern of the specified size in the image

CV_EXPORTS_W bool findChessboardCorners( InputArray image, Size patternSize,

OutputArray corners,

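int flags=CALIB_CB_ADAPTIVE_THRESH+CALIB_CB_NORMALIZE_IMAGE );

image: input chessboard view; must be an 8-bit grayscale or color image.

patternSize: number of inner corners per chessboard row and column.

corners: output array of detected corners.

flags: operation flags; zero or a combination of the following values:

CV_CALIB_CB_ADAPTIVE_THRESH: use adaptive thresholding to convert the image to black and white, rather than a fixed threshold level (computed from the average image brightness).

CV_CALIB_CB_NORMALIZE_IMAGE: normalize the image with equalizeHist before applying fixed or adaptive thresholding.

CV_CALIB_CB_FILTER_QUADS: use additional criteria (contour area, perimeter, square-like shape) to filter out false quads extracted during contour detection.

Additional notes

The function tries to determine whether the input image is a view of the chessboard pattern and to locate the inner corners. It returns true if all the corners were found and placed in a certain order (row by row, left to right in every row). If it fails to find all the corners or to order them, it returns false. For example, a regular chessboard has 8x8 squares and 7x7 inner corners, the points where the black squares touch each other. The detected coordinates are only approximate; to determine their positions more accurately, use cornerSubPix().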
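The relation between squares and patternSize, and the documented row-by-row corner order, can be written down directly. `GridSize`, `GridPos` and both functions are hypothetical helpers for illustration.

```cpp
struct GridSize { int width, height; };  // hypothetical stand-in for cv::Size
struct GridPos  { int col, row; };

// patternSize counts INNER corners: a board with squaresX x squaresY squares
// has (squaresX - 1) x (squaresY - 1) inner corners.
GridSize innerCornerGrid(int squaresX, int squaresY)
{
    return { squaresX - 1, squaresY - 1 };
}

// Corners are returned row by row, left to right, so corner `index` sits at
// column index % width and row index / width of the pattern.
GridPos cornerPosition(int index, const GridSize& pattern)
{
    return { index % pattern.width, index / pattern.width };
}
```

So the `Size boardSize(9, 6)` used in the programs corresponds to a board with 10 x 7 squares, and corner 10 is the second corner of the second row.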

(2) cornerSubPix(): refines corner locations to sub-pixel accuracy

Prototype:

//! adjusts the corner locations with sub-pixel accuracy to maximize the certain cornerness criteria

CV_EXPORTS_W void cornerSubPix( InputArray image, InputOutputArray corners,

Size winSize, Size zeroZone,

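TermCriteria criteria );

image: input single-channel image, 8-bit or 32-bit float.

corners: initial corner coordinates on input, refined coordinates on output.

winSize: half of the side length of the search window. The window is N x N with N = winSize*2+1.

zeroZone: half of the size of a dead region in the middle of the search window where the summation is not done. It is sometimes used to avoid singularities of the autocorrelation matrix. Size(-1, -1), the usual choice, means there is no such region.

criteria: when to stop the iterative refinement. The possible types are cv::TermCriteria::MAX_ITER (also spelled COUNT, as in the code above), cv::TermCriteria::EPS, or both. MAX_ITER stops after the maximum number of iterations, and EPS stops once the corner moves by less than epsilon in an iteration. Both are specified through the cv::TermCriteria() constructor.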
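Because winSize is a half-size, the Size(11, 11) used in the calibration programs searches a 23 x 23 window. Compare that with the size of one square in your 320 x 240 images. If the window reaches neighbouring corners, a smaller winSize may be safer; treat this as an assumption to verify on your own images. `WinSize` and `searchWindow` are hypothetical names.

```cpp
struct WinSize { int width, height; };  // hypothetical stand-in for cv::Size

// cornerSubPix's winSize is a HALF size: the actual search window is
// (2*winSize.width + 1) x (2*winSize.height + 1) pixels.
WinSize searchWindow(const WinSize& half)
{
    return { 2 * half.width + 1, 2 * half.height + 1 };
}
```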

(3) drawChessboardCorners(): draws the detected chessboard corners

//! draws the checkerboard pattern (found or partly found) in the image

CV_EXPORTS_W void drawChessboardCorners( InputOutputArray image, Size patternSize,

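InputArray corners, bool patternWasFound );

image: destination image; must be an 8-bit color image (CV_8UC3)

patternSize: number of inner corners per chessboard row and column, the same value passed to cv::findChessboardCorners()

corners: array of detected corners

patternWasFound: whether the complete board was found; pass the return value of cv::findChessboardCorners()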

(4) stereoCalibrate(): calibrates a stereo camera, estimating its intrinsic and extrinsic parameters

Similarly to calibrateCamera, the function minimizes the total re-projection error for all the points in all the available views from both cameras. The function returns the final value of the re-projection error.

CV_EXPORTS_W double stereoCalibrate( InputArrayOfArrays objectPoints,

InputArrayOfArrays imagePoints1, InputArrayOfArrays imagePoints2,

InputOutputArray cameraMatrix1, InputOutputArray distCoeffs1,

InputOutputArray cameraMatrix2, InputOutputArray distCoeffs2,

Size imageSize, OutputArray R,OutputArray T, OutputArray E, OutputArray F,

int flags = CALIB_FIX_INTRINSIC,

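TermCriteria criteria = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 30, 1e-6) );

objectPoints: vector of vectors of calibration pattern points (3D coordinates in the pattern's own coordinate system)

imagePoints1: vector of vectors of the projections of those points observed by the first camera

imagePoints2: vector of vectors of the projections of those points observed by the second camera

cameraMatrix1: input/output camera matrix of the first camera

distCoeffs1: input/output distortion coefficients of the first camera

cameraMatrix2: input/output camera matrix of the second camera

distCoeffs2: input/output distortion coefficients of the second camera

imageSize: size of the image, used only to initialize the camera intrinsic matrices

R: output rotation matrix between the first and the second camera coordinate systems

T: output translation vector between the two camera coordinate systems

E: output essential matrix

F: output fundamental matrix

flags: zero or a combination of calibration flags. The default, CALIB_FIX_INTRINSIC, keeps the camera matrices and distortion coefficients fixed and estimates only R, T, E and F; the programs here pass their own flags instead. See http://www.baike.com/wiki/stereoCalibrate

criteria: termination criteria for the iterative optimization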
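A quick sanity check on T: its length is the baseline, expressed in the unit of squareSize (mm here). Compare it with a ruler measurement of the distance between the two lenses. A large mismatch may point to a wrong squareSize or poor calibration views. In the program, T is a 3x1 cv::Mat of doubles, so `cv::norm(T)` gives the same value directly. The function below is a hypothetical dependency-free equivalent.

```cpp
#include <cmath>

// Length of the translation vector T = (tx, ty, tz) between the two camera
// frames, i.e. the stereo baseline, in the unit of the object points.
double baselineLength(double tx, double ty, double tz)
{
    return std::sqrt(tx * tx + ty * ty + tz * tz);
}
```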

5 Stereo Matching

Here is the complete code:

#include <string>

#include <iostream>

#include <vector>

#include "opencv2/opencv.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/core/core.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui/highgui.hpp"

using namespace std;

using namespace cv;

vector<vector<Point2f> > corners_l_array, corners_r_array;

int array_index = 0;

bool ChessboardStable(const vector<Point2f>& corners_l, const vector<Point2f>& corners_r){

if (corners_l_array.size() < 10){

corners_l_array.push_back(corners_l);

corners_r_array.push_back(corners_r);

return false;

}

else{

corners_l_array[array_index % 10] = corners_l;

corners_r_array[array_index % 10] = corners_r;

array_index++;

double error = 0.0;

for (int i = 0; i < corners_l_array.size(); i++){

for (int j = 0; j < corners_l_array[i].size(); j++){

error += abs(corners_l[j].x - corners_l_array[i][j].x) + abs(corners_l[j].y - corners_l_array[i][j].y);

error += abs(corners_r[j].x - corners_r_array[i][j].x) + abs(corners_r[j].y - corners_r_array[i][j].y);

}

}

if (error < 1000)

{

corners_l_array.clear();

corners_r_array.clear();

array_index = 0;

return true;

}

else

return false;

}

}

int main(){

VideoCapture camera_l, camera_r;

Mat frame_l, frame_r;

int cont = 0;

while (frame_l.rows < 2){

camera_l.open(0);

camera_l.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_l.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_l.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_l.rows < 2 && cont < 5){

camera_l >> frame_l;

cont++;

}

}

while (frame_r.rows < 2){

camera_r.open(1);

camera_r.set(CV_CAP_PROP_FOURCC, VideoWriter::fourcc('M', 'J', 'P', 'G')); // request MJPG; same value as the multi-char literal 'GPJM'

camera_r.set(CV_CAP_PROP_FRAME_WIDTH, 320);

camera_r.set(CV_CAP_PROP_FRAME_HEIGHT, 240);

cont = 0;

while (frame_r.rows < 2 && cont < 5){

camera_r >> frame_r;

cont++;

}

}

Size boardSize(9, 6);

const float squareSize = 26.f; // Set this to your actual square size

vector<Mat> goodFrame_l; // copies of the accepted frames (not used later)

vector<Mat> goodFrame_r;

vector<vector<Point2f> > imagePoints_l;

vector<vector<Point2f> > imagePoints_r;

vector<vector<Point3f> > objectPoints;

int nimages = 0;

while (true){

camera_l >> frame_l;

camera_r >> frame_r;

bool found_l = false, found_r = false;

vector<Point2f> corners_l, corners_r;

found_l = findChessboardCorners(frame_l, boardSize, corners_l,

CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE);

found_r = findChessboardCorners(frame_r, boardSize, corners_r,

CALIB_CB_ADAPTIVE_THRESH | CALIB_CB_NORMALIZE_IMAGE);

if (found_l && found_r &&ChessboardStable(corners_l, corners_r)){

goodFrame_l.push_back(frame_l.clone()); // clone: frame_l is brightened below and overwritten by the next capture

goodFrame_r.push_back(frame_r.clone());

Mat viewGray;

cvtColor(frame_l, viewGray, COLOR_BGR2GRAY);

cornerSubPix(viewGray, corners_l, Size(11, 11),

Size(-1, -1), TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 30, 0.1));

cvtColor(frame_r, viewGray, COLOR_BGR2GRAY);

cornerSubPix(viewGray, corners_r, Size(11, 11),

Size(-1, -1), TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 30, 0.1));

imagePoints_l.push_back(corners_l);

imagePoints_r.push_back(corners_r);

++nimages;

frame_l += 100; // brighten both views briefly as visual feedback that this pair was captured

frame_r += 100;

drawChessboardCorners(frame_l, boardSize, corners_l, found_l);

drawChessboardCorners(frame_r, boardSize, corners_r, found_r);

putText(frame_l, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

putText(frame_r, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

imshow("Left Camera", frame_l);

imshow("Right Camera", frame_r);

char c = (char)waitKey(500);

if (c == 27 || c == 'q' || c == 'Q') //Allow ESC to quit

exit(-1);

if (nimages >= 30)

break;

}

else{

drawChessboardCorners(frame_l, boardSize, corners_l, found_l);

drawChessboardCorners(frame_r, boardSize, corners_r, found_r);

putText(frame_l, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

putText(frame_r, to_string(nimages), Point(20, 20), 1, 1, Scalar(0, 0, 255));

imshow("Left Camera", frame_l);

imshow("Right Camera", frame_r);

char key = waitKey(1);

if (key == 27)

break;

}

}

if (nimages < 20){ cout << "Not enough" << endl; return -1; }

vector<vector<Point2f> > imagePoints[2] = { imagePoints_l, imagePoints_r };

objectPoints.resize(nimages);

for (int i = 0; i < nimages; i++)

{

for (int j = 0; j < boardSize.height; j++)

for (int k = 0; k < boardSize.width; k++)

objectPoints[i].push_back(Point3f(k*squareSize, j*squareSize, 0));

}

cout << "Running stereo calibration ..." << endl;

Size imageSize(320, 240);

Mat cameraMatrix[2], distCoeffs[2];

cameraMatrix[0] = initCameraMatrix2D(objectPoints, imagePoints_l, imageSize, 0);

cameraMatrix[1] = initCameraMatrix2D(objectPoints, imagePoints_r, imageSize, 0);

Mat R, T, E, F;

double rms = stereoCalibrate(objectPoints, imagePoints_l, imagePoints_r,

cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, E, F,

CALIB_FIX_ASPECT_RATIO +

CALIB_ZERO_TANGENT_DIST +

CALIB_USE_INTRINSIC_GUESS +

CALIB_SAME_FOCAL_LENGTH +

CALIB_RATIONAL_MODEL +

CALIB_FIX_K3 + CALIB_FIX_K4 + CALIB_FIX_K5,

TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 100, 1e-5));

cout << "done with RMS error=" << rms << endl;

double err = 0;

int npoints = 0;

vector<Vec3f> lines[2]; // epipolar lines

for (int i = 0; i < nimages; i++)

{

int npt = (int)imagePoints_l[i].size();

Mat imgpt[2];

imgpt[0] = Mat(imagePoints_l[i]);

undistortPoints(imgpt[0], imgpt[0], cameraMatrix[0], distCoeffs[0], Mat(), cameraMatrix[0]);

computeCorrespondEpilines(imgpt[0], 0 + 1, F, lines[0]);

imgpt[1] = Mat(imagePoints_r[i]);

undistortPoints(imgpt[1], imgpt[1], cameraMatrix[1], distCoeffs[1], Mat(), cameraMatrix[1]);

computeCorrespondEpilines(imgpt[1], 1 + 1, F, lines[1]);

for (int j = 0; j < npt; j++)

{

// use the undistorted points (imgpt), since the epilines were computed from undistorted points too

Point2f pl = imgpt[0].at<Point2f>(j), pr = imgpt[1].at<Point2f>(j);

double errij = fabs(pl.x*lines[1][j][0] + pl.y*lines[1][j][1] + lines[1][j][2]) +

fabs(pr.x*lines[0][j][0] + pr.y*lines[0][j][1] + lines[0][j][2]);

err += errij;

}

npoints += npt;

}

cout << "average epipolar err = " << err / npoints << endl;

FileStorage fs("intrinsics.yml", FileStorage::WRITE);

if (fs.isOpened())

{

fs << "M1" << cameraMatrix[0] << "D1" << distCoeffs[0] <<

"M2" << cameraMatrix[1] << "D2" << distCoeffs[1];

fs.release();

}

else

cout << "Error: can not save the intrinsic parameters\n";

Mat R1, R2, P1, P2, Q;

Rect validRoi[2];

stereoRectify(cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

imageSize, R, T, R1, R2, P1, P2, Q,

CALIB_ZERO_DISPARITY, 1, imageSize, &validRoi[0], &validRoi[1]);

fs.open("extrinsics.yml", FileStorage::WRITE);

if (fs.isOpened())

{

fs << "R" << R << "T" << T << "R1" << R1 << "R2" << R2 << "P1" << P1 << "P2" << P2 << "Q" << Q;

fs.release();

}

else

cout << "Error: can not save the extrinsic parameters\n";

// OpenCV can handle left-right

// or up-down camera arrangements

bool isVerticalStereo = fabs(P2.at<double>(1, 3)) > fabs(P2.at<double>(0, 3));

// COMPUTE AND DISPLAY RECTIFICATION

Mat rmap[2][2];

// IF BY CALIBRATED (BOUGUET'S METHOD)

//Precompute maps for cv::remap()

initUndistortRectifyMap(cameraMatrix[0], distCoeffs[0], R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);

initUndistortRectifyMap(cameraMatrix[1], distCoeffs[1], R2, P2, imageSize, CV_16SC2, rmap[1][0], rmap[1][1]);

Mat canvas;

double sf;

int w, h;

if (!isVerticalStereo)

{

sf = 600. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h, w * 2, CV_8UC3);

}

else

{

sf = 300. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width*sf);

h = cvRound(imageSize.height*sf);

canvas.create(h * 2, w, CV_8UC3);

}

destroyAllWindows();

Mat imgLeft, imgRight;

int ndisparities = 16 * 5; /**< Range of disparity */

int SADWindowSize = 31; /**< Size of the block window. Must be odd */

Ptr<StereoBM> sbm = StereoBM::create(ndisparities, SADWindowSize);

sbm->setMinDisparity(0);

//sbm->setNumDisparities(64);

sbm->setTextureThreshold(10);

sbm->setDisp12MaxDiff(-1);

sbm->setPreFilterCap(31);

sbm->setUniquenessRatio(25);

sbm->setSpeckleRange(32);

sbm->setSpeckleWindowSize(100);

Ptr<StereoSGBM> sgbm = StereoSGBM::create(0, 64, 7,

10 * 7 * 7,

40 * 7 * 7,

1, 63, 10, 100, 32, StereoSGBM::MODE_SGBM);

Mat rimg, cimg;

Mat Mask;

while (true)

{

camera_l >> frame_l;

camera_r >> frame_r;

if (frame_l.empty() || frame_r.empty())

continue;

remap(frame_l, rimg, rmap[0][0], rmap[0][1], INTER_LINEAR);

rimg.copyTo(cimg);

Mat canvasPart1 = !isVerticalStereo ? canvas(Rect(w * 0, 0, w, h)) : canvas(Rect(0, h * 0, w, h));

resize(cimg, canvasPart1, canvasPart1.size(), 0, 0, INTER_AREA);

Rect vroi1(cvRound(validRoi[0].x*sf), cvRound(validRoi[0].y*sf),

cvRound(validRoi[0].width*sf), cvRound(validRoi[0].height*sf));

remap(frame_r, rimg, rmap[1][0], rmap[1][1], INTER_LINEAR);

rimg.copyTo(cimg);

Mat canvasPart2 = !isVerticalStereo ? canvas(Rect(w * 1, 0, w, h)) : canvas(Rect(0, h * 1, w, h));

resize(cimg, canvasPart2, canvasPart2.size(), 0, 0, INTER_AREA);

Rect vroi2 = Rect(cvRound(validRoi[1].x*sf), cvRound(validRoi[1].y*sf),

cvRound(validRoi[1].width*sf), cvRound(validRoi[1].height*sf));

Rect vroi = vroi1&vroi2;

imgLeft = canvasPart1(vroi).clone();

imgRight = canvasPart2(vroi).clone();

rectangle(canvasPart1, vroi1, Scalar(0, 0, 255), 3, 8);

rectangle(canvasPart2, vroi2, Scalar(0, 0, 255), 3, 8);

if (!isVerticalStereo)

for (int j = 0; j < canvas.rows; j += 32)

line(canvas, Point(0, j), Point(canvas.cols, j), Scalar(0, 255, 0), 1, 8);

else

for (int j = 0; j < canvas.cols; j += 32)

line(canvas, Point(j, 0), Point(j, canvas.rows), Scalar(0, 255, 0), 1, 8);

cvtColor(imgLeft, imgLeft, COLOR_BGR2GRAY);

cvtColor(imgRight, imgRight, COLOR_BGR2GRAY);

//-- And create the image in which we will save our disparities

Mat imgDisparity16S = Mat(imgLeft.rows, imgLeft.cols, CV_16S);

Mat imgDisparity8U = Mat(imgLeft.rows, imgLeft.cols, CV_8UC1);

Mat sgbmDisp16S = Mat(imgLeft.rows, imgLeft.cols, CV_16S);

Mat sgbmDisp8U = Mat(imgLeft.rows, imgLeft.cols, CV_8UC1);

if (imgLeft.empty() || imgRight.empty())

{

std::cout << " --(!) Error reading images " << std::endl; return -1;

}

sbm->compute(imgLeft, imgRight, imgDisparity16S);

imgDisparity16S.convertTo(imgDisparity8U, CV_8UC1, 255.0 / (ndisparities * 16.0)); // raw values are disparity*16; map the whole search range to 0..255

cv::compare(imgDisparity16S, 0, Mask, CMP_GE);

applyColorMap(imgDisparity8U, imgDisparity8U, COLORMAP_HSV);

Mat disparityShow;

imgDisparity8U.copyTo(disparityShow, Mask);

sgbm->compute(imgLeft, imgRight, sgbmDisp16S);

sgbmDisp16S.convertTo(sgbmDisp8U, CV_8UC1, 255.0 / (sgbm->getNumDisparities() * 16.0)); // same fixed-point scaling as above

cv::compare(sgbmDisp16S, 0, Mask, CMP_GE);

applyColorMap(sgbmDisp8U, sgbmDisp8U, COLORMAP_HSV);

Mat sgbmDisparityShow;

sgbmDisp8U.copyTo(sgbmDisparityShow, Mask);

imshow("bmDisparity", disparityShow);

imshow("sgbmDisparity", sgbmDisparityShow);

imshow("rectified", canvas);

char c = (char)waitKey(1);

if (c == 27 || c == 'q' || c == 'Q')

break;

}

return 0;

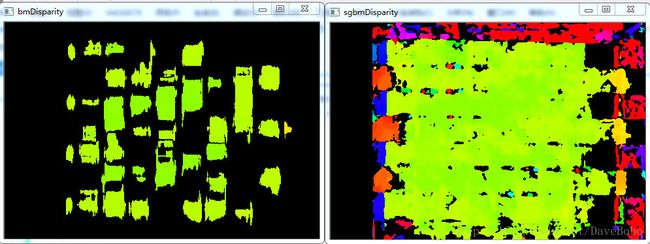

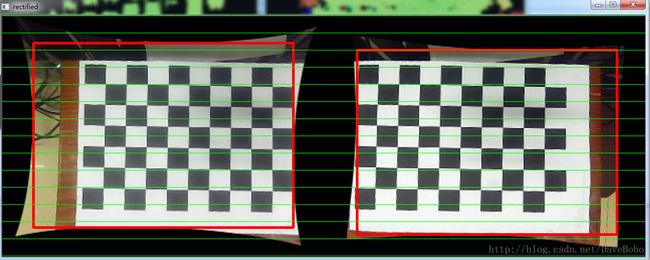

} 以下为实验效果:
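The stereo program stops at the disparity map. The Q matrix it saves to extrinsics.yml is what turns disparity into 3D coordinates, and therefore into the distances described in section 1. In OpenCV you would call `cv::reprojectImageTo3D(disparity, xyz, Q)`. The dependency-free sketch below shows the per-pixel math under that assumption; `Mat4`, `Point3` and `reprojectPixel` are hypothetical names.

In the program, imgLeft is a crop of the canvas scaled by sf. A pixel (u, v) with raw disparity D in that image therefore corresponds to x = (u + vroi.x) / sf, y = (v + vroi.y) / sf and d = D / 16 / sf in the rectified images that Q refers to.

```cpp
#include <array>

using Mat4 = std::array<std::array<double, 4>, 4>;
struct Point3 { double x, y, z; };

// Per-pixel reprojection: [X Y Z W]^T = Q * [x y d 1]^T, and the 3D point
// is (X/W, Y/W, Z/W). Q is the 4x4 disparity-to-depth matrix from
// stereoRectify; d must be the real disparity in pixels (raw value / 16).
Point3 reprojectPixel(const Mat4& Q, double x, double y, double d)
{
    const double in[4] = { x, y, d, 1.0 };
    double out[4] = { 0.0, 0.0, 0.0, 0.0 };
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += Q[r][c] * in[c];
    return { out[0] / out[3], out[1] / out[3], out[2] / out[3] };
}
```

As an example, take the made-up values f = 700 px, cx = 160, cy = 120 and Tx = -60 mm, so that Q's last row is (0, 0, 1/60, 0) with CALIB_ZERO_DISPARITY. The principal point with d = 35 px then comes out at Z = 1200 mm, which agrees with Z = f * B / d.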

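Discussion of the main modules:

OpenCV has offered three stereo matching algorithms for computing disparity maps: StereoBM, StereoSGBM and StereoVar. In OpenCV 2.4, StereoVar lived in the contrib module, which no longer exists in OpenCV 3. Only StereoBM and StereoSGBM are used here.

A summary of the three matching algorithms: http://blog.csdn.net/wqvbjhc/article/details/6260844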
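StereoBM and StereoSGBM both output CV_16S disparities in fixed point with four fractional bits (raw = disparity * 16). Pixels without a valid match get (minDisparity - 1) * 16, which is -16 here. That is why the program masks out negative values before display. The sketch below shows the conversions; the function names are hypothetical.

```cpp
#include <cstdint>

// StereoBM / StereoSGBM disparities are fixed point: raw = disparity * 16.
inline float toPixels(std::int16_t raw)
{
    return raw / 16.0f;
}

// Invalid pixels are marked with (minDisparity - 1) * 16, so any value at or
// above minDisparity * 16 is a real match.
inline bool isValidDisparity(std::int16_t raw, int minDisparity)
{
    return raw >= minDisparity * 16;
}

// Scale for convertTo(..., CV_8U, alpha) that maps the largest possible
// disparity (just under numDisparities pixels) to about 255.
inline double displayScale(int numDisparities)
{
    return 255.0 / (numDisparities * 16.0);
}
```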

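OpenCV 3 changed the way BM is used considerably. StereoBM is now an abstract class with pure virtual functions, so it cannot be instantiated directly. Instead, create it with StereoBM::create(), which returns a smart pointer (Ptr<StereoBM>). The StereoBMState struct is gone as well, and parameters are now set through bm->set...() calls. See the code below for details.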

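// BM (block matching): for each pixel of the left image, search along the same row of the right image

// for the block with the lowest SAD (sum of absolute differences) cost.

// An optional left-right consistency check (controlled by disp12MaxDiff) also discards pixels whose left and right matches disagree.

// OpenCV 2.x style: initialize the StereoBMState struct

// Error: an object of abstract class type "StereoBM" is not allowed

// In C++, a class whose pure virtual functions are not all implemented is abstract and cannot be instantiated

// this no longer works from OpenCV 3 on: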

//StereoBM bm;

//int unitDisparity = 15;//40

//int numberOfDisparities = unitDisparity * 16;

//bm.state->roi1 = roi1;

//bm.state->roi2 = roi2;

//bm.state->preFilterCap = 13;

//bm.state->SADWindowSize = 19;

//bm.state->minDisparity = 0;

//bm.state->numberOfDisparities = numberOfDisparities;

//bm.state->textureThreshold = 1000;

//bm.state->uniquenessRatio = 1;

//bm.state->speckleWindowSize = 200;

//bm.state->speckleRange = 32;

//bm.state->disp12MaxDiff = -1;

cv::Ptr<cv::StereoBM> bm = cv::StereoBM::create();

int unitDisparity = 15;//40

int numberOfDisparities = unitDisparity * 16;

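bm->setROI1(roi1); // roi1/roi2: valid pixel ROIs from stereoRectify (validRoi[0] and validRoi[1] in the program above)

bm->setROI2(roi2);

bm->setPreFilterCap(13);

bm->setBlockSize(15); // SAD window size; must be odd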

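bm->setMinDisparity(0); // where the disparity search starts; default 0

bm->setNumDisparities(numberOfDisparities); // search range (disparity window),

// i.e. maximum minus minimum disparity; must be divisible by 16

bm->setTextureThreshold(1000); // require enough texture to overcome noise; pixels whose neighbourhood has too little texture get no disparity

bm->setUniquenessRatio(1); // margin (in percent) by which the best match cost must beat the second best

bm->setSpeckleWindowSize(200); // maximum size of a smooth disparity region to be treated as a noise speckle and invalidated; 0 disables speckle filtering

bm->setSpeckleRange(32); // maximum disparity variation within each connected component

bm->setDisp12MaxDiff(-1); // maximum allowed difference between the left disparity (computed directly) and the right disparity

// (computed via cvValidateDisparity); the default -1 disables the left-right check

References: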

http://blog.csdn.net/vampireshj/article/details/53535724

http://blog.csdn.net/taily_duan/article/details/52165458

http://blog.csdn.net/zilanpotou182/article/details/72329903

http://blog.csdn.net/ailunlee/article/details/70254835

http://blog.sina.com.cn/s/blog_4a540be60102v44s.html

https://www.zhihu.com/question/48747960/answer/112787533

http://blog.csdn.net/h532600610/article/details/51800488

http://blog.csdn.net/guduruyu/article/details/69537083

http://www.baike.com/wiki/stereoCalibrate