- Mastering Retrieval Techniques: Architecture and Algorithms for Building Efficient Knowledge Retrieval Systems 23

是小旭啊

Artificial Intelligence

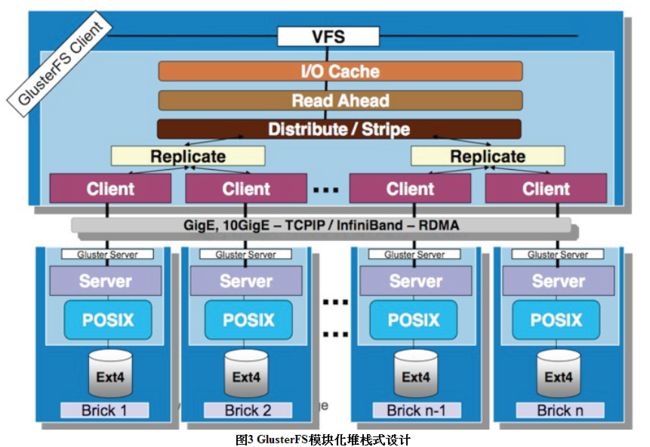

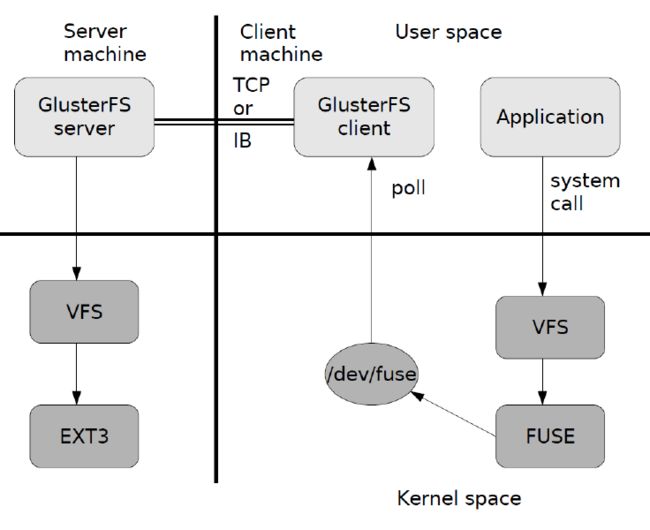

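The expert-knowledge layer of retrieval needs to cover more advanced retrieval techniques, including engineering architecture and algorithmic strategy. I. Engineering architecture. When building a retrieval system, the engineering architecture determines its scalability, high availability, and performance. Basic points to consider include the following. Distributed architecture: Horizontal scaling: use a distributed architecture that spreads retrieval tasks across multiple nodes so the system scales horizontally. This can be done by sharding the index data across different nodes and keeping large-scale index data in a distributed file system or object storage. Task allocation: design a task scheduler that assigns query requests to idle nodes for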
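The two ideas above, sharding the index and handing queries to idle nodes, can be sketched in a few lines of Java. This is a minimal illustration under our own assumptions, not the article's design. `ShardRouter` and its methods are hypothetical names. Documents are routed to shards by hashing their ID modulo the node count, and the node with the fewest in-flight queries counts as "idle".

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of index sharding plus least-loaded dispatch;
// not taken from the original article.
public class ShardRouter {
    private final List<String> nodes;                               // one index shard per node
    private final Map<String, Integer> inFlight = new HashMap<>();  // running queries per node

    public ShardRouter(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("need at least one node");
        }
        this.nodes = List.copyOf(nodes);
        for (String n : this.nodes) {
            inFlight.put(n, 0);
        }
    }

    // Horizontal scaling: a document ID always maps to the same shard.
    public String shardFor(String docId) {
        return nodes.get(Math.floorMod(docId.hashCode(), nodes.size()));
    }

    // Task allocation: send the query to the node with the fewest running
    // queries (ties go to the earliest node in the list) and count it as busy.
    public synchronized String dispatch() {
        String best = nodes.get(0);
        for (String n : nodes) {
            if (inFlight.get(n) < inFlight.get(best)) {
                best = n;
            }
        }
        inFlight.merge(best, 1, Integer::sum);
        return best;
    }

    // Call when a query on `node` finishes.
    public synchronized void complete(String node) {
        inFlight.merge(node, -1, Integer::sum);
    }
}
```

In a real deployment each shard would usually have replicas, and the scheduler would pick among the replicas of a shard. This sketch collapses that into a single node list to keep the two mechanisms visible.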

- Mastering Retrieval Techniques: Architecture and Algorithms for Building Efficient Knowledge Retrieval Systems 21

是小旭啊

Artificial Intelligence


- Java object storage_The best way to store a large number of objects to disk in Java

凯文哥爱分享

java object storage

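By the way, you don't need a list wrapper to write many items to a file, but your items then need to be serializable: `public class SObject implements Serializable { private String value; private int occurences; private String key; }`. To write: `List list = new ArrayList<>(); ObjectOutputStream oos = new ObjectOutputStream(new FileOu`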
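The point of the snippet is that items can be written one at a time, as long as they are `Serializable`. Here is a minimal sketch of that idea, not the original answer's code. The wrapper class `SObjectStore`, the constructor and getters, and the leading element count are our own assumptions.

```java
import java.io.*;
import java.util.ArrayList;
import java.util.List;

public class SObjectStore {

    // Mirrors the snippet's SObject; it must implement Serializable,
    // otherwise ObjectOutputStream.writeObject throws NotSerializableException.
    public static class SObject implements Serializable {
        private static final long serialVersionUID = 1L;
        private final String value;
        private final int occurences; // spelling kept from the original snippet
        private final String key;

        public SObject(String value, int occurences, String key) {
            this.value = value;
            this.occurences = occurences;
            this.key = key;
        }

        public String getValue() { return value; }
        public int getOccurences() { return occurences; }
        public String getKey() { return key; }
    }

    // Write the objects one by one (no list wrapper), preceded by a count
    // so the reader knows how many objects follow.
    public static void writeAll(File file, List<SObject> items) throws IOException {
        try (ObjectOutputStream oos = new ObjectOutputStream(
                new BufferedOutputStream(new FileOutputStream(file)))) {
            oos.writeInt(items.size());
            for (SObject item : items) {
                oos.writeObject(item);
            }
        }
    }

    // Read back exactly as many objects as were written.
    public static List<SObject> readAll(File file) throws IOException, ClassNotFoundException {
        try (ObjectInputStream ois = new ObjectInputStream(
                new BufferedInputStream(new FileInputStream(file)))) {
            int n = ois.readInt();
            List<SObject> result = new ArrayList<>(n);
            for (int i = 0; i < n; i++) {
                result.add((SObject) ois.readObject());
            }
            return result;
        }
    }
}
```

The count prefix is one design choice among several. The alternative is to read until `EOFException` is thrown, which works but uses an exception for normal control flow.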

- What is Alibaba Cloud's "99 Plan"? Which discounted cloud products does it include, and what do they cost?

阿里云最新优惠和活动汇总

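In 2024, Alibaba Cloud launched the "99 Plan", a discount campaign meant to help small and medium-sized businesses move to the cloud without worry. The "99 Plan" bundles the essential first products a startup needs in the cloud, letting customers share in the technology dividend with long-term, broadly accessible pricing: new and existing customers get the same offer, and renewals cost the same. The included cloud products are ECS e-instances and u1 instances, Object Storage Service OSS, NAS File Storage, Alibaba Cloud Drive Enterprise Edition CDE, SLS Log Service, ApsaraDB RDS for MySQL, ApsaraDB RDS for PostgreSQL, ApsaraDB RDS for SQL Serve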

- python(64) A few memory phenomena; actively releasing memory

python开发笔记

Python python

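1. Ways to actively release memory: in Python you can use the gc.collect() method or the del statement to free memory. gc.collect(generation=2) clears or releases unreferenced memory, that is, objects that can no longer be reached or used. The optional generation argument is an integer from 0 to 2 that tells gc.collect() which generation of objects to collect. In Python, short-lived objects are stored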

- JuiceFS sync's new features explained: enhanced selective sync and performance optimizations for multiple scenarios

Juicedata

performance optimization

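JuiceFS sync is a powerful data synchronization tool that can synchronize or migrate data concurrently between many storage systems, including object storage, JuiceFS, NFS, HDFS, and local file systems. It also offers advanced features such as incremental sync, pattern matching (similar to rsync), and distributed sync. In the latest v1.2 release, we added several new features to juicefs sync and optimized performance in multiple scenarios, making data sync more efficient when users handle large directories and complex migrations. New features: enhanced selective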

- How to set up cross-region replication in AWS S3

数云界

aws java database

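How to Set Up Cross-Region Replication in AWS S3. Overview: welcome to 雲闪世界. Amazon Simple Storage Service (S3) is a scalable object storage service widely used to store and retrieve data. One of its main features is Cross-Region Replication (CRR), which replicates objects automatically and asynchronously across different AWS Regions. This feature is essential for disaster recovery, data redundancy, and lower data-access latency across geographic regions. In this article, we walk through setting up cross-region replication in AWS S3 so that your data is safe across Regions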

- Elasticsearch: Data safety in a stateless world

Elastic 中国社区官方博客

Elasticsearch Serverless Elastic elasticsearch big data search engine artificial intelligence full-text search serverless

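By Henning Andersen, Elastic. In a recent blog post, we announced the stateless architecture behind the Elastic Cloud Serverless offering. By offloading durability guarantees and replication to object storage (such as Amazon S3), we gain many advantages and simplifications. Historically, Elasticsearch relied on local disk persistence to keep data safe and to deal with stale or isolated nodes. In this blog, we discuss the data durability guarantees in stateless Elasticsearch, including how we use safety chec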

- Spring Boot + MinIO: chunked upload, instant upload, and resumable upload for files

雨轩智能

java and Linux tutorials springboot server backend

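File upload is a common feature requirement. However, traditional file upload can run into performance bottlenecks and failed uploads when files are large or the network is unstable. Chunked upload, instant upload, and resumable upload emerged to solve these problems. Technology choices: Spring Boot, a rapid-development framework that simplifies setting up and configuring Spring applications; MinIO, a high-performance object storage server that supports the S3 protocol. How chunked, instant, and resumable upload work. Chunked upload: principle: split a large file into several smaller pieces (called chunks),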
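The three ideas can be sketched on the client side without MinIO or Spring. This is a minimal sketch under our own assumptions, not the article's code. `ChunkPlanner` and its methods are hypothetical. For resumable upload, the server is assumed to report which chunk indexes it already holds. For instant upload, the server is assumed to look files up by their whole-file MD5.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical client-side helpers for chunked, instant, and resumable upload.
public class ChunkPlanner {

    // Chunked upload: split `size` bytes into [start, end) ranges of at most chunkSize bytes.
    public static List<long[]> chunkRanges(long size, long chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<long[]> ranges = new ArrayList<>();
        for (long start = 0; start < size; start += chunkSize) {
            ranges.add(new long[] {start, Math.min(start + chunkSize, size)});
        }
        return ranges;
    }

    // Resumable upload: the chunk indexes the server has not acknowledged yet.
    public static List<Integer> missingChunks(int totalChunks, Set<Integer> uploaded) {
        List<Integer> missing = new ArrayList<>();
        for (int i = 0; i < totalChunks; i++) {
            if (!uploaded.contains(i)) {
                missing.add(i);
            }
        }
        return missing;
    }

    // Instant upload: a whole-file MD5 the server can check before any bytes are sent.
    public static String md5Hex(InputStream in) throws IOException, NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        byte[] buf = new byte[8192];
        int n;
        while ((n = in.read(buf)) != -1) {
            md.update(buf, 0, n);
        }
        StringBuilder hex = new StringBuilder();
        for (byte b : md.digest()) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    public static String md5Hex(Path file) throws IOException, NoSuchAlgorithmException {
        try (InputStream in = Files.newInputStream(file)) {
            return md5Hex(in);
        }
    }
}
```

A client would first send `md5Hex(file)`. If the server already knows that hash, the upload is skipped entirely. Otherwise the client uploads only the ranges whose indexes `missingChunks(...)` returns.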

- Supporting multiple cloud object stores: a Go implementation

microrain

golang IoT golang programming language backend

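Supporting Multiple Cloud Object Stores: A Go Implementation. In modern cloud-native application development, object storage has become an indispensable component. However, each cloud provider has its own object storage service and SDK. This article shows how to build a flexible object storage layer in Go that supports several mainstream cloud providers, including AWS S3, Google Cloud Storage, MinIO, and Alibaba Cloud OSS. Goal: our goal is a unified interface that lets applications switch easily between object storage services without modifying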
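The article implements this in Go. To keep this page's examples in a single language, here is the same unified-interface idea as a Java sketch. `ObjectStore`, `InMemoryObjectStore`, and `ObjectStores.forProvider` are hypothetical names. A real S3, GCS, MinIO, or OSS backend would implement the same interface by wrapping that provider's SDK.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical provider-neutral interface; application code depends only on this.
interface ObjectStore {
    void put(String bucket, String key, byte[] data);
    Optional<byte[]> get(String bucket, String key);
    boolean delete(String bucket, String key);
}

// In-memory backend, handy for tests. A cloud backend would implement the
// same three methods with calls into that provider's SDK.
class InMemoryObjectStore implements ObjectStore {
    private final Map<String, byte[]> objects = new ConcurrentHashMap<>();

    private static String id(String bucket, String key) {
        return bucket + "/" + key;
    }

    @Override
    public void put(String bucket, String key, byte[] data) {
        objects.put(id(bucket, key), data.clone()); // defensive copy
    }

    @Override
    public Optional<byte[]> get(String bucket, String key) {
        byte[] data = objects.get(id(bucket, key));
        return Optional.ofNullable(data == null ? null : data.clone());
    }

    @Override
    public boolean delete(String bucket, String key) {
        return objects.remove(id(bucket, key)) != null;
    }
}

public class ObjectStores {
    // Choose a backend from configuration; only "memory" is implemented in this sketch.
    public static ObjectStore forProvider(String provider) {
        switch (provider) {
            case "memory":
                return new InMemoryObjectStore();
            default:
                throw new IllegalArgumentException("unsupported provider: " + provider);
        }
    }
}
```

Switching providers then means changing the string passed to `forProvider`, not the calling code.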

- Alibaba Cloud Object Storage Service (Aliyun OSS): an enterprise-grade cloud storage solution

Lill_bin

misc Alibaba Cloud cloud computing distributed java springboot programming language

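In today's digital era, storing, managing, and accessing data has become a core part of running a business. Alibaba Cloud Object Storage Service (Aliyun OSS), an industry-leading cloud storage solution, provides a reliable, secure, and easily scalable storage platform. This article describes the key features of Aliyun OSS, its use cases, and how it brings value to enterprises. What is Aliyun OSS? Aliyun OSS is a highly scalable, secure, and cost-effective cloud storage service from Alibaba Cloud. It lets users store and access any amount and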

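- Java developer interview: pros, cons, and use cases of AOT; AOT vs. JIT; how escape analysis relates to objects being stored on the heap; problems with collections under high concurrency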

鸡鸭扣

java interview java interview programming language

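JDK 9 introduced the AOT compilation mode. What are its advantages, and which scenarios does it suit? JDK 9 introduced a new compilation mode, AOT (Ahead-of-Time Compilation). Unlike JIT, it compiles the program to machine code before the program runs, which makes it static compilation (as used by C, C++, Rust, Go, and similar languages). AOT avoids overheads such as JIT warm-up and can improve the startup speed of Java programs. AOT can also reduce memory usage and strengthen the security of Java programs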

- *Thinking in Java* CH02: Everything Is an Object

Java天天

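Manipulating objects with references: although everything in Java is treated as an object, the identifier you manipulate is actually a "reference" to an object.

`String s; // only a reference is created here, not an object`

`String s = new String("abcd"); // the new operator creates an object and associates it with the reference`

`String s = "abcd"; // strings can also be initialized directly with quoted text`

You must create all objects yourself, using the new operator. Where is an object stored? There are five places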

- Installing the MinIO distributed object storage system with Docker on Linux

liupeng_blog

docker docker linux distributed

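Contents: Personal knowledge base; MinIO introduction; Environment requirements; 1. Create the directories: 1.1 Create docker-compose.yml, 1.2 Write docker-compose.yml; 2. Set file permissions; 3. Start the container: 3.1 Start and pull the image, 3.2 Stop and remove the container; 4. Access; 5. Docker Hub official site; More knowledge platforms. Personal knowledge base cloud site: http://www.liupeng.cloud Yuque: https://www.yuque.com/liupeng_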

- Study notes 6: introduction to Ceph and initial configuration

风车带走过往

K8S-related application study notes ceph

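Connecting k8s to Ceph. Ceph is an open-source distributed storage system that provides the following storage types: block storage (RBD), the CephFS file system, and object storage. Advantages of distributed storage; Introduction to Ceph's core components; Installing the Ceph cluster; Initial configuration; Ceph installation repositories; Installing base packages; Installing the Ceph cluster; Installing ceph-deploy; Creating the monitor node; Editing the Ceph configuration file; Configuring the initial monitor and gathering all keys; Deploying the OSD service; Creating the Ceph file system. Ceph is an open-source distributed stor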

- etcd cluster recovery and single-node recovery runbook

*老工具人了*

database Kubernetes etcd database

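I. Cluster backup. Backup method: a Jenkins job runs an hourly scheduled task that calls an Ansible playbook to back up the etcd cluster data and upload it. By default only the non-leader members of the cluster are backed up, to avoid putting too much load on the leader. The backups are uploaded to the corresponding public-cloud object storage, into two different object storage directories so that each directory can have its own lifecycle rule: directory a is uploaded every hour and its lifecycle keeps the hourly backups from the last 3 days; directory b only when the time is 00:00 or 12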

- MySQL operations in practice: backup and recovery (8.2), xtrabackup backups to the cloud (OSS)

mysql database

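By 俊达. The xtrabackup toolkit includes an xbcloud program that can back a database up directly to S3 object storage without writing anything to local disk. Here we describe one way to back up a database directly to OSS. The steps are as follows. 1. Prepare OSS: we use the ossutil tool to upload the backup files. Download ossutil: `wget -O ossutil-v1.7.16-linux-amd64.zip "https://gosspublic.alicdn.com/ossutil/`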

- How to set up a MinIO service on Linux and access its intranet admin console remotely without a public IP

学编程的小程

linux tcp/ip operations

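Contents: Preface; 1. Deploying MinIO with Docker; 2. Accessing MinIO locally; 3. Installing Cpolar on Linux; 4. Configuring a public address for MinIO; 5. Accessing the MinIO admin console remotely; 6. Giving MinIO a fixed public address. Preface: MinIO is an open-source object storage server that runs in many environments, such as on-premises machines, Docker containers, and Kubernetes clusters. It is compatible with the Amazon S3 API, so it integrates seamlessly with existing S3 tools and libraries. MinIO's design goals are high performance and high availab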

- Element-ui: avatar upload

.@d

python django vue python

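This article uses the Element and Qiniu Cloud official sites. element-ui official site: https://element.eleme.io/#/zh-CN Qiniu Cloud official site: https://www.qiniu.com/ 1. Register and log in to Qiniu Cloud, then complete real-name verification. 2. Open Object Storage, then Bucket Management. 3. Create a new bucket; this is where you get the CDN test domain. The Python SDK is documented in the Developer Center. To use Qiniu Cloud you need to install it: `pip install qiniu`. We use an encapsulation approach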

- [C++ One Piece: Following Roger's Programming Path] Classes and objects: have you got them? (Part 1)

枫叶丹4

C++ c++ programming language visualstudio backend

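Contents: 1-> A first look at procedural vs. object-oriented programming 2-> Introducing classes 3-> Defining classes 4-> Class access specifiers and encapsulation 4.1-> Access specifiers 4.2-> Encapsulation 5-> Class scope 6-> Instantiating classes 7-> The class object model 7.1-> How to compute the size of a class object 7.2-> Guesses about how class objects are stored 7.3-> Struct memory alignment rules 8-> The this pointer 8.1-> Why the this pointer exists 8.2-> Properties of the this pointer 8.3-> Comparing Stack implementations in C and C++ 1-> Procedural vs. object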

- HashSet source code analysis

gogoingmonkey

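HashSet is implemented on top of HashMap: it uses a HashMap internally to store its elements, so this article should be read with HashMap as background. Characteristics: HashSet is unordered and has no index; HashSet stores objects as the map's keys and does not allow duplicate keys; the value stored for every key is the same fixed object. HashSet constructors: `private transient HashMap map; private static final Object PRESENT = new Obje`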
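The delegation described above can be shown with a stripped-down set. This is a simplified sketch in the spirit of the JDK's `HashSet`, not its actual source. `MiniHashSet` is a hypothetical name, and only a few methods are included.

```java
import java.util.HashMap;
import java.util.Iterator;

// Elements are stored as HashMap keys; every key maps to the same shared dummy value.
public class MiniHashSet<E> implements Iterable<E> {
    private static final Object PRESENT = new Object();
    private final HashMap<E, Object> map = new HashMap<>();

    // HashMap.put returns the previous value, which is null only if the key was new,
    // so duplicates are rejected without any extra lookup.
    public boolean add(E e) {
        return map.put(e, PRESENT) == null;
    }

    public boolean remove(Object o) {
        return map.remove(o) == PRESENT;
    }

    public boolean contains(Object o) {
        return map.containsKey(o);
    }

    public int size() {
        return map.size();
    }

    // Iteration follows the backing HashMap's key order, so it is effectively unordered.
    @Override
    public Iterator<E> iterator() {
        return map.keySet().iterator();
    }
}
```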

- NSCoding object storage (note: use NSSecureCoding from now on)

皮蛋豆腐酱油

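1. NSCoding is a protocol: a class that adopts NSCoding can be converted to and from NSData. 2. Use cases: NSUserDefaults, where you convert an instance of a custom class into NSData and then store it in the user preferences; file storage, where NSData provides a method that writes an NSData instance directly to a file. 3. Background: NSCoder is an abstract class meant to be subclassed; NSKeyedArchiver and NSKeyedUnarchi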

- Direct client-side upload from JavaScript to Huawei Cloud OBS: file upload, resumable upload, and re-upload after network loss

三月的一天

Huawei Cloud obs file upload javascript

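Preface: the requirement I was researching was for the front end to connect directly to Huawei Cloud for file upload. The uploaded video files are often more than ten GB, the customer's network is poor (KB/s), and uploads must still be reliable, with retry from the break point after network errors and automatic resumption when an interrupted file is dragged in again. All things considered, I used Huawei Cloud's multipart upload and, building on its properties, implemented break-point and network-loss resumption at the application layer. The main reference was Huawei Cloud's official upload documentation, "File upload_Object Storage Service OBS_BrowserJS_Upload objects". Meanwhile, another blog post of mine introduces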

- Accessing images at a MinIO address_Integrating MinIO middleware with SpringBoot2 for convenient file management

高世界

accessing images at a MinIO address

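I. Introduction to MinIO. 1. Basic description: MinIO is an open-source object storage service suited to storing large volumes of unstructured data, such as images, videos, log files, backups, and container/VM images, and a single object can be any size from a few KB up to a maximum of 5 TB. MinIO is a very lightweight service that combines easily with other applications, much like NodeJS, Redis, or MySQL. 2. Storage mechanism: MinIO protects data with per-object inline erasure coding, written in assembly code, which can provide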

- How to configure MinIO in microservices

阿宽...

microservices java c#

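MinIO is a free and open-source object storage server that works well with microservice architectures. With MinIO you can scale storage capacity easily. It supports highly concurrent, distributed object storage and has many powerful features, such as access control and encryption. When you use MinIO as the object storage server in a microservice architecture, you need to choose a suitable language and framework to interact with it. Here are simple steps for interacting with MinIO from C#: Install the Minio NuGet package in a .NET Core project: you can use NuGet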

- What cloud products and services does the Alibaba Cloud platform offer?

阿里云最新优惠和活动汇总

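We hear about Alibaba Cloud all the time, but you may not know exactly which cloud products and services it offers, so here is an overview of some of the main ones on the Alibaba Cloud platform:

| Basic cloud services | Domains and websites | Enterprise applications | Security | Networking and storage |
| --- | --- | --- | --- | --- |
| Cloud servers (ECS) | Domain names | Mini programs | SSL certificates | CDN |
| Simple Application Server | Virtual hosting | Enterprise email | Anti-DDoS Pro IP | Object Storage Service (OSS) |
| ApsaraDB RDS | Website building | SMS service | Web Application Firewall | Server Load Balancer |
| ApsaraDB for Redis | Alibaba Cloud DNS | | | |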

- autoreleasepool, the autorelease pool (are you paying attention?)

best_su

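Some solid material: an autorelease pool is used to hold objects. When the pool is destroyed, it sends a release message to every object in it, that is, it calls each object's release method. Problem it solves: once a newly created object is placed in the autorelease pool, you no longer need to release it manually, because the pool calls the object's release method when it is destroyed. Benefit: objects stored in the autorelease pool need no explicit release call. How to create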

- File upload: the third-party service Alibaba Cloud OSS

sunyunfei1994

Alibaba Cloud java

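There are many ways to implement file upload (for example, image upload) in a Java backend. You can save images to the server's hard disk, or build a distributed cluster with a dedicated microservice for storing files, commonly using a technology such as MinIO. For small and medium-sized companies whose uploaded files are not very sensitive, a third-party storage service such as Alibaba Cloud or Huawei Cloud is an option. Alibaba Cloud official site: Object Storage OSS_Cloud storage service_Enterprise data management_Storage - Alibaba Cloud. Alibaba Cloud Object Storage OSS is a massive, secure, low-cost, highly reliable cloud storage service that provides 99.9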

- How to create diagrams from code with Python

张无忌打怪兽

Python python linux programming language

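Introduction: Diagram as Code tools let you create architecture diagrams of your infrastructure. You can reuse code and test, integrate, and automate the drawing process, which lets you treat documentation as code and build pipelines that map your infrastructure. diagrams scripts work with many cloud providers and with custom infrastructure. In this tutorial, you will use Python to create a basic diagram and upload it to an object storage bucket. When you're done, you'll understand how diagrams scripts work and be able to create new integrations. Step 1: Install Gr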

- ThinkPHP6 + UniApp + WeChat Mini Program: uploading images to Alibaba Cloud OSS

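Alibaba Cloud Object Storage OSS (Object Storage Service) is a massive, secure, low-cost, highly reliable cloud storage service. For the direct upload from a WeChat Mini Program in this example, see the official documentation: https://help.aliyun.com/zh/oss/use-cases/use-wechat-mini-prog... and for accessing OSS with STS temporary access credentials, the official documentation: https://www.alibabacloud.com/help/zh/oss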

- Installing golang from source on Windows

616050468

golang install golang environment windows

OS: 64-bit Win7; development environment: Sublime Text 2; Go version: 1.4.1

1. Preparation before installing (gcc, gdb, git)

golang on a 64-bit sys

- Batch-deleting redis keys that contain spaces

bylijinnan

redis

The usual way to batch-delete keys in redis:

redis-cli keys "blacklist*" | xargs redis-cli del

This command works when the keys contain no spaces, but it fails when they do:

$redis-cli keys "blacklist*"

1) "blacklist:12:


- Using regular expressions in Oracle

0624chenhong

oracle regular expressions

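Bracket expressions

| Bracket expression | Description |
| --- | --- |
| `[[:alnum:]]` | Alphanumeric characters (letters and digits) |
| `[[:alpha:]]` | Alphabetic characters |
| `[[:cntrl:]]` | Control characters |
| `[[:digit:]]` | Digit characters |
| `[[:graph:]]` | Graphic characters (visible, excluding space) |
| `[[:lower:]]` | Lowercase letters |
| `[[:print:]]` | Printable characters |
| `[[:punct:]]` | Punctuation characters |
| `[[:space:]]` | Whitespace characters |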

- 2048 source code (the core algorithm is included; a few action bars are still missing and will be added later)

不懂事的小屁孩

2048

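The 2048 game basically consists of four parts:

1: The main activity, containing the game's 16-square grid and the score panel at the top

2: The GridView at the bottom, which listens for up/down/left/right swipes and handles them

3: Each card, whose content is very simple: just a text field showing the number

4: The ActionBar, for restarting the game, settings, and similar functions (not yet implemented in the downloadable code below)

Coding workflow

1: Design the game layout, which basically has two blocks; the top one is the sco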
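The visible excerpt describes only the UI parts, not the core algorithm itself. Assuming the standard 2048 rules, sliding one row to the left could look like the sketch below. Under those rules, tiles slide toward one edge, equal neighbours merge, and each tile merges at most once per move. `Game2048Row` is a hypothetical name, and this is not the author's code.

```java
// Slide one row of a 2048 board to the left, merging equal neighbours.
// Assumes standard 2048 rules: each tile can merge at most once per move.
public class Game2048Row {

    public static int[] slideLeft(int[] row) {
        int[] result = new int[row.length]; // zeros represent empty cells
        int target = 0;                     // next free slot in result
        boolean canMerge = false;           // true if result[target - 1] may still absorb a tile
        for (int value : row) {
            if (value == 0) {
                continue;                   // empty cells just disappear when sliding
            }
            if (canMerge && result[target - 1] == value) {
                result[target - 1] *= 2;    // merge into the previous tile
                canMerge = false;           // a freshly merged tile cannot merge again
            } else {
                result[target++] = value;   // place the tile in the next free slot
                canMerge = true;
            }
        }
        return result;
    }
}
```

The other three directions can reuse the same function by reversing rows (for right) or working on columns (for up and down).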

- How jQuery's internal method chaining works

换个号韩国红果果

JavaScript jquery

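All you need is for a suitable method of the object (such as setStyles below) to return the object itself after it is called. This works through `this`: once a function becomes a method of an object, `this` inside that method (see setStyles below) refers to that object.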

function create(type){

var element=document.createElement(type);

//this=element;
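The excerpt's JavaScript is cut off, but the principle carries over to any language: each method returns the object it was called on. Here is a Java analogue, written as our own sketch. `StyledElement` and `setStyle` are hypothetical, and `setStyles` only borrows the name used in the excerpt.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical Java analogue of the chaining pattern: every setter returns `this`.
public class StyledElement {
    private final String type;
    private final Map<String, String> styles = new LinkedHashMap<>();

    public StyledElement(String type) {
        this.type = type;
    }

    // Returning this is what makes el.setStyle(...).setStyle(...) possible.
    public StyledElement setStyle(String name, String value) {
        styles.put(name, value);
        return this;
    }

    public StyledElement setStyles(Map<String, String> more) {
        styles.putAll(more);
        return this;
    }

    public String getType() {
        return type;
    }

    public Map<String, String> getStyles() {
        return styles;
    }
}
```

For example, `new StyledElement("div").setStyle("color", "red").setStyles(Map.of("width", "100px"))` builds and configures the element in a single expression.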

- Every click you make when booking a hotel is backed by NoSQL and cloud computing

蓝儿唯美

NoSQL

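The hotel-booking company owned by Expedia, the world's largest online travel company, runs 89 websites across 68 countries. Three years ago it began experimenting with the public cloud so that customers searching for holiday hotels on its booking sites would get information faster.

The cloud is used to power some of the sites' smaller features, such as autocomplete suggestions in the search box, and it also provides overall capacity for the seasonal demand peaks of the Hotels.com service.

Hotels.com CTO Thierry Bedos, speaking last month in London at "2015 Clou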

- Java notes 1

a-john

java

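1. Object-oriented programming (OOP): Java is an object-oriented programming language.

2. Objects: we call the elements in the problem space, and their representations in the solution space, "objects". Simply put, an object is an instance of some type. For example, dog is a type, and a husky can be an instance of dog, that is, an object.

3. Characteristics of object-oriented programming:

3.1 Everything is an object.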

- C: sizeof vs. strlen, a must-know topic for C/C++ software development job interviews (Part 1)

aijuans

C/C++ job interview must-know topics

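Job hunting is coming up, so I've decided to write up at least one topic every day from now on, mainly as a record that forces me to get hands-on and to summarize what I learn so it sticks. Of course, if a word or two helps someone else, I'd consider myself very fortunate. If there are mistakes, please point them out. Many thanks.

I promise that every result I write up has been run on my own computer. I'm not the sharpest, and what I learned before was half-baked, so I often have to work backward from the results the code produces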

- Should programmers stop thinking about requirements when they write code?

asia007

programmers shouldn't just blindly follow requirements

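I've been programming for 2 years. When I started, I didn't know any better and just followed the requirements: whatever the requirement said, I implemented it in code, without asking whether it was reasonable or made for a good user experience. Everyone programs like this at first, of course. But if a programmer with more than 2 years of experience still only writes code blindly, without stopping to consider whether the requirement makes sense, then I think that programmer will spend a lifetime just typing code.

My skills aren't great, but that doesn't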

- The four launch modes of an Activity

百合不是茶

android stack modes, launching an Activity in standard mode, single-top mode, singleton mode

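Working with an Android UI is largely a matter of switching between many activities; the launch mode determines the lifecycle of the activity being launched.

Configuring the launch mode in XML

<activity android:name=".MainActivity" android:launchMode="standard"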

- The difference between the @Autowired and @Resource annotations in Spring

bijian1013

java spring @Resource @Autowired @Qualifier

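Besides its own @Autowired annotation, Spring also supports several annotations defined by the JSR-250 specification, such as @Resource, @PostConstruct, and @PreDestroy.

1. @Autowired: @Autowired is provided by Spring and requires importing the package org.springframewo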

- Changes Between SOAP 1.1 and SOAP 1.2

sunjing

Changes Enable SOAP 1.1 SOAP 1.2

JAX-WS

SOAP Version 1.2 Part 0: Primer (Second Edition)

SOAP Version 1.2 Part 1: Messaging Framework (Second Edition)

SOAP Version 1.2 Part 2: Adjuncts (Second Edition)

Which style of WSDL

- [Hadoop 2] Common Hadoop commands

bit1129

hadoop

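Taking Hadoop's built-in wordcount example as an illustration:

The hadoop script is located at /home/hadoop/hadoop-2.5.2/bin/hadoop. Note that these commands can only be run while Hadoop is already running.

Hadoop HDFS commands

hadoop fs -ls

Lists the top-level files and top-level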

- Java exception handling (beginner)

白糖_

java DAO spring virtual machine Ajax

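I've been doing Java development for over a year since I started learning, and I feel I only know the surface of Java; I pick most things up gradually as I need them. Programming really is an art: writing a good piece of code requires knowing a great deal.

Recently my project manager put me in charge of developing a component, and I had to set up the framework myself. What gave me the biggest headache was exception handling. I looked at some source code online and found that those projects paid little attention to exception handling, and after studying it for a long time I still hadn't found a good solution. Later I was lucky enough to see part of the source code of a US$2 million project, and through their approach to exception handling, I finally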

- Notes on work issues

braveCS

work

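1) That colleague still couldn't see all the results when opening the CSV file in Excel by default, and assumed the data hadn't been written. Colleague A said files shouldn't have a size limit. After adding some logging, it turned out the data had been written; Excel simply has a row limit. That colleague is improving fast.

2) Today a colleague said that writing a file triggered a JVM out-of-memory error. My immediate reaction was to increase the JVM memory size. The colleague switched to batch processing instead. My thinking really was limited: increasing the JVM memory only solves the problem temporarily, and writing a larger file later would mean increasing it again. Problems need to be thought through for the long term.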
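The colleague's batch-processing fix can be sketched as follows. This is our own illustration, not code from the post. `BatchWriter` is a hypothetical name, and rows are assumed to arrive from an `Iterator` (for example a database cursor) rather than from a fully loaded list.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.Writer;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

public class BatchWriter {

    // Writes every row followed by '\n' and returns how many batches were flushed.
    // The caller owns `out` and is responsible for closing it.
    public static int writeInBatches(Iterator<String> rows, Writer out, int batchSize)
            throws IOException {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        BufferedWriter writer = new BufferedWriter(out);
        List<String> batch = new ArrayList<>(batchSize);
        int batches = 0;
        while (rows.hasNext()) {
            batch.add(rows.next());
            if (batch.size() == batchSize || !rows.hasNext()) {
                for (String row : batch) {
                    writer.write(row);
                    writer.write('\n');
                }
                writer.flush(); // hand this batch to the underlying writer
                batch.clear();  // drop references so these rows can be garbage-collected
                batches++;
            }
        }
        return batches;
    }
}
```

Only one batch is held in memory at a time. As long as the iterator itself streams, the heap this code needs no longer grows with the file size, which is why it scales better than simply raising `-Xmx`.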

- Compressing and decompressing files with org.apache.tools.zip, with support for Chinese

bylijinnan

apache

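At first I used java.util.zip and found that it didn't support Chinese file names (patched versions exist online, but they're rather cumbersome).

So I switched to org.apache.tools.zip.

There are simpler examples of using org.apache.tools.zip online.

The program below, written for an actual requirement, compresses specified files under a specified directory: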

import java.io.BufferedReader;

import java.io.BufferedWrit
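Here is a note the original post does not mention. Since Java 7, `java.util.zip.ZipOutputStream` and `ZipInputStream` have constructors that take a `Charset` for entry names, with UTF-8 as the default. The standard library can therefore also handle Chinese file names. Some older archivers may expect a local code page such as GBK instead, and the charset argument lets you choose one. The sketch below zips in-memory files and reads the entry names back. It uses the hypothetical class `ZipNames` and is not the post's program.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class ZipNames {

    // Zip the given name -> content pairs, encoding entry names with `charset`.
    public static byte[] zip(Map<String, byte[]> files, Charset charset) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ZipOutputStream zos = new ZipOutputStream(bytes, charset)) {
            for (Map.Entry<String, byte[]> f : files.entrySet()) {
                zos.putNextEntry(new ZipEntry(f.getKey()));
                zos.write(f.getValue());
                zos.closeEntry();
            }
        }
        return bytes.toByteArray();
    }

    // List entry names, decoding them with the same charset used when zipping.
    public static List<String> entryNames(byte[] zip, Charset charset) throws IOException {
        List<String> names = new ArrayList<>();
        try (ZipInputStream zis = new ZipInputStream(new ByteArrayInputStream(zip), charset)) {
            ZipEntry e;
            while ((e = zis.getNextEntry()) != null) {
                names.add(e.getName());
            }
        }
        return names;
    }
}
```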

- Reading notes 4

chengxuyuancsdn

reading notes

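1. JSTL core tag library tags

2. Avoiding SQL injection

3. Ways to reverse a string

4. Comparing strings with compareTo

5. Replacing in strings with replace

6. Splitting strings

1. The JSTL core tag library has 13 tags in total.

Learning resource: http://www.cnblogs.com/lihuiyy/archive/2012/02/24/2366806.html

By function they fall into 4 categories:

(1) Expression control tags: out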
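Items 3 to 6 of the outline above (reversing, comparing, replacing, and splitting strings) map onto standard `String` and `StringBuilder` methods. The visible excerpt does not show the author's code, so this is our own minimal sketch. `StringNotes` and its method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

public class StringNotes {

    // 3. Reversal: StringBuilder.reverse keeps surrogate pairs intact.
    public static String reverse(String s) {
        return new StringBuilder(s).reverse().toString();
    }

    // 4. compareTo: negative, zero, or positive by lexicographic (UTF-16 code unit) order.
    public static int compare(String a, String b) {
        return a.compareTo(b);
    }

    // 5. replace: replaces every literal occurrence; no regular expressions involved.
    public static String replaceLiteral(String s, String target, String replacement) {
        return s.replace(target, replacement);
    }

    // 6. Splitting: split takes a regex, so a literal '.' must be escaped.
    public static List<String> splitOnDots(String s) {
        return Arrays.asList(s.split("\\."));
    }
}
```

The split and replace examples show a common pitfall. `String.replace` treats its argument literally, while `String.split` and `String.replaceAll` interpret theirs as regular expressions.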

- [Physics and Electronics] A small problem in semiconductor textbooks

comsci

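problem

Every analog and digital electronics textbook uses the term "hole".

The books explain it like this: when an electron breaks free of a covalent bond and becomes a free electron, it leaves a vacancy in the covalent bond, and this vacancy is called a hole.

Looking back now at my university textbooks, I feel this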

- Flashback Database: flashing back the database

daizj

oracle flashback database

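Flashback technology is based on the contents of undo segments and is therefore limited by the UNDO_RETENTION parameter. To use the flashback features, automatic undo management (with an undo tablespace) must be enabled.

In Oracle 10g, the Flashback family has the following members: Flashback Database, Flashback Drop, and Flashback Query (divided into Flashback Query, Flashbac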

- Simple sorting: insertion sort

dieslrae

insertion sort

public void insertSort(int[] array){

int temp;

for(int i=1;i<array.length;i++){

temp = array[i];

for(int k=i-1;k>=0;k--)
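The excerpt stops inside the inner loop. Below is a complete version, written as our own sketch rather than the author's code. It uses a `while` loop in place of the original `for (int k = i - 1; k >= 0; k--)`, and it is made `static` inside a hypothetical `InsertionSort` class.

```java
public class InsertionSort {

    // Sorts the array in place in ascending order; stable because equal
    // elements are never shifted past each other (strict > comparison).
    public static void insertSort(int[] array) {
        for (int i = 1; i < array.length; i++) {
            int temp = array[i];              // element to insert into the sorted prefix array[0..i-1]
            int k = i - 1;
            while (k >= 0 && array[k] > temp) {
                array[k + 1] = array[k];      // shift larger elements one slot to the right
                k--;
            }
            array[k + 1] = temp;              // drop the element into the gap
        }
    }
}
```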

- C learning 6: small pointer examples, what a one-dimensional array name means, and a function that prints an array's contents

dcj3sjt126com

c

# include <stdio.h>

int main(void)

{

int * p; // equivalent to int *p, and also to int* p;

int i = 5;

char ch = 'A';

//p = 5; //error

//p = &ch; //error

//p = ch; //error

p = &i; //

- Three ways to install and configure the PHP redis extension on CentOS

dcj3sjt126com

redis

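Method 1

1. Download the PHP redis extension package. The code is as follows:

#wget http://redis.googlecode.com/files/redis-2.4.4.tar.gz

2. Extract it with tar -zxvf and cd into the extension directory (inside the extension directory, run phpize; the phpize path in my environment is /usr/local/php/bin/phpize (be sure to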

- Thread pools (Executors)

shuizhaosi888

thread pool

In the Java class library, the primary abstraction for task execution is not Thread but Executor, which decouples task submission from task execution.

public interface Executor {

void execute(Runnable command);

}

public class RunMain implements Executor{

@Override

pub
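The `RunMain` class above is cut off before its `execute` body. Below are two minimal `Executor` implementations, written as our own sketch rather than the author's code (`SimpleExecutors` is a hypothetical name). They show how the same submission call can stand for very different execution policies.

```java
import java.util.concurrent.Executor;

public class SimpleExecutors {

    // Runs each task synchronously on the thread that submits it.
    public static class DirectExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            command.run();
        }
    }

    // Starts a new thread for every submitted task.
    public static class ThreadPerTaskExecutor implements Executor {
        @Override
        public void execute(Runnable command) {
            new Thread(command).start();
        }
    }
}
```

In real code you would normally use the factory methods of `java.util.concurrent.Executors`, such as `Executors.newFixedThreadPool(4)`. They return a thread-pool-backed `ExecutorService`, which is itself an `Executor`, so callers that only use `execute` do not change.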

- OpenStack quick installation notes

haoningabc

openstack

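Prerequisite: the yum repositories must already be configured.

Version: Icehouse; OS: Red Hat 6.5

Minimal installation, without Cinder or Swift

Three nodes:

172 control node: keystone glance horizon

173 compute node: nova

173 network node: neutron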

control

/etc/sysctl.conf

net.ipv4.ip_forward =

- Understanding C++ objects through an object-oriented implementation in C (Part 2)

jimmee

C++ object-oriented virtual functions

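1. A class can be viewed as a struct, and its methods can be thought of as implemented through function pointers. When memory is allocated for a class object, only the member variables are allocated; no extra memory is needed to store the function pointers' addresses.

2. Creating a C++ class object amounts to allocating memory (malloc) and then calling the constructor.

3. Destroying a C++ class object with its destructor amounts to reclaiming the memory (free).

4. C++ allocates memory on the stack and in the global data area; an object created inside a method is allocated directly on the stack.

Specifically in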

- How to make a div draggable

lingfeng520240

html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml

- Chapter 10: Advanced Events (Part 2)

onestopweb

events

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- Calculating the distance between two latitude/longitude points

roadrunners

calculation latitude LBS longitude distance

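When I had to solve this problem, I looked up many approaches online, but the distances they produced all differed from Baidu's. The formula below gives distances that match Baidu's.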

/**

*

* @param longitudeA

* longitude of point A

* @param latitudeA

* latitude of point A

* @param longitudeB

*
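The original formula is cut off, so we can't tell which method the author used to match Baidu. As a hedged sketch, here is the widely used haversine formula on a spherical Earth. Several details are our own assumptions: the mean radius of 6,371,000 m, the class name `GeoDistance`, and the fourth parameter `latitudeB` (the excerpt stops after `longitudeB`). Results may differ slightly from Baidu's.

```java
// Great-circle distance using the haversine formula on a spherical Earth.
// Assumption: mean Earth radius of 6,371,000 m; Baidu Maps and the original
// post may use a different radius or formula.
public class GeoDistance {
    public static final double EARTH_RADIUS_M = 6_371_000.0;

    /**
     * @param longitudeA longitude of point A, in degrees
     * @param latitudeA  latitude of point A, in degrees
     * @param longitudeB longitude of point B, in degrees
     * @param latitudeB  latitude of point B, in degrees
     * @return distance in metres
     */
    public static double distance(double longitudeA, double latitudeA,
                                  double longitudeB, double latitudeB) {
        double phiA = Math.toRadians(latitudeA);
        double phiB = Math.toRadians(latitudeB);
        double dPhi = phiB - phiA;
        double dLambda = Math.toRadians(longitudeB - longitudeA);
        // h = sin^2(dPhi/2) + cos(phiA) * cos(phiB) * sin^2(dLambda/2)
        double h = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                + Math.cos(phiA) * Math.cos(phiB) * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        double c = 2 * Math.atan2(Math.sqrt(h), Math.sqrt(1 - h)); // central angle in radians
        return EARTH_RADIUS_M * c;
    }
}
```

As a sanity check, one degree of latitude along a meridian comes out as `6371000 * π / 180`, about 111.2 km.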

- The 10 most controversial Java topics

tomcat_oracle

java

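1. Java 8 has arrived. What!? Java 8 supports lambdas. Wow, RIP Scala! The release of Java 8 set off a lot of debate about whether it could kill Scala, and the conclusion was that things are far from that simple. Java 8 may have broken out of the ring of Scala's lambdas, but Java is not a real contender for the functional-programming throne.

2. Java 9 is coming

Oracle released back in August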

- zoj 3826 Hierarchical Notation (simulation)

阿尔萨斯

rar

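Problem link: zoj 3826 Hierarchical Notation

Problem summary: given some structures made of keys and values, answer Q queries, printing the value that corresponds to each key.

Approach: the idea is simple. Write a recursive, lexer-like function, map each key to a hash value, and use a map to record where each key's value starts and ends. After this preprocessing, answering queries is easy. I only got this problem accepted in the last 10 minutes, because I hadn't considered the case where a value is {}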
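The approach above can be sketched as a small recursive parser. This is a hedged illustration, not the author's solution, and several details are our assumptions:

- The input format is `{"key":"value","key2":{...}}`, with no whitespace, escapes, or quotes inside keys and values.
- Queries use the syntax `"key2"."inner"`.
- Unknown keys return the marker `Error!`.

Check all of these against the actual problem statement. Full key paths are stored directly in a `HashMap` instead of hashing keys by hand, which is simpler but may use more memory on large inputs. `HierarchicalNotation` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: record, for every full key path, where its value
// starts and ends in the input, then answer queries by substring lookup.
public class HierarchicalNotation {
    private final String text;
    private final Map<String, int[]> spans = new HashMap<>(); // path -> {start, end), end exclusive

    public HierarchicalNotation(String text) {
        this.text = text;
        parseObject(0, "");
    }

    // Parses the object whose '{' is at text[pos]; returns the index just past its '}'.
    private int parseObject(int pos, String prefix) {
        pos++;                                                   // skip '{'
        while (text.charAt(pos) != '}') {                        // an empty {} skips the loop
            int keyEnd = text.indexOf('"', pos + 1) + 1;         // key keeps its quotes
            String key = text.substring(pos, keyEnd);
            String path = prefix.isEmpty() ? key : prefix + "." + key;
            int valueStart = keyEnd + 1;                         // skip ':'
            int valueEnd;
            if (text.charAt(valueStart) == '{') {
                valueEnd = parseObject(valueStart, path);        // nested object: recurse
            } else {
                valueEnd = text.indexOf('"', valueStart + 1) + 1; // quoted string value
            }
            spans.put(path, new int[] {valueStart, valueEnd});
            pos = valueEnd;
            if (text.charAt(pos) == ',') {
                pos++;                                           // move to the next key
            }
        }
        return pos + 1;                                          // skip '}'
    }

    // Returns the raw value text for a query path, or "Error!" if it is unknown.
    public String query(String path) {
        int[] span = spans.get(path);
        return span == null ? "Error!" : text.substring(span[0], span[1]);
    }
}
```

An empty object `{}` needs no special case here: the loop in `parseObject` sees `}` immediately and returns.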