Hive: Startup Problems and Solutions

Problem 1:

```
Caused by: javax.jdo.JDODataStoreException: Required table missing : "`VERSION`" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"
NestedThrowables:
org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "`VERSION`" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"
    at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:461)
    at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:732)
    at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:752)
    at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:6664)
```

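Tracing through the source code (DataNucleus, the persistence layer used by the Hive metastore) shows how the table auto-creation flags are set: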

```java
// A read-only or fixed datastore never gets schema changes
if ((this.readOnlyDatastore) || (this.fixedDatastore))
{
    this.autoCreateTables = false;
    this.autoCreateColumns = false;
    this.autoCreateConstraints = false;
}
else
{
    // autoCreateSchema switches on tables, columns and constraints at once
    boolean autoCreateSchema = conf.getBooleanProperty("datanucleus.autoCreateSchema");
    if (autoCreateSchema)
    {
        this.autoCreateTables = true;
        this.autoCreateColumns = true;
        this.autoCreateConstraints = true;
    }
    else
    {
        // Otherwise each flag comes from its own property
        this.autoCreateColumns = conf.getBooleanProperty("datanucleus.autoCreateColumns");
        this.autoCreateTables = conf.getBooleanProperty("datanucleus.autoCreateTables");
        this.autoCreateConstraints = conf.getBooleanProperty("datanucleus.autoCreateConstraints");
    }
}

// The two flags tested above come from these properties:
this.readOnlyDatastore = conf.getBooleanProperty("datanucleus.readOnlyDatastore");
this.fixedDatastore = conf.getBooleanProperty("datanucleus.fixedDatastore");
```
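To make the branch above easier to reason about, here is a minimal standalone Java model of it. The class `AutoCreateFlags` is hypothetical, a plain `Map<String, Boolean>` stands in for DataNucleus's configuration object, and treating a missing property as `false` is an assumption. Unlike the quoted excerpt, the sketch reads the two guard flags before branching.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical standalone model of the auto-create flag logic quoted above. */
class AutoCreateFlags {
    boolean autoCreateTables, autoCreateColumns, autoCreateConstraints;

    // Assumption: an absent property counts as false.
    static boolean get(Map<String, Boolean> conf, String key) {
        return conf.getOrDefault(key, false);
    }

    static AutoCreateFlags resolve(Map<String, Boolean> conf) {
        AutoCreateFlags f = new AutoCreateFlags();
        // Read the guard flags first so the branch sees their configured values.
        boolean readOnly = get(conf, "datanucleus.readOnlyDatastore");
        boolean fixed = get(conf, "datanucleus.fixedDatastore");
        if (readOnly || fixed) {
            // Read-only or fixed datastore: nothing is ever created.
            f.autoCreateTables = f.autoCreateColumns = f.autoCreateConstraints = false;
        } else if (get(conf, "datanucleus.autoCreateSchema")) {
            // autoCreateSchema enables all three at once.
            f.autoCreateTables = f.autoCreateColumns = f.autoCreateConstraints = true;
        } else {
            // Otherwise each flag is taken from its own property.
            f.autoCreateTables = get(conf, "datanucleus.autoCreateTables");
            f.autoCreateColumns = get(conf, "datanucleus.autoCreateColumns");
            f.autoCreateConstraints = get(conf, "datanucleus.autoCreateConstraints");
        }
        return f;
    }

    public static void main(String[] args) {
        Map<String, Boolean> conf = new HashMap<>();
        conf.put("datanucleus.fixedDatastore", true);
        conf.put("datanucleus.autoCreateSchema", true);
        // fixedDatastore wins, so the VERSION table would never be created.
        System.out.println(resolve(conf).autoCreateTables); // false
        conf.put("datanucleus.fixedDatastore", false);
        System.out.println(resolve(conf).autoCreateTables); // true
    }
}
```

The model makes the precedence explicit: a `true` for either guard flag overrides every auto-create setting, which is why both are set to `false` in the configuration that fixes this problem.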

So, to let DataNucleus create the missing tables, set the following properties in hive-site.xml:

```xml
<property>
  <name>datanucleus.readOnlyDatastore</name>
  <value>false</value>
</property>
<property>
  <name>datanucleus.fixedDatastore</name>
  <value>false</value>
</property>
<property>
  <name>datanucleus.autoCreateSchema</name>
  <value>true</value>
</property>
<property>
  <name>datanucleus.autoCreateTables</name>
  <value>true</value>
</property>
<property>
  <name>datanucleus.autoCreateColumns</name>
  <value>true</value>
</property>
```

Alternatively, change:

```xml
<property>
  <name>datanucleus.schema.autoCreateAll</name>
  <value>false</value>
  <description>creates necessary schema on a startup if one doesn't exist. set this to false, after creating it once</description>
</property>
```

to:

```xml
<property>
  <name>datanucleus.schema.autoCreateAll</name>
  <value>true</value>
  <description>creates necessary schema on a startup if one doesn't exist. set this to false, after creating it once</description>
</property>
```

Problem 2:

```
16/06/02 10:49:52 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.
16/06/02 10:49:52 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist
Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-0.14.0.jar!/hive-log4j.properties
Exception in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx--x--x
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:672)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:160)
Caused by: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx--x--x
    at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:529)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:478)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:430)
    ... 7 more
```

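Hive requires the root scratch directory /tmp/hive on HDFS to be writable. Open up its permissions and check the result:

```
[root@hadoop ~]# hadoop fs -chmod -R 777 /tmp/hive
[root@hadoop ~]# hadoop fs -ls /tmp
Found 1 items
drwxrwxrwx - root supergroup 0 2016-06-02 08:48 /tmp/hive
```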
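Why is `rwx--x--x` rejected while `rwxrwxrwx` passes? A minimal sketch, assuming Hive requires every bit of the octal mask 0733 (full access for the owner, write and execute for group and others) on the root scratch directory. That mask is my reading of Hive's `SessionState`, not something the log shows, and `ScratchDirCheck` is a hypothetical name.

```java
/** Hypothetical helper modelling the root scratch dir writability test. */
class ScratchDirCheck {
    // Assumed mask: rwx for the owner, -wx for group and others (octal 0733).
    static final int REQUIRED = 0733;

    /** Converts a 9-character string such as "rwx--x--x" to permission bits; any non-'-' counts as set. */
    static int parse(String perm) {
        if (perm.length() != 9) throw new IllegalArgumentException("expected 9 chars: " + perm);
        int bits = 0;
        for (int i = 0; i < 9; i++) {
            bits <<= 1;
            if (perm.charAt(i) != '-') bits |= 1;
        }
        return bits;
    }

    /** True when every bit of the required mask is present. */
    static boolean writable(String perm) {
        return (parse(perm) & REQUIRED) == REQUIRED;
    }

    public static void main(String[] args) {
        System.out.println(writable("rwx--x--x")); // false: group and others lack 'w'
        System.out.println(writable("rwxrwxrwx")); // true after chmod -R 777
    }
}
```

Under that assumption `rwx-wx-wx` (733) would also pass; 777 simply grants more than the check needs.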

Problem 3:

```
16/06/02 09:33:13 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.
16/06/02 09:33:13 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist
Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-0.14.0.jar!/hive-log4j.properties
Exception in thread "main" java.lang.RuntimeException: java.net.ConnectException: Call to hadoop/192.168.52.139:9000 failed on connection exception: java.net.ConnectException: Connection refused
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:672)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:160)
Caused by: java.net.ConnectException: Call to hadoop/192.168.52.139:9000 failed on connection exception: java.net.ConnectException: Connection refused
```

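This happens because Hadoop is not running, so nothing is listening on the NameNode address hadoop/192.168.52.139:9000. Starting Hadoop resolves it.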
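Before launching Hive it can help to confirm that the NameNode RPC address accepts connections. A minimal sketch using plain `java.net.Socket`; the class name `PortProbe` is hypothetical, and the host and port are simply the ones from the log above (use the address from your own `fs.defaultFS`).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical pre-flight check: is any process listening on host:port? */
class PortProbe {
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket s = new Socket()) {
            // connect() throws ConnectException ("Connection refused") when nothing listens.
            s.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Address taken from the error above; adjust to your cluster.
        System.out.println(isListening("192.168.52.139", 9000, 2000));
    }
}
```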

Problem 4:

```
[root@hadoop ~]# hive
16/06/02 09:38:06 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.
16/06/02 09:38:06 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist
Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-0.14.0.jar!/hive-log4j.properties
hive>
```

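In Hive 0.10, 0.11 and later, the `hive.metastore.local` property is no longer used. Hive still starts, as the log shows, but warns about it. Remove the following from the configuration file: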

```xml
<property>
  <name>hive.metastore.local</name>
  <value>false</value>
  <description>controls whether to connect to remove metastore server or open a new metastore server in Hive Client JVM</description>
</property>
```
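If you maintain several copies of hive-site.xml, the obsolete entry can also be stripped programmatically. A minimal sketch with the JDK's built-in DOM parser; `DropDeprecated` is a hypothetical helper, and writing the modified document back to disk (for example with `javax.xml.transform`) is left out.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: removes obsolete properties from a Hadoop-style config document. */
class DropDeprecated {
    static Document parse(String xml) throws Exception {
        return DocumentBuilderFactory.newInstance().newDocumentBuilder()
            .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }

    /** Removes every <property> whose <name> equals propName; returns how many were removed. */
    static int remove(Document doc, String propName) {
        NodeList props = doc.getElementsByTagName("property");
        int removed = 0;
        // Iterate backwards: the NodeList is live and shrinks as nodes are removed.
        for (int i = props.getLength() - 1; i >= 0; i--) {
            Element p = (Element) props.item(i);
            NodeList names = p.getElementsByTagName("name");
            if (names.getLength() > 0 && propName.equals(names.item(0).getTextContent().trim())) {
                p.getParentNode().removeChild(p);
                removed++;
            }
        }
        return removed;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
            + "<property><name>hive.metastore.local</name><value>false</value></property>"
            + "<property><name>hive.metastore.uris</name><value></value></property>"
            + "</configuration>";
        Document doc = parse(xml);
        System.out.println(remove(doc, "hive.metastore.local")); // 1
        System.out.println(doc.getElementsByTagName("property").getLength()); // 1
    }
}
```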

Problem 5:

```
Exception in thread "main" java.lang.RuntimeException: java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:672)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:160)
Caused by: java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
    at org.apache.hadoop.fs.Path.initialize(Path.java:148)
    at org.apache.hadoop.fs.Path.<init>(Path.java:126)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:487)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:430)
    ... 7 more
Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
    at java.net.URI.checkPath(URI.java:1804)
    at java.net.URI.<init>(URI.java:752)
    at org.apache.hadoop.fs.Path.initialize(Path.java:145)
    ... 10 more
```

In hive-site.xml, find every occurrence of `system:java.io.tmpdir` and replace it with an absolute path, for example /home/grid/apache-hive-0.14.0-bin/iotmp.
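Why does an unexpanded placeholder produce "Relative path in absolute URI"? The trace shows `Path.initialize` calling the `java.net.URI` constructor, which fails in `checkPath`. The sketch below reproduces that under a simplifying assumption: like Hadoop's `Path(String)`, it treats the text before a ':' that precedes the first '/' as a URI scheme, so `${system` becomes the scheme and the rest becomes a path that does not start with '/'. `UnexpandedTmpdir` is a hypothetical name, and the real `Path` handles more cases (authority, Windows drive letters).

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical reproduction of the "Relative path in absolute URI" error. */
class UnexpandedTmpdir {
    /** Simplified scheme split: text before ':' is a scheme if that ':' precedes the first '/'. */
    static URI toUri(String pathString) throws URISyntaxException {
        String scheme = null;
        String path = pathString;
        int colon = pathString.indexOf(':');
        int slash = pathString.indexOf('/');
        if (colon != -1 && (slash == -1 || colon < slash)) {
            scheme = pathString.substring(0, colon);
            path = pathString.substring(colon + 1);
        }
        // With a scheme present, java.net.URI requires the path to start with '/'.
        return new URI(scheme, null, path, null, null);
    }

    public static void main(String[] args) throws URISyntaxException {
        try {
            toUri("${system:java.io.tmpdir}/${system:user.name}");
        } catch (URISyntaxException e) {
            System.out.println(e.getReason()); // Relative path in absolute URI
        }
        // An absolute path has no ':' before its first '/', so no scheme is inferred.
        System.out.println(toUri("/home/grid/apache-hive-0.14.0-bin/iotmp"));
    }
}
```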

The complete hive-site.xml is:

```xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <!-- URL parameters are separated by "&", which must be written as &amp; inside XML -->
    <value>jdbc:mysql://192.168.52.139:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
  </property>
  <property>
    <name>datanucleus.readOnlyDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.fixedDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.autoCreateSchema</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateTables</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateColumns</name>
    <value>true</value>
  </property>
</configuration>
```

Problem 6:

```
hive> show tables;
FAILED: Error in metadata: MetaException(message:Got exception: javax.jdo.JDODataStoreException An exception was thrown while adding/validating class(es) : Specified key was too long; max key length is 767 bytes
com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Specified key was too long; max key length is 767 bytes
```

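This is caused by MySQL's maximum index key length. As the error says, an index key (such as a VARCHAR primary key) may be at most 767 bytes, which is at most 767/2 = 383 double-byte characters or 767/3 = 255 three-byte characters (integer division). GBK uses two bytes per character, and UTF-8 (MySQL's `utf8`) up to three.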
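The byte arithmetic can be checked with a few lines of Java. This sketch assumes worst-case bytes per character (1 for latin1, 2 for GBK, 3 for MySQL's `utf8`) and ignores any length-prefix bytes; `IndexKeyLimit` is a hypothetical name, and VARCHAR(767) is only an illustrative column length.

```java
/** Hypothetical calculator for MySQL's 767-byte index key limit. */
class IndexKeyLimit {
    static final int MAX_KEY_BYTES = 767; // limit quoted in the error message

    /** Worst-case bytes a VARCHAR(chars) key needs at the given bytes per character. */
    static int keyBytes(int chars, int bytesPerChar) {
        return chars * bytesPerChar;
    }

    static boolean fits(int chars, int bytesPerChar) {
        return keyBytes(chars, bytesPerChar) <= MAX_KEY_BYTES;
    }

    /** Longest VARCHAR that still fits, using integer division. */
    static int maxChars(int bytesPerChar) {
        return MAX_KEY_BYTES / bytesPerChar;
    }

    public static void main(String[] args) {
        System.out.println(maxChars(1)); // latin1: 767
        System.out.println(maxChars(2)); // GBK: 383
        System.out.println(maxChars(3)); // utf8: 255
        System.out.println(fits(767, 1) + " " + fits(767, 3)); // true false
    }
}
```

This is why switching the metastore database to latin1 makes the same index definitions fit.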

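Solution:

Apart from `character_set_system`, which is utf8, the database's character sets should preferably be latin1; otherwise the exception above may occur. On the MySQL host, run: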

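```
mysql> show variables like '%char%';
mysql> alter database db_name character set latin1;
mysql> flush privileges;
```

Here `db_name` stands for the metastore database name (the one in `javax.jdo.option.ConnectionURL`, e.g. `hive`). `ALTER DATABASE` only changes the default character set for tables created afterwards, so apply it before the metastore tables are created.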

Problem 7:

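```
java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected
```

When setting up Hadoop 2.6 with Hive 1.1, Hive fails at startup with:

```
[ERROR] Terminal initialization failed; falling back to unsupported
java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected
```

Hive 1.1 ships jline-2.12.jar, in which `jline.Terminal` is an interface:

```
-rw-r--r-- 1 root root 213854 Mar 11 22:22 jline-2.12.jar
```

The error means an older jline, in which `jline.Terminal` is still a class, is loaded first from Hadoop's classpath (typically jline-0.9.94.jar under `$HADOOP_HOME/share/hadoop/yarn/lib`). A common fix is to replace that older jar with Hive's jline-2.12.jar.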
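To see which jline wins on a given classpath, a small reflection check helps: `IncompatibleClassChangeError` here means the `jline.Terminal` actually loaded is a class, while Hive was compiled against an interface. The sketch below, with the hypothetical class name `JlineCheck`, reports whether a class is an interface and which jar it came from, without initializing it; run it with the same classpath Hive uses.

```java
import java.security.CodeSource;

/** Hypothetical diagnostic: reports what kind of type a class name resolves to, and its origin. */
class JlineCheck {
    static String describe(String className) {
        try {
            // initialize=false: load the class without running its static initializers.
            Class<?> c = Class.forName(className, false, JlineCheck.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            // Classes from the bootstrap loader (e.g. java.lang.*) report no code source.
            String where = (src == null) ? "bootstrap/unknown" : String.valueOf(src.getLocation());
            return (c.isInterface() ? "interface" : "class") + " from " + where;
        } catch (ClassNotFoundException e) {
            return "not found";
        }
    }

    public static void main(String[] args) {
        // With jline 2.x first on the classpath this should start with "interface".
        System.out.println(describe("jline.Terminal"));
    }
}
```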

Problem 8:

```
org.apache.hadoop.security.AccessControlException: Permission denied: user=root
```

Entering Hive as root, `show tables` ran without errors, but `select` failed.

Error message:

```
FAILED: Hive Internal Error: java.lang.RuntimeException(org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="tmp":hadoop:supergroup:rwxr-xr-x)
java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="tmp":hadoop:supergroup:rwxr-xr-x
    at org.apache.hadoop.hive.ql.Context.getScratchDir(Context.java:170)
    at org.apache.hadoop.hive.ql.Context.getMRScratchDir(Context.java:210)
    at org.apache.hadoop.hive.ql.Context.getMRTmpFileURI(Context.java:267)
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.getMetaData(SemanticAnalyzer.java:1112)
    at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.analyzeInternal(SemanticAnalyzer.java:7524)
    at org.apache.hadoop.hive.ql.parse.BaseSemanticAnalyzer.analyze(BaseSemanticAnalyzer.java:243)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:431)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:336)
    at org.apache.hadoop.hive.ql.Driver.run(Driver.java:909)
    at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:258)
    at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:215)
    at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:406)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:689)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:557)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:156)
Caused by: org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="tmp":hadoop:supergroup:rwxr-xr-x
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:39)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:27)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:513)
    at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:95)
    at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:57)
    at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:1216)
    at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:321)
    at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:1126)
    at org.apache.hadoop.hive.ql.Context.getScratchDir(Context.java:165)
    ... 18 more
Caused by: org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="tmp":hadoop:supergroup:rwxr-xr-x
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:199)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:180)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:128)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:5214)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:5188)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:2060)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:2029)
    at org.apache.hadoop.hdfs.server.namenode.NameNode.mkdirs(NameNode.java:817)
    at sun.reflect.GeneratedMethodAccessor12.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:563)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1388)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1384)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:396)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1121)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1382)
    at org.apache.hadoop.ipc.Client.call(Client.java:1070)
    at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:225)
    at $Proxy6.mkdirs(Unknown Source)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)
    at $Proxy6.mkdirs(Unknown Source)
    at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:1214)
    ... 21 more
```

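Solution:

The error says that root has no write permission on the HDFS directory `tmp`, which is owned by `hadoop:supergroup` with mode `rwxr-xr-x`; when running a query, Hive needs to create its scratch directory there.

The cause is that I entered Hive as root, while Hadoop had been set up under the user `hadoop`.

After switching to the `hadoop` user to run Hive, the problem went away.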
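The denial follows HDFS's POSIX-style permission model: the owner bits apply if the user owns the inode, otherwise the group bits if the user is in its group, otherwise the "other" bits. Being root on Linux does not help, because the HDFS superuser is the user that started the NameNode. A minimal sketch of that check; `HdfsWriteCheck` is a hypothetical name, and superuser handling is deliberately omitted.

```java
import java.util.Set;

/** Hypothetical model of the owner/group/other write check applied to an HDFS inode. */
class HdfsWriteCheck {
    /** The first matching class (owner, then group, then other) decides; superusers are not modelled. */
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, int mode) {
        int bits;
        if (user.equals(owner)) {
            bits = (mode >> 6) & 7;
        } else if (userGroups.contains(group)) {
            bits = (mode >> 3) & 7;
        } else {
            bits = mode & 7;
        }
        return (bits & 2) != 0; // 2 is the 'w' bit
    }

    public static void main(String[] args) {
        // inode "tmp" from the log: hadoop:supergroup rwxr-xr-x (0755)
        System.out.println(canWrite("root", Set.of("root"), "hadoop", "supergroup", 0755));     // false
        System.out.println(canWrite("hadoop", Set.of("hadoop"), "hadoop", "supergroup", 0755)); // true
    }
}
```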

Problem 9:

```
could not create ServerSocket on address 0.0.0.0/0.0.0.0:9083
```

Solution:

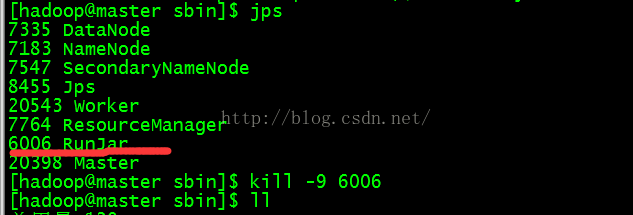

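Many people cannot make sense of this error at first. It occurs because a Hive metastore is already running. Run `jps`, which shows output like the following; the process marked with the red line is the Hive metastore: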

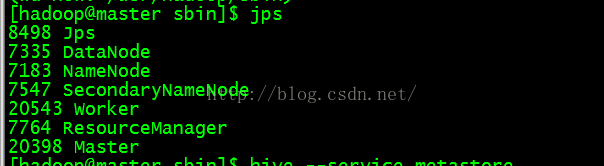

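To restart the metastore, kill that process first (6006 is the metastore's process ID in this example; use the one `jps` shows on your machine):

```shell
kill -9 6006
```

Then start it again:

```shell
hive --service metastore
```

The metastore now starts successfully.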

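Alternatively, check the port with netstat. Here port 9083 turns out to be occupied while 9088 is free, so the metastore can be started on port 9088 instead:

```
[root@h1 bin]# netstat -apn|grep 9083
tcp 0 0 0.0.0.0:9083 0.0.0.0:* LISTEN 26235/java
tcp 48 0 192.168.170.69:9083 192.168.170.69:44742 CLOSE_WAIT -
[root@h1 bin]# netstat -apn|grep 9088
[root@h1 bin]# hive --service metastore -p 9088
```

Clients that connect through `hive.metastore.uris` then need to point at the new port, e.g. `thrift://<host>:9088`.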
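The same port check can be scripted. A minimal sketch that tries to bind the wildcard address the way the metastore does (0.0.0.0:9083); `PortFree` is a hypothetical helper. It only reports the state at the moment of the check, so another process could still take the port before the metastore starts.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

/** Hypothetical helper: can a server bind 0.0.0.0:port right now? */
class PortFree {
    static boolean canBind(int port) {
        try (ServerSocket ss = new ServerSocket()) {
            ss.bind(new InetSocketAddress(port)); // wildcard address, like 0.0.0.0:9083
            return true;
        } catch (IOException e) {
            return false; // typically java.net.BindException: Address already in use
        }
    }

    /** First port in [from, to] that can be bound, or -1 if none. */
    static int firstFree(int from, int to) {
        for (int p = from; p <= to; p++) {
            if (canBind(p)) return p;
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println(canBind(9083) ? "9083 is free" : "9083 is in use");
        System.out.println("first free port from 9083: " + firstFree(9083, 9100));
    }
}
```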