I. Overview

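For work reasons I have recently started learning Hadoop. Our company currently runs both its real-time jobs and its offline (batch) jobs on a single Hadoop cluster. Offline jobs run on a fixed daily schedule, and once they finish their resources sit idle. To use those resources more efficiently, we plan to build a second cluster just for offline jobs, with compute nodes separated from storage nodes. The compute nodes use AWS Auto Scaling (automatic scale-out and scale-in) together with Spot Instances and are adjusted dynamically: a batch of instances is launched when jobs run, and the servers are released automatically once the jobs finish. This post records how the Hadoop cluster was built, partly as a reference for myself and partly in the hope that it helps beginners. All software here is installed with yum. You can also download the corresponding binary packages and install from those instead. Which method you use is a matter of personal preference.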

II. Environment

1. Roles

| IP | Roles |
|---|---|
| 10.10.103.246 | NameNode, zkfc, JournalNode, QuorumPeerMain, DataNode, ResourceManager, NodeManager, WebAppProxyServer, JobHistoryServer |
| 10.10.103.144 | NameNode, zkfc, JournalNode, QuorumPeerMain, DataNode, ResourceManager, NodeManager, WebAppProxyServer |
| 10.10.103.62 | JournalNode, QuorumPeerMain, DataNode, NodeManager |

| IP | Roles |
|---|---|
| 10.10.20.64 | JournalNode, QuorumPeerMain, NameNode, zkfc |
| 10.10.40.212 | JournalNode, QuorumPeerMain, NameNode, zkfc |
| 10.10.102.207 | JournalNode, QuorumPeerMain |
| 10.10.103.15 | ResourceManager, WebAppProxyServer, JobHistoryServer, NodeManager, DataNode |
| 10.10.30.83 | ResourceManager, WebAppProxyServer, JobHistoryServer, NodeManager, DataNode |

2. Base environment

a. OS version

We run on AWS EC2 with AWS's own customized OS (Amazon Linux), which is essentially the same as Red Hat. Kernel version: 4.9.20-10.30.amzn1.x86_64

b. Java version

java version "1.8.0_121"

c. Hadoop version

hadoop-2.6.0

d. CDH version

cdh5.11.0

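e. Hostnames: because we use AWS EC2, every instance already has a hostname that resolves on the internal network, so I skip hostname configuration. If your hostnames do not resolve on the internal network, you must configure them, because many components use hostnames for communication inside the cluster.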

III. Configuration and deployment

1. Set up the yum repository

vim /etc/yum.repos.d/cloudera.repo

```ini
[cloudera-cdh5-11-0]
# Packages for Cloudera's Distribution for Hadoop, Version 5.11.0, on RedHat or CentOS 6 x86_64
name=Cloudera's Distribution for Hadoop, Version 5.11.0
baseurl=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/5.11.0/
gpgkey=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck=1

[cloudera-gplextras5b2]
# Packages for Cloudera's GPLExtras, Version 5.11.0, on RedHat or CentOS 6 x86_64
name=Cloudera's GPLExtras, Version 5.11.0
baseurl=http://archive.cloudera.com/gplextras5/redhat/6/x86_64/gplextras/5.11.0/
gpgkey=http://archive.cloudera.com/gplextras5/redhat/6/x86_64/gplextras/RPM-GPG-KEY-cloudera
gpgcheck=1
```

PS: I install 5.11.0 here. To install an older or newer version, just change the version number.
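Only the version number changes, so you can generate the repo file instead of editing it by hand. The sketch below assumes that other CDH 5 releases use the same URL layout under archive.cloudera.com as 5.11.0. Check that the directory actually exists before relying on it. The function name `cdh_repo` is hypothetical. So is the GPLExtras section name it produces: it derives that name from the version instead of using `cloudera-gplextras5b2`.

```bash
#!/usr/bin/env bash
# Print a cloudera.repo for a given CDH 5.x version, following the
# redhat/6/x86_64 URL layout of the 5.11.0 file (assumed to hold for other versions).
cdh_repo() {
  local ver="$1"                  # e.g. 5.11.0
  local id="${ver//./-}"          # 5.11.0 -> 5-11-0, used in the section names
  local base="http://archive.cloudera.com"
  cat <<EOF
[cloudera-cdh${id}]
name=Cloudera's Distribution for Hadoop, Version ${ver}
baseurl=${base}/cdh5/redhat/6/x86_64/cdh/${ver}/
gpgkey=${base}/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck=1

[cloudera-gplextras${id}]
name=Cloudera's GPLExtras, Version ${ver}
baseurl=${base}/gplextras5/redhat/6/x86_64/gplextras/${ver}/
gpgkey=${base}/gplextras5/redhat/6/x86_64/gplextras/RPM-GPG-KEY-cloudera
gpgcheck=1
EOF
}
```

Usage: `cdh_repo 5.11.0 > /etc/yum.repos.d/cloudera.repo`.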

2. Install and configure the ZooKeeper cluster

```bash
yum -y install zookeeper zookeeper-server
```

vi /etc/zookeeper/conf/zoo.cfg

```properties
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper
clientPort=2181
maxClientCnxns=0
server.1=10.10.103.144:2888:3888
server.2=10.10.103.226:2888:3888
server.3=10.10.103.62:2888:3888
autopurge.snapRetainCount=3
autopurge.purgeInterval=1
```

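```bash
mkdir -p /data/zookeeper                    # create the dataDir
/etc/init.d/zookeeper-server init           # initialize on every node first
echo 1 > /data/zookeeper/myid               # on 10.10.103.144
echo 2 > /data/zookeeper/myid               # on 10.10.103.226
echo 3 > /data/zookeeper/myid               # on 10.10.103.62
/etc/init.d/zookeeper-server start          # start the service
/usr/lib/zookeeper/bin/zkServer.sh status   # check the state of every node
```

The ensemble is healthy when exactly one node reports `Mode: leader` and the others report `Mode: follower`.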
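You can also derive each host's `myid` from the `server.N=HOST:2888:3888` lines in zoo.cfg, instead of hard-coding `echo 1/2/3` per host. That guarantees the ID matches the entry for that IP. The sketch below uses the hypothetical helper names `zk_myid_for_ip` and `write_zk_myid`, and it assumes the hosts in zoo.cfg are written exactly as the IP you pass in.

```bash
#!/usr/bin/env bash
# Print N from the "server.N=HOST:PEER_PORT:ELECTION_PORT" line whose HOST equals $2.
# Returns non-zero if no line matches.
zk_myid_for_ip() {
  local cfg="$1" ip="$2"
  awk -v ip="$ip" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")            # kv[1] = "server.N", kv[2] = "host:2888:3888"
      split(kv[2], hp, ":")         # hp[1] = host
      if (hp[1] == ip) { sub(/^server\./, "", kv[1]); print kv[1]; found = 1 }
    }
    END { exit (found ? 0 : 1) }
  ' "$cfg"
}

# Write the matching id into <dataDir>/myid.
write_zk_myid() {
  local cfg="$1" ip="$2" datadir="$3" id
  id="$(zk_myid_for_ip "$cfg" "$ip")" || { echo "no server.N entry for $ip" >&2; return 1; }
  mkdir -p "$datadir" && echo "$id" > "$datadir/myid"
}
```

On each ZooKeeper host, run something like `write_zk_myid /etc/zookeeper/conf/zoo.cfg 10.10.103.144 /data/zookeeper`, passing that host's own private IP.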

3. Installation

a. On 10.10.103.246 and 10.10.103.144

```bash
yum -y install hadoop hadoop-client hadoop-hdfs hadoop-hdfs-namenode hadoop-hdfs-zkfc \
  hadoop-hdfs-journalnode hadoop-hdfs-datanode hadoop-mapreduce-historyserver \
  hadoop-yarn-nodemanager hadoop-yarn-proxyserver hadoop-yarn hadoop-mapreduce \
  hadoop-yarn-resourcemanager hadoop-lzo* impala-lzo
```

b. On 10.10.103.62

```bash
yum -y install hadoop hadoop-client hadoop-hdfs hadoop-hdfs-journalnode hadoop-hdfs-datanode \
  hadoop-lzo* impala-lzo hadoop-yarn hadoop-mapreduce hadoop-yarn-nodemanager
```

PS:

1. In a typical small company, the master roles for compute (ResourceManager) and storage (NameNode) are deployed on two servers for HA. The worker roles for compute (NodeManager) and storage (DataNode) are spread across many servers, and every one of those servers runs both a NodeManager and a DataNode.

2. A large cluster may need to separate compute resources from storage resources, with each role deployed on its own servers. I suggest the following split:

a. Storage nodes

NameNode:

Install: hadoop hadoop-client hadoop-hdfs hadoop-hdfs-namenode hadoop-hdfs-zkfc hadoop-lzo* impala-lzo

DataNode:

Install: hadoop hadoop-client hadoop-hdfs hadoop-hdfs-datanode hadoop-lzo* impala-lzo

QJM cluster:

Install: hadoop hadoop-hdfs hadoop-hdfs-journalnode zookeeper zookeeper-server

b. Compute nodes

ResourceManager:

Install: hadoop hadoop-client hadoop-yarn hadoop-mapreduce hadoop-yarn-resourcemanager

WebAppProxyServer:

Install: hadoop hadoop-yarn hadoop-mapreduce hadoop-yarn-proxyserver

JobHistoryServer:

Install: hadoop hadoop-yarn hadoop-mapreduce hadoop-mapreduce-historyserver

NodeManager:

Install: hadoop hadoop-client hadoop-yarn hadoop-mapreduce hadoop-yarn-nodemanager
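The per-role package lists above can be kept in one lookup, so that a host carrying several roles installs the union of their packages. This is a sketch only. The function names and role keywords (including `QJM`) are hypothetical labels for the lists above, not anything CDH defines.

```bash
#!/usr/bin/env bash
# Package list for one role, taken from the recommended split.
pkgs_for_role() {
  case "$1" in
    NameNode)          echo "hadoop hadoop-client hadoop-hdfs hadoop-hdfs-namenode hadoop-hdfs-zkfc hadoop-lzo* impala-lzo" ;;
    DataNode)          echo "hadoop hadoop-client hadoop-hdfs hadoop-hdfs-datanode hadoop-lzo* impala-lzo" ;;
    QJM)               echo "hadoop hadoop-hdfs hadoop-hdfs-journalnode zookeeper zookeeper-server" ;;
    ResourceManager)   echo "hadoop hadoop-client hadoop-yarn hadoop-mapreduce hadoop-yarn-resourcemanager" ;;
    WebAppProxyServer) echo "hadoop hadoop-yarn hadoop-mapreduce hadoop-yarn-proxyserver" ;;
    JobHistoryServer)  echo "hadoop hadoop-yarn hadoop-mapreduce hadoop-mapreduce-historyserver" ;;
    NodeManager)       echo "hadoop hadoop-client hadoop-yarn hadoop-mapreduce hadoop-yarn-nodemanager" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Union of the packages for several roles, one space-separated line, duplicates removed.
pkgs_for_roles() {
  local role all=""
  for role in "$@"; do
    all+=" $(pkgs_for_role "$role")" || return 1
  done
  tr ' ' '\n' <<<"$all" | sed '/^$/d' | sort -u | paste -s -d ' ' -
}
```

Usage: `set -f; yum -y install $(pkgs_for_roles DataNode NodeManager); set +f`. The `set -f` stops the shell from expanding `hadoop-lzo*` itself, which leaves the wildcard for yum.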

4. Configuration

a. Create the directories and set their ownership

```bash
mkdir -p /data/hadoop/dfs/nn              # on the NameNodes
chown -R hdfs:hdfs /data/hadoop/dfs/nn    # on the NameNodes
mkdir -p /data/hadoop/dfs/dn              # on the DataNodes
chown -R hdfs:hdfs /data/hadoop/dfs/dn    # on the DataNodes
mkdir -p /data/hadoop/dfs/jn              # on the JournalNodes
chown -R hdfs:hdfs /data/hadoop/dfs/jn    # on the JournalNodes
mkdir -p /data/hadoop/yarn                # on the NodeManagers
chown -R yarn:yarn /data/hadoop/yarn      # on the NodeManagers
```
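The directory for each role follows `dfs.namenode.name.dir`, `dfs.datanode.data.dir` and `dfs.journalnode.edits.dir` in hdfs-site.xml. The sketch below prints the commands for the roles a host runs, or executes them when `RUN=1` is set. The names `dir_for_role`, `setup_dirs` and the `RUN` switch are hypothetical. A real run needs root and the `hdfs`/`yarn` users that the CDH packages create.

```bash
#!/usr/bin/env bash
# Local data directory and owner for each role.
dir_for_role() {
  case "$1" in
    NameNode)    echo "/data/hadoop/dfs/nn hdfs" ;;
    DataNode)    echo "/data/hadoop/dfs/dn hdfs" ;;
    JournalNode) echo "/data/hadoop/dfs/jn hdfs" ;;
    NodeManager) echo "/data/hadoop/yarn yarn" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Dry run by default (prints the commands); RUN=1 actually creates and chowns.
setup_dirs() {
  local role spec dir owner
  for role in "$@"; do
    spec="$(dir_for_role "$role")" || return 1
    read -r dir owner <<<"$spec"
    if [ "${RUN:-0}" = 1 ]; then
      mkdir -p "$dir" && chown -R "$owner:$owner" "$dir" || return 1
    else
      echo "mkdir -p $dir && chown -R $owner:$owner $dir"
    fi
  done
}
```

For example, on 10.10.103.62 run `setup_dirs JournalNode DataNode NodeManager` first to review the commands, then run it again with `RUN=1`.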

b. Write the configuration files

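vim /etc/hadoop/conf/capacity-scheduler.xml

```xml
<?xml version="1.0"?>
<configuration>
  <property><name>yarn.scheduler.capacity.maximum-applications</name><value>10000</value></property>
  <property><name>yarn.scheduler.capacity.maximum-am-resource-percent</name><value>0.4</value></property>
  <property><name>yarn.scheduler.capacity.resource-calculator</name><value>org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator</value></property>
  <property><name>yarn.scheduler.capacity.node-locality-delay</name><value>30</value></property>
  <property><name>yarn.scheduler.capacity.root.queues</name><value>default,server,offline</value></property>
  <property><name>yarn.scheduler.capacity.root.default.capacity</name><value>95</value></property>
  <property><name>yarn.scheduler.capacity.root.default.maximum-capacity</name><value>100</value></property>
  <property><name>yarn.scheduler.capacity.root.default.user-limit-factor</name><value>100</value></property>
  <property><name>yarn.scheduler.capacity.root.default.state</name><value>running</value></property>
  <property><name>yarn.scheduler.capacity.root.default.acl_submit_applications</name><value>*</value></property>
  <property><name>yarn.scheduler.capacity.root.default.acl_administer_queue</name><value>*</value></property>
  <property><name>yarn.scheduler.capacity.root.server.capacity</name><value>0</value></property>
  <property><name>yarn.scheduler.capacity.root.server.maximum-capacity</name><value>5</value></property>
  <property><name>yarn.scheduler.capacity.root.server.user-limit-factor</name><value>100</value></property>
  <property><name>yarn.scheduler.capacity.root.server.acl_submit_applications</name><value>haijun.zhao</value></property>
  <property><name>yarn.scheduler.capacity.root.server.acl_administer_queue</name><value>haijun.zhao</value></property>
  <property><name>yarn.scheduler.capacity.root.server.maximum-am-resource-percent</name><value>0.05</value></property>
  <property><name>yarn.scheduler.capacity.root.server.state</name><value>running</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.capacity</name><value>5</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.maximum-capacity</name><value>100</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.user-limit-factor</name><value>100</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.acl_submit_applications</name><value>hadoop,haifeng.huang,hongan.pan,rujing.zhang,lingjing.li</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.acl_administer_queue</name><value>hadoop,haifeng.huang,hongan.pan,rujing.zhang,linjing.li</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.maximum-am-resource-percent</name><value>0.8</value></property>
  <property><name>yarn.scheduler.capacity.root.offline.state</name><value>running</value></property>
</configuration>
```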
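CapacityScheduler requires the capacities of the child queues under a parent to add up to 100. Here that is default (95) + server (0) + offline (5) = 100. The sketch below checks this for the root queue. It assumes each `<property>` element sits on a single line, so that `sed` can extract `name value` pairs. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Extract "name value" pairs from a Hadoop XML config (one <property> per line assumed).
xml_pairs() {
  sed -n 's|.*<name>\(.*\)</name> *<value>\(.*\)</value>.*|\1 \2|p' "$1"
}

# Read "name value" pairs on stdin; succeed only if the root children sum to 100.
check_root_capacity() {
  awk '
    { conf[$1] = $2 }
    END {
      n = split(conf["yarn.scheduler.capacity.root.queues"], q, ",")
      if (n == 0) { print "no root queues defined"; exit 1 }
      for (i = 1; i <= n; i++) {
        key = "yarn.scheduler.capacity.root." q[i] ".capacity"
        if (!(key in conf)) { print "missing " key; exit 1 }
        sum += conf[key]
      }
      print "sum=" sum
      exit (sum == 100 ? 0 : 1)
    }'
}
```

Usage: `xml_pairs /etc/hadoop/conf/capacity-scheduler.xml | check_root_capacity`. Run it before `yarn rmadmin -refreshQueues` to catch a capacity edit that would not add up.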

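vim /etc/hadoop/conf/core-site.xml

```xml
<?xml version="1.0"?>
<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://mycluster/</value></property>
  <property><name>fs.trash.interval</name><value>1440</value></property>
  <property><name>io.compression.codecs</name><value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec,org.apache.hadoop.io.compress.SnappyCodec</value></property>
  <property><name>io.compression.codec.lzo.class</name><value>com.hadoop.compression.lzo.LzoCodec</value></property>
  <property><name>hadoop.proxyuser.oozie.hosts</name><value>*</value></property>
  <property><name>hadoop.proxyuser.oozie.groups</name><value>*</value></property>
  <property><name>hadoop.proxyuser.httpfs.hosts</name><value>*</value></property>
  <property><name>hadoop.proxyuser.httpfs.groups</name><value>*</value></property>
  <property><name>fs.s3n.awsAccessKeyId</name><value>AKIAIXxxx</value></property>
  <property><name>fs.s3.awsAccessKeyId</name><value>AKIAIXxxx</value></property>
  <property><name>fs.s3bfs.awsAccessKeyId</name><value>AKIAIXxxx</value></property>
  <property><name>fs.s3bfs.awsSecretAccessKey</name><value>Hdne1k/2c90Ixxxxxx</value></property>
  <property><name>fs.s3.awsSecretAccessKey</name><value>Hdne1k/2c90Ixxxxxx</value></property>
  <property><name>fs.s3n.awsSecretAccessKey</name><value>Hdne1k/2c90Ixxxxxx</value></property>
  <property><name>fs.s3n.endpoint</name><value>s3.amazonaws.com</value></property>
  <property><name>fs.s3bfs.impl</name><value>org.apache.hadoop.fs.s3.S3FileSystem</value></property>
  <property><name>fs.s3.impl</name><value>org.apache.hadoop.fs.s3native.NativeS3FileSystem</value></property>
</configuration>
```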

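vim /etc/hadoop/conf/hdfs-site.xml

```xml
<?xml version="1.0"?>
<configuration>
  <property><name>dfs.nameservices</name><value>mycluster</value></property>
  <property><name>dfs.replication</name><value>2</value></property>
  <property><name>dfs.namenode.name.dir</name><value>/data/hadoop/dfs/nn</value></property>
  <property><name>dfs.datanode.data.dir</name><value>/data/hadoop/dfs/dn</value></property>
  <property><name>dfs.permissions.superusergroup</name><value>hdfs</value></property>
  <property><name>dfs.permissions.enabled</name><value>false</value></property>
  <property><name>dfs.ha.namenodes.mycluster</name><value>10.10.20.64,10.10.40.212</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.10.10.20.64</name><value>10.10.20.64:8020</value></property>
  <property><name>dfs.namenode.rpc-address.mycluster.10.10.40.212</name><value>10.10.40.212:8020</value></property>
  <property><name>dfs.namenode.http-address.mycluster.10.10.20.64</name><value>10.10.20.64:50070</value></property>
  <property><name>dfs.namenode.http-address.mycluster.10.10.40.212</name><value>10.10.40.212:50070</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://10.10.20.64:8485;10.10.40.212:8485;10.10.102.207:8485/mycluster</value></property>
  <property><name>dfs.journalnode.edits.dir</name><value>/data/hadoop/dfs/jn</value></property>
  <property><name>dfs.client.failover.proxy.provider.mycluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <property><name>dfs.ha.fencing.methods</name><value>shell(/bin/true)</value></property>
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
  <property><name>ha.zookeeper.quorum</name><value>10.10.20.64:2181,10.10.40.212:2181,10.10.102.207:2181</value></property>
  <property><name>dfs.blocksize</name><value>134217728</value></property>
  <property><name>dfs.namenode.handler.count</name><value>128</value></property>
  <property><name>dfs.datanode.handler.count</name><value>64</value></property>
  <property><name>dfs.datanode.du.reserved</name><value>107374182400</value></property>
  <property><name>dfs.balance.bandwidthPerSec</name><value>1048576</value></property>
  <property><name>dfs.hosts.exclude</name><value>/etc/hadoop/conf.mycluster/datanodes.exclude</value></property>
  <property><name>dfs.datanode.max.transfer.threads</name><value>4096</value></property>
  <property><name>dfs.datanode.fsdataset.volume.choosing.policy</name><value>org.apache.hadoop.hdfs.server.datanode.fsdataset.AvailableSpaceVolumeChoosingPolicy</value></property>
  <property><name>dfs.datanode.available-space-volume-choosing-policy.balanced-space-threshold</name><value>10737418240</value></property>
  <property><name>dfs.datanode.available-space-volume-choosing-policy.balanced-space-preference-fraction</name><value>0.75</value></property>
  <property><name>dfs.datanode.max.xcievers</name><value>4096</value></property>
  <property><name>dfs.webhdfs.enabled</name><value>true</value></property>
  <property><name>dfs.checksum.type</name><value>CRC32</value></property>
  <property><name>dfs.client.file-block-storage-locations.timeout</name><value>3000</value></property>
  <property><name>dfs.datanode.hdfs-blocks-metadata.enabled</name><value>true</value></property>
  <property><name>dfs.namenode.safemode.threshold-pct</name><value>0.85</value></property>
  <property><name>fs.s3.awsAccessKeyId</name><value>AKIAIXxxx</value></property>
  <property><name>fs.s3.awsSecretAccessKey</name><value>Hdne1k/2c90Ixxxxxx</value></property>
  <property><name>fs.s3n.awsAccessKeyId</name><value>AKIAIXxxx</value></property>
  <property><name>fs.s3n.awsSecretAccessKey</name><value>Hdne1k/2c90Ixxxxxx</value></property>
</configuration>
```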
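Several hdfs-site.xml values are raw byte counts. Converting them with 1 MiB = 1024² bytes and 1 GiB = 1024³ bytes makes the intent readable:

- 128 MiB blocks.
- 100 GiB reserved on each DataNode volume for non-HDFS use.
- A 10 GiB free-space difference before the available-space volume policy treats volumes as imbalanced.
- 1 MiB/s of balancer bandwidth.

The helper names in this worked check are illustrative.

```bash
#!/usr/bin/env bash
# Integer conversions; the values below divide exactly.
to_mib() { echo $(( $1 / 1024 / 1024 )); }
to_gib() { echo $(( $1 / 1024 / 1024 / 1024 )); }

echo "dfs.blocksize                = $(to_mib 134217728) MiB"
echo "dfs.datanode.du.reserved     = $(to_gib 107374182400) GiB per volume"
echo "balanced-space-threshold     = $(to_gib 10737418240) GiB"
echo "dfs.balance.bandwidthPerSec  = $(to_mib 1048576) MiB/s"
```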

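vim /etc/hadoop/conf/mapred-site.xml

```xml
<?xml version="1.0"?>
<configuration>
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
  <property><name>mapreduce.jobhistory.address</name><value>10.10.103.15:10020</value></property>
  <property><name>mapreduce.jobhistory.webapp.address</name><value>10.10.103.15:19888</value></property>
  <property><name>yarn.app.mapreduce.am.staging-dir</name><value>/user</value></property>
  <property><name>mapreduce.map.memory.mb</name><value>1536</value></property>
  <property><name>mapreduce.reduce.memory.mb</name><value>2880</value></property>
  <property><name>yarn.app.mapreduce.am.resource.mb</name><value>3072</value></property>
  <property><name>mapreduce.map.java.opts</name><value>-Xmx1228m</value></property>
  <property><name>mapreduce.reduce.java.opts</name><value>-Xmx2456m</value></property>
  <property><name>yarn.app.mapreduce.am.command-opts</name><value>-Xmx2457m</value></property>
  <property><name>mapreduce.jobtracker.handler.count</name><value>128</value></property>
  <property><name>dfs.namenode.handler.count</name><value>128</value></property>
  <property><name>mapreduce.map.cpu.vcores</name><value>1</value></property>
  <property><name>mapreduce.reduce.cpu.vcores</name><value>1</value></property>
  <property><name>yarn.app.mapreduce.am.resource.cpu-vcores</name><value>1</value></property>
  <property><name>mapred.output.direct.EmrFileSystem</name><value>true</value></property>
  <property><name>mapreduce.task.io.sort.factor</name><value>48</value></property>
  <property><name>mapreduce.job.userlog.retain.hours</name><value>48</value></property>
  <property><name>mapreduce.reduce.shuffle.parallelcopies</name><value>20</value></property>
  <property><name>hadoop.job.history.user.location</name><value>none</value></property>
  <property><name>mapreduce.map.speculative</name><value>true</value></property>
  <property><name>mapreduce.reduce.speculative</name><value>true</value></property>
  <property><name>mapred.output.direct.NativeS3FileSystem</name><value>true</value></property>
  <property><name>mapreduce.map.output.compress</name><value>true</value></property>
  <property><name>yarn.app.mapreduce.am.job.task.listener.thread-count</name><value>60</value></property>
  <property><name>mapreduce.job.jvm.numtasks</name><value>20</value></property>
  <property><name>mapreduce.map.output.compress.codec</name><value>org.apache.hadoop.io.compress.SnappyCodec</value></property>
</configuration>
```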
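The map and AM memory settings in mapred-site.xml appear to follow the common rule of giving the JVM heap about 80% of the container: 1536 MB gives -Xmx1228m and 3072 MB gives -Xmx2457m. The reducer's -Xmx2456m is about 85% of its 2880 MB request. The 80% factor is my reading of the numbers, not a Hadoop default.

There is a second effect. With CapacityScheduler and DefaultResourceCalculator, Hadoop 2.x rounds each request up to a multiple of `yarn.scheduler.minimum-allocation-mb`, which is 1536 in yarn-site.xml. The 2880 MB reducer therefore receives a 3072 MB container.

This sketch shows both calculations:

```bash
#!/usr/bin/env bash
heap_mb()   { echo $(( $1 * 80 / 100 )); }                                  # 80% of the container, truncated
normalize() { local req=$1 min=$2; echo $(( (req + min - 1) / min * min )); } # round up to a multiple of min

min_alloc=1536   # yarn.scheduler.minimum-allocation-mb
for name_mb in map:1536 reduce:2880 am:3072; do
  name=${name_mb%%:*}; mb=${name_mb##*:}
  echo "$name: request=${mb}MB allocated=$(normalize "$mb" "$min_alloc")MB heap@80%=$(heap_mb "$mb")m"
done
```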

vim /etc/hadoop/conf/yarn-env.sh

```bash
#!/bin/bash
# Run the YARN daemons as "yarn" unless HADOOP_YARN_USER is already set
export HADOOP_YARN_USER=${HADOOP_YARN_USER:-yarn}
export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_YARN_HOME/conf}"

# JAVA_HOME must come from the environment; abort if it is missing
if [ "$JAVA_HOME" != "" ]; then
  #echo "run java in $JAVA_HOME"
  JAVA_HOME=$JAVA_HOME
fi

if [ "$JAVA_HOME" = "" ]; then
  echo "Error: JAVA_HOME is not set."
  exit 1
fi

JAVA=$JAVA_HOME/bin/java

# Default daemon heap is 1000 MB; YARN_HEAPSIZE (in MB) overrides it
JAVA_HEAP_MAX=-Xmx1000m
if [ "$YARN_HEAPSIZE" != "" ]; then
  JAVA_HEAP_MAX="-Xmx""$YARN_HEAPSIZE""m"
fi

# Defaults for the log directory, log file name and policy file
IFS=
if [ "$YARN_LOG_DIR" = "" ]; then
  YARN_LOG_DIR="$HADOOP_YARN_HOME/logs"
fi
if [ "$YARN_LOGFILE" = "" ]; then
  YARN_LOGFILE='yarn.log'
fi
if [ "$YARN_POLICYFILE" = "" ]; then
  YARN_POLICYFILE="hadoop-policy.xml"
fi
unset IFS

# Pass log, home and policy settings to the JVM as system properties
YARN_OPTS="$YARN_OPTS -Dhadoop.log.dir=$YARN_LOG_DIR"
YARN_OPTS="$YARN_OPTS -Dyarn.log.dir=$YARN_LOG_DIR"
YARN_OPTS="$YARN_OPTS -Dhadoop.log.file=$YARN_LOGFILE"
YARN_OPTS="$YARN_OPTS -Dyarn.log.file=$YARN_LOGFILE"
YARN_OPTS="$YARN_OPTS -Dyarn.home.dir=$YARN_COMMON_HOME"
YARN_OPTS="$YARN_OPTS -Dyarn.id.str=$YARN_IDENT_STRING"
YARN_OPTS="$YARN_OPTS -Dhadoop.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"
YARN_OPTS="$YARN_OPTS -Dyarn.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"
if [ "x$JAVA_LIBRARY_PATH" != "x" ]; then
  YARN_OPTS="$YARN_OPTS -Djava.library.path=$JAVA_LIBRARY_PATH"
fi
YARN_OPTS="$YARN_OPTS -Dyarn.policy.file=$YARN_POLICYFILE"
```

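vim /etc/hadoop/conf/yarn-site.xml

```xml
<?xml version="1.0"?>
<configuration>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
  <property><name>yarn.nodemanager.aux-services.spark_shuffle.class</name><value>org.apache.spark.network.yarn.YarnShuffleService</value></property>
  <property><name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name><value>org.apache.hadoop.mapred.ShuffleHandler</value></property>
  <property><name>yarn.resourcemanager.scheduler.class</name><value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value></property>
  <property><name>yarn.application.classpath</name><value>$HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,$HADOOP_MAPRED_HOME/*,$HADOOP_MAPRED_HOME/lib/*,$HADOOP_YARN_HOME/*,$HADOOP_YARN_HOME/lib/*</value></property>
  <property><name>yarn.log-aggregation-enable</name><value>true</value></property>
  <property><name>yarn.nodemanager.remote-app-log-dir</name><value>hdfs://mycluster/var/log/hadoop-yarn/apps</value></property>
  <property><name>yarn.nodemanager.vmem-pmem-ratio</name><value>10</value></property>
  <property><name>yarn.nodemanager.resource.memory-mb</name><value>10360</value></property>
  <property><name>yarn.nodemanager.resource.cpu-vcores</name><value>4</value></property>
  <property><name>yarn.nodemanager.pmem-check-enabled</name><value>true</value></property>
  <property><name>yarn.nodemanager.vmem-check-enabled</name><value>true</value></property>
  <property><name>yarn.resourcemanager.scheduler.client.thread-count</name><value>64</value></property>
  <property><name>yarn.nodemanager.container-manager.thread-count</name><value>64</value></property>
  <property><name>yarn.resourcemanager.resource-tracker.client.thread-count</name><value>64</value></property>
  <property><name>yarn.resourcemanager.client.thread-count</name><value>64</value></property>
  <property><name>yarn.nodemanager.localizer.client.thread-count</name><value>20</value></property>
  <property><name>yarn.nodemanager.localizer.fetch.thread-count</name><value>20</value></property>
  <property><name>yarn.scheduler.minimum-allocation-mb</name><value>1536</value></property>
  <property><name>yarn.scheduler.maximum-allocation-mb</name><value>9192</value></property>
  <property><name>yarn.label.enabled</name><value>true</value></property>
  <property><name>yarn.resourcemanager.connect.retry-interval.ms</name><value>2000</value></property>
  <property><name>yarn.web-proxy.address</name><value>10.10.103.15:8100</value></property>
  <property><name>yarn.log.server.url</name><value>http://10.10.103.15:19888/jobhistory/logs/</value></property>
  <property><name>yarn.resourcemanager.hostname.10.10.103.15</name><value>10.10.103.15</value></property>
  <property><name>yarn.resourcemanager.hostname.10.10.30.83</name><value>10.10.30.83</value></property>
  <property><name>yarn.resourcemanager.zk-address</name><value>10.10.20.64:2181,10.10.40.212:2181,10.10.102.207:2181</value></property>
  <property><name>yarn.resourcemanager.address.10.10.103.15</name><value>10.10.103.15:23140</value></property>
  <property><name>yarn.resourcemanager.scheduler.address.10.10.103.15</name><value>10.10.103.15:23130</value></property>
  <property><name>yarn.resourcemanager.webapp.https.address.10.10.103.15</name><value>10.10.103.15:23189</value></property>
  <property><name>yarn.resourcemanager.webapp.address.10.10.103.15</name><value>10.10.103.15:8088</value></property>
  <property><name>yarn.resourcemanager.resource-tracker.address.10.10.103.15</name><value>10.10.103.15:23125</value></property>
  <property><name>yarn.resourcemanager.admin.address.10.10.103.15</name><value>10.10.103.15:23141</value></property>
  <property><name>yarn.resourcemanager.address.10.10.30.83</name><value>10.10.30.83:23140</value></property>
  <property><name>yarn.resourcemanager.scheduler.address.10.10.30.83</name><value>10.10.30.83:23130</value></property>
  <property><name>yarn.resourcemanager.webapp.https.address.10.10.30.83</name><value>10.10.30.83:23189</value></property>
  <property><name>yarn.resourcemanager.webapp.address.10.10.30.83</name><value>10.10.30.83:8088</value></property>
  <property><name>yarn.resourcemanager.resource-tracker.address.10.10.30.83</name><value>10.10.30.83:23125</value></property>
  <property><name>yarn.resourcemanager.admin.address.10.10.30.83</name><value>10.10.30.83:23141</value></property>
  <property><name>yarn.nodemanager.localizer.address</name><value>0.0.0.0:23344</value></property>
  <property><name>yarn.nodemanager.webapp.address</name><value>0.0.0.0:23999</value></property>
  <property><name>mapreduce.shuffle.port</name><value>23080</value></property>
  <property><name>yarn.resourcemanager.work-preserving-recovery.enabled</name><value>true</value></property>
</configuration>
```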
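A rough per-node capacity follows from `yarn.nodemanager.resource.memory-mb=10360`. Because this setup uses the memory-only DefaultResourceCalculator, the count is just the integer quotient of NodeManager memory by container size. The 4 vcores per node are not considered by that calculator. Here is a back-of-the-envelope sketch, with an illustrative helper name:

```bash
#!/usr/bin/env bash
nm_mb=10360                              # yarn.nodemanager.resource.memory-mb
containers() { echo $(( $1 / $2 )); }    # how many containers of size $2 MB fit into $1 MB

echo "1536 MB containers per node: $(containers "$nm_mb" 1536)"
echo "3072 MB containers per node: $(containers "$nm_mb" 3072)"
```

With these settings each NodeManager holds at most six minimum-size (1536 MB) containers, or three 3072 MB ones such as the reducers and AMs configured in mapred-site.xml.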

PS: For explanations of the configuration parameters, see the links below.

https://archive.cloudera.com/cdh5/cdh/5/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

https://archive.cloudera.com/cdh5/cdh/5/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

https://archive.cloudera.com/cdh5/cdh/5/hadoop/hadoop-project-dist/hadoop-common/core-default.xml

https://archive.cloudera.com/cdh5/cdh/5/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

http://dongxicheng.org/mapreduce-nextgen/hadoop-yarn-configurations-capacity-scheduler/

5. Start the services

a. Start the JournalNodes (on all three servers)

```bash
/etc/init.d/hadoop-hdfs-journalnode start
```
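The NameNode format in the next step writes to the JournalNodes, so every JournalNode should be accepting connections on port 8485 first. That is the port used in `dfs.namenode.shared.edits.dir`. The sketch below polls a port using bash's `/dev/tcp` and coreutils' `timeout`. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Succeeds if a TCP connection to $1:$2 can be opened within 2 seconds.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Poll once per second until host:port accepts connections or the deadline passes.
wait_for_port() {
  local host=$1 port=$2 deadline=$(( SECONDS + ${3:-60} ))
  until port_open "$host" "$port"; do
    if (( SECONDS >= deadline )); then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
  done
}
```

Usage, with the JournalNode hosts from `dfs.namenode.shared.edits.dir`: `for jn in 10.10.20.64 10.10.40.212 10.10.102.207; do wait_for_port "$jn" 8485 120 || exit 1; done`.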

b. Format the NameNode (on one NameNode, 10.10.103.246)

```bash
sudo -u hdfs hdfs namenode -format
```

c. Initialize the HA state in ZooKeeper (on 10.10.103.246)

```bash
sudo -u hdfs hdfs zkfc -formatZK
```

d. Initialize the shared edits (on 10.10.103.246)

```bash
sudo -u hdfs hdfs namenode -initializeSharedEdits
```

e. Start the NameNode on 10.10.103.246

```bash
/etc/init.d/hadoop-hdfs-namenode start
```

f. Copy the metadata from the first NameNode and start the NameNode on 10.10.103.144

```bash
sudo -u hdfs hdfs namenode -bootstrapStandby
/etc/init.d/hadoop-hdfs-namenode start
```

g. Start zkfc on both NameNodes

```bash
/etc/init.d/hadoop-hdfs-zkfc start
```

h. Start the DataNodes (on all machines)

```bash
/etc/init.d/hadoop-hdfs-datanode start
```

i. Start the WebAppProxyServer, JobHistoryServer and HttpFS on 10.10.103.246

```bash
/etc/init.d/hadoop-yarn-proxyserver start
/etc/init.d/hadoop-mapreduce-historyserver start
/etc/init.d/hadoop-httpfs start
```

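j. Start the ResourceManager on 10.10.103.246 and 10.10.103.144, then the NodeManager on all machines

```bash
/etc/init.d/hadoop-yarn-resourcemanager start   # on 10.10.103.246 and 10.10.103.144
/etc/init.d/hadoop-yarn-nodemanager start       # on all machines
```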

IV. Verification

1. Hadoop functionality

a. List the HDFS root directory

```
[root@ip-10-10-103-246 ~]# hadoop fs -ls /
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
Found 3 items
drwxr-xr-x - hdfs hdfs 0 2017-05-11 11:40 /tmp
drwxrwx--- - mapred hdfs 0 2017-05-11 11:28 /user
drwxr-xr-x - yarn hdfs 0 2017-05-11 11:28 /var
```

b. Upload a file to the root directory

```
[root@ip-10-10-103-246 ~]# hadoop fs -put /tmp/test.txt /
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
[root@ip-10-10-103-246 ~]# hadoop fs -ls /
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
Found 4 items
-rw-r--r-- 2 root hdfs 22 2017-05-11 15:47 /test.txt
drwxr-xr-x - hdfs hdfs 0 2017-05-11 11:40 /tmp
drwxrwx--- - mapred hdfs 0 2017-05-11 11:28 /user
drwxr-xr-x - yarn hdfs 0 2017-05-11 11:28 /var
```

c. Delete a file directly, bypassing the trash

```
[root@ip-10-10-103-246 ~]# hadoop fs -rm -skipTrash /test.txt
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
Deleted /test.txt
```

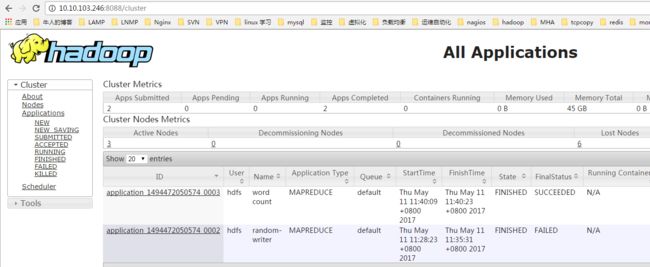

d. Run a wordcount example

```
[root@ip-10-10-103-246 ~]# hadoop fs -put /tmp/test.txt /user/hdfs/rand/
Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
[root@ip-10-10-103-246 conf]# sudo -u hdfs hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples-2.6.0-cdh5.11.0.jar wordcount /user/hdfs/rand/ /tmp
OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
17/05/11 11:40:08 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to 10.10.103.246
17/05/11 11:40:09 INFO input.FileInputFormat: Total input paths to process : 1
17/05/11 11:40:09 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library
17/05/11 11:40:09 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 674c65bbf0f779edc3e00a00c953b121f1988fe1]
17/05/11 11:40:09 INFO mapreduce.JobSubmitter: number of splits:1
17/05/11 11:40:09 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1494472050574_0003
17/05/11 11:40:09 INFO impl.YarnClientImpl: Submitted application application_1494472050574_0003
17/05/11 11:40:09 INFO mapreduce.Job: The url to track the job: http://10.10.103.246:8100/proxy/application_1494472050574_0003/
17/05/11 11:40:09 INFO mapreduce.Job: Running job: job_1494472050574_0003
17/05/11 11:40:15 INFO mapreduce.Job: Job job_1494472050574_0003 running in uber mode : false
17/05/11 11:40:15 INFO mapreduce.Job: map 0% reduce 0%
17/05/11 11:40:20 INFO mapreduce.Job: map 100% reduce 0%
17/05/11 11:40:25 INFO mapreduce.Job: map 100% reduce 100%
17/05/11 11:40:25 INFO mapreduce.Job: Job job_1494472050574_0003 completed successfully
17/05/11 11:40:25 INFO mapreduce.Job: Counters: 53
    File System Counters
        FILE: Number of bytes read=1897
        FILE: Number of bytes written=262703
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=6431
        HDFS: Number of bytes written=6219
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=2592
        Total time spent by all reduces in occupied slots (ms)=5360
        Total time spent by all map tasks (ms)=2592
        Total time spent by all reduce tasks (ms)=2680
        Total vcore-milliseconds taken by all map tasks=2592
        Total vcore-milliseconds taken by all reduce tasks=2680
        Total megabyte-milliseconds taken by all map tasks=3981312
        Total megabyte-milliseconds taken by all reduce tasks=8232960
    Map-Reduce Framework
        Map input records=102
        Map output records=96
        Map output bytes=6586
        Map output materialized bytes=1893
        Input split bytes=110
        Combine input records=96
        Combine output records=82
        Reduce input groups=82
        Reduce shuffle bytes=1893
        Reduce input records=82
        Reduce output records=82
        Spilled Records=164
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=120
        CPU time spent (ms)=1570
        Physical memory (bytes) snapshot=501379072
        Virtual memory (bytes) snapshot=7842639872
        Total committed heap usage (bytes)=525860864
        Peak Map Physical memory (bytes)=300183552
        Peak Map Virtual memory (bytes)=3244224512
        Peak Reduce Physical memory (bytes)=201195520
        Peak Reduce Virtual memory (bytes)=4598415360
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=6321
    File Output Format Counters
        Bytes Written=6219
[root@ip-10-10-103-246 conf]#
```
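These counters confirm the container sizing. Dividing "megabyte-milliseconds" by the matching "time spent by all ... tasks (ms)" gives the memory YARN actually allocated per task. The reduce task's 5360 slot-ms over 2680 ms means it occupied two 1536 MB minimum-allocation slots. A worked check, with illustrative helper names:

```bash
#!/usr/bin/env bash
container_mb() { echo $(( $1 / $2 )); }   # megabyte-ms / ms = MB held by the task

echo "map container:    $(container_mb 3981312 2592) MB"   # equals mapreduce.map.memory.mb
echo "reduce container: $(container_mb 8232960 2680) MB"   # 2880 MB requested, rounded up
echo "reduce slots:     $(( 5360 / 2680 ))"                 # slot-ms / ms
```

The map container comes out at 1536 MB. The reducer comes out at 3072 MB, which is 2880 MB rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`.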

2. NameNode HA verification

Check http://10.10.103.246:50070

Check http://10.10.103.144:50070

Stop the NameNode process on 10.10.103.246 and check whether the NameNode on 10.10.103.144 is promoted to active.
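Besides the web UI on port 50070, you can query the state of each NameNode from the command line with `hdfs haadmin -getServiceState <serviceId>`. The service IDs are the values listed in `dfs.ha.namenodes.mycluster`. The sketch below assumes it runs on a node with the HDFS client configured. It reads one state per line and succeeds only if exactly one line says `active`. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Count lines that are exactly "active" (stdin: one state per line).
count_active() {
  grep -cx 'active'
}

# Fail unless exactly one NameNode reports active.
check_single_active() {
  local n
  n=$(count_active)
  [ "$n" -eq 1 ] || { echo "expected exactly 1 active NameNode, found $n" >&2; return 1; }
}
```

Usage: `for id in 10.10.20.64 10.10.40.212; do sudo -u hdfs hdfs haadmin -getServiceState "$id" 2>/dev/null || echo unreachable; done | check_single_active`. Running it before and after stopping a NameNode shows whether the failover actually happened.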

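3. ResourceManager HA verification

Check http://10.10.103.246:8088

Check http://10.10.103.144:8088

Opening http://10.10.103.144:8088 in a browser redirects to http://ip-10-10-103-246.ec2.internal:8088/, where ip-10-10-103-246.ec2.internal is the hostname of 10.10.103.246, the active ResourceManager. The standby redirecting to the active one shows that ResourceManager HA is configured correctly. Next, stop the ResourceManager process on 10.10.103.246 and open http://10.10.103.144:8088 again. It no longer redirects, which shows that 10.10.103.144 has become the active ResourceManager.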
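For this failover test to work, ResourceManager HA has to be switched on. According to the Hadoop 2.x ResourceManager HA documentation, that requires `yarn.resourcemanager.ha.enabled=true` and `yarn.resourcemanager.ha.rm-ids`. The IDs would be the suffixes used in `yarn.resourcemanager.hostname.<id>`. Automatic failover also uses `yarn.resourcemanager.cluster-id` and a ZooKeeper address.

The yarn-site.xml listing in this post sets the per-RM addresses and `yarn.resourcemanager.zk-address`, but it does not show the first three keys. The job log's `ConfiguredRMFailoverProxyProvider` line suggests HA was active on the author's cluster, so the listing may simply be incomplete.

The sketch below is a grep-based check that prints whichever of these property names a file lacks. It assumes names appear as `<name>KEY</name>`, and it does not validate values. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Print each RM HA property name not defined in the given yarn-site.xml.
# Returns 1 if anything is missing.
missing_rm_ha_keys() {
  local f=$1 key rc=0
  for key in yarn.resourcemanager.ha.enabled \
             yarn.resourcemanager.ha.rm-ids \
             yarn.resourcemanager.cluster-id \
             yarn.resourcemanager.zk-address; do
    if ! grep -qF "<name>${key}</name>" "$f"; then
      echo "$key"
      rc=1
    fi
  done
  return $rc
}
```

Usage: `missing_rm_ha_keys /etc/hadoop/conf/yarn-site.xml`. Empty output and exit status 0 mean all four names are present.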

V. Summary

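1. Getting the Hadoop cluster deployed successfully is only the beginning. Experience with ongoing maintenance and with solving problems for the business teams has to be built up bit by bit. Running into problems and tinkering a lot always pays off.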

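2. To scale out the cluster deployed above later, create an image (AMI) of 10.10.103.62 and launch new servers from it. Provided the services are enabled to start at boot, they start automatically and the new node joins the cluster.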

3. Cost optimization for a Hadoop cluster in the cloud (AWS only)

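a. Store cold data on S3. Hadoop can read and write S3 directly once the S3 key parameters (fs.s3n.awsAccessKeyId and fs.s3n.awsSecretAccessKey) are added to hdfs-site.xml; this setup also sets them in core-site.xml. Programs that upload to or download from S3 should add retry logic, because S3 is occasionally unstable and a transfer can fail.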
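The retry advice can be as simple as a wrapper that reruns a command a few times and doubles the wait after each failure (exponential backoff). The function below is a hypothetical helper, not part of Hadoop.

```bash
#!/usr/bin/env bash
# retry ATTEMPTS INITIAL_DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs have failed, doubling the delay each time.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    if (( i < attempts )); then
      echo "attempt $i/$attempts failed: $*; retrying in ${delay}s" >&2
      sleep "$delay"
      delay=$(( delay * 2 ))
    fi
  done
  return 1
}
```

Usage: `retry 5 2 hadoop fs -put /data/export/part-00000 s3n://my-bucket/cold/`. The local path and bucket are hypothetical. Five attempts starting at 2 seconds wait at most 2 + 4 + 8 + 16 = 30 seconds in total.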

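b. Use Auto Scaling together with Spot Instances and configure scaling policies. For example, add 5 servers when CPU exceeds 50% and remove 5 servers when CPU drops below 10%. You can also configure more step-wise scale-out and scale-in policies. Auto Scaling also offers scheduled actions, which you set up much like crontab entries, so that Auto Scaling scales the servers out and in for you.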
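The step policy in the example above can be written as a small pure function: above 50% CPU add 5 instances, below 10% remove 5, otherwise change nothing. The result is then clamped to the group's minimum and maximum size. In AWS this logic is expressed as CloudWatch alarms attached to Auto Scaling step-scaling policies rather than as a script. This sketch only makes the thresholds concrete, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Instance-count change for an average CPU utilisation (integer percent).
scaling_adjustment() {
  local cpu=$1
  if (( cpu > 50 )); then echo 5
  elif (( cpu < 10 )); then echo -5
  else echo 0
  fi
}

# New desired capacity, clamped to [min, max] like an Auto Scaling group.
new_capacity() {
  local current=$1 cpu=$2 min=$3 max=$4 n
  n=$(( current + $(scaling_adjustment "$cpu") ))
  (( n < min )) && n=$min
  (( n > max )) && n=$max
  echo "$n"
}
```

For the crontab-like scheduled scaling, the AWS CLI provides `aws autoscaling put-scheduled-update-group-action`. For example, `aws autoscaling put-scheduled-update-group-action --auto-scaling-group-name hadoop-nm --scheduled-action-name nightly-up --recurrence "0 1 * * *" --desired-capacity 20` grows the group before a nightly batch. The group name, action name, schedule and size here are hypothetical.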