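I. Introduction

HAProxy is a high-performance load-balancing service that can schedule traffic at both layer 4 and layer 7. It health-checks every node: when a back-end server fails it is automatically marked unavailable, and when the server recovers it is automatically brought back into rotation. Compared with LVS, it is simpler to configure, and it introduces the frontend, backend, and listen sections. A frontend can carry ACL rules that match on the HTTP request (for example its headers or path) and then direct the request to the appropriate backend.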
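The automatic down/up marking described above can be illustrated with a small Python model. This is only a simplified sketch, not HAProxy's implementation: the class name `ServerHealth` and the function `replay` are hypothetical, and the thresholds `rise=2` and `fall=3` are borrowed from the `server` lines used later in this article.

```python
from dataclasses import dataclass


@dataclass
class ServerHealth:
    """Simplified model of one server's state (hypothetical, for illustration)."""
    rise: int = 2     # consecutive successes needed to mark a down server up
    fall: int = 3     # consecutive failures needed to mark an up server down
    up: bool = True   # current state
    streak: int = 0   # consecutive results that contradict the current state

    def record(self, check_ok: bool) -> bool:
        """Feed one health-check result and return the resulting state."""
        if check_ok == self.up:
            # The result agrees with the current state, so reset the counter.
            self.streak = 0
            return self.up
        self.streak += 1
        threshold = self.fall if self.up else self.rise
        if self.streak >= threshold:
            # Enough contradicting results in a row: flip the state.
            self.up = not self.up
            self.streak = 0
        return self.up


def replay(results):
    """Return the server state after each check result in `results`."""
    health = ServerHealth()
    return [health.record(ok) for ok in results]
```

Feeding `replay` three failed checks followed by two successful ones takes the server down after the third failure and brings it back after the second success; a single failure between successes changes nothing.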

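II. Configuration parameters in detail

An HAProxy configuration is divided mainly into the global, defaults, frontend, backend, and listen sections. The configuration file is explained in detail below: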

    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        # to have these messages end up in /var/log/haproxy.log you will
        # need to:
        #
        # 1) configure syslog to accept network log events. This is done
        #    by adding the '-r' option to the SYSLOGD_OPTIONS in
        #    /etc/sysconfig/syslog
        #
        # 2) configure local2 events to go to the /var/log/haproxy.log
        #    file. A line like the following can be added to
        #    /etc/sysconfig/syslog
        #
        #    local2.*                       /var/log/haproxy.log
        #    (to keep a log file, add this line to /etc/rsyslog.conf and restart rsyslog)
        #
        log         127.0.0.1 local2       # logs are recorded through rsyslog
        chroot      /var/lib/haproxy       # chroot directory for the running process
        pidfile     /var/run/haproxy.pid   # PID file of the running process
        maxconn     4000                   # maximum number of connections
        user        haproxy                # run as the haproxy user
        group       haproxy                # run as the haproxy group
        daemon                             # run haproxy as a daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http       # working mode
        log                     global     # use the log settings from the global section
        option                  httplog    # detailed HTTP log format
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8   # pass the client IP to the back end
        option                  redispatch
        retries                 3          # number of retries after a failure
        timeout http-request    10s        # timeout for receiving the HTTP request
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s        # timeout for health checks
        maxconn                 3000

    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    listen stats
        mode http
        bind *:1080
        stats enable
        stats hide-version
        stats uri /admin
        stats realm Haproxy\ Statistics
        stats auth admin:admin
        stats admin if TRUE

    frontend main
        bind *:80
        # ACL rules
        # requests whose path begins with one of these prefixes are url_static
        acl url_static   path_beg -i /static /images /javascript /stylesheets
        # requests whose path ends with one of these suffixes (case-insensitive) are url_static
        acl url_static   path_end -i .jpg .gif .png .css .js .html .ico
        # requests whose path ends with one of these suffixes (case-insensitive) are dynamic
        acl url_dynamic  path_end -i .php .jsp .asp
        use_backend static  if url_static    # send matching requests to the static backend
        use_backend dynamic if url_dynamic   # send matching requests to the dynamic backend
        default_backend static               # backend used when no rule matches

    #---------------------------------------------------------------------
    # static backend for serving up images, stylesheets and such
    #---------------------------------------------------------------------
    backend static
        # scheduling algorithm; others include static-rr, leastconn, first, source, uri
        balance roundrobin
        server static 192.168.10.125:80 inter 1500 rise 2 fall 3 check
        # add a response header so it is visible that the static server answered
        # (rspadd was removed in HAProxy 2.1; newer versions use http-response add-header)
        rspadd X-Via:static

    #---------------------------------------------------------------------
    # source-hash balancing for the dynamic backend
    #---------------------------------------------------------------------
    backend dynamic
        balance source
        server s2 172.16.10.12:80 check inter 1500 rise 2 fall 3
        # inter 1500: health-check interval in milliseconds
        # rise 2: the server is considered available after 2 successful checks
        # fall 3: the server is considered unavailable after 3 failed checks

    #---------------------------------------------------------------------
    # statistics page defined with a listen section
    #---------------------------------------------------------------------
    listen statistics
        mode http                            # HTTP (layer 7) mode
        bind *:9988                          # listening address
        stats enable                         # enable the statistics page
        stats auth admin:admin               # user name and password
        stats uri /admin?stats               # URI of the page
        stats hide-version                   # hide the version on the statistics page
        stats admin if TRUE                  # enable the admin functions of the page
        stats refresh 3s                     # refresh every 3 seconds
        acl allow src 192.168.18.0/24        # source addresses allowed to connect
        tcp-request content accept if allow  # accept connections from the allowed range
        tcp-request content reject           # reject everything else
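To make the evaluation order of the frontend rules concrete, here is a rough Python sketch of how a request path ends up on a backend. It is an illustration only: the function name `choose_backend` is hypothetical, the image prefix is assumed to be `/images`, and real HAProxy ACL evaluation has many more features than this.

```python
from urllib.parse import urlsplit

# Prefix and suffix lists mirroring the url_static and url_dynamic ACLs.
STATIC_PREFIXES = ("/static", "/images", "/javascript", "/stylesheets")
STATIC_SUFFIXES = (".jpg", ".gif", ".png", ".css", ".js", ".html", ".ico")
DYNAMIC_SUFFIXES = (".php", ".jsp", ".asp")


def choose_backend(url: str) -> str:
    """Return the backend name a request would be routed to (hypothetical helper)."""
    # Only the path is matched, without the query string; -i means case-insensitive.
    path = urlsplit(url).path.lower()
    # Two acl lines with the same name are ORed together.
    if path.startswith(STATIC_PREFIXES) or path.endswith(STATIC_SUFFIXES):
        return "static"    # first use_backend rule
    if path.endswith(DYNAMIC_SUFFIXES):
        return "dynamic"   # second use_backend rule
    return "static"        # default_backend
```

Because `use_backend` rules are tried in order and the first match wins, a path such as `/static/app.php` goes to the static backend even though it ends in `.php`.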

III. A small example: separating static and dynamic content with HAProxy + Varnish

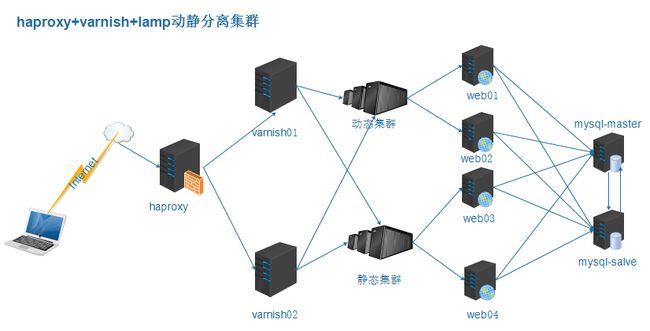

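Topology of the lab architecture:

Architecture notes: user requests arrive at the front end, where HAProxy dispatches them to the Varnish cache servers. When the requested resource is a cache hit and has not expired, Varnish answers the user directly. On a miss, matching rules configured on the two Varnish servers forward the request to the corresponding dynamic or static back-end servers. The dynamic and static back-end servers all share one resource directory over NFS and store their data on a separate MySQL server. To avoid a single point of failure, MySQL runs as a master/slave pair: the master handles both reads and writes, while the slave is read-only. In the same way, the front-end HAProxy proxy can be made redundant if required.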

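The lab environment is as follows:

Front end: HAProxy

1. Servers it dispatches to: Varnish1, Varnish2

2. Scheduling algorithm: consistent URL hashing (URL_Hash_Consistent)

3. Cluster statistics page: ipaddr/haproxy?admin

Cache servers: Varnish

1. VarnishServer01

2. VarnishServer02

3. Health checks enabled to provide high availability

4. Load balancing across the back-end web server groups

5. Static and dynamic requests are sent to separate back-end server groups, each of which is load balanced

Back-end servers:

StaticServer01

StaticServer02

DynamicServer01

DynamicServer02

MySQL servers:

MysqlServer-master

MysqlServer-slave

The HAProxy configuration is as follows: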

    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        # to have these messages end up in /var/log/haproxy.log you will
        # need to:
        #
        # 1) configure syslog to accept network log events. This is done
        #    by adding the '-r' option to the SYSLOGD_OPTIONS in
        #    /etc/sysconfig/syslog
        #
        # 2) configure local2 events to go to the /var/log/haproxy.log
        #    file. A line like the following can be added to
        #    /etc/sysconfig/syslog
        #
        #    local2.*                       /var/log/haproxy.log
        #
        log         127.0.0.1 local2
        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8
        option                  redispatch
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000

    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend web
        bind *:80
        #acl url_static path_beg -i /static /images /javascript /stylesheets
        #acl url_static path_end -i .jpg .gif .png .css .js
        #use_backend static if url_static
        use_backend varnish_srv

    #---------------------------------------------------------------------
    # varnish server balance method
    #---------------------------------------------------------------------
    backend varnish_srv                       # the group of Varnish back-end hosts
        balance uri                           # hash on the request URI
        hash-type consistent                  # use consistent hashing
        server varnish1 10.1.10.6:9988 check  # Varnish server 1, with health checks
        server varnish2 10.1.10.7:9988 check  # Varnish server 2, with health checks

    listen stats                              # statistics and management page
        bind :9002
        stats uri /alren?admin                # URI of the page
        stats hide-version                    # hide the version information
        stats auth admin:alren                # require authentication
        stats admin if TRUE                   # enable the admin functions of the page
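The reason for combining `balance uri` with `hash-type consistent` is that each URL should keep landing on the same Varnish server, so its cache stays warm even when the server list changes. The following Python sketch shows the general idea of a consistent hash ring. It is not HAProxy's algorithm: the class `ConsistentHashRing`, the use of SHA-256, and the 100 points per server are all assumptions made for illustration.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Minimal consistent hash ring (hypothetical, for illustration only)."""

    def __init__(self, servers, points_per_server=100):
        # Each server is placed on the ring at many pseudo-random points.
        ring = []
        for server in servers:
            for i in range(points_per_server):
                ring.append((self._hash(f"{server}#{i}"), server))
        ring.sort()
        self._ring = ring
        self._keys = [point for point, _ in ring]

    @staticmethod
    def _hash(value: str) -> int:
        # Take the first 8 bytes of SHA-256 as a 64-bit position on the ring.
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def pick(self, url: str) -> str:
        # Walk clockwise to the first server point after the URL's position,
        # wrapping around to the start of the ring if necessary.
        idx = bisect.bisect(self._keys, self._hash(url)) % len(self._ring)
        return self._ring[idx][1]


ring = ConsistentHashRing(["varnish1", "varnish2"])
chosen = ring.pick("/static/logo.png")  # the same URL always maps to the same server
```

With this layout, adding or removing one server only remaps the URLs that fall next to that server's points; with a plain modulo hash, most URLs would move and both caches would go cold.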

The Varnish configuration is as follows:

    # This is an example VCL file for Varnish.
    #
    # It does not do anything by default, delegating control to the
    # builtin VCL. The builtin VCL is called when there is no explicit
    # return statement.
    #
    # See the VCL chapters in the Users Guide at https://www.varnish-cache.org/docs/
    # and http://varnish-cache.org/trac/wiki/VCLExamples for more examples.

    # Marker to tell the VCL compiler that this VCL has been adapted to the
    # new 4.0 format.
    vcl 4.0;            # VCL version

    import directors;   # load the directors module

    acl purges {        # clients allowed to send PURGE requests
        "127.0.0.1";
        "10.1.10.0"/24; # assumed to mean the whole 10.1.10.0/24 network
    }

    # The health-check settings belong inside a .probe block,
    # and .host takes the address without the port.
    backend web1 {
        .host = "10.1.10.68";
        .port = "80";
        .probe = {
            .url = "/heath.php";
            .timeout = 2s;
            .interval = 1s;
            .window = 6;
            .threshold = 3;
            .expected_response = 200;
            .initial = 2;
        }
    }

    backend web2 {
        .host = "10.1.10.69";
        .port = "80";
        .probe = {
            .url = "/heath.php";
            .timeout = 2s;
            .interval = 1s;
            .window = 6;
            .threshold = 3;
            .expected_response = 200;
            .initial = 2;
        }
    }

    backend app1 {
        .host = "10.1.10.70";
        .port = "80";
        .probe = {
            .url = "/heath.html";
            .timeout = 2s;
            .interval = 1s;
            .window = 6;
            .threshold = 3;
            .expected_response = 200;
            .initial = 2;
        }
    }

    backend app2 {
        .host = "10.1.10.71";
        .port = "80";
        .probe = {
            .url = "/heath.html";
            .timeout = 2s;
            .interval = 1s;
            .window = 6;
            .threshold = 3;
            .expected_response = 200;
            .initial = 2;
        }
    }

    sub vcl_init {
        new webcluster = directors.round_robin();
        webcluster.add_backend(web1);
        webcluster.add_backend(web2);

        new appcluster = directors.round_robin();
        appcluster.add_backend(app1);
        appcluster.add_backend(app2);
    }

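    sub vcl_recv {
        # Only clients listed in the purges ACL may purge cached objects.
        if (req.method == "PURGE") {
            if (!client.ip ~ purges) {
                return (synth(405, "You don't have permission to purge from " + client.ip));
            }
            return (purge);
        }

        # Dynamic requests go to appcluster; everything else goes to webcluster.
        # Without the else branch, other requests would fall back to the first
        # backend defined (web1) and never use the webcluster director.
        if (req.url ~ "(?i)\.(php|asp|aspx|jsp)($|\?)") {
            set req.backend_hint = appcluster.backend();
        } else {
            set req.backend_hint = webcluster.backend();
        }

        # Pipe unknown request methods straight through to the backend.
        if (req.method != "GET" &&
            req.method != "HEAD" &&
            req.method != "PUT" &&
            req.method != "POST" &&
            req.method != "TRACE" &&
            req.method != "OPTIONS" &&
            req.method != "PATCH" &&
            req.method != "DELETE") {
            return (pipe);
        }

        # Only GET and HEAD responses are cached.
        if (req.method != "GET" && req.method != "HEAD") {
            return (pass);
        }

        # Do not cache requests that carry credentials or cookies.
        if (req.http.Authorization || req.http.Cookie) {
            return (pass);
        }

        # Normalize Accept-Encoding to reduce the number of cached variants.
        if (req.http.Accept-Encoding) {
            if (req.url ~ "\.(bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)$") {
                unset req.http.Accept-Encoding;
            } elseif (req.http.Accept-Encoding ~ "gzip") {
                set req.http.Accept-Encoding = "gzip";
            } elseif (req.http.Accept-Encoding ~ "deflate") {
                set req.http.Accept-Encoding = "deflate";
            } else {
                unset req.http.Accept-Encoding;
            }
        }

        return (hash);
    }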

    sub vcl_pipe {
        return (pipe);
    }

    sub vcl_miss {
        return (fetch);
    }

    sub vcl_hash {
        hash_data(req.url);
        if (req.http.host) {
            hash_data(req.http.host);
        } else {
            hash_data(server.ip);
        }
        if (req.http.Accept-Encoding ~ "gzip") {
            hash_data("gzip");
        } elseif (req.http.Accept-Encoding ~ "deflate") {
            hash_data("deflate");
        }
    }

    sub vcl_backend_response {
        if (beresp.http.cache-control !~ "s-maxage") {
            if (bereq.url ~ "(?i)\.(jpg|jpeg|png|gif|css|js|html|htm)$") {
                unset beresp.http.Set-Cookie;
                set beresp.ttl = 3600s;
            }
        }
    }

    sub vcl_purge {
        return (synth(200, "Purged"));
    }

    sub vcl_deliver {
        if (obj.hits > 0) {
            set resp.http.X-Cache = "HIT via " + req.http.host;
            set resp.http.X-Cache-Hits = obj.hits;
        } else {
            set resp.http.X-Cache = "MISS via " + req.http.host;
        }
    }
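The decision flow of `vcl_recv` above can be hard to follow at a glance, so here is a rough Python paraphrase of it. This is a reading aid, not Varnish code: the function `classify` and its parameters are hypothetical, Accept-Encoding normalization is left out, and it assumes that requests not matching the dynamic pattern are meant for `webcluster`.

```python
import re

# Same pattern as the VCL: a dynamic extension at the end of the URL or before "?".
DYNAMIC_RE = re.compile(r"\.(php|asp|aspx|jsp)($|\?)", re.IGNORECASE)
KNOWN_METHODS = {"GET", "HEAD", "PUT", "POST", "TRACE", "OPTIONS", "PATCH", "DELETE"}


def classify(method, url, headers=None, client_allowed=False):
    """Return (action, cluster) roughly as vcl_recv would decide (hypothetical helper)."""
    headers = headers or {}
    if method == "PURGE":
        # Only clients in the purges ACL may purge; others get a synthetic error.
        return ("purge" if client_allowed else "synth", None)
    cluster = "appcluster" if DYNAMIC_RE.search(url) else "webcluster"
    if method not in KNOWN_METHODS:
        return ("pipe", cluster)   # unknown method: hand the connection through
    if method not in ("GET", "HEAD"):
        return ("pass", cluster)   # never cache writes
    if "Authorization" in headers or "Cookie" in headers:
        return ("pass", cluster)   # never cache personalized requests
    return ("hash", cluster)       # eligible for a cache lookup
```

For example, `classify("GET", "/index.php?x=1")` gives a cache lookup against `appcluster`, while the same request with a `Cookie` header is passed to the backend uncached.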

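Once the above is done, configure the web services on top of NFS with real-time data synchronization (rsync + inotify), start MySQL, create the database and grant privileges to a user, and then deploy WordPress or another application. These steps are straightforward and are not covered here. This architecture does have a shortcoming: any single point of failure can cause user requests to fail.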

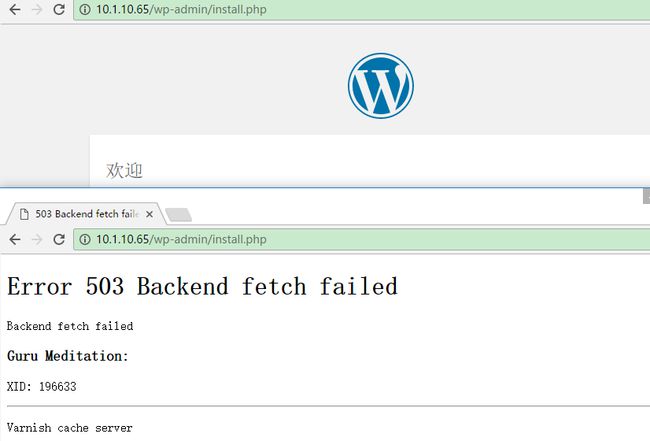

The result is shown below:

Screenshot of the test after stopping the NFS server: