Text editing controls

UITextView

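UITextView has an annoying quirk: tapping the Done (return) key on the keyboard does not end editing or dismiss the keyboard, so the following delegate method is not called: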

- (void)textViewDidEndEditing:(UITextView *)textView{

}

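No matter how many times you tap Done, the text view just inserts a line break.

So don't waste time wondering whether you forgot to set the UITextView delegate or whether Xcode is broken. To dismiss the keyboard when Done is tapped, use a different approach:

In the UITextView delegate method textView:shouldChangeTextInRange:replacementText:, check whether the text being entered is the newline character '\n'. If it is, dismiss the keyboard and end editing: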

- (BOOL)textView:(UITextView *)textView shouldChangeTextInRange:(NSRange)range replacementText:(NSString *)text {

if ([text isEqualToString:@"\n"]) {

[textView resignFirstResponder];

return NO;

}

return YES;

}

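Calling [textView resignFirstResponder] is what ends editing. The text view gives up first-responder status, the keyboard is dismissed, and UIKit then calls - (void)textViewDidEndEditing:(UITextView *)textView. Returning NO from shouldChangeTextInRange only keeps the newline from being inserted into the text view's text.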

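Limiting the number of characters in a UITextView or UITextField

When you need to limit the length of a UITextView, this is the method that usually comes to mind first: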

- (BOOL)textView:(UITextView *)textView shouldChangeTextInRange:(NSRange)range replacementText:(NSString *)text {

}

For a UITextField, the analogous method comes to mind:

- (BOOL)textField:(UITextField *)textField shouldChangeCharactersInRange:(NSRange)range replacementString:(NSString *)string {


}

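If you try them, though, you'll find that these two methods don't fully enforce the limit. For example:

- When typing Chinese and the limit has been reached, tapping a candidate suggested by the input method still inserts it into the UITextField or UITextView.

- When the limit has been reached, you can still enter emoji after tapping a suggested candidate.

Try it yourself if you don't believe me!

To solve this problem, I use the following approach: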

- UIKit provides notifications for both UITextView and UITextField that report their editing state:

UITextView

UIKIT_EXTERN NSNotificationName const UITextViewTextDidBeginEditingNotification;

UIKIT_EXTERN NSNotificationName const UITextViewTextDidChangeNotification;

UIKIT_EXTERN NSNotificationName const UITextViewTextDidEndEditingNotification;

UITextField

UIKIT_EXTERN NSNotificationName const UITextFieldTextDidBeginEditingNotification;

UIKIT_EXTERN NSNotificationName const UITextFieldTextDidEndEditingNotification;

UIKIT_EXTERN NSNotificationName const UITextFieldTextDidChangeNotification;

UIKIT_EXTERN NSString *const UITextFieldDidEndEditingReasonKey NS_AVAILABLE_IOS(10_0);

- Add your own observer for the editing state of the UITextView or UITextField, and call the corresponding method:

[[NSNotificationCenter defaultCenter]

addObserver:self

selector:@selector(textViewEditChanged:)

name:UITextViewTextDidChangeNotification

object:self.textInput];

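First, a few concepts need to be clear:

UITextInput protocol: a protocol that both UITextView and UITextField conform to.

markedText: text that has been entered into the input field but not yet confirmed. An example is the pinyin that an input method is still composing.

UITextRange: classes that adopt the UITextInput protocol must provide their own subclasses of this class and of UITextPosition. A UITextRange represents a range of text in the text container of a UITextField or UITextView.

UITextPosition: classes that adopt the UITextInput protocol must provide their own subclasses of this class and of UITextRange. A UITextPosition represents a position in the text container of a UITextView or UITextField.

Apple's documentation says that the text input system uses these position objects, together with UITextRange objects, to communicate text-layout information. It also gives two reasons for representing text positions as objects rather than as primitive types such as NSInteger.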

- (void)textViewEditChanged:(NSNotification *)obj {

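UITextView *textView = (UITextView *)obj.object;

NSString *toBeString = textView.text;

// markedTextRange is non-nil while an input method (e.g. pinyin) is still composing text

UITextRange *selectedRange = [textView markedTextRange];

UITextPosition *position =

[textView positionFromPosition:selectedRange.start offset:0];

// Only enforce the limit when there is no marked (unconfirmed) text

if (!position) {

if (toBeString.length > 200) {

NSRange rangeIndex =

[toBeString rangeOfComposedCharacterSequenceAtIndex:200];

// The character at index 200 is a single UTF-16 unit (e.g. a Chinese or English character): cut directly at the limit

if (rangeIndex.length == 1) {

textView.text = [toBeString substringToIndex:200];

} else {

// Otherwise it is a multi-unit sequence such as an emoji: cut at a range that keeps every composed character whole

NSRange rangeRange = [toBeString

rangeOfComposedCharacterSequencesForRange:NSMakeRange(0, 200)];

textView.text = [toBeString substringWithRange:rangeRange];

}

// showToastMessage:subTitle:type: and MessageTypeFailed come from a custom category in the author's project, not from UIKit

[[UIApplication sharedApplication].keyWindow

showToastMessage:@"Notice"

subTitle:@"The description can be at most 200 characters"

type:MessageTypeFailed];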

}

}

}

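Finally, here is what rangeOfComposedCharacterSequenceAtIndex: is for.

NSString's length counts UTF-16 code units. A common Chinese character or an English letter has a length of 1. Most emoji have a length of 2 or more: a skin-tone emoji or a flag is 4, and sequences joined with zero-width joiners are longer still. Calling substringToIndex: at an arbitrary index can therefore cut an emoji in half and leave garbled text.

That is why rangeOfComposedCharacterSequenceAtIndex: and rangeOfComposedCharacterSequencesForRange: are used. They return ranges aligned to whole composed characters, so no character is split.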
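The `else` branch in the handler uses rangeOfComposedCharacterSequencesForRange:, which expands the requested range to cover whole sequences. If an emoji straddles index 200, the resulting text can therefore be slightly longer than the limit.

A simpler variant is sketched below. LimitedString is a hypothetical helper name, not part of the original code, and it relies on Foundation only. It cuts at the start of the composed character sequence that contains the limit index. As a result it never exceeds the limit and never splits a character.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the original code): returns `text`
// shortened to at most `maxLength` UTF-16 code units, which is what
// NSString's -length counts, without splitting a composed character
// sequence such as an emoji.
static NSString *LimitedString(NSString *text, NSUInteger maxLength) {
    if (text.length <= maxLength) {
        return text;  // already within the limit, nothing to cut
    }
    // The composed character sequence that contains index maxLength.
    // If it starts before maxLength, cutting at maxLength would split it,
    // so cut at the start of that sequence instead.
    NSRange sequence = [text rangeOfComposedCharacterSequenceAtIndex:maxLength];
    return [text substringToIndex:sequence.location];
}
```

In the notification handler, the if/else inside `if (toBeString.length > 200)` could then be replaced by a single assignment of `LimitedString(toBeString, 200)` to the view's text. For example, limiting @"ab😀" to 3 units gives @"ab", because keeping the emoji would take 4 units.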

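Screenshot and image stitching

Our requirement was to stitch many images into a single image and share it on social platforms. What gets shared is the already-stitched image, and the user never sees it inside the app.

Here is how it works:

Load all the images into an array → compute the total height of the images stacked together (each scaled to the screen width) → set the canvas size → draw the images onto the canvas (UIGraphicsBeginImageContextWithOptions) → finish drawing (UIGraphicsEndImageContext)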
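The "compute the total height" step is plain arithmetic. Each image is scaled to the canvas width (ScreenWidth in the code below) with its aspect ratio preserved, so its drawn height is height × (canvasWidth / width). Each image starts where the previous one ended.

The sketch below assumes a hypothetical helper named StitchLayout. It works on plain CGSize values so it can be checked without UIKit.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of the original code). `sizes` holds the
// original size of each image in display order. Every image is scaled to
// `canvasWidth` with its aspect ratio preserved and stacked top to bottom;
// the y origin of image i is written to origins[i], and the total canvas
// height is returned.
static CGFloat StitchLayout(const CGSize *sizes, NSUInteger count,
                            CGFloat canvasWidth, CGFloat *origins) {
    CGFloat y = 0;
    for (NSUInteger i = 0; i < count; i++) {
        origins[i] = y;  // each image starts where the previous one ended
        // scaled height = original height * (canvas width / original width)
        y += sizes[i].height * (canvasWidth / sizes[i].width);
    }
    return y;
}
```

Take two images of 750×1000 and 1500×600 on a 375-point-wide canvas. Their scaled heights are 500 and 150, so the origins are 0 and 500 and the canvas is 650 points tall. The returned height, plus the scaled height of anything appended below (such as the QR code), is what the canvas size passed to UIGraphicsBeginImageContextWithOptions needs.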

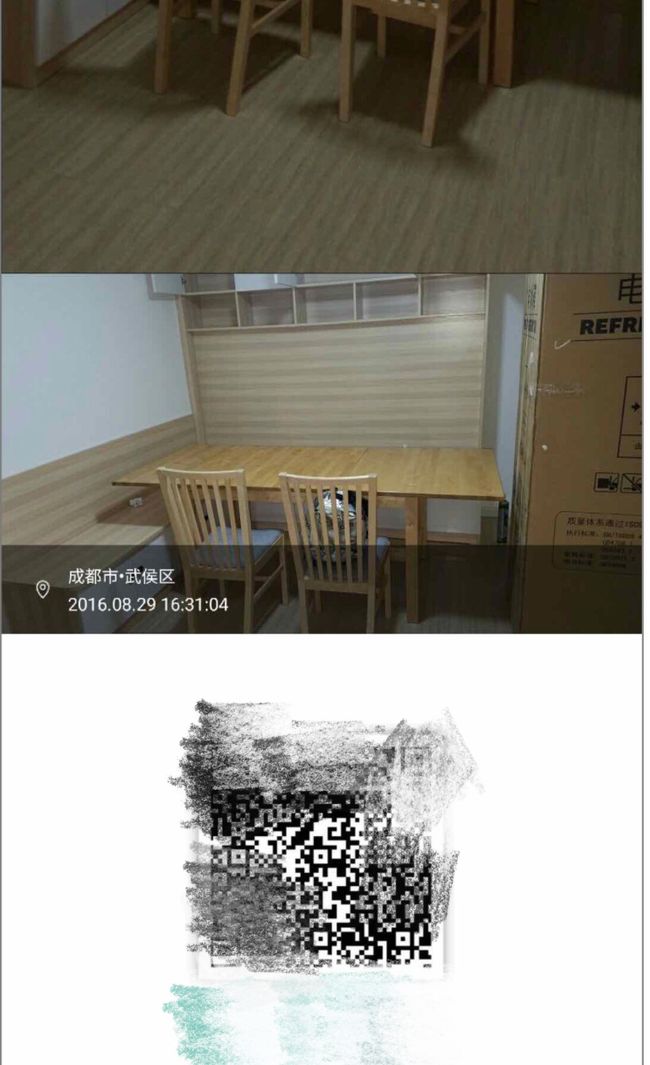

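The code below is a bit more involved. Besides stitching the images together, it also adds a QR code and the location information, as shown in the figure:

// Stitch multiple images into one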

if (resources.count > 0) {

__block NSMutableArray *images = [NSMutableArray array];

__block CGFloat imageHeight = 0.0;

dispatch_group_t group = dispatch_group_create();

NSUInteger count = resources.count;

for (unsigned int i = 0; i < count; i++) {

dispatch_group_enter(group);

[[SDWebImageDownloader sharedDownloader] downloadImageWithURL:[NSURL URLWithString:resources[i]] options:0 progress:nil completed:^(UIImage *image, NSData *data, NSError *error, BOOL finished) {

if (image) {

[images addObject:image]; // note: downloads can finish in any order, so this order may differ from resources

imageHeight = imageHeight + (image.size.height * (ScreenWidth / image.size.width)) ;

}

dispatch_group_leave(group);

}];

}

LocationAndDateView *view = [LocationAndDateView viewFromXib];

[view bindData:info];

CGSize locationImageSize = CGSizeMake(ScreenWidth, CGRectGetHeight(view.bounds));

UIGraphicsBeginImageContextWithOptions(locationImageSize, NO, [UIScreen mainScreen].scale);

[view drawViewHierarchyInRect:CGRectMake(0, 0, ScreenWidth, CGRectGetHeight(view.bounds)) afterScreenUpdates:YES];

UIImage *locationImage = UIGraphicsGetImageFromCurrentImageContext();

UIGraphicsEndImageContext();

UIImage *QRImage = [UIImage imageNamed:@"shareImage_QR"];


dispatch_group_notify(group, dispatch_get_main_queue(), ^{ // this block updates UI properties, so run it on the main queue

self.topContainerHeight.constant = imageHeight;

CGSize size = CGSizeMake(ScreenWidth, imageHeight +QRImage.size.height * (ScreenWidth / QRImage.size.width));

UIGraphicsBeginImageContextWithOptions(size, NO, [UIScreen mainScreen].scale);

CGFloat lastImageHeight = 0.0;

for (unsigned int i = 0; i < images.count; i++) {

UIImage *image = images[i];

[image drawInRect:CGRectMake(0, lastImageHeight, ScreenWidth , (image.size.height * (ScreenWidth / image.size.width)))];

lastImageHeight = lastImageHeight + (image.size.height * (ScreenWidth / image.size.width));

}

[locationImage drawInRect:CGRectMake(0, imageHeight - locationImage.size.height, ScreenWidth , locationImage.size.height)];

[QRImage drawInRect:CGRectMake(0, lastImageHeight, ScreenWidth, QRImage.size.height * (ScreenWidth / QRImage.size.width))];

UIImage *stitchImage = UIGraphicsGetImageFromCurrentImageContext();

UIGraphicsEndImageContext();

self.headerImageView.image = stitchImage;

self.headerImageView.contentMode = UIViewContentModeScaleAspectFit;

self.headerImageView.clipsToBounds = YES;

[self.topContainer setBackgroundColor:[UIColor colorWithPatternImage:stitchImage]];

dispatch_async(dispatch_get_main_queue(), ^{

completion(stitchImage);

});

});

} else { // Sharing text only: render the view itself into one image

self.topContainerHeight.constant = ScreenHeight / 2 - 40;

self.headerImageView.image = [UIImage imageNamed:@"bg_scenic_header"];

UIGraphicsBeginImageContextWithOptions(self.bounds.size, NO,

[UIScreen mainScreen].scale);

[self drawViewHierarchyInRect:self.bounds afterScreenUpdates:YES];

UIImage *screenshot = UIGraphicsGetImageFromCurrentImageContext();

UIGraphicsEndImageContext();

completion(screenshot);

}
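In the code above, each downloaded image is appended as soon as its download finishes. The stitched order can therefore differ from the order of `resources`.

One common way to keep the order is to store each result at its original index and read the slots only after the dispatch group has finished. The sketch below shows that pattern. It swaps in a generic callback in place of the SDWebImage call so it can run on its own. LoadAllInOrder, OrderedLoadTask and OrderedLoadDone are hypothetical names.

```objc
#import <Foundation/Foundation.h>

// Hypothetical callback types for this sketch (not from the original project).
typedef void (^OrderedLoadDone)(id result);  // pass nil on failure; call exactly once
typedef void (^OrderedLoadTask)(NSUInteger index, OrderedLoadDone done);

// Starts `count` asynchronous loads and calls `completion` on `notifyQueue`
// once all of them have finished. Results come back in index order, not in
// the order the loads happened to finish; loads that pass nil are skipped.
static void LoadAllInOrder(NSUInteger count,
                           OrderedLoadTask task,
                           dispatch_queue_t notifyQueue,
                           void (^completion)(NSArray *results)) {
    // One slot per index, pre-filled with NSNull as a "no result" marker.
    NSMutableArray *slots = [NSMutableArray arrayWithCapacity:count];
    for (NSUInteger i = 0; i < count; i++) {
        [slots addObject:[NSNull null]];
    }
    // Serial queue guarding `slots`, since loads may finish on any thread.
    dispatch_queue_t slotQueue =
        dispatch_queue_create("ordered.load.slots", DISPATCH_QUEUE_SERIAL);
    dispatch_group_t group = dispatch_group_create();

    for (NSUInteger i = 0; i < count; i++) {
        dispatch_group_enter(group);
        task(i, ^(id result) {
            dispatch_async(slotQueue, ^{
                if (result) {
                    slots[i] = result;  // store at the original index
                }
                dispatch_group_leave(group);  // leave only after the write
            });
        });
    }

    dispatch_group_notify(group, notifyQueue, ^{
        NSMutableArray *results = [NSMutableArray array];
        for (id item in slots) {
            if (item != [NSNull null]) {
                [results addObject:item];
            }
        }
        completion(results);
    });
}
```

In the stitching code, `task` would start the SDWebImageDownloader request for `resources[index]` and pass the downloaded image (or nil) to `done`. `notifyQueue` would be the main queue, because the completion block updates UI. The height calculation would then run over the ordered array.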

I wrote a simple demo of this image stitching: GitHub link