1. Abstract

Neural machine translation is a recently proposed approach to machine translation.

In this paper, we conjecture that the use of a fixed-length vector is a bottleneck in improving the performance of this basic encoder–decoder architecture, and propose to extend this by allowing a model to automatically (soft-)search for parts of a source sentence that are relevant to predicting a target word, without having to form these parts as a hard segment explicitly.

2. Introduction

Most of the proposed neural machine translation models belong to a family of encoder–decoders.

A potential issue with this encoder–decoder approach is that a neural network needs to be able to compress all the necessary information of a source sentence into a fixed-length vector. As a result, the performance of a basic encoder–decoder deteriorates rapidly as the length of an input sentence increases.

The proposed method works as follows:

- Each time the proposed model generates a word in a translation, it (soft-)searches for a set of positions in a source sentence where the most relevant information is concentrated.

- The model then predicts a target word based on the context vectors associated with these source positions and all the previously generated target words.

The most important distinguishing feature of this approach from the basic encoder–decoder is that:

/CORE/

It does not attempt to encode a whole input sentence into a single fixed-length vector. Instead, it encodes the input sentence into a sequence of vectors and chooses a subset of these vectors adaptively while decoding the translation.

This frees a neural translation model from having to squash all the information of a source sentence, regardless of its length, into a fixed-length vector.

/CORE/

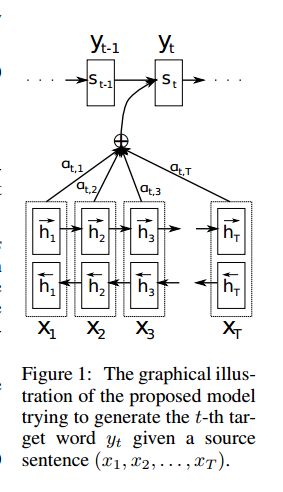

3. Learning to Align and Translate

The new architecture consists of a bidirectional RNN as an encoder and a decoder that emulates searching through a source sentence during decoding a translation.

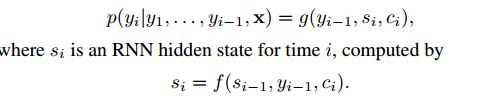

3.1 Decoder

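In the traditional encoder–decoder, the conditional probability of each target word is:

$$
p(y_t \mid y_1, \ldots, y_{t-1}, c) = g(y_{t-1}, s_t, c)
$$

where g is a nonlinear, potentially multi-layered, function that outputs the probability of yt, st is the hidden state of the RNN, and c is the single fixed-length context vector produced by the encoder.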

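In the new model, each conditional probability is instead defined as:

$$
p(y_i \mid y_1, \ldots, y_{i-1}, x) = g(y_{i-1}, s_i, c_i) \tag{4}
$$

where si is the RNN hidden state for time i, computed as si = f(si−1, yi−1, ci).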

It should be noted that, unlike the existing encoder–decoder approach, here the probability is conditioned on a distinct context vector ci for each target word yi.

The context vector ci depends on a sequence of annotations (h1,...,hTx) to which an encoder maps the input sentence.

Each annotation hi contains information about the whole input sequence with a strong focus on the parts surrounding the i-th word of the input sequence.

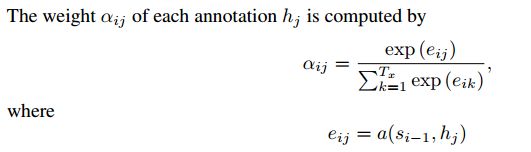

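The context vector ci is computed as a weighted sum of these annotations hj:

$$
c_i = \sum_{j=1}^{T_x} \alpha_{ij} h_j
$$

The weight αij of each annotation hj is computed by

$$
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k=1}^{T_x} \exp(e_{ik})}
$$

where

$$
e_{ij} = a(s_{i-1}, h_j)
$$

is an alignment model which scores how well the inputs around position j and the output at position i match. The score is based on the RNN hidden state si−1 (just before emitting yi, Eq. (4)) and the j-th annotation hj of the input sentence.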

We parametrize the alignment model a as a feedforward neural network which is jointly trained with all the other components of the proposed system.

Let αij be a probability that the target word yi is aligned to, or translated from, a source word xj. Then, the i-th context vector ci is the expected annotation over all the annotations with probabilities αij.
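To make the "expected annotation" reading concrete, here is a minimal pure-Python sketch. It assumes the weights αij come from a softmax over raw alignment scores, as in the original paper. The function names and the toy values are illustrative only.

```python
import math

def softmax(scores):
    """Normalize raw alignment scores e_ij into weights alpha_ij that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def context_vector(scores, annotations):
    """Return (alpha, c_i): the weights and the weighted sum of the annotations."""
    alpha = softmax(scores)
    dim = len(annotations[0])
    c = [sum(a * h[d] for a, h in zip(alpha, annotations)) for d in range(dim)]
    return alpha, c

# Toy example (made-up values): three source positions, 2-dimensional annotations.
annotations = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [0.0, 0.0, 0.0]  # equal scores give uniform weights
alpha, c = context_vector(scores, annotations)
```

With equal scores every position gets the same weight, so ci is simply the mean of the annotations. Raising one score shifts ci toward the corresponding annotation.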

3.2 Encoder

We would like the annotation of each word to summarize not only the preceding words, but also the following words. Hence, we propose to use a bidirectional RNN.
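The following sketch shows one way such annotations can be built: run one RNN left to right and another right to left, then concatenate the two hidden states at each position. The paper uses gated hidden units; the element-wise tanh recurrence here, the shared scalar weights `w_in` and `w_rec`, and all values are simplifying assumptions for illustration only.

```python
import math

def rnn_states(inputs, w_in, w_rec):
    """Run a toy element-wise tanh RNN over `inputs` and return every hidden state."""
    h = [0.0] * len(inputs[0])
    states = []
    for x in inputs:
        h = [math.tanh(w_in * xi + w_rec * hi) for xi, hi in zip(x, h)]
        states.append(h)
    return states

def bidirectional_annotations(inputs, w_in=0.5, w_rec=0.5):
    """Concatenate forward and backward states so h_j sees both sides of word j."""
    forward = rnn_states(inputs, w_in, w_rec)
    # Process the reversed sentence, then flip back so index j lines up again.
    backward = rnn_states(inputs[::-1], w_in, w_rec)[::-1]
    return [f + b for f, b in zip(forward, backward)]

# Toy sentence of three 1-dimensional "word embeddings" (made-up values).
sentence = [[1.0], [0.0], [-1.0]]
annotations = bidirectional_annotations(sentence)
```

Each annotation is twice as wide as a single hidden state. The forward half of the first annotation depends only on the first word, while its backward half depends on the whole sentence.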

3.3 Architectural Choices

The alignment model should be designed considering that the model needs to be evaluated Tx × Ty times for each sentence pair of lengths Tx and Ty.
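One way to keep this cost down, following the single-layer form used in the original paper, a(si−1, hj) = vaᵀ tanh(Wa si−1 + Ua hj), is to compute the term Ua hj once per sentence, since it does not depend on the decoder step i. The sketch below is a minimal pure-Python illustration; the helper names and the toy weights are assumptions, not values from the paper.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def precompute_projections(U_a, annotations):
    """U_a h_j is independent of the decoder step, so compute it once per sentence."""
    return [matvec(U_a, h) for h in annotations]

def alignment_scores(v_a, W_a, s_prev, projected):
    """Scores e_ij for one decoder step, reusing the cached U_a h_j terms."""
    ws = matvec(W_a, s_prev)  # computed once per decoder step
    return [
        sum(v * math.tanh(a + b) for v, a, b in zip(v_a, ws, uh))
        for uh in projected
    ]

# Toy parameters (made-up values): identity projections and a 2-unit hidden layer.
W_a = [[1.0, 0.0], [0.0, 1.0]]
U_a = [[1.0, 0.0], [0.0, 1.0]]
v_a = [1.0, 1.0]
annotations = [[0.0, 0.0], [1.0, 1.0]]

projected = precompute_projections(U_a, annotations)  # once per sentence
scores = alignment_scores(v_a, W_a, [0.0, 0.0], projected)  # once per target word
```

With the projections cached, each of the Ty decoder steps needs only one matrix-vector product for Wa si−1, plus a cheap tanh and dot product for each of the Tx source positions.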

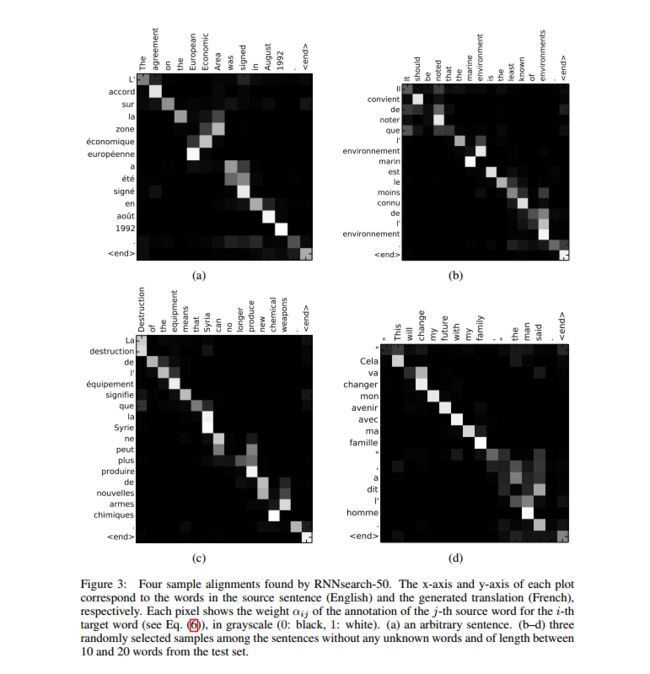

Result

In conjunction with the quantitative results presented already, these qualitative observations confirm our hypotheses that the RNNsearch architecture enables far more reliable translation of long sentences than the standard RNNenc model.

Related work

Conclusion

One of the challenges left for the future is to better handle unknown or rare words. This will be required for the model to be more widely used and to match the performance of current state-of-the-art machine translation systems in all contexts.