Part 4: Configuring the Kubernetes Cluster

Install kubectl on every machine that will be used to operate the cluster (usually the master hosts are enough).

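Master

The Master needs three components deployed: kube-apiserver, kube-scheduler, and kube-controller-manager.

kube-scheduler decides which node each pod is assigned to; put simply, it handles resource scheduling.

kube-controller-manager runs the control loops for the deployment controller, replication controller, endpoints controller, namespace controller, serviceaccounts controller, and so on, and interacts with kube-apiserver.

A Node needs kubelet and kube-proxy deployed.

kube-proxy: mainly responsible for implementing Services. Specifically, it provides access from pods to a Service inside the cluster and from a node port to a Service from outside.

kubelet: its main job is to periodically fetch, from some source, the desired state of the pods/containers on its node (which containers to run, how many replicas, how networking and storage are configured, and so on) and to call the corresponding container platform interfaces to reach that state. In cluster mode, kubelet reads this information from the master.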

Master node setup

1. Install the Kubernetes components

# Download the v1.11.1 server binaries and upload the components to each node being installed
cd /tmp && wget https://dl.k8s.io/v1.11.1/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz && cd kubernetes && cp -r server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubelet,kubeadm} /usr/local/bin/

scp server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 10.180.160.112:/usr/local/bin/
scp server/bin/{kube-proxy,kubelet} 10.180.160.113:/usr/local/bin/
scp server/bin/{kube-proxy,kubelet} 10.180.160.114:/usr/local/bin/
scp server/bin/{kube-proxy,kubelet} 10.180.160.115:/usr/local/bin/
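The copy step above repeats the same scp command per host, so it can also be driven by a small role-to-binaries mapping. The following is only a sketch under assumptions: the helper names binaries_for_role and distribute are hypothetical, the host lists mirror the scp commands above, and with DRY_RUN left at its default of 1 the script only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: role-based distribution of the Kubernetes binaries.
# Assumption: run from the extracted kubernetes/ directory, as in the step above.

MASTERS="10.180.160.112"
NODES="10.180.160.113 10.180.160.114 10.180.160.115"

# binaries_for_role (hypothetical helper): map a role to the binaries it needs.
binaries_for_role() {
  case "$1" in
    master) echo "kube-apiserver kube-controller-manager kube-scheduler kubectl kubeadm" ;;
    node)   echo "kube-proxy kubelet" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# distribute ROLE "HOST1 HOST2 ...": print (or run) one scp command per host.
distribute() (
  role="$1"
  hosts="$2"
  for host in $hosts; do
    files=""
    for bin in $(binaries_for_role "$role"); do
      files="$files server/bin/$bin"
    done
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp$files $host:/usr/local/bin/"
    else
      # $files is intentionally unquoted so that it splits into separate paths.
      scp $files "$host:/usr/local/bin/"
    fi
  done
)

distribute master "$MASTERS"
distribute node "$NODES"
```

Setting DRY_RUN=0 in the environment performs the actual copy; keeping the printed form first makes it easy to compare against the hand-written commands.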

2. Create the admin certificate

kubectl talks to kube-apiserver over the secure port, so a TLS certificate and key are needed for that communication.

cd /opt/ssl/ && vi admin-csr.json

{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShenZhen",
      "L": "ShenZhen",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}

# Generate the admin certificate and private key
cd /opt/ssl/

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/opt/ssl/config.json \
  -profile=kubernetes admin-csr.json | /opt/local/cfssl/cfssljson -bare admin

# Check the generated files
[root@kubernetes-110 ssl]# ls admin*
admin.csr admin-csr.json admin-key.pem admin.pem

cp admin*.pem /etc/kubernetes/ssl/
scp admin*.pem 10.180.160.112:/etc/kubernetes/ssl/

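3. Generate the kubernetes configuration file

The certificate-related configuration that is generated here is stored in the /root/.kube directory.

# Configure the kubernetes cluster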

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443

# Configure client authentication
kubectl config set-credentials admin \
  --client-certificate=/etc/kubernetes/ssl/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/ssl/admin-key.pem

kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin

kubectl config use-context kubernetes
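After these four commands it can be worth confirming that the context really landed in the kubeconfig file. The sketch below reads the current-context field directly, without calling kubectl. The helper name current_context is hypothetical, and the parsing assumes the plain "current-context: <name>" line that appears in a kubeconfig for a simple name such as kubernetes.

```shell
#!/bin/sh
# Sketch: print the current-context of a kubeconfig file.
# Assumption: the file path defaults to ~/.kube/config when no argument is given.

current_context() {
  awk '$1 == "current-context:" { print $2 }' "${1:-$HOME/.kube/config}"
}

# Only report when a kubeconfig actually exists on this machine.
if [ -f "$HOME/.kube/config" ]; then
  echo "current context: $(current_context)"
fi
```

With the commands above applied, the reported context would be expected to be kubernetes.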

4. Create the kubernetes certificate

cd /opt/ssl && vi kubernetes-csr.json

{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.180.160.110",
    "10.180.160.112",
    "10.180.160.113",
    "10.180.160.114",
    "10.180.160.115",
    "10.254.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShenZhen",
      "L": "ShenZhen",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

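## About the IPs in the hosts field: 127.0.0.1 is the local host; 10.180.160.110 and 10.180.160.112 are the Master IPs (with several Masters, every one must be listed); 10.254.0.1 is the IP of the kubernetes Service, which is normally the first IP of the service network. Once the cluster is up, it can be seen with kubectl get svc.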

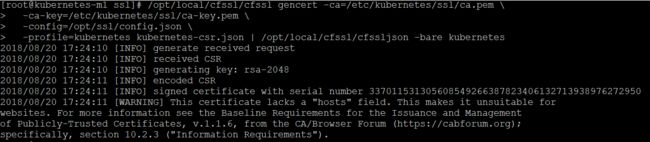
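The "first IP of the service network" rule can be checked with a little integer arithmetic. The sketch below assumes the service range 10.254.0.0/18 that is passed to kube-apiserver as --service-cluster-ip-range later in this part; the helper name first_service_ip is hypothetical and handles IPv4 only.

```shell
#!/bin/sh
# Sketch: compute the first usable address of an IPv4 CIDR (network address + 1).

first_service_ip() (
  cidr="$1"
  ip="${cidr%/*}"        # part before the slash, e.g. 10.254.0.0
  prefix="${cidr#*/}"    # part after the slash, e.g. 18
  IFS=.
  set -- $ip             # split the dotted quad into $1..$4
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  first=$(( (n & mask) + 1 ))
  echo "$(( (first >> 24) & 255 )).$(( (first >> 16) & 255 )).$(( (first >> 8) & 255 )).$(( first & 255 ))"
)

first_service_ip 10.254.0.0/18
```

For 10.254.0.0/18 this yields 10.254.0.1, the address listed in the hosts field of kubernetes-csr.json.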

5. Generate the kubernetes certificate and private key

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/opt/ssl/config.json \
  -profile=kubernetes kubernetes-csr.json | /opt/local/cfssl/cfssljson -bare kubernetes

6. Check the generated files

[root@kubernetes-110 ssl]# ls -lt kubernetes*
-rw-r--r-- 1 root root 1261 11月 16 15:12 kubernetes.csr
-rw------- 1 root root 1679 11月 16 15:12 kubernetes-key.pem
-rw-r--r-- 1 root root 1635 11月 16 15:12 kubernetes.pem
-rw-r--r-- 1 root root 475 11月 16 15:12 kubernetes-csr.json

# Copy to the certificate directory
cp kubernetes*.pem /etc/kubernetes/ssl/
scp kubernetes*.pem 10.180.160.112:/etc/kubernetes/ssl/

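7. Configure kube-apiserver

On first start, kubelet sends a TLS Bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the token it is configured with; if it does, a certificate and key are issued to the kubelet automatically.

# Generate a token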

[root@kubernetes-m1 ssl]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

827a9687e31668f263db7f1d90bbe890
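Two details of this command are easy to miss. First, od -t x groups bytes into machine words, while od -t x1 prints them byte by byte; both give 32 hex characters for 16 random bytes. Second, the upstream encryption-at-rest documentation generates the aescbc secret as a base64-encoded 32-byte key, because the secret field is read as base64. The sketch below shows both forms; the helper names gen_hex_token and gen_aescbc_key are hypothetical.

```shell
#!/bin/sh
# Sketch: two ways to generate random key material.

# 16 random bytes printed byte by byte as 32 lowercase hex characters.
gen_hex_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# 32 random bytes, base64 encoded (44 characters), following the upstream
# encryption-at-rest documentation for aescbc keys.
gen_aescbc_key() {
  head -c 32 /dev/urandom | base64
}

echo "hex token:  $(gen_hex_token)"
echo "aescbc key: $(gen_aescbc_key)"
```

Whichever form is used, the value placed in the secret field of encryption-config.yaml must be identical on every master.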

# Create the encryption-config.yaml configuration [remember to replace the secret with the token generated above]

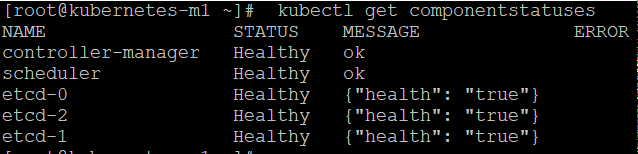

cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: 827a9687e31668f263db7f1d90bbe890
      - identity: {}
EOF

# Copy
cp encryption-config.yaml /etc/kubernetes/
scp encryption-config.yaml 10.180.160.112:/etc/kubernetes/

# Generate the advanced audit policy file

> Official documentation: https://kubernetes.io/docs/tasks/debug-application-cluster/audit/
>
> The following is a minimal audit logging policy

cd /etc/kubernetes

cat >> audit-policy.yaml <<EOF
# Log all requests at the Metadata level.
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
- level: Metadata
EOF

# Copy
scp audit-policy.yaml 10.180.160.112:/etc/kubernetes/

8. Create the kube-apiserver.service file

# Custom systemd service files normally live under /etc/systemd/system/
# Set the addresses to each host's own local IP

vi /etc/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
User=root
ExecStart=/usr/local/bin/kube-apiserver \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \
  --anonymous-auth=false \
  --experimental-encryption-provider-config=/etc/kubernetes/encryption-config.yaml \
  --advertise-address=10.180.160.110 \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kubernetes/audit.log \
  --authorization-mode=Node,RBAC \
  --bind-address=0.0.0.0 \
  --secure-port=6443 \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kubernetes.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kubernetes-key.pem \
  --enable-swagger-ui=true \
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  --etcd-certfile=/etc/kubernetes/ssl/etcd.pem \
  --etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \
  --etcd-servers=https://10.180.160.110:2379,https://10.180.160.112:2379,https://10.180.160.113:2379 \
  --event-ttl=1h \
  --kubelet-https=true \
  --insecure-bind-address=127.0.0.1 \
  --insecure-port=8080 \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-cluster-ip-range=10.254.0.0/18 \
  --service-node-port-range=30000-32000 \
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --enable-bootstrap-token-auth \
  --v=1
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# --enable-bootstrap-token-auth takes over the role of the earlier token.csv file; --experimental-encryption-provider-config only points to the configuration for encrypting Secrets
# Pay attention to --service-node-port-range=30000-32000
# This is the port range used when mapping external ports: randomly assigned ports are taken from this range, and explicitly specified ports must fall within it as well.

9. Start kube-apiserver

systemctl daemon-reload
systemctl enable kube-apiserver.service
systemctl start kube-apiserver.service
systemctl status kube-apiserver.service

# If it reports an error, use journalctl -f -t kube-apiserver and journalctl -u kube-apiserver to locate the problem

10. Configure kube-controller-manager

Two options are added so that certificates are renewed automatically:

--feature-gates=RotateKubeletServerCertificate=true
--experimental-cluster-signing-duration=86700h0m0s

# Create the kube-controller-manager.service file

vi /etc/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \
  --address=0.0.0.0 \
  --master=http://127.0.0.1:8080 \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=10.254.0.0/18 \
  --cluster-cidr=10.254.64.0/18 \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --feature-gates=RotateKubeletServerCertificate=true \
  --controllers=*,tokencleaner,bootstrapsigner \
  --experimental-cluster-signing-duration=86700h0m0s \
  --cluster-name=kubernetes \
  --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --root-ca-file=/etc/kubernetes/ssl/ca.pem \
  --leader-elect=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --pod-eviction-timeout=5m0s \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

Start kube-controller-manager

systemctl daemon-reload
systemctl enable kube-controller-manager.service
systemctl start kube-controller-manager.service
systemctl status kube-controller-manager.service

# If it reports an error, use journalctl -f -t kube-controller-manager and journalctl -u kube-controller-manager to locate the problem

11. Configure kube-scheduler

# Create the kube-scheduler.service file

vi /etc/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \
  --address=0.0.0.0 \
  --master=http://127.0.0.1:8080 \
  --leader-elect=true \
  --v=1
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

Start kube-scheduler

systemctl daemon-reload
systemctl enable kube-scheduler.service
systemctl start kube-scheduler.service
systemctl status kube-scheduler.service

12. Verify the Master nodes

[root@kubernetes-110 ~]# kubectl get componentstatuses
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}
etcd-1               Healthy   {"health": "true"}

[root@kubernetes-112 ~]# kubectl get componentstatuses
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}
etcd-1               Healthy   {"health": "true"}

----------------------- The Master node services are now configured -------------------------------------

Node services

1. Configure kubelet authentication

Authorize kube-apiserver to perform operations such as exec, run, and logs against the kubelet.

# The RBAC binding only needs to be created once
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

2. Create the bootstrap kubeconfig files

Note: a token is valid for 1 day. If it has not been used within that time it expires automatically and a new token must be created.

# Create a token for every kubelet in the cluster
[root@kubernetes-110 kubernetes]# kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-110 --kubeconfig ~/.kube/config
I0705 14:42:22.587674 90997 feature_gate.go:230] feature gates: &{map[]}
1jezb7.izm7refwnj3umncy

[root@kubernetes-110 kubernetes]# kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-112 --kubeconfig ~/.kube/config
I0705 14:42:30.553287 91021 feature_gate.go:230] feature gates: &{map[]}
1ua4d4.9bluufy3esw4lch6

[root@kubernetes-110 kubernetes]# kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-113 --kubeconfig ~/.kube/config
I0705 14:42:35.681003 91047 feature_gate.go:230] feature gates: &{map[]}
r8llj2.itme3y54ok531ops

[root@kubernetes-110 kubernetes]# kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-114 --kubeconfig ~/.kube/config
I0705 14:42:35.681003 91047 feature_gate.go:230] feature gates: &{map[]}
0c9wgx.hilomeeenx1ktenh

[root@kubernetes-110 kubernetes]# kubeadm token create --description kubelet-bootstrap-token --groups system:bootstrappers:kubernetes-115 --kubeconfig ~/.kube/config
I0705 14:42:35.681003 91047 feature_gate.go:230] feature gates: &{map[]}
us4icn.cifmgket4f1n3486

3. List the generated tokens

[root@kubernetes-110 ssl]# kubeadm token list --kubeconfig ~/.kube/config
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION               EXTRA GROUPS
045jch.kjk4acth1vaul6af   23h   2018-08-30T16:51:28+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:kubernetes-110
0c9wgx.hilomeeenx1ktenh   23h   2018-08-30T16:51:47+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:kubernetes-114
d54kcm.fykdwkdgkgl8g1fl   23h   2018-08-30T16:51:41+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:kubernetes-113
i0f9ep.ycmlkypo5ey9e9eq   23h   2018-08-30T16:51:35+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:kubernetes-112
us4icn.cifmgket4f1n3486   23h   2018-08-30T16:51:56+08:00   authentication,signing   kubelet-bootstrap-token   system:bootstrappers:kubernetes-115

4. To tell the files apart, each one is first generated as <node name>-bootstrap.kubeconfig [remember to substitute the matching token]

---- Generate kubernetes-110

# Generate the bootstrap.kubeconfig for 110
# Configure the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kubernetes-110-bootstrap.kubeconfig

# Configure client authentication (replace the TOKEN with the one for this server)
kubectl config set-credentials kubelet-bootstrap \
  --token=045jch.kjk4acth1vaul6af \
  --kubeconfig=kubernetes-110-bootstrap.kubeconfig

# Configure the context
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubernetes-110-bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=kubernetes-110-bootstrap.kubeconfig

# Copy the generated kubernetes-110-bootstrap.kubeconfig file
cp kubernetes-110-bootstrap.kubeconfig /etc/kubernetes/bootstrap.kubeconfig

---- Generate kubernetes-112

# Generate the bootstrap.kubeconfig for 112
# Configure the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kubernetes-112-bootstrap.kubeconfig

# Configure client authentication (replace the TOKEN with the one for this server)
kubectl config set-credentials kubelet-bootstrap \
  --token=1ua4d4.9bluufy3esw4lch6 \
  --kubeconfig=kubernetes-112-bootstrap.kubeconfig

# Configure the context
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubernetes-112-bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=kubernetes-112-bootstrap.kubeconfig

# Copy the generated kubernetes-112-bootstrap.kubeconfig file
scp kubernetes-112-bootstrap.kubeconfig 10.180.160.112:/etc/kubernetes/bootstrap.kubeconfig

---- Generate kubernetes-113

# Generate the bootstrap.kubeconfig for 113
# Configure the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kubernetes-113-bootstrap.kubeconfig

# Configure client authentication
kubectl config set-credentials kubelet-bootstrap \
  --token=r8llj2.itme3y54ok531ops \
  --kubeconfig=kubernetes-113-bootstrap.kubeconfig

# Configure the context
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubernetes-113-bootstrap.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=kubernetes-113-bootstrap.kubeconfig

# Copy the generated kubernetes-113-bootstrap.kubeconfig file
scp kubernetes-113-bootstrap.kubeconfig 10.180.160.113:/etc/kubernetes/bootstrap.kubeconfig

[--------- Repeat in the same way for the remaining nodes ------------------------]

5. Configure the bootstrap RBAC permission

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

# Without this binding the following error is reported (note)
failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "system:bootstrap:1jezb7" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

6. Create the ClusterRole that auto-approves the relevant CSR requests

vi /etc/kubernetes/tls-instructs-csr.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]

# Apply the yaml file
[root@kubernetes-110 opt]# kubectl apply -f /etc/kubernetes/tls-instructs-csr.yaml
clusterrole.rbac.authorization.k8s.io "system:certificates.k8s.io:certificatesigningrequests:selfnodeserver" created

# Inspect it
[root@kubernetes-110 opt]# kubectl describe ClusterRole/system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
Name:         system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
Labels:
Annotations:  kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"rbac.authorization.k8s.io/v1","kind":"ClusterRole","metadata":{"annotations":{},"name":"system:certificates.k8s.io:certificatesigningreq...
PolicyRule:
  Resources                                                       Non-Resource URLs  Resource Names  Verbs
  ---------                                                       -----------------  --------------  -----
  certificatesigningrequests.certificates.k8s.io/selfnodeserver  []                 []              [create]

7. Bind the ClusterRoles to the appropriate user groups

# Auto-approve the CSR with which users in the system:bootstrappers group request their first certificate during TLS bootstrapping
kubectl create clusterrolebinding node-client-auto-approve-csr --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --group=system:bootstrappers

# Auto-approve the CSR with which users in the system:nodes group renew the certificate kubelet uses to talk to the apiserver
kubectl create clusterrolebinding node-client-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:nodes

# Auto-approve the CSR with which users in the system:nodes group renew the certificate for the kubelet API on port 10250
kubectl create clusterrolebinding node-server-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeserver --group=system:nodes

9. Create the kubelet.service file

About the ROLES labels shown by kubectl get node [I have never fully understood these labels and will look into them more closely later]

Label a dedicated Master:
kubectl label node kubernetes-m1 \
  node-role.kubernetes.io/master=""

A dedicated Master then needs to be changed to NoSchedule; the command for that is:
kubectl taint nodes kubernetes-m1 node-role.kubernetes.io/master=:NoSchedule

Label a host that is both Master and node:
kubectl label node kubernetes-m2 node-role.kubernetes.io/master=""

Label a dedicated Node:
kubectl label node kubernetes-m3 node-role.kubernetes.io/node=""

To delete a label, append a - sign to the key, for example:
kubectl label nodes kubernetes-m2 node-role.kubernetes.io/node-

Dynamic kubelet configuration

Official documentation:
https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/
https://kubernetes.io/docs/tasks/administer-cluster/reconfigure-kubelet/

Upstream this is still only in beta. For the specific parameters of the dynamic configuration JSON, see
https://github.com/kubernetes/kubernetes/blob/release-1.10/pkg/kubelet/apis/kubeletconfig/v1beta1/types.go

10. Create the kubelet directory and service file

# /var/lib/kubelet is the WorkingDirectory referenced by the unit file below
mkdir -p /var/lib/kubelet

vi /etc/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --hostname-override=kubernetes-113 \
  --pod-infra-container-image=jicki/pause-amd64:3.1 \
  --bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.config.json \
  --cert-dir=/etc/kubernetes/ssl \
  --logtostderr=true \
  --v=2

[Install]
WantedBy=multi-user.target

# Create the kubelet config file
vi /etc/kubernetes/kubelet.config.json

{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "10.180.160.113",
  "port": 10250,
  "readOnlyPort": 0,
  "cgroupDriver": "cgroupfs",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "rotateCertificates": true,
  "featureGates": {
    "RotateKubeletClientCertificate": true,
    "RotateKubeletServerCertificate": true
  },
  "maxPods": 512,
  "failSwapOn": false,
  "containerLogMaxSize": "10Mi",
  "containerLogMaxFiles": 5,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.254.0.2"]
}

# In the configuration above:
kubernetes-113 is the local hostname
10.254.0.2 is the pre-allocated DNS address
cluster.local. is the domain of the kubernetes cluster
jicki/pause-amd64:3.1 is the base image for pods, i.e. the gcr image gcr.io/google_containers/pause-amd64:3.1; pulling it once and pushing it to your own registry is faster.

Start kubelet

systemctl daemon-reload
systemctl enable kubelet.service
systemctl start kubelet.service
systemctl status kubelet.service

# If it reports an error, use journalctl -f -t kubelet and journalctl -u kubelet to locate the problem

11. Verify the nodes

[root@kubernetes-110 ~]# kubectl get nodes
NAME             STATUS    ROLES     AGE       VERSION
kubernetes-113   Ready
kubernetes-114   Ready
kubernetes-115   Ready

12. Check the files generated by kubelet

[root@kubernetes-110 ~]# ls -lt /etc/kubernetes/ssl/kubelet-*
-rw------- 1 root root 1374 4月 23 11:55 /etc/kubernetes/ssl/kubelet-server-2018-04-23-11-55-38.pem
lrwxrwxrwx 1 root root 58 4月 23 11:55 /etc/kubernetes/ssl/kubelet-server-current.pem -> /etc/kubernetes/ssl/kubelet-server-2018-04-23-11-55-38.pem
-rw-r--r-- 1 root root 1050 4月 23 11:55 /etc/kubernetes/ssl/kubelet-client.crt
-rw------- 1 root root 227 4月 23 11:55 /etc/kubernetes/ssl/kubelet-client.key

Configure kube-proxy

13. Create the kube-proxy certificate

# cfssl is not installed on the nodes, so create the certificate on a master machine and copy it over
[root@kubernetes-110 ~]# cd /opt/ssl

vi kube-proxy-csr.json

{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ShenZhen",
      "L": "ShenZhen",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

Generate the kube-proxy certificate and private key

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/opt/ssl/config.json \
  -profile=kubernetes kube-proxy-csr.json | /opt/local/cfssl/cfssljson -bare kube-proxy

# Check the generated files
ls kube-proxy*
kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

# Copy to the certificate directory on each node
scp kube-proxy* 10.180.160.113:/etc/kubernetes/ssl/
scp kube-proxy* 10.180.160.114:/etc/kubernetes/ssl/
scp kube-proxy* 10.180.160.115:/etc/kubernetes/ssl/

14. Create the kube-proxy kubeconfig file

# Configure the cluster
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://127.0.0.1:6443 \
  --kubeconfig=kube-proxy.kubeconfig

# Configure client authentication
kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

# Configure the context
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

# Set the default context
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Copy to the nodes that need it
scp kube-proxy.kubeconfig 10.180.160.113:/etc/kubernetes/
scp kube-proxy.kubeconfig 10.180.160.114:/etc/kubernetes/
scp kube-proxy.kubeconfig 10.180.160.115:/etc/kubernetes/

15. Create the kube-proxy.service file [on every node]

As of 1.10, ipvs support is enabled by default upstream (the default proxy mode is still iptables, so mode must be set to ipvs explicitly).

--masquerade-all must be added, otherwise no ipvs rules are added when a svc is created.

Enabling ipvs requires the ipvsadm, ipset, and conntrack packages; install them on each node:

yum install ipset ipvsadm conntrack-tools.x86_64 -y

The parameters of the yaml configuration file are listed at:
https://github.com/kubernetes/kubernetes/blob/master/pkg/proxy/apis/kubeproxyconfig/types.go

[root@kubernetes-113 ~]# cd /etc/kubernetes/ && vi kube-proxy.config.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.180.160.113
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.254.64.0/18
healthzBindAddress: 10.180.160.113
hostnameOverride: kubernetes-113
kind: KubeProxyConfiguration
metricsBindAddress: 10.180.160.113:10249
mode: "ipvs"

# Create the kube-proxy directory and service file
# /var/lib/kube-proxy is the WorkingDirectory referenced by the unit file below
mkdir -p /var/lib/kube-proxy

vi /etc/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.config.yaml \
  --logtostderr=true \
  --v=1
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Start kube-proxy

systemctl daemon-reload
systemctl enable kube-proxy.service
systemctl start kube-proxy.service
systemctl status kube-proxy.service

# If it reports an error, use journalctl -f -t kube-proxy and journalctl -u kube-proxy to locate the problem

16. Check ipvs

[root@kubernetes-113 ~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.254.0.1:443 rr persistent 10800
  -> 172.16.1.64:6443             Masq    1      0          0
  -> 172.16.1.65:6443             Masq    1      0          0

# If it reports an error, use journalctl -f -t kube-proxy and journalctl -u kube-proxy to locate the problem

--------- The Node services are now installed ------------------------
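The per-node bootstrap.kubeconfig step earlier in this part repeats the same four kubectl commands with only the node name and token changing, so it lends itself to a loop. The following is a sketch under assumptions: the helper name bootstrap_kubeconfig_cmds is hypothetical, the node/token pairs are copied from the kubeadm token create output shown earlier and must be replaced with current tokens, and the script only prints the commands so they can be reviewed before being run.

```shell
#!/bin/sh
# Sketch: print the kubectl commands that build <node>-bootstrap.kubeconfig.

# bootstrap_kubeconfig_cmds NODE TOKEN
bootstrap_kubeconfig_cmds() (
  node="$1"
  token="$2"
  out="${node}-bootstrap.kubeconfig"
  echo "kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=$out"
  echo "kubectl config set-credentials kubelet-bootstrap --token=$token --kubeconfig=$out"
  echo "kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=$out"
  echo "kubectl config use-context default --kubeconfig=$out"
)

# One "node token" pair per line; tokens expire after one day.
while read -r node token; do
  bootstrap_kubeconfig_cmds "$node" "$token"
done <<EOF
kubernetes-113 r8llj2.itme3y54ok531ops
kubernetes-114 0c9wgx.hilomeeenx1ktenh
kubernetes-115 us4icn.cifmgket4f1n3486
EOF
```

Piping the output to sh on the master would execute the commands; the resulting files still need to be copied to /etc/kubernetes/bootstrap.kubeconfig on each node as shown in the manual steps.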