DL Notes: Installing cuda-8.0 + cudnn5.1 + opencv-3.1 + caffe on Ubuntu 16.04, and accessing the server's jupyter notebook from a local browser

| Tutorial: installing cuda-8.0 + cudnn + opencv-3.1 + caffe on Ubuntu 16.04 |

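Contents

- 1. Install cuda + cudnn
  - 1.1 Install cuda
  - 1.2 Install cudnn
- 2. Install opencv-3.1
  - 2.1 Install the dependencies
  - 2.2 Problem 1: downloading ippicv_linux_20151201 fails
  - 2.3 Problem 2: CUDA 8.0 does not support OpenCV's GraphCut algorithm
- 3. Install caffe (Convolutional Architecture for Fast Feature Embedding)
  - 3.1 Introduction to caffe
  - 3.2 Edit Makefile.config
  - 3.3 Edit the Makefile in the caffe directory
  - 3.4 Build
- 4. A simple caffe example
  - 4.1 Prepare the data
  - 4.2 Define the network structure
  - 4.3 Configure the solver file
  - 4.4 Estimate the model's running time
  - 4.5 Train the model
  - 4.6 Analyze the results
  - 4.7 Accessing the server's jupyter notebook from a local browser
- References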

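1. Install cuda + cudnn

Baidu Cloud download link for cuda8.0 + cudnn5.1: (extraction code: 0ls9)

1.1 Install cuda

- Install the SSH server. Note: this command has to be typed in a terminal on the server's own desktop. Once it is done, you can connect to the server remotely with an SSH client; this article uses XShell.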

sudo apt-get install openssh-server

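- Install cuda: see my earlier post (link); the procedure is the same.

1.2 Install cudnn

- Install cudnn: see my earlier post (link); the procedure is the same.

2. Install opencv-3.1

2.1 Install the dependencies

First install the required packages: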

sudo apt-get install build-essential

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

sudo apt-get install --assume-yes libopencv-dev libdc1394-22 libdc1394-22-dev libjpeg-dev libpng12-dev libtiff5-dev libjasper-dev libavcodec-dev libavformat-dev libswscale-dev libxine2-dev libgstreamer0.10-dev libgstreamer-plugins-base0.10-dev libv4l-dev libtbb-dev libqt4-dev libfaac-dev libmp3lame-dev libopencore-amrnb-dev libopencore-amrwb-dev libtheora-dev libvorbis-dev libxvidcore-dev x264 v4l-utils unzip

sudo apt-get install ffmpeg libopencv-dev libgtk-3-dev python-numpy python3-numpy libdc1394-22 libdc1394-22-dev libjpeg-dev libpng12-dev libtiff5-dev libjasper-dev libavcodec-dev libavformat-dev libswscale-dev libxine2-dev libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libv4l-dev libtbb-dev qtbase5-dev libfaac-dev libmp3lame-dev libopencore-amrnb-dev

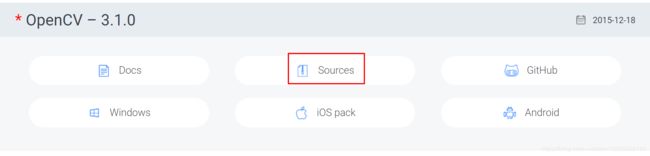

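- Go to the official website (link), choose the source of version 3.1.0, and download opencv-3.1.0.zip.

- Unpack the archive where you want to build it, change into the unpacked opencv-3.1.0 directory on the command line, and run:

mkdir build # create the build directory

cd build

cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local ..

make -j8 # compile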

2.2 Problem 1: downloading ippicv_linux_20151201 fails

- Problem 1: while building OpenCV 3.1, cmake has to download ippicv_linux_20151201, and this download often fails because of network problems. The fix is to download ippicv_linux_20151201 manually and copy the file into the directory below:

opencv-3.1.0/3rdparty/ippicv/downloads/linux-808b791a6eac9ed78d32a7666804320e/
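A manually downloaded archive can be checked before re-running cmake. The sketch below assumes that the suffix of the directory name above is the MD5 of the archive (which is how OpenCV's download script appears to name it), and that the file is called ippicv_linux_20151201.tgz; both the file name and the unpack location are assumptions, so adjust them to your setup.

```python
import hashlib
from pathlib import Path

# Hash copied from the directory name above (assumed to be the archive's MD5).
EXPECTED_MD5 = "808b791a6eac9ed78d32a7666804320e"


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_looks_intact(path, expected=EXPECTED_MD5):
    """True when the file exists and its MD5 equals the expected hash."""
    path = Path(path)
    return path.is_file() and md5_of_file(path) == expected


if __name__ == "__main__":
    # Hypothetical location and file name; change them to match your machine.
    archive = (Path.home() / "opencv-3.1.0/3rdparty/ippicv/downloads"
               / ("linux-" + EXPECTED_MD5) / "ippicv_linux_20151201.tgz")
    status = "OK" if archive_looks_intact(archive) else "missing or corrupted"
    print(archive, status)
```

If the check reports a mismatch, the download was probably truncated and should be repeated.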

- When cmake finally succeeds, it prints output like the following (excerpt):

-- Python (for build): /usr/bin/python2.7

--

-- Java:

-- ant: NO

-- JNI: NO

-- Java wrappers: NO

-- Java tests: NO

--

-- Matlab: Matlab not found or implicitly disabled

--

-- Documentation:

-- Doxygen: NO

-- PlantUML: NO

--

-- Tests and samples:

-- Tests: YES

-- Performance tests: YES

-- C/C++ Examples: NO

--

-- Install path: /usr/local

--

-- cvconfig.h is in: /home/zhangkf/opencv-3.1.0/build

-- -----------------------------------------------------------------

--

-- Configuring done

-- Generating done

-- Build files have been written to: /home/zhangkf/opencv-3.1.0/build

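2.3 Problem 2: CUDA 8.0 does not support OpenCV's GraphCut algorithm

- Problem 2: CUDA 8.0 no longer provides the NPP graph-cut functions that OpenCV's GraphCut code calls, so the build may stop with the following error: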

/home/zhangkf/opencv-3.1.0/modules/cudalegacy/src/graphcuts.cpp:275:146: error: ‘nppiGraphcut8_32f8u’ was not declared in this scope

static_cast<int>(terminals.step), static_cast<int>(leftTransp.step), sznpp, labels.ptr<Npp8u>(), static_cast<int>(labels.step), state) );

^

/home/zhangkf/opencv-3.1.0/modules/core/include/opencv2/core/private.cuda.hpp:165:52: note: in definition of macro ‘nppSafeCall’

#define nppSafeCall(expr) cv::cuda::checkNppError(expr, __FILE__, __LINE__, CV_Func)

^

make[2]: *** [modules/cudalegacy/CMakeFiles/opencv_cudalegacy.dir/src/graphcuts.cpp.o] Error 1

make[2]: *** Waiting for unfinished jobs....

make[1]: *** [modules/cudalegacy/CMakeFiles/opencv_cudalegacy.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

[ 90%] Linking CXX shared library ../../lib/libopencv_photo.so

[ 90%] Built target opencv_photo

make: *** [all] Error 2

- Fix: edit the file opencv-3.1.0/modules/cudalegacy/src/graphcuts.cpp and change

#include "precomp.hpp"

#if !defined (HAVE_CUDA) || defined (CUDA_DISABLER)

to

#include "precomp.hpp"

#if !defined (HAVE_CUDA) || defined (CUDA_DISABLER) || (CUDART_VERSION >= 8000)
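For anyone who prefers to script the edit rather than open an editor, here is a minimal sketch that applies the same one-line change to the text of graphcuts.cpp. The function name and the file path in the usage part are hypothetical, and the sketch assumes the guard line appears in the file exactly as quoted above.

```python
from pathlib import Path

OLD_GUARD = "#if !defined (HAVE_CUDA) || defined (CUDA_DISABLER)"
NEW_GUARD = OLD_GUARD + " || (CUDART_VERSION >= 8000)"


def patch_guard(source_text):
    """Extend the first unpatched guard line; already patched text is returned unchanged."""
    lines = source_text.splitlines(keepends=True)
    for index, line in enumerate(lines):
        # Only an exact, not yet extended guard line is rewritten.
        if line.strip() == OLD_GUARD:
            lines[index] = line.replace(OLD_GUARD, NEW_GUARD)
            break
    return "".join(lines)


if __name__ == "__main__":
    # Hypothetical path; point it at your own opencv-3.1.0 checkout.
    target = Path.home() / "opencv-3.1.0/modules/cudalegacy/src/graphcuts.cpp"
    if target.is_file():
        target.write_text(patch_guard(target.read_text()))
        print("patched", target)
    else:
        print("file not found:", target)
```

Running it twice is harmless, because the extended line no longer matches the original guard.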

- When make -j8 finally succeeds, it prints output like the following (excerpt):

[100%] Building CXX object modules/superres/CMakeFiles/opencv_test_superres.dir/test/test_main.cpp.o

[100%] Building CXX object modules/superres/CMakeFiles/opencv_test_superres.dir/test/test_superres.cpp.o

[100%] Building CXX object modules/superres/CMakeFiles/opencv_perf_superres.dir/perf/perf_superres.cpp.o

[100%] Building CXX object modules/superres/CMakeFiles/opencv_perf_superres.dir/perf/perf_main.cpp.o

[100%] Linking CXX executable ../../bin/opencv_test_superres

[100%] Built target opencv_test_superres

[100%] Linking CXX executable ../../bin/opencv_perf_superres

[100%] Built target opencv_perf_superres

Scanning dependencies of target opencv_videostab

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/frame_source.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/global_motion.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/stabilizer.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/inpainting.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/fast_marching.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/deblurring.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/wobble_suppression.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/outlier_rejection.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/motion_stabilizing.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/log.cpp.o

[100%] Building CXX object modules/videostab/CMakeFiles/opencv_videostab.dir/src/optical_flow.cpp.o

[100%] Linking CXX shared library ../../lib/libopencv_videostab.so

[100%] Built target opencv_videostab

[100%] Generating pyopencv_generated_include.h, pyopencv_generated_funcs.h, pyopencv_generated_types.h, pyopencv_generated_type_reg.h, pyopencv_generated_ns_reg.h

[100%] Generating pyopencv_generated_include.h, pyopencv_generated_funcs.h, pyopencv_generated_types.h, pyopencv_generated_type_reg.h, pyopencv_generated_ns_reg.h

Scanning dependencies of target opencv_python2

Scanning dependencies of target opencv_python3

[100%] Building CXX object modules/python2/CMakeFiles/opencv_python2.dir/__/src2/cv2.cpp.o

[100%] Building CXX object modules/python3/CMakeFiles/opencv_python3.dir/__/src2/cv2.cpp.o

[100%] Linking CXX shared module ../../lib/python3/cv2.cpython-34m.so

[100%] Built target opencv_python3

[100%] Linking CXX shared module ../../lib/cv2.so

[100%] Built target opencv_python2

zhangkf@Ubuntu:~/opencv-3.1.0/build$

- After installing (sudo make install from the build directory), verify the installation by checking the opencv version:

pkg-config --modversion opencv

- Recommended opencv installation video: link

3. Install caffe (Convolutional Architecture for Fast Feature Embedding)

3.1 Introduction to caffe


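- Now caffe itself can finally be installed. The simplest way to get it is to clone it with Git; alternatively, download it from https://github.com/BVLC/caffe. I usually look for code on github, so I fetched the source from there directly.

git clone https://github.com/BVLC/caffe.git

- Enter the caffe directory and make a copy of Makefile.config.example named Makefile.config. Running the following command in the caffe directory does this:

sudo cp Makefile.config.example Makefile.config

- The copy is needed because the build reads Makefile.config; Makefile.config.example is only a sample configuration shipped with caffe and is not used by the build. Next, open Makefile.config in the caffe directory to edit it: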

sudo gedit Makefile.config

3.2 Edit Makefile.config

- Here is the exact configuration used on my machine:

## Refer to http://caffe.berkeleyvision.org/installation.html

# Contributions simplifying and improving our build system are welcome!

# cuDNN acceleration switch (uncomment to build with cuDNN).

USE_CUDNN := 1 ##########################

# CPU-only switch (uncomment to build without GPU support).

# CPU_ONLY := 1

# uncomment to disable IO dependencies and corresponding data layers

USE_OPENCV := 1 ##########################

# USE_LEVELDB := 0

# USE_LMDB := 0

# This code is taken from https://github.com/sh1r0/caffe-android-lib

# USE_HDF5 := 0

# uncomment to allow MDB_NOLOCK when reading LMDB files (only if necessary)

# You should not set this flag if you will be reading LMDBs with any

# possibility of simultaneous read and write

# ALLOW_LMDB_NOLOCK := 1

# Uncomment if you're using OpenCV 3

OPENCV_VERSION :=3 ##########################

# To customize your choice of compiler, uncomment and set the following.

# N.B. the default for Linux is g++ and the default for OSX is clang++

# CUSTOM_CXX := g++

# CUDA directory contains bin/ and lib/ directories that we need.

CUDA_DIR := /usr/local/cuda-8.0 ########################## set this to your own CUDA install directory

# On Ubuntu 14.04, if cuda tools are installed via

# "sudo apt-get install nvidia-cuda-toolkit" then use this instead:

# CUDA_DIR := /usr

# CUDA architecture setting: going with all of them.

# For CUDA < 6.0, comment the *_50 through *_61 lines for compatibility.

# For CUDA < 8.0, comment the *_60 and *_61 lines for compatibility.

# For CUDA >= 9.0, comment the *_20 and *_21 lines for compatibility.

# CUDA_ARCH := -gencode arch=compute_20,code=sm_20

#################################################################

CUDA_ARCH :=-gencode arch=compute_30,code=sm_30 \

-gencode arch=compute_35,code=sm_35 \

-gencode arch=compute_50,code=sm_50 \

-gencode arch=compute_52,code=sm_52 \

-gencode arch=compute_60,code=sm_60 \

-gencode arch=compute_61,code=sm_61 \

-gencode arch=compute_61,code=compute_61

# BLAS choice:

# atlas for ATLAS (default)

# mkl for MKL

# open for OpenBlas

BLAS := atlas ##########################

# Custom (MKL/ATLAS/OpenBLAS) include and lib directories.

# Leave commented to accept the defaults for your choice of BLAS

# (which should work)!

#BLAS_INCLUDE := /home/asrim/intel/mkl/include

#BLAS_LIB := /home/asrim/intel/mkl/lib/intel64

# Homebrew puts openblas in a directory that is not on the standard search path

# BLAS_INCLUDE := $(shell brew --prefix openblas)/include

# BLAS_LIB := $(shell brew --prefix openblas)/lib

# This is required only if you will compile the matlab interface.

# MATLAB directory should contain the mex binary in /bin.

MATLAB_DIR := /usr/local ########################## set according to your own MATLAB install

MATLAB_DIR := /usr/local/MATLAB/R2016b ########################## set according to your own MATLAB install

# NOTE: this is required only if you will compile the python interface.

# We need to be able to find Python.h and numpy/arrayobject.h.

# PYTHON_INCLUDE := /usr/include/python2.7 \

# /usr/lib/python2.7/dist-packages/numpy/core/include

# Anaconda Python distribution is quite popular. Include path:

# Verify anaconda location, sometimes it's in root.

ANACONDA_HOME := $(HOME)/anaconda3/envs/caffe ############################

PYTHON_INCLUDE := $(ANACONDA_HOME)/include \

$(ANACONDA_HOME)/include/python3.5m \

$(ANACONDA_HOME)/lib/python3.5/site-packages/numpy/core/include

# Uncomment to use Python 3 (default is Python 2)

# PYTHON_LIBRARIES := boost_python-py35 python3.5m

# PYTHON_INCLUDE := /usr/include/python3.5m \

# /usr/lib/python3.5/dist-packages/numpy/core/include

# We need to be able to find libpythonX.X.so or .dylib.

# PYTHON_LIB := /usr/lib

PYTHON_LIB := $(ANACONDA_HOME)/lib #########################

LINKFLAGS := -Wl,-rpath,$(ANACONDA_HOME)/lib ####################

# Homebrew installs numpy in a non standard path (keg only)

# PYTHON_INCLUDE += $(dir $(shell python -c 'import numpy.core; print(numpy.core.__file__)'))/include

# PYTHON_LIB += $(shell brew --prefix numpy)/lib

# Uncomment to support layers written in Python (will link against Python libs)

WITH_PYTHON_LAYER := 1

# Whatever else you find you need goes here.

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial ##########

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnu /usr/lib/x86_64-linux-gnu/hdf5/serial ##########

# If Homebrew is installed at a non standard location (for example your home directory) and you use it for general dependencies

# INCLUDE_DIRS += $(shell brew --prefix)/include

# LIBRARY_DIRS += $(shell brew --prefix)/lib

# NCCL acceleration switch (uncomment to build with NCCL)

# https://github.com/NVIDIA/nccl (last tested version: v1.2.3-1+cuda8.0)

# USE_NCCL := 1

# Uncomment to use `pkg-config` to specify OpenCV library paths.

# (Usually not necessary -- OpenCV libraries are normally installed in one of the above $LIBRARY_DIRS.)

# USE_PKG_CONFIG := 1

# N.B. both build and distribute dirs are cleared on `make clean`

BUILD_DIR := build

DISTRIBUTE_DIR := distribute

# Uncomment for debugging. Does not work on OSX due to https://github.com/BVLC/caffe/issues/171

# DEBUG := 1

# The ID of the GPU that 'make runtest' will use to run unit tests.

TEST_GPUID := 0

# enable pretty build (comment to see full commands)

Q ?= @

Note: all of my dependencies are installed in the anaconda virtual environment named caffe, hence this setting:

ANACONDA_HOME := $(HOME)/anaconda3/envs/caffe

- 1. To use cudnn, change

#USE_CUDNN := 1

to:

USE_CUDNN := 1

- 2. If your opencv version is 3, change

#OPENCV_VERSION := 3

to:

OPENCV_VERSION := 3

- 3. To write layers in python, change

#WITH_PYTHON_LAYER := 1

to:

WITH_PYTHON_LAYER := 1

- 4. Modify the search paths (an important step): add the hdf5 serial directories by changing

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib

to:

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnu /usr/lib/x86_64-linux-gnu/hdf5/serial

3.3 Edit the Makefile in the caffe directory

Replace:

NVCCFLAGS += -ccbin=$(CXX) -Xcompiler -fPIC $(COMMON_FLAGS)

with:

NVCCFLAGS += -D_FORCE_INLINES -ccbin=$(CXX) -Xcompiler -fPIC $(COMMON_FLAGS)

Change:

LIBRARIES += glog gflags protobuf boost_system boost_filesystem m hdf5_hl hdf5

to:

LIBRARIES += glog gflags protobuf boost_system boost_filesystem m hdf5_serial_hl hdf5_serial

- Then edit the file /usr/local/cuda/include/host_config.h:

Change

#error-- unsupported GNU version! gcc versions later than 4.9 are not supported!

to

//#error-- unsupported GNU version! gcc versions later than 4.9 are not supported!

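3.4 Build

- Start the build by running the following in the caffe directory:

make all -j8

- If the output looks like the following (excerpt), caffe has been built successfully.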

CXX src/caffe/layers/cudnn_tanh_layer.cpp

CXX src/caffe/layers/power_layer.cpp

CXX src/caffe/layers/input_layer.cpp

CXX src/caffe/layers/log_layer.cpp

CXX src/caffe/layers/conv_layer.cpp

CXX src/caffe/layers/lrn_layer.cpp

CXX src/caffe/layers/loss_layer.cpp

CXX src/caffe/layers/slice_layer.cpp

CXX src/caffe/layers/batch_norm_layer.cpp

CXX src/caffe/layers/cudnn_sigmoid_layer.cpp

CXX src/caffe/layers/tile_layer.cpp

CXX src/caffe/layers/mvn_layer.cpp

CXX src/caffe/layers/spp_layer.cpp

CXX src/caffe/layers/prelu_layer.cpp

CXX src/caffe/layers/accuracy_layer.cpp

CXX src/caffe/layers/clip_layer.cpp

CXX src/caffe/layers/threshold_layer.cpp

CXX src/caffe/layers/softmax_loss_layer.cpp

CXX src/caffe/layers/embed_layer.cpp

CXX src/caffe/layers/sigmoid_cross_entropy_loss_layer.cpp

CXX src/caffe/layers/tanh_layer.cpp

CXX src/caffe/layers/cudnn_lrn_layer.cpp

CXX src/caffe/layers/elu_layer.cpp

CXX src/caffe/layers/scale_layer.cpp

CXX src/caffe/layers/bnll_layer.cpp

CXX src/caffe/layers/lstm_unit_layer.cpp

CXX src/caffe/layers/image_data_layer.cpp

CXX src/caffe/layers/dropout_layer.cpp

CXX src/caffe/layers/filter_layer.cpp

CXX src/caffe/layers/swish_layer.cpp

CXX src/caffe/layers/neuron_layer.cpp

CXX src/caffe/layers/cudnn_conv_layer.cpp

CXX src/caffe/layers/hdf5_data_layer.cpp

CXX src/caffe/layers/reduction_layer.cpp

CXX src/caffe/layers/data_layer.cpp

CXX src/caffe/layers/split_layer.cpp

CXX src/caffe/layers/batch_reindex_layer.cpp

CXX src/caffe/layers/base_data_layer.cpp

CXX src/caffe/layers/relu_layer.cpp

CXX src/caffe/layers/base_conv_layer.cpp

CXX src/caffe/layers/bias_layer.cpp

CXX src/caffe/layers/parameter_layer.cpp

CXX src/caffe/layers/euclidean_loss_layer.cpp

CXX src/caffe/layers/cudnn_pooling_layer.cpp

CXX src/caffe/layers/dummy_data_layer.cpp

CXX src/caffe/layers/recurrent_layer.cpp

CXX src/caffe/layers/infogain_loss_layer.cpp

CXX src/caffe/layers/multinomial_logistic_loss_layer.cpp

CXX src/caffe/layers/sigmoid_layer.cpp

CXX src/caffe/layers/cudnn_softmax_layer.cpp

CXX src/caffe/layers/softmax_layer.cpp

CXX src/caffe/layers/rnn_layer.cpp

CXX src/caffe/layers/flatten_layer.cpp

CXX src/caffe/layers/contrastive_loss_layer.cpp

CXX src/caffe/layers/inner_product_layer.cpp

CXX src/caffe/layers/hinge_loss_layer.cpp

CXX src/caffe/layers/exp_layer.cpp

CXX src/caffe/layers/crop_layer.cpp

CXX src/caffe/layers/argmax_layer.cpp

CXX src/caffe/layers/absval_layer.cpp

CXX src/caffe/layers/cudnn_lcn_layer.cpp

CXX src/caffe/layers/cudnn_deconv_layer.cpp

CXX src/caffe/layers/lstm_layer.cpp

CXX src/caffe/layers/concat_layer.cpp

CXX src/caffe/layers/deconv_layer.cpp

CXX src/caffe/layers/hdf5_output_layer.cpp

CXX src/caffe/layers/im2col_layer.cpp

CXX src/caffe/layers/eltwise_layer.cpp

CXX src/caffe/layers/silence_layer.cpp

CXX src/caffe/layers/memory_data_layer.cpp

CXX src/caffe/layers/window_data_layer.cpp

CXX src/caffe/layers/pooling_layer.cpp

CXX src/caffe/layers/reshape_layer.cpp

CXX src/caffe/layers/cudnn_relu_layer.cpp

CXX src/caffe/util/db_lmdb.cpp

CXX src/caffe/util/math_functions.cpp

CXX src/caffe/util/benchmark.cpp

CXX src/caffe/util/db_leveldb.cpp

CXX src/caffe/util/hdf5.cpp

CXX src/caffe/util/im2col.cpp

CXX src/caffe/util/blocking_queue.cpp

CXX src/caffe/util/io.cpp

CXX src/caffe/util/insert_splits.cpp

CXX src/caffe/util/upgrade_proto.cpp

CXX src/caffe/util/db.cpp

CXX src/caffe/util/cudnn.cpp

CXX src/caffe/util/signal_handler.cpp

CXX src/caffe/parallel.cpp

CXX src/caffe/net.cpp

AR -o .build_release/lib/libcaffe.a

LD -o .build_release/lib/libcaffe.so.1.0.0

CXX/LD -o .build_release/tools/extract_features.bin

CXX/LD -o .build_release/tools/caffe.bin

CXX/LD -o .build_release/tools/convert_imageset.bin

CXX/LD -o .build_release/tools/upgrade_solver_proto_text.bin

CXX/LD -o .build_release/tools/upgrade_net_proto_binary.bin

CXX/LD -o .build_release/tools/upgrade_net_proto_text.bin

CXX/LD -o .build_release/tools/compute_image_mean.bin

CXX/LD -o .build_release/examples/mnist/convert_mnist_data.bin

CXX/LD -o .build_release/examples/cpp_classification/classification.bin

CXX/LD -o .build_release/examples/siamese/convert_mnist_siamese_data.bin

CXX/LD -o .build_release/examples/cifar10/convert_cifar_data.bin

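- Next, build the tests:

sudo make test -j8

- If an earlier configuration or installation step went wrong, the build fails in all sorts of ways, so work through the previous steps carefully. Once the build succeeds, run the tests: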

sudo make runtest -j8

- Then configure the environment so that the python interpreter of the caffe environment (/home/zhangkf/anaconda3/envs/caffe/bin/python) is found first:

export PATH="/home/zhangkf/anaconda3/envs/caffe/bin:$PATH"

- Then start Python:

(caffe) zhangkf@wang-ThinkStation-P520:~$ python

Python 3.4.5 |Continuum Analytics, Inc.| (default, Jul 2 2016, 17:47:47)

[GCC 4.4.7 20120313 (Red Hat 4.4.7-1)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import caffe

>>>

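- If the import above fails with ImportError: No module named caffe, add caffe's python directory to the PYTHONPATH environment variable:

sudo vim ~/.bashrc

# Append the following as the last line; use your own path here.

export PYTHONPATH="/home/zhangkf/caffe/python:$PYTHONPATH"

# Remember to run the command below; otherwise caffe can only be imported when Python is started from that directory.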

source ~/.bashrc
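As an alternative to the shell variable, the same directory can be added to sys.path from inside Python before importing caffe. This is only a sketch: the helper name add_caffe_to_path is made up for illustration, ~/caffe is an assumed checkout location, and the import can only succeed once caffe's Python interface has actually been built (the make pycaffe target).

```python
import os
import sys


def add_caffe_to_path(caffe_root):
    """Put <caffe_root>/python at the front of sys.path if it is not there yet."""
    python_dir = os.path.join(os.path.abspath(caffe_root), "python")
    if python_dir not in sys.path:
        sys.path.insert(0, python_dir)
    return python_dir


if __name__ == "__main__":
    # Assumed checkout location; replace it with your own caffe directory.
    print("added:", add_caffe_to_path(os.path.expanduser("~/caffe")))
    try:
        import caffe  # works only if the Python interface has been built
        print("caffe imported from", caffe.__file__)
    except ImportError as error:
        print("caffe is still not importable:", error)
```

This affects only the running interpreter, which is convenient inside a notebook; the PYTHONPATH line in ~/.bashrc remains the persistent solution.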

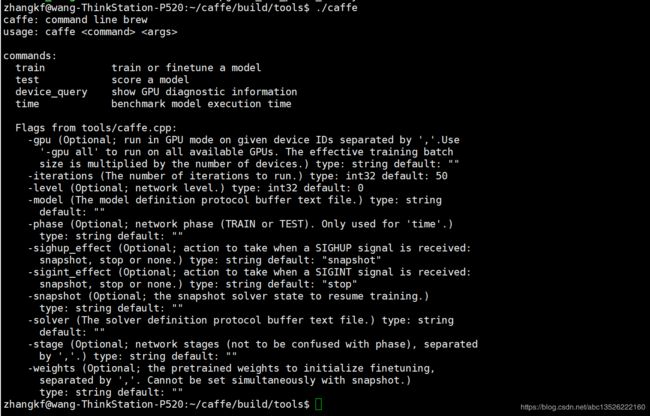

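- Next, test whether caffe is installed correctly.

- To run the caffe command from any directory rather than only from its build directory, define an alias (for example in ~/.bashrc). After that, typing caffe works from anywhere.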

alias caffe="/home/zhangkf/caffe/build/tools/caffe"

4. A simple caffe example

4.1 Prepare the data

- Download the mnist dataset by running the get_mnist.sh script in the /home/zhangkf/caffe/data/mnist directory:

bash get_mnist.sh

- Unpack the dataset:

(caffe) zhangkf@wang-ThinkStation-P520:~/caffe/data/mnist$ tar -xvzf mnist.tar.gz

t10k-images-idx3-ubyte

train-images-idx3-ubyte

t10k-labels-idx1-ubyte

train-labels-idx1-ubyte

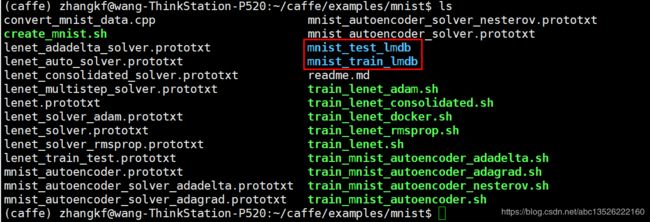

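- Convert the dataset format. The downloaded MNIST files are raw binary files and must be converted to LEVELDB or LMDB before Caffe can read them. The caffe/examples/mnist/create_mnist.sh script that ships with Caffe converts the raw data to lmdb/leveldb format (note: it has to be run from the caffe root directory):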

(caffe) zhangkf@wang-ThinkStation-P520:~/caffe$ bash examples/mnist/create_mnist.sh

Creating lmdb...

I1008 09:52:35.662119 6949 db_lmdb.cpp:35] Opened lmdb examples/mnist/mnist_train_lmdb

I1008 09:52:35.662295 6949 convert_mnist_data.cpp:88] A total of 60000 items.

I1008 09:52:35.662302 6949 convert_mnist_data.cpp:89] Rows: 28 Cols: 28

I1008 09:52:39.572388 6949 convert_mnist_data.cpp:108] Processed 60000 files.

I1008 09:52:39.897873 6961 db_lmdb.cpp:35] Opened lmdb examples/mnist/mnist_test_lmdb

I1008 09:52:39.898061 6961 convert_mnist_data.cpp:88] A total of 10000 items.

I1008 09:52:39.898067 6961 convert_mnist_data.cpp:89] Rows: 28 Cols: 28

I1008 09:52:40.528492 6961 convert_mnist_data.cpp:108] Processed 10000 files.

Done.
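The item count and image size reported in the log come from the header of the raw MNIST image files. As a rough illustration of that binary layout (a 16-byte big-endian header holding a magic number, the item count, rows and columns), the sketch below decodes a synthetic header; the function name is hypothetical, and it is not the code Caffe's converter uses.

```python
import struct

IDX_IMAGE_MAGIC = 2051  # magic number at the start of idx3-ubyte image files


def parse_idx3_header(raw):
    """Decode the 16-byte big-endian header: magic, item count, rows, cols."""
    magic, count, rows, cols = struct.unpack(">IIII", raw[:16])
    if magic != IDX_IMAGE_MAGIC:
        raise ValueError("not an idx3-ubyte image file (magic=%d)" % magic)
    return count, rows, cols


if __name__ == "__main__":
    # Synthetic header standing in for the first 16 bytes of train-images-idx3-ubyte.
    fake_header = struct.pack(">IIII", IDX_IMAGE_MAGIC, 60000, 28, 28)
    count, rows, cols = parse_idx3_header(fake_header)
    print("items:", count, "rows:", rows, "cols:", cols)
```

To inspect a real file, read its first 16 bytes in binary mode and pass them to parse_idx3_header.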

4.2 Define the network structure

- Define the network structure:

hbk_mnist.prototxt

name: "hbk_mnist"

################### train/test lmdb data layers

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

scale: 0.00390625

}

data_param {

source: "/home/zhangkf/caffe/examples/mnist/mnist_train_lmdb" # location of the training set

batch_size: 64

backend: LMDB

}

}

layer {

name: "mnist"

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

scale: 0.00390625

}

data_param {

source: "/home/zhangkf/caffe/examples/mnist/mnist_test_lmdb" # location of the test set

batch_size: 100

backend: LMDB

}

}

#################### fully connected layers with ReLU activation, 784->500->10

layer {

name: "ip1"

type: "InnerProduct"

bottom: "data"

top: "ip1"

param {

lr_mult: 1

}

param {

lr_mult: 2

}

inner_product_param {

num_output: 500

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

}

}

}

################### activation layer

layer {

name: "relu1"

type: "ReLU"

bottom: "ip1"

top: "re1"

}

################### fully connected layer

layer {

name: "ip2"

type: "InnerProduct"

bottom: "re1"

top: "ip2"

param {

lr_mult: 1

}

param {

lr_mult: 2

}

inner_product_param {

num_output: 10

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

}

}

}

# used for testing/validation only (optional); outputs the accuracy

layer {

name: "accuracy"

type: "Accuracy"

bottom: "ip2"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

# cost (loss) layer

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "ip2"

bottom: "label"

top: "loss"

}
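As a quick sanity check on the 784->500->10 network defined above, the number of learnable parameters and the effect of the scale value can be worked out by hand. The sketch below does that arithmetic, assuming each InnerProduct layer has one weight per input/output pair plus one bias per output, which is the standard fully connected layout.

```python
def inner_product_params(num_input, num_output):
    """Weights plus biases of one fully connected (InnerProduct) layer."""
    return num_input * num_output + num_output


INPUT_PIXELS = 28 * 28  # each MNIST image is 28 x 28 and is flattened by ip1
ip1 = inner_product_params(INPUT_PIXELS, 500)
ip2 = inner_product_params(500, 10)
total = ip1 + ip2

SCALE = 0.00390625  # transform_param.scale used by both data layers

print("ip1 parameters:", ip1)
print("ip2 parameters:", ip2)
print("total parameters:", total)
print("scale equals 1/256:", SCALE == 1 / 256)
print("largest pixel value after scaling:", 255 * SCALE)
```

So the scale factor maps the raw 0..255 pixel values into the range [0, 1), and almost all of the parameters sit in the first layer.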

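- The text definition alone is still not very intuitive. To see what the network actually looks like, caffe provides a tool, python/draw_net.py, which renders the network definition as an image.

python ~/caffe/python/draw_net.py hbk_mnist.prototxt aa.png --rankdir=BT

# aa.png is the name of the image to generate.

# --rankdir=BT: BT means bottom to top.

# --rankdir=LR: LR means left to right.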

4.3 Configure the solver file

- Configure the solver file:

hbk_mnist_solver.prototxt

# Path of the train/test net file

net: "hbk_mnist.prototxt" # network file

# test_iter specifies how many forward passes the test should carry out.

# In the case of MNIST, we have test batch size 100 and 100 test iterations,

# covering the full 10,000 testing images.

test_iter: 100

# Run a test pass every this many training iterations

test_interval: 500

# The base learning rate, momentum and the weight decay of the network. The default solver is stochastic gradient descent (SGD).

base_lr: 0.01

momentum: 0.9

weight_decay: 0.0005

# The learning rate policy

lr_policy: "inv"

gamma: 0.0001

power: 0.75

# Print progress information every this many iterations

display: 100

# The maximum number of iterations

max_iter: 10001

# Save intermediate results (snapshots)

snapshot: 5000

snapshot_prefix: "snapshot"

# solver mode: CPU or GPU

solver_mode: GPU
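To get a feel for what the "inv" policy does with these values, the learning rate can be computed by hand. The sketch below uses the formula documented for Caffe's inv policy, base_lr * (1 + gamma * iter) ^ (-power), with the numbers from the solver file above; the function name is made up for illustration. It also checks the test coverage implied by test_iter and the test batch size.

```python
def inv_learning_rate(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """Learning rate under the 'inv' policy: base_lr * (1 + gamma * iter) ** -power."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)


for iteration in (0, 500, 5000, 10000):
    print("iter %5d -> lr = %.8f" % (iteration, inv_learning_rate(iteration)))

# test_iter forward passes of one test batch each per test run.
TEST_ITER = 100
TEST_BATCH_SIZE = 100  # batch_size of the TEST data layer in hbk_mnist.prototxt
print("test images per test run:", TEST_ITER * TEST_BATCH_SIZE)
```

At iteration 10000 the value rounds to 0.00594604, which agrees with the lr printed in the training log in section 4.5.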

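4.4 Estimate the model's running time

- To estimate roughly how long the model takes to run, use the following command (the timings below are on the CPU):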

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ caffe time -model hbk_mnist.prototxt -iterations 10

...

...

I1008 12:57:17.865840 8901 caffe.cpp:397] Average time per layer:

I1008 12:57:17.865844 8901 caffe.cpp:400] mnist forward: 0.0028 ms.

I1008 12:57:17.865847 8901 caffe.cpp:403] mnist backward: 0.0001 ms.

I1008 12:57:17.865867 8901 caffe.cpp:400] ip1 forward: 1.8415 ms.

I1008 12:57:17.865870 8901 caffe.cpp:403] ip1 backward: 1.6795 ms.

I1008 12:57:17.865873 8901 caffe.cpp:400] relu1 forward: 0.021 ms.

I1008 12:57:17.865876 8901 caffe.cpp:403] relu1 backward: 0.022 ms.

I1008 12:57:17.865880 8901 caffe.cpp:400] ip2 forward: 0.1029 ms.

I1008 12:57:17.865885 8901 caffe.cpp:403] ip2 backward: 0.1368 ms.

I1008 12:57:17.865887 8901 caffe.cpp:400] loss forward: 0.0241 ms.

I1008 12:57:17.865890 8901 caffe.cpp:403] loss backward: 0.0008 ms.

I1008 12:57:17.865897 8901 caffe.cpp:408] Average Forward pass: 1.995 ms.

I1008 12:57:17.865900 8901 caffe.cpp:410] Average Backward pass: 1.8418 ms.

I1008 12:57:17.865905 8901 caffe.cpp:412] Average Forward-Backward: 3.8 ms.

I1008 12:57:17.865909 8901 caffe.cpp:414] Total Time: 38 ms. # took 38 ms

I1008 12:57:17.865914 8901 caffe.cpp:415] *** Benchmark ends ***

- Timings on the GPU:

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ caffe time -model hbk_mnist.prototxt -iterations 10 -gpu 0

...

...

I1008 13:00:59.563093 8943 caffe.cpp:397] Average time per layer:

I1008 13:00:59.563104 8943 caffe.cpp:400] mnist forward: 0.212448 ms.

I1008 13:00:59.563109 8943 caffe.cpp:403] mnist backward: 0.0014048 ms.

I1008 13:00:59.563115 8943 caffe.cpp:400] ip1 forward: 0.0407168 ms.

I1008 13:00:59.563120 8943 caffe.cpp:403] ip1 backward: 0.0318944 ms.

I1008 13:00:59.563125 8943 caffe.cpp:400] relu1 forward: 0.0118592 ms.

I1008 13:00:59.563130 8943 caffe.cpp:403] relu1 backward: 0.0077504 ms.

I1008 13:00:59.563136 8943 caffe.cpp:400] ip2 forward: 0.0259872 ms.

I1008 13:00:59.563140 8943 caffe.cpp:403] ip2 backward: 0.02536 ms.

I1008 13:00:59.563146 8943 caffe.cpp:400] loss forward: 0.0810144 ms.

I1008 13:00:59.563151 8943 caffe.cpp:403] loss backward: 0.0143584 ms.

I1008 13:00:59.563159 8943 caffe.cpp:408] Average Forward pass: 0.41905 ms.

I1008 13:00:59.563165 8943 caffe.cpp:410] Average Backward pass: 0.113318 ms.

I1008 13:00:59.563171 8943 caffe.cpp:412] Average Forward-Backward: 0.56696 ms.

I1008 13:00:59.563177 8943 caffe.cpp:414] Total Time: 5.6696 ms. # took 5.6696 ms

I1008 13:00:59.563191 8943 caffe.cpp:415] *** Benchmark ends ***
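The two benchmark runs can be compared with a little arithmetic. The numbers below are copied from the CPU and GPU logs above; since each run covers only 10 iterations, treat the ratios as a rough indication rather than a careful measurement.

```python
# Totals over 10 iterations, taken from the two logs above (milliseconds).
CPU_TOTAL_MS = 38.0
GPU_TOTAL_MS = 5.6696

# Forward time of the largest layer (ip1) in each run (milliseconds).
CPU_IP1_FORWARD_MS = 1.8415
GPU_IP1_FORWARD_MS = 0.0407168

total_speedup = CPU_TOTAL_MS / GPU_TOTAL_MS
ip1_forward_speedup = CPU_IP1_FORWARD_MS / GPU_IP1_FORWARD_MS

print("overall speedup: %.1fx" % total_speedup)
print("ip1 forward speedup: %.1fx" % ip1_forward_speedup)
```

The overall ratio is much smaller than the ratio for ip1 alone because, in the GPU run, the data layer (mnist forward) and the loss layer account for most of the forward pass time.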

4.5 Train the model

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ caffe train -solver hbk_mnist_solver.prototxt

...

...

I1008 13:06:12.997189 8988 solver.cpp:347] Iteration 10000, Testing net (#0)

I1008 13:06:13.031898 9002 data_layer.cpp:73] Restarting data prefetching from start.

I1008 13:06:13.032205 8988 solver.cpp:414] Test net output #0: accuracy = 0.9752

I1008 13:06:13.032243 8988 solver.cpp:414] Test net output #1: loss = 0.0869556 (* 1 = 0.0869556 loss)

I1008 13:06:13.032627 8988 solver.cpp:239] Iteration 10000 (1507.71 iter/s, 0.0663259s/100 iters), loss = 0.046366

I1008 13:06:13.032657 8988 solver.cpp:258] Train net output #0: loss = 0.0463659 (* 1 = 0.0463659 loss)

I1008 13:06:13.032671 8988 sgd_solver.cpp:112] Iteration 10000, lr = 0.00594604

I1008 13:06:13.032773 8988 solver.cpp:464] Snapshotting to binary proto file snapshot_iter_10001.caffemodel

I1008 13:06:13.037735 8988 sgd_solver.cpp:284] Snapshotting solver state to binary proto file snapshot_iter_10001.solverstate

I1008 13:06:13.040637 8988 solver.cpp:332] Optimization Done.

I1008 13:06:13.040649 8988 caffe.cpp:250] Optimization Done.

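- The program runs so fast that the output scrolls past and does not fit on one screen, so write the whole log to a file.

- In the command below, 2>&1 redirects stderr to stdout, so tee receives both streams: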

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ caffe train -solver hbk_mnist_solver.prototxt 2>&1 | tee a.log

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ clear

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$ ls

1 hbk_mnist.prototxt snapshot_iter_10000.caffemodel snapshot_iter_10001.caffemodel snapshot_iter_5000.caffemodel

a.log hbk_mnist_solver.prototxt snapshot_iter_10000.solverstate snapshot_iter_10001.solverstate snapshot_iter_5000.solverstate

zhangkf@wang-ThinkStation-P520:~/caffe/my_project$

Side note: showing line numbers in the vim editor:

Run vim ~/.vimrc, then add the lines set ts=4 and set nu.

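4.6 Analyze the results

- Using the a.log file generated above, analyze the convergence and whether the solver parameters are set appropriately.

- caffe provides a tool for this, parse_log.py in the caffe/tools/extra directory, which extracts the useful information from the log.

- To be continued.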
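Until that part is written, here is a minimal sketch of the idea: pulling the test accuracy and loss out of a training log with regular expressions. It is a simplified stand-in, not Caffe's parse_log.py; the function name is hypothetical, and the patterns assume the log line format shown in section 4.5.

```python
import re

ITERATION_RE = re.compile(r"Iteration (\d+), Testing net")
OUTPUT_RE = re.compile(r"Test net output #\d+: (\w+) = ([-+.\deE]+)")


def parse_test_results(log_lines):
    """Collect one dict per test run: the iteration plus each reported output."""
    results = []
    current = None
    for line in log_lines:
        match = ITERATION_RE.search(line)
        if match:
            # A new test run starts; later output lines belong to it.
            current = {"iteration": int(match.group(1))}
            results.append(current)
            continue
        match = OUTPUT_RE.search(line)
        if match and current is not None:
            current[match.group(1)] = float(match.group(2))
    return results


if __name__ == "__main__":
    # Sample lines copied from the training output in section 4.5.
    sample = [
        "I1008 13:06:12.997189 8988 solver.cpp:347] Iteration 10000, Testing net (#0)",
        "I1008 13:06:13.032205 8988 solver.cpp:414] Test net output #0: accuracy = 0.9752",
        "I1008 13:06:13.032243 8988 solver.cpp:414] Test net output #1: loss = 0.0869556 (* 1 = 0.0869556 loss)",
    ]
    for row in parse_test_results(sample):
        print(row)
```

To process the real log, pass an open file instead of the sample list, for example parse_test_results(open("a.log")).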

4.7 Accessing the server's jupyter notebook from a local browser

See: Setting up access to the server's jupyter notebook from a local browser (link)

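References

This article draws mainly on the following authors' posts; many thanks to them!

- Compiling and installing Caffe with Anaconda on Ubuntu 16.04

- A very detailed tutorial on installing caffe on Ubuntu 16.04 (two weeks of blood and tears)

- Record of the Caffe installation steps on Ubuntu 16.04 (extremely detailed)

- Caffe learning series (13): configuring the data visualization environment (python interface)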