“搜狗问问”问答语料爬虫

本人的毕业设计是构建一个基于机器学习的问答系统,需要用到大量的问题答案对,并且每个问题下都应有相应的分类标签。

鉴于网络上有分类标签的问答语料很少被人公开,本人亲自编写爬虫来抓取语料。

中文的问答网站有:百度知道、知乎、悟空问答、奇虎问答、搜狗问问等,通过筛选,最后我锁定“搜狗问问”网站。原因是:

- 不具备反爬虫机制或者说连最基本的频繁次数限制都没有。

- 每个问题都有一个大标签和多个小标签。

- URL的结构分明

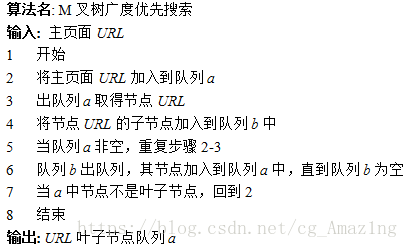

爬虫使用基于树的层次遍历算法:

使用Python编写,代码如下:

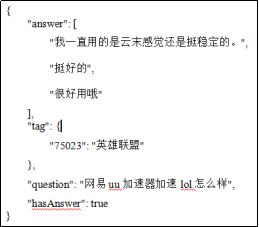

',str(answer),re.S) Answers.append(noPanswer[0]) QuestionAnswer['answer'] = Answers QuestionAnswer['question'] = question QuestionAnswer['tag'] = item['tag'] QuestionAnswer['hasAnswer'] = True except Exception,e: print str(e) else: QuestionAnswer['question'] = item['question'] QuestionAnswer['tag'] = item['tag'] QuestionAnswer['answer'] = '' QuestionAnswer['hasAnswer'] = False QuestionAnswers.append(QuestionAnswer) return QuestionAnswers #if __name__ == '__main__': baseurl = "https://wenwen.sogou.com/cate/tag?" rootUrl = 'https://wenwen.sogou.com' #问题分类标签 tagids = ['101','146','111','163614','50000010','121','93474','9996','148','50000032','135','125','9990','465873'] global smalltags smalltags = {} #遍历标签 for tagid in tagids: f = codecs.open('../../../origin_data/wenwen_corpus/QuestionAnswers/'+str(tagid)+'/test.json','a',encoding='utf-8') t = codecs.open('../../../origin_data/wenwen_corpus/QuestionAnswers/'+ str(tagid) +'/smalltag.json','a',encoding="utf-8") #每个标签拉n个页面 print u'标签:',tagid for i in range(5000,0,-1): tag = 'tag_id='+ tagid tp = '&tp=0' pno = '&pno='+str(i) ch = '&ch=ww.fly.fy'+str(i+1)+'#questionList' url = baseurl + tag + tp + tp + pno + ch print url QuestionAnswers = [] QuestionAnswers = LoadPage(url) if QuestionAnswers: for qa in QuestionAnswers: jsonStr = json.dumps(qa,ensure_ascii=False) f.write(jsonStr.encode("utf-8")+'\n') #保存tag json.dump(smalltags,t,ensure_ascii=False) t.close() f.close()#coding:utf-8 import urllib2 import re from bs4 import BeautifulSoup import codecs import sys import json stdi,stdo,stde=sys.stdin,sys.stdout,sys.stderr reload(sys) sys.stdin,sys.stdout,sys.stderr=stdi,stdo,stde sys.setdefaultencoding('utf-8') ''' 从搜狗问问爬取每个分类标签下的问题答案集,每个问题追加为json格式: { "answer": [ "我一直用的是云末感觉还是挺稳定的。" ], "tag": { "75023": "英雄联盟" }, "question": "网易uu加速器加速lol怎么样", "hasAnswer": true } ''' global rootUrl #加载页面内容 def LoadPage(url): try: user_agent = "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0 " headers = {"User-Agent" : user_agent} request = urllib2.Request(url,headers = headers) response = urllib2.urlopen(request) html = response.read() allTitles = [] allTitles = GetTitle(html) if allTitles: QuestionAnswers = [] QuestionAnswers = GetQuestionAnswers(allTitles) if QuestionAnswers: return QuestionAnswers except Exception,e: print str(e) #获取问题标题 def GetTitle(html): allTitles = [] myAttrs={'class':'sort-lst-tab'} bs = BeautifulSoup(html) titles = bs.find_all(name='a',attrs=myAttrs) for titleInfo in titles: item = {} titleInfoStr = str(titleInfo) questionInfo = re.findall(r'sort-tit">(.*?)',titleInfoStr,re.S) question = questionInfo[0] answerInfo = re.findall(r'sort-rgt-txt">(.*?)',titleInfoStr,re.S) if u'0个回答' in answerInfo: item['hasAnswer'] = False else: item['hasAnswer'] = True tags = re.findall(r'sort-tag" data-id=(.*?)/span>',titleInfoStr,re.S) tagInfo = {} for tag in tags: tagId = re.findall(r'"(.*?)">',tag,re.S) tagName = re.findall(r'>(.*?)<',tag,re.S) tagInfo[tagId[0]] = tagName[0] if tagId not in smalltags.keys(): smalltags[tagId[0]] = tagName[0] subUrl = re.findall(r'href="(.*?)"',titleInfoStr,re.S) url = rootUrl + subUrl[0] item['url'] = url item['question'] = question item['tag'] = tagInfo allTitles.append(item) return allTitles #获取问题和答案 def GetQuestionAnswers(allTitles): QuestionAnswers = [] for item in allTitles: QuestionAnswer = {} if item['hasAnswer']: Answers = [] url = item['url'] try: user_agent = "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0 " headers = {"User-Agent" : user_agent} request = urllib2.Request(url,headers = headers) response = urllib2.urlopen(request) html = response.read() questionAttrs={'id':'question_title_val'} answerAttrs={'class':'replay-info-txt answer_con'} bs = BeautifulSoup(html) #questions = bs.find_all(name='span',attrs=questionAttrs) questions = re.findall(r'question_title_val">(.*?)',html,re.S) question = questions[0] answers = bs.find_all(name='pre',attrs=answerAttrs) if answers: for answer in answers: answerStr = '' if "" in str(answer): segements = re.findall(r'

(.*?)

',str(answer),re.S) for seg in segements: answerStr = answerStr + str(seg) if answerStr.strip() != "": Answers.append(answerStr.strip()) else: noPanswer = re.findall(r'answer_con">(.*?)

爬取的数据格式: