Installing EFK (Elasticsearch, Fluentd, Kibana) on CentOS 7 to Collect Docker Logs

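Fluentd is not a dedicated log-file collector. It is a general-purpose stream-processing tool for collecting, transforming, and forwarding data, and log collection is simply one of its most typical use cases. What matters here is that Fluentd's log collection supports containers very thoroughly, far better than traditional log collectors such as Logstash. One reason is that the Fluentd community has developed several plugins specifically for collecting Docker log files. These plugins automatically attach container-related information to Docker logs once Fluentd has collected them. Among them, fluent-plugin-docker-metadata-filter is the one to recommend, because the information it provides is quite complete. Logstash still has a gap in this area: the only community plugin that can be found on GitHub stopped development a year ago. The other reason is that the logging drivers officially supported by Docker can, besides the default local directory, send logs directly to a remote log store or log collection service. Among log collection services, only Splunk and Fluentd are currently supported, and again there is no sign of Logstash or the other older open-source logging tools.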

Install Elasticsearch

Install a JDK or OpenJDK (OpenJDK is used here)

[root@elk elk]# yum install java-1.8.0-openjdk -y

Install Elasticsearch

[root@elk elk]# wget -c https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/rpm/elasticsearch/2.3.3/elasticsearch-2.3.3.rpm

[root@elk elk]# yum localinstall elasticsearch-2.3.3.rpm -y

.....

Installing : elasticsearch-2.3.3-1.noarch 1/1

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

Verifying : elasticsearch-2.3.3-1.noarch 1/1

Installed:

elasticsearch.noarch 0:2.3.3-1

[root@elk elk]# systemctl daemon-reload

[root@elk elk]# systemctl enable elasticsearch

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

[root@elk elk]# systemctl start elasticsearch

Update the firewall to open ports 9200 and 9300

[root@elk elk]# firewall-cmd --permanent --add-port={9200/tcp,9300/tcp}

success

[root@elk elk]# firewall-cmd --reload

success

[root@elk elk]# firewall-cmd --list-all

public (default, active)

interfaces: eno16777984 eno33557248

sources:

services: dhcpv6-client ssh

ports: 9200/tcp 9300/tcp

masquerade: no

forward-ports:

icmp-blocks:

rich rules:

Install Kibana

Install the Kibana RPM package

[root@elk elk]# wget https://download.elastic.co/kibana/kibana/kibana-4.5.1-1.x86_64.rpm

[root@elk elk]# yum localinstall kibana-4.5.1-1.x86_64.rpm -y

[root@elk elk]# systemctl enable kibana

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /usr/lib/systemd/system/kibana.service.

[root@elk elk]# systemctl start kibana

Update the firewall to expose tcp/5601 externally

[root@elk elk]# firewall-cmd --permanent --add-port=5601/tcp

success

[root@elk elk]# firewall-cmd --reload

success

[root@elk elk]# firewall-cmd --list-all

public (default, active)

interfaces: eno16777984 eno33557248

sources:

services: dhcpv6-client ssh

ports: 9200/tcp 9300/tcp 5601/tcp

masquerade: no

forward-ports:

icmp-blocks:

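rich rules:

Open a browser and verify that the Kibana home page loads: http://192.168.10.143:5601/

Install Fluentd

Pre-installation steps

Check the current maximum number of open files: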

$ ulimit -n

1024

If the value shown is 1024, it is too low and must be raised in the configuration file.
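The check above can also be scripted. The following is a minimal sketch in POSIX shell. The helper name `nofile_ok` is hypothetical, and the 65536 threshold is simply the value configured in the next step, not a limit enforced by td-agent itself.

```shell
#!/bin/sh
# nofile_ok LIMIT [MINIMUM]
# Succeeds (exit status 0) when LIMIT is "unlimited" or is at least MINIMUM.
# MINIMUM defaults to 65536, the value this guide writes to limits.conf.
# nofile_ok is a hypothetical helper name used only in this sketch.
nofile_ok() {
    if [ "$1" = "unlimited" ]; then
        return 0
    fi
    [ "$1" -ge "${2:-65536}" ]
}

# Check the soft open-file limit of the current shell.
current=$(ulimit -n)
if nofile_ok "$current"; then
    echo "open-file limit is sufficient: $current"
else
    echo "open-file limit is too low: $current"
fi
```

Run it once before editing the file and again in a fresh login session afterwards, because changes to limits.conf only apply to sessions started after the change.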

vi /etc/security/limits.conf

Set the following values:

root soft nofile 65536

root hard nofile 65536

* soft nofile 65536

* hard nofile 65536

Adjust the network parameters

vi /etc/sysctl.conf

Set the following values:

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.ip_local_port_range = 10240 65535

Then reboot the system.
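After the reboot, it may be worth confirming that the kernel actually picked up these values. The sketch below compares the running values reported by `sysctl -n` with the three settings listed above. The function names `sysctl_matches` and `report_sysctl` are hypothetical. Note that `net.ipv4.tcp_tw_recycle` was removed in Linux 4.12, so on newer kernels than the 3.10 series shipped with CentOS 7 that key will be reported as a mismatch.

```shell
#!/bin/sh
# sysctl_matches KEY EXPECTED
# Succeeds when the running kernel's value for KEY equals EXPECTED.
# Tabs are converted to spaces and runs of spaces are squeezed, because
# sysctl prints multi-value settings (such as ip_local_port_range)
# separated by a tab.
sysctl_matches() {
    actual=$(sysctl -n "$1" 2>/dev/null | tr '\t' ' ' | tr -s ' ')
    [ "$actual" = "$2" ]
}

# report_sysctl
# Prints one line per setting from this guide's /etc/sysctl.conf changes.
report_sysctl() {
    for entry in \
        "net.ipv4.tcp_tw_recycle=1" \
        "net.ipv4.tcp_tw_reuse=1" \
        "net.ipv4.ip_local_port_range=10240 65535"
    do
        key=${entry%%=*}        # text before the first "="
        expected=${entry#*=}    # text after the first "="
        if sysctl_matches "$key" "$expected"; then
            echo "ok:       $key = $expected"
        else
            echo "mismatch: $key (expected $expected)"
        fi
    done
}

report_sysctl
```

A mismatch line usually means the file was not saved, the system was not rebooted, or the key does not exist on the running kernel.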

Install Fluentd (td-agent)

Run the following command (it automatically installs td-agent, which is the packaged distribution of Fluentd)

$ curl -L https://toolbelt.treasuredata.com/sh/install-redhat-td-agent2.sh | sh

Start td-agent

$ /etc/init.d/td-agent start

Starting td-agent: [ OK ]

$ /etc/init.d/td-agent status

td-agent (pid 21678) is running...

or

$ systemctl start td-agent

$ systemctl status td-agent

A simple demo: test with HTTP logs

$ curl -X POST -d 'json={"json":"message"}' http://localhost:8888/debug.test

Install the required plugins

$ /usr/sbin/td-agent-gem install fluent-plugin-elasticsearch

$ /usr/sbin/td-agent-gem install fluent-plugin-typecast

$ /usr/sbin/td-agent-gem install fluent-plugin-secure-forward

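$ systemctl restart td-agent

Configure td-agent so that logs generated by Docker are sent to Elasticsearch

Edit the td-agent configuration

$ vi /etc/td-agent/td-agent.conf

Change the contents to the following (no port is specified here, so the default port 9200 is used)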

<source>

@type forward

port 24224

bind 0.0.0.0

</source>

<match alpine**>

@type elasticsearch

logstash_format true

flush_interval 10s # for testing

host 127.0.0.1

</match>

Restart td-agent

$ systemctl restart td-agent

Start a Docker container; alpine is used here for testing

$ docker run --log-driver=fluentd --log-opt tag="{{.ImageName}}/{{.Name}}/{{.ID}}" alpine:3.3 echo "helloWorld"

Check that Fluentd is forwarding data correctly

Check the td-agent log. If a line like the following appears at the end, the connection to Elasticsearch is working

2016-09-02 12:01:10 +0800 [info]: Connection opened to Elasticsearch cluster => {:host=>"127.0.0.1", :port=>9200, :scheme=>"http"}

Check what Elasticsearch has stored, using the following command

$ curl 'localhost:9200/_search?pretty'

{

"took" : 472,

"timed_out" : false,

"_shards" : {

"total" : 6,

"successful" : 6,

"failed" : 0

},

"hits" : {

"total" : 3,

"max_score" : 1.0,

"hits" : [ {

"_index" : ".kibana",

"_type" : "config",

"_id" : "4.5.4",

"_score" : 1.0,

"_source" : {

"buildNum" : 10000

}

}, {

"_index" : "logstash-2016.09.02",

"_type" : "fluentd",

"_id" : "AVbpDs1fOf1yFl8aApNq",

"_score" : 1.0,

"_source" : {

"log" : "helloWorld",

"container_id" : "8c58cf5be57e9905215ea8f8cd677e48594a384c30a2842facb6563c3f32097c",

"container_name" : "/sleepy_elion",

"source" : "stdout",

"@timestamp" : "2016-09-02T12:00:59+08:00"

}

}, {

"_index" : "logstash-2016.09.02",

"_type" : "fluentd",

"_id" : "AVbpDskPOf1yFl8aApNp",

"_score" : 1.0,

"_source" : {

"log" : "helloWorld",

"container_id" : "8c58cf5be57e9905215ea8f8cd677e48594a384c30a2842facb6563c3f32097c",

"container_name" : "/sleepy_elion",

"source" : "stdout",

"@timestamp" : "2016-09-02T12:00:59+08:00"

}

} ]

}

}

If the data just inserted appears in the result, it has been written to Elasticsearch.

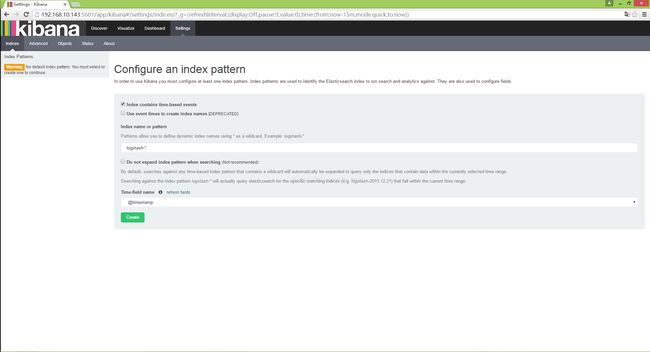
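The search above returns documents from every index, including `.kibana`. To narrow it to the test message, one option is to query only the daily index. The sketch below assumes the index naming seen in the output above (`logstash-YYYY.MM.DD`, produced by `logstash_format true`) and assumes the date part follows UTC, which is the plugin's default as far as I know. The helper names `logstash_index` and `search_log` are hypothetical.

```shell
#!/bin/sh
# logstash_index [DATE]
# Prints the daily index name for DATE (format YYYY-MM-DD).
# Without an argument, today's UTC date is used (assumed plugin default).
logstash_index() {
    day=${1:-$(date -u +%Y-%m-%d)}
    echo "logstash-$(echo "$day" | tr '-' '.')"
}

# search_log MESSAGE [DATE]
# Searches the "log" field of the daily index for MESSAGE.
# -G sends the data as a URL query string; --data-urlencode escapes it.
search_log() {
    curl -s -G "http://localhost:9200/$(logstash_index "$2")/_search" \
        --data-urlencode "q=log:$1" \
        -d pretty
}

# Example: the index that holds the sample documents shown above.
logstash_index 2016-09-02    # prints logstash-2016.09.02
```

Calling `search_log helloWorld` on the Elasticsearch host should then return only the documents written by the test container, provided it ran on the current UTC day.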

Create an index pattern in Kibana

Open the page: http://192.168.10.143:5601/

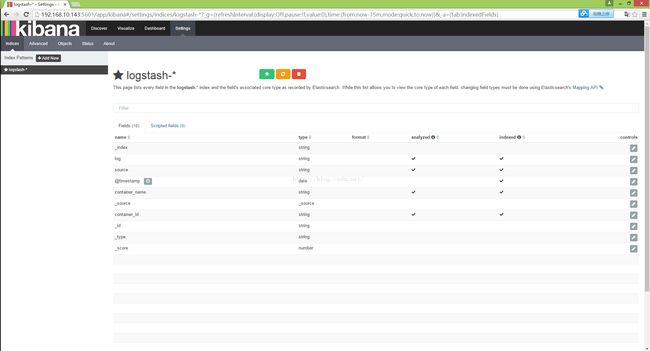

Click "Create"; the following settings are then shown

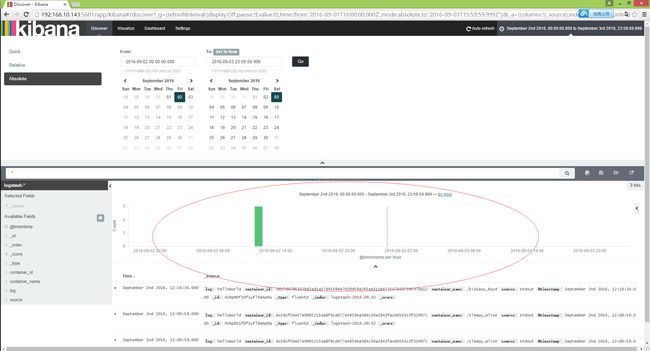

Adjust the query to view the data that was written

This completes the installation and testing of EFK.