CRUSH Map Customization: Worked Examples

**Customizing the CRUSH map: editing the configuration directly**

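When we deploy ceph with ceph-deploy, it generates a default CRUSH map for our configuration. The default CRUSH map is adequate for test and sandbox environments, but in a large production environment it is best to build a CRUSH map tailored to that environment. The steps are: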

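1. Extract the existing CRUSH map. With the -o option, ceph writes the compiled (binary) CRUSH map to the file you specify:

`ceph osd getcrushmap -o crushmap.bin`

2. Decompile the CRUSH map. The -d option decompiles it and writes the text form to the file given with -o:

`crushtool -d crushmap.bin -o crushmap-decompile`

3. Edit the decompiled CRUSH map with a text editor:

`vi crushmap-decompile`

4. Recompile the edited CRUSH map:

`crushtool -c crushmap-decompile -o crushmap-compiled`

5. Apply the new CRUSH map to the ceph cluster:

`ceph osd setcrushmap -i crushmap-compiled`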
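If you repeat this round trip often, it can be scripted. The following is a minimal Python sketch, assuming the `ceph` and `crushtool` binaries are on `PATH`; the file names are illustrative, and `crush_edit_commands`, `set_item_weight` and `run_pipeline` are hypothetical helpers, not part of Ceph. The regex-based edit assumes the decompiled layout in which each bucket starts with `<type> <name> {` and ends with a line beginning with `}`. By default it is a dry run that only prints the commands.

```python
import re
import subprocess

# Illustrative file names; adjust to taste.
COMPILED = "crushmap.bin"
DECOMPILED = "crushmap-decompile"
RECOMPILED = "crushmap-compiled"


def crush_edit_commands():
    """argv lists for the extract, decompile, compile and apply steps."""
    return {
        "extract": ["ceph", "osd", "getcrushmap", "-o", COMPILED],
        "decompile": ["crushtool", "-d", COMPILED, "-o", DECOMPILED],
        "compile": ["crushtool", "-c", DECOMPILED, "-o", RECOMPILED],
        "apply": ["ceph", "osd", "setcrushmap", "-i", RECOMPILED],
    }


def set_item_weight(crush_text, bucket, item, weight):
    """Hypothetical edit step: set `item <item> weight <w>` inside one bucket only."""
    # A bucket block runs from "<type> <bucket> {" to the next line starting with "}".
    block_re = re.compile(r"^\S+ " + re.escape(bucket) + r" \{.*?^\}", re.M | re.S)
    block = block_re.search(crush_text)
    if block is None:
        raise KeyError(f"bucket {bucket!r} not found")
    item_re = re.compile(r"(item " + re.escape(item) + r" weight )[0-9.]+")
    new_block, count = item_re.subn(rf"\g<1>{weight:.3f}", block.group(0))
    if count == 0:
        raise KeyError(f"item {item!r} not in bucket {bucket!r}")
    return crush_text[:block.start()] + new_block + crush_text[block.end():]


def run_pipeline(edit, dry_run=True):
    """extract -> decompile -> edit -> compile -> apply; only prints when dry_run."""
    cmds = crush_edit_commands()

    def step(name):
        print(" ".join(cmds[name]))
        if not dry_run:
            subprocess.run(cmds[name], check=True)

    step("extract")
    step("decompile")
    if not dry_run:
        # Step 3: apply the caller's edit function to the decompiled text.
        with open(DECOMPILED) as f:
            text = edit(f.read())
        with open(DECOMPILED, "w") as f:
            f.write(text)
    step("compile")
    step("apply")
```

For example, `run_pipeline(lambda text: set_item_weight(text, "node1", "osd.0", 0.5))` prints the four commands; pass `dry_run=False` only once the edit function has been checked against a copy of the map.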

Notes on editing:

1. A node bucket is declared with the following syntax:

```
[bucket-type] [bucket-name] {
    id [a unique negative numeric ID]
    weight [the relative capacity/capability of the item(s)]
    alg [the bucket type: uniform | list | tree | straw | straw2 ]
    hash [the hash type: 0 by default]
    item [item-name] weight [weight]
}
```
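To make the syntax concrete, here is a small Python sketch that models one bucket and renders it in the decompiled-map format. The `Bucket` class is hypothetical (not a Ceph API). It omits the optional `weight` line because a bucket's weight follows from its items, and it rejects non-negative IDs, which CRUSH uses for devices (OSDs).

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Bucket:
    """Hypothetical model of one bucket in the decompiled CRUSH map syntax."""
    type: str                      # bucket type, e.g. "host", "rack", "root"
    name: str                      # bucket name, e.g. "node1"
    id: int                        # unique negative ID
    alg: str = "straw"             # uniform | list | tree | straw | straw2
    hash: int = 0                  # 0 = rjenkins1
    items: List[Tuple[str, float]] = field(default_factory=list)  # (item-name, weight)

    def __post_init__(self):
        # Non-negative IDs belong to devices (OSDs); buckets use negative IDs.
        if self.id >= 0:
            raise ValueError(f"bucket id must be negative, got {self.id}")

    @property
    def weight(self):
        # The bucket's weight is the sum of its items' weights.
        return sum(w for _, w in self.items)

    def render(self):
        lines = [f"{self.type} {self.name} {{",
                 f"    id {self.id}",
                 f"    alg {self.alg}",
                 f"    hash {self.hash}"]
        lines += [f"    item {n} weight {w:.2f}" for n, w in self.items]
        lines.append("}")
        return "\n".join(lines)
```

`Bucket("host", "node1", -1, items=[("osd.0", 1.0), ("osd.1", 1.0)]).render()` reproduces the `host node1` block of the example that follows, and its `weight` is 2.0, the value the rack uses for `item node1`.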

Example:

```
host node1 {
    id -1
    alg straw
    hash 0
    item osd.0 weight 1.00
    item osd.1 weight 1.00
}

host node2 {
    id -2
    alg straw
    hash 0
    item osd.2 weight 1.00
    item osd.3 weight 1.00
}

rack rack1 {
    id -3
    alg straw
    hash 0
    item node1 weight 2.00
    item node2 weight 2.00
}
```

1.2 In the hierarchy, rack buckets sit above host buckets, and host buckets sit above OSDs.

A rack bucket therefore contains host (node) buckets rather than OSDs directly.
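This containment rule can be checked mechanically. A minimal Python sketch over a hypothetical in-memory copy of the example map (`EXAMPLE`, `RANK` and `check_hierarchy` are illustrative names, not Ceph APIs): it flags any item whose type is not below its parent's type and, assuming you want them equal as in the example, any sub-bucket whose item weight differs from the sum of its own items.

```python
# Hypothetical in-memory copy of the example map: bucket -> (type, [(item, weight)]).
EXAMPLE = {
    "node1": ("host", [("osd.0", 1.00), ("osd.1", 1.00)]),
    "node2": ("host", [("osd.2", 1.00), ("osd.3", 1.00)]),
    "rack1": ("rack", [("node1", 2.00), ("node2", 2.00)]),
}
# Type order used in this sketch: a child must have a lower rank than its parent.
RANK = {"osd": 0, "host": 1, "rack": 2, "root": 3}


def type_of(name, buckets):
    # Anything that is not a known bucket is treated as an OSD (leaf).
    return buckets[name][0] if name in buckets else "osd"


def check_hierarchy(buckets):
    """Return a list of problems; an empty list means the hierarchy looks sane."""
    problems = []
    for parent, (ptype, items) in buckets.items():
        for child, weight in items:
            ctype = type_of(child, buckets)
            if RANK[ctype] >= RANK[ptype]:
                problems.append(f"{child} ({ctype}) is not below {parent} ({ptype})")
            if child in buckets:
                total = sum(w for _, w in buckets[child][1])
                if abs(total - weight) > 1e-6:
                    problems.append(f"{parent}: {child} weight {weight} != sum of its items {total}")
    return problems
```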

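1.3 By convention, an OSD's weight is proportional to its capacity: 1.00 corresponds to 1 TB, 0.50 to 500 GB, and 3.00 to 3 TB.

Drives with a slower data transfer rate can be weighted slightly below this ratio, and faster drives slightly above it.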
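The capacity rule works out as follows. `crush_weight` and `host_weight` are hypothetical helpers, and `speed_factor` is an illustrative knob for the slower/faster adjustment described above, not a Ceph setting. The sketch follows the text's 1.00 per TB; by default an OSD registers itself with a weight equal to its size in TiB (2^40 bytes), so pass `unit=2**40` to match that.

```python
TB = 10**12  # the text's convention: weight 1.00 per TB


def crush_weight(capacity_bytes, speed_factor=1.0, unit=TB):
    """Capacity-based CRUSH weight, optionally nudged by a hypothetical speed factor.

    speed_factor < 1.0 for slower drives, > 1.0 for faster ones; 1.0 keeps the
    plain capacity ratio.
    """
    if capacity_bytes <= 0 or speed_factor <= 0:
        raise ValueError("capacity and speed_factor must be positive")
    return round(capacity_bytes / unit * speed_factor, 3)


def host_weight(osd_weights):
    """A host bucket's weight is the sum of its OSD weights."""
    return round(sum(osd_weights.values()), 3)
```

So a 500 GB drive gets `crush_weight(500 * 10**9) == 0.5`, and a host holding a 1 TB and a 500 GB OSD has weight 1.5.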

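2. Rules: the rules a pool follows when placing its data. By default, each default pool is assigned a ruleset.

In most cases you do not need to modify the default rules when you create a new pool; the default ruleset is 0.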

Syntax:

```
rule <rule-name> {
    ruleset <ruleset-number>
    type [ replicated | erasure ]
    min_size <min-size>
    max_size <max-size>
    step take <bucket-name>
    step [choose|chooseleaf] [firstn|indep] <N> type <bucket-type>
    step emit
}
```
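The `<N>` in a `choose`/`chooseleaf` step is relative to the pool's replica count: 0 means as many buckets as the pool has replicas, a positive N means N buckets, and a negative N means that many fewer than the replica count. `min_size`/`max_size` restrict the pool sizes a rule is used for. The sketch below encodes those two rules; the function names are hypothetical, and capping a positive N at the pool size is a simplifying assumption.

```python
def rule_applies(pool_size, min_size, max_size):
    """A rule is only used for pools whose replica count is within [min_size, max_size]."""
    return min_size <= pool_size <= max_size


def replicas_for_step(n, pool_size):
    """How many buckets `step choose/chooseleaf firstn <n> ...` selects.

    n == 0: as many as the pool has replicas
    n  > 0: n buckets (capped at pool_size here, an assumption of this sketch)
    n  < 0: pool_size - |n| buckets
    """
    if n <= 0:
        return max(pool_size + n, 0)
    return min(n, pool_size)
```

With the `min_size 1` / `max_size 10` rules below and a 3-replica pool, `rule_applies(3, 1, 10)` holds and `firstn 0` resolves to 3 hosts.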

Example:

```
# rules
rule data {
    ruleset 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}

rule metadata {
    ruleset 1
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}

rule rbd {
    ruleset 2
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
```
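The `step take default` / `step chooseleaf firstn 0 type host` / `step emit` sequence can be pictured with a toy model: start at the root, pick as many distinct hosts as the pool has replicas, then descend to one OSD (leaf) in each. The Python sketch below assumes a hypothetical three-host topology and uses a straw2-style weighted draw with SHA-256 standing in for Ceph's rjenkins1 hash, so it will not reproduce Ceph's real placements; it only illustrates the shape of the algorithm (weighted, deterministic per object, one replica per host, retry on collision). `num_rep` is the already-resolved replica count.

```python
import hashlib
import math

# Hypothetical three-host topology (node3 added to the earlier example).
HOSTS = {
    "node1": {"osd.0": 1.0, "osd.1": 1.0},
    "node2": {"osd.2": 1.0, "osd.3": 1.0},
    "node3": {"osd.4": 1.0, "osd.5": 1.0},
}


def _unit_hash(*parts):
    """Deterministic value in (0, 1]; SHA-256 stands in for Ceph's rjenkins1 hash."""
    digest = hashlib.sha256("/".join(map(str, parts)).encode()).digest()
    return (int.from_bytes(digest[:8], "big") + 1) / 2**64


def straw2_pick(items, x, r):
    """straw2-style draw: each item scores ln(u) / weight and the highest score wins.

    A heavier item's score tends to be closer to 0, so it wins proportionally more often.
    """
    return max(items, key=lambda name: math.log(_unit_hash(x, r, name)) / items[name])


def chooseleaf_firstn_host(x, num_rep, hosts=HOSTS, max_tries=50):
    """Toy version of `step chooseleaf firstn <num_rep> type host` + `step emit`.

    Picks num_rep distinct hosts, then one OSD (leaf) inside each.
    """
    host_weights = {h: sum(osds.values()) for h, osds in hosts.items()}
    chosen, result = [], []
    for r in range(max_tries):
        if len(result) == num_rep:
            break
        host = straw2_pick(host_weights, x, r)
        if host in chosen:  # collision with an earlier replica: retry with the next r
            continue
        chosen.append(host)
        result.append(straw2_pick(hosts[host], x, r))
    return result
```

Because every replica lands on a different host, losing one host costs at most one copy of each object, which is why the default rules use `type host`.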

3. Adding buckets and rules

As an example, we add two special rules: one serving an SSD pool and one serving a SAS pool.

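3.1 Add a bucket for the SSD pool. The bucket type must be one of the types declared in the map's `# types` section; `root` is the top-level type in the default maps:

```
root ssd {
    id -5       # do not change unnecessarily
    alg straw
    hash 0      # rjenkins1
    item osd.0 weight 1.000
    item osd.1 weight 1.000
}
```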

Then add the corresponding rule:

```
rule ssd {
    ruleset 3
    type replicated
    min_size 1
    max_size 10
    step take ssd
    step choose firstn 0 type osd
    step emit
}
```

3.2 Likewise, add a bucket for the SAS pool:

```
root sas {
    id -6       # do not change unnecessarily
    alg straw
    hash 0      # rjenkins1
    item osd.2 weight 1.000
    item osd.3 weight 1.000
}
```

The corresponding rule:

```
rule sas {
    ruleset 4
    type replicated
    min_size 1
    max_size 10
    step take sas
    step choose firstn 0 type osd
    step emit
}
```

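Set a pool to use the ssd rule. The number after `crush_ruleset` is the number on the `ruleset` line of that rule (3 for the ssd rule above):

`ceph osd pool set newpoolname crush_ruleset 3`

On Luminous and later releases the pool property is `crush_rule` and takes the rule name instead, e.g. `ceph osd pool set newpoolname crush_rule ssd`.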
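Rather than reading the number off by hand, it can be looked up in the decompiled map. A minimal Python sketch, assuming the decompiled text layout shown above (a rule block starting with `rule <name> {` and ending with `}` at the start of a line); `ruleset_of` and `set_pool_ruleset_cmd` are hypothetical helpers, and the command they build uses the pre-Luminous `crush_ruleset` property.

```python
import re


def ruleset_of(crush_text, rule_name):
    """Return the ruleset number declared in `rule <rule_name> { ... }`."""
    rule = re.search(r"^rule\s+" + re.escape(rule_name) + r"\s*\{(.*?)^\}",
                     crush_text, re.M | re.S)
    if rule is None:
        raise KeyError(f"rule {rule_name!r} not found")
    num = re.search(r"^\s*ruleset\s+(\d+)", rule.group(1), re.M)
    if num is None:
        raise ValueError(f"rule {rule_name!r} has no ruleset line")
    return int(num.group(1))


def set_pool_ruleset_cmd(pool, crush_text, rule_name):
    """argv for `ceph osd pool set <pool> crush_ruleset <n>` (pre-Luminous form)."""
    return ["ceph", "osd", "pool", "set", pool, "crush_ruleset",
            str(ruleset_of(crush_text, rule_name))]
```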

**Fine-tuning the CRUSH map with commands**

Add or move an OSD:

`ceph osd crush set {id-or-name} {weight} root={root-name} [{bucket-type}={bucket-name} ...]`

Change an OSD's weight:

`ceph osd crush reweight {name} {weight}`

Remove an OSD:

`ceph osd crush remove {name}`

Add a bucket:

`ceph osd crush add-bucket {bucket-name} {bucket-type}`

Move a bucket:

`ceph osd crush move {bucket-name} {bucket-type}={bucket-name} [...]`

Remove a bucket (the bucket must be empty first):

`ceph osd crush remove {bucket-name}`
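Because a bucket can only be removed once it is empty, removing a host bucket is a two-stage job: take its items out first, then remove the bucket. The Python sketch below is a hypothetical helper that only builds argv lists. It passes the bucket as the optional ancestor argument of `ceph osd crush remove`, which limits each removal to that bucket; this matters when an OSD also appears elsewhere, as osd.2 and osd.3 do in the earlier example (under host node2 and under the sas bucket).

```python
def remove_bucket_commands(bucket, items):
    """argv lists that empty `bucket` and then remove it from the CRUSH map.

    Each item is removed with `bucket` as the ancestor argument, so an OSD that
    also appears under another bucket stays there.
    """
    cmds = [["ceph", "osd", "crush", "remove", item, bucket] for item in items]
    # Only once the bucket is empty can the bucket itself be removed.
    cmds.append(["ceph", "osd", "crush", "remove", bucket])
    return cmds
```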

Example: moving buckets

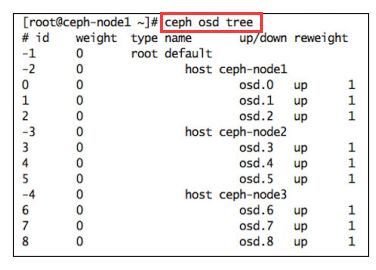

1. Run `ceph osd tree` to view the current cluster layout (figure cluster1).

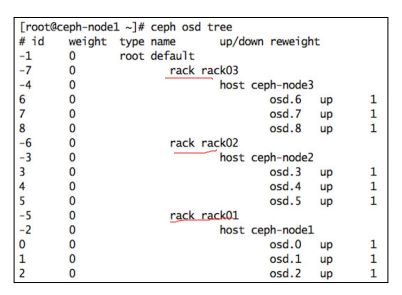

2. Add racks to the current layout:

```
ceph osd crush add-bucket rack01 rack
ceph osd crush add-bucket rack02 rack
ceph osd crush add-bucket rack03 rack
```

3. Move each host under its rack:

```
ceph osd crush move ceph-node1 rack=rack01
ceph osd crush move ceph-node2 rack=rack02
ceph osd crush move ceph-node3 rack=rack03
```

4. Move all racks under the default root:

```
ceph osd crush move rack03 root=default
ceph osd crush move rack02 root=default
ceph osd crush move rack01 root=default
```
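For more hosts, the same three stages (create racks, move hosts under racks, move racks under the root) can be generated from a host-to-rack mapping. A minimal Python sketch; `rack_layout_commands` and `run` are hypothetical helpers, and `run` only prints the commands unless `dry_run=False`.

```python
import subprocess


def rack_layout_commands(host_to_rack, root="default"):
    """Build the add-bucket/move commands for a {host: rack} mapping."""
    racks = sorted(set(host_to_rack.values()))
    # Stage 1: create each rack bucket.
    cmds = [["ceph", "osd", "crush", "add-bucket", rack, "rack"] for rack in racks]
    # Stage 2: move every host under its rack.
    cmds += [["ceph", "osd", "crush", "move", host, f"rack={rack}"]
             for host, rack in sorted(host_to_rack.items())]
    # Stage 3: move every rack under the root.
    cmds += [["ceph", "osd", "crush", "move", rack, f"root={root}"] for rack in racks]
    return cmds


def run(cmds, dry_run=True):
    for cmd in cmds:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(rack_layout_commands({"ceph-node1": "rack01",
                              "ceph-node2": "rack02",
                              "ceph-node3": "rack03"}))
```

The order in which racks are moved under the root does not matter; the sketch simply sorts them.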

5. Run `ceph osd tree` again to view the updated cluster layout (figure cluster2).


Reposting of this blog is permitted, but please keep the original source: http://blog.csdn.net/heivy/article/details/50592244