Pytorch版DCGAN图像生成技术

参考:

https://github.com/devnag/pytorch-generative-adversarial-networks/blob/master/gan_pytorch.py

https://blog.csdn.net/sunqiande88/article/details/80219842

https://blog.csdn.net/xiaoxifei/article/details/87797935

目录结构:

数据集下载:链接: https://pan.baidu.com/s/1uUl8QQcxfBkFrX3L-_Yuxg 提取码: gef6

两个文件:train.py, model.py

model.py

import torch.nn as nn

# 定义生成器网络G

class NetG(nn.Module):

def __init__(self, ngf, nz):

super(NetG, self).__init__()

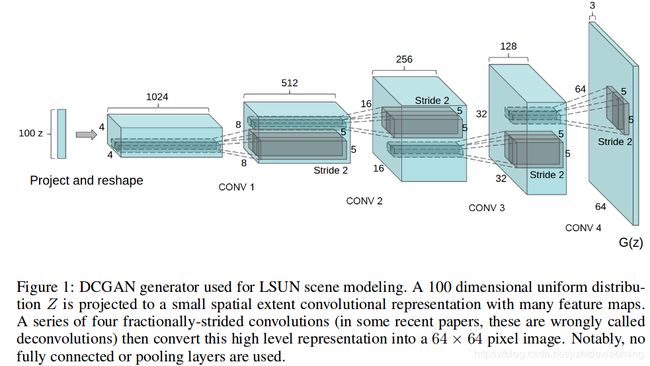

# layer1输入的是一个100x1x1的随机噪声, 输出尺寸(ngf*8)x4x4

self.layer1 = nn.Sequential(

nn.ConvTranspose2d(nz, ngf * 8, kernel_size=4, stride=1, padding=0, bias=False),

nn.BatchNorm2d(ngf * 8),

nn.ReLU(inplace=True)

)

# layer2输出尺寸(ngf*4)x8x8

self.layer2 = nn.Sequential(

nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),

nn.BatchNorm2d(ngf * 4),

nn.ReLU(inplace=True)

) # layer3输出尺寸(ngf*2)x16x16

self.layer3 = nn.Sequential(

nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),

nn.BatchNorm2d(ngf * 2),

nn.ReLU(inplace=True)

)

# layer4输出尺寸(ngf)x32x32

self.layer4 = nn.Sequential(

nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),

nn.BatchNorm2d(ngf),

nn.ReLU(inplace=True)

)

# layer5输出尺寸 3x96x96

self.layer5 = nn.Sequential(

nn.ConvTranspose2d(ngf, 3, 5, 3, 1, bias=False),

nn.Tanh()

)

# 定义NetG的前向传播

def forward(self, x):

out = self.layer1(x)

out = self.layer2(out)

out = self.layer3(out)

out = self.layer4(out)

out = self.layer5(out)

return out

# 定义鉴别器网络D

class NetD(nn.Module):

def __init__(self, ndf):

super(NetD, self).__init__() # 对继承自父类的属性进行初始化

# layer1 输入 3 x 96 x 96, 输出 (ndf) x 32 x 32

self.layer1 = nn.Sequential(

nn.Conv2d(3, ndf, kernel_size=5, stride=3, padding=1, bias=False),

nn.BatchNorm2d(ndf),

nn.LeakyReLU(0.2, inplace=True)

)

# layer2 输出 (ndf*2) x 16 x 16

self.layer2 = nn.Sequential(

nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),

nn.BatchNorm2d(ndf * 2),

nn.LeakyReLU(0.2, inplace=True)

)

# layer3 输出 (ndf*4) x 8 x 8

self.layer3 = nn.Sequential(

nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),

nn.BatchNorm2d(ndf * 4),

nn.LeakyReLU(0.2, inplace=True)

)

# layer4 输出 (ndf*8) x 4 x 4

self.layer4 = nn.Sequential(

nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),

nn.BatchNorm2d(ndf * 8),

nn.LeakyReLU(0.2, inplace=True)

)

# layer5 输出一个数(概率)

self.layer5 = nn.Sequential(

nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),

nn.Sigmoid() # 也是一个激活函数,二分类问题中,是真是假

# sigmoid可以班实数映射到【0,1】,作为概率值,

# 多分类用softmax函数

)

# 定义NetD的前向传播

def forward(self,x):

out = self.layer1(x)

out = self.layer2(out)

out = self.layer3(out)

out = self.layer4(out)

out = self.layer5(out)

return out

train.py

import argparse

import torch

import torchvision

import torchvision.utils as vutils

import torch.nn as nn

from torch.autograd import Variable

from model import NetD, NetG

parser = argparse.ArgumentParser()

parser.add_argument('--batchSize', type=int, default=64)

parser.add_argument('--imageSize', type=int, default=96)

parser.add_argument('--nz', type=int, default=100, help='size of the latent z vector')

parser.add_argument('--ngf', type=int, default=64)

parser.add_argument('--ndf', type=int, default=64)

parser.add_argument('--epoch', type=int, default=25, help='number of epochs to train for')

parser.add_argument('--lr', type=float, default=0.0002, help='learning rate, default=0.0002')

parser.add_argument('--beta1', type=float, default=0.5, help='beta1 for adam. default=0.5')

parser.add_argument('--data_path', default='data/', help='folder to train data')

parser.add_argument('--outf', default='imgs/', help='folder to output images and model checkpoints')

opt = parser.parse_args()

# 定义是否使用GPU

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 图像读入与预处理

transforms = torchvision.transforms.Compose([

torchvision.transforms.Scale(opt.imageSize),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)), ])

dataset = torchvision.datasets.ImageFolder(opt.data_path, transform=transforms)

dataloader = torch.utils.data.DataLoader(

dataset=dataset,

batch_size=opt.batchSize,

shuffle=True,

drop_last=True,

)

netG = NetG(opt.ngf, opt.nz).to(device)

netD = NetD(opt.ndf).to(device)

criterion = nn.BCELoss()

optimizerG = torch.optim.Adam(netG.parameters(), lr=opt.lr, betas=(opt.beta1, 0.999))

optimizerD = torch.optim.Adam(netD.parameters(), lr=opt.lr, betas=(opt.beta1, 0.999))

for epoch in range(1, opt.epoch + 1):

for i, (imgs, _) in enumerate(dataloader): # 每次epoch,遍历所有图片,共800个batch

# 1,固定生成器G,训练鉴别器D

real_label = Variable(torch.ones(opt.batchSize)).cuda()

fake_label = Variable(torch.zeros(opt.batchSize)).cuda()

netD.zero_grad()

# 让D尽可能的把真图片判别为1

real_imgs = Variable(imgs.to(device))

real_output = netD(real_imgs)

d_real_loss = criterion(real_output, real_label)

real_scores = real_output

# d_real_loss.backward() # compute/store gradients, but don't change params

# 让D尽可能把假图片判别为0

noise = Variable(torch.randn(opt.batchSize, opt.nz, 1, 1)).to(device)

noise = noise.to(device)

fake_imgs = netG(noise) # 生成假图

fake_output = netD(fake_imgs.detach()) # 避免梯度传到G,因为G不用更新, detach分离

d_fake_loss = criterion(fake_output, fake_label)

fake_scores = fake_output

# d_fake_loss.backward()

d_total_loss = d_fake_loss + d_real_loss

netG.zero_grad()

d_total_loss.backward() # 反向传播,计算梯度

optimizerD.step() # Only optimizes D's parameters; changes based on stored gradients from backward()

# 2,固定鉴别器D,训练生成器G

fake_output = netD(fake_imgs)

g_fake_loss = criterion(fake_output, real_label)

g_fake_loss.backward() # 反向传播,计算梯度

optimizerG.step() # 梯度信息来更新网络的参数,Only optimizes G's parameters

print('[%d/%d][%d/%d] real_scores: %.3f fake_scores %.3f'

% (epoch, opt.epoch, i, len(dataloader), real_scores.data.mean(), fake_scores.data.mean()))

if i % 100 == 0:

vutils.save_image(fake_imgs.data,

'%s/fake_samples_epoch_%03d_batch_i_%03d.png' % (opt.outf, epoch, i),

normalize=True)

# vutils.save_image(fake.data,

# '%s/fake_samples_epoch_%03d.png' % (opt.outf, epoch),

# normalize=True)

torch.save(netG.state_dict(), '%s/netG_%03d.pth' % (opt.outf, epoch))

torch.save(netD.state_dict(), '%s/netD_%03d.pth' % (opt.outf, epoch))

注意:训练D的时候,使用下面的代码,通过分离(detach)G网络生成的fake_imgs,从而固定住G网络

fake_output = netD(fake_imgs.detach())训练生成器的时候,虽然fake_ouput是讲过D网络判别,然后g_fake_loss反向传播计算梯度,但是通过下面代码只更新了G网络的参数:

# optimizerG = torch.optim.Adam(netG.parameters(), lr=opt.lr, betas=(opt.beta1, 0.999))

optimizerG.step() # Only optimizes G's parameters效果

epoch1:

epoch13: