PYTORCH BUG 总结!!!!!!!!!

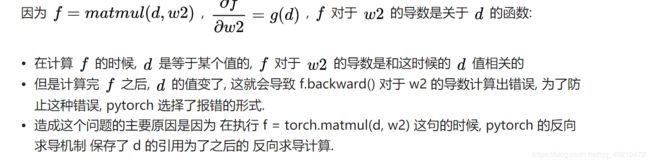

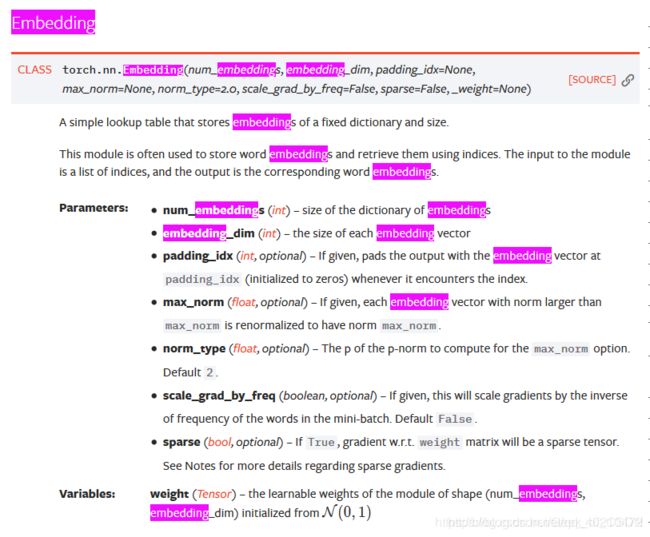

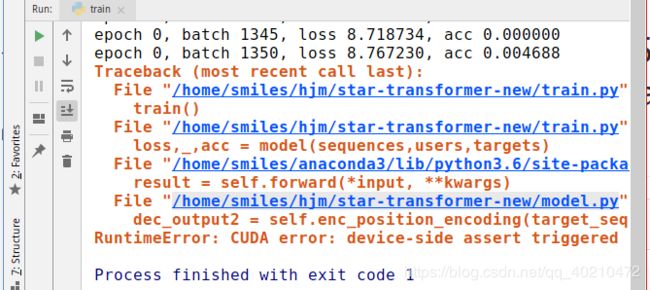

cuda error:device-side assert triggered

1、根据上图中的bug,查找可得,embedding的num_embeddings 是要设置成你的字典的大小,但是要记住,一定是vocab_size + 1,而不是vocab_size,即要设置为字典大小+1。

bug 2 :cuda out of memory:

这个问题出现有很多原因:先贴一个最近遇到的

losses.append(loss.item)

使用上语句报显存过多的错。将上语句改为下语句则错误消失。

losses.append(loss.item())

原因:如果在累加损失时未将其转换为Python数字,则可能出现程序内存使用量增加的情况。这是因为上面表达式的右侧原本是一个Python浮点数,而它现在是一个零维张量。因此,总损失累加了张量和它们的梯度历史,这可能会产生很大的autograd 图,耗费内存和计算资源。

BUG3 :error :torch.dtype object has no attribute 'type

出现这个问题是 :

`def get_mrr(indices,targets):

tmp = targets.view(-1,1)

targets = tmp.expand_as(indices)

hits = (targets == indices).nonzero()

ranks = hits[:,-1] + 1

ranks = ranks.float()

rranks = torch.reciprocal(ranks)

mrr = torch.sum(rranks).data/targets.size(0)

return mrr`

注意: 上方的mrr 是torch.tensor

mean_mrr = np.mean(mrrs)

在进行上述操作的时候报错:error :torch.dtype object has no attribute 'type

- [ ]

原因:Apparently Numpy is not able to compute the average of one list in self.stats.

torch.tensor是不能用numpy进行均值计算的。

所以解决方法是取scalar

也就是mrr.item()

BUG4 :RuntimeError: one of the variables needed for gradient computation has been modified by an inplace o

import torch

x = torch.FloatTensor([[1., 2.]])

w1 = torch.FloatTensor([[2.], [1.]])

w2 = torch.FloatTensor([3.])

w1.requires_grad = True

w2.requires_grad = True

d = torch.matmul(x, w1)

f = torch.matmul(d, w2)

d[:] = 1 # 因为这句, 代码报错了 RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation

f.backward()

import torch

x = torch.FloatTensor([[1., 2.]])

w1 = torch.FloatTensor([[2.], [1.]])

w2 = torch.FloatTensor([3.])

w1.requires_grad = True

w2.requires_grad = True

d = torch.matmul(x, w1)

d[:] = 1 # 稍微调换一下位置, 就没有问题了

f = torch.matmul(d, w2)

f.backward()

bug 5 cant-optimize-a-non-leaf-tensor

a = torch.rand(10, requires_grad=True) # a is a leaf variable

a = torch.rand(10, requires_grad=True).double() # a is NOT a leaf variable as it was created by the operation that cast a float tensor into a double tensor

a = torch.rand(10).requires_grad_().double() # equivalent to the formulation just above: not a leaf variable

a = torch.rand(10).double() # a does not require gradients and has not operation creating it (tracked by the autograd engine).

a = torch.rand(10).doube().requires_grad_() # a requires gradients and has no operations creating it: it's a leaf variable and can be given to an optimizer.

a = torch.rand(10, requires_grad=True, device="cuda") # a requires grad, has not operation creating it: it's a leaf variable as well and can be given to an optimizer

我的错误是出现在

user_features = torch.randn(n_users, latent_vectors, requires_grad=True).to(device)

改后

user_features = torch.randn(n_users, latent_vectors, requires_grad=True,device=“cuda”)

就解决了这个问题 参考链接 https://discuss.pytorch.org/t/valueerror-cant-optimize-a-non-leaf-tensor/21751/1

A leaf Variable is a variable that is at the beginning of the graph. That means that no operation tracked by the autograd engine created it.

This is what you want when you optimize neural networks as it is usually your weights or input.

也就是叶子变量是位于graph开头的变量。这意味着autograd引擎跟踪的操作没有创建它。

当您优化神经网络时,这就是您想要的,因为它通常是您的权重或输入。

所以解决这个问题我们就是要生成一个leaf variable