hive学习5:自定义函数之json对象转换hive数据存储

0元数据,上传到服务器上:

{"movie":"2717","rate":"3","timeStamp":"978298196","uid":"2"}

{"movie":"2571","rate":"4","timeStamp":"978299773","uid":"2"}

{"movie":"1917","rate":"3","timeStamp":"978300174","uid":"2"}

{"movie":"2396","rate":"4","timeStamp":"978299641","uid":"2"}

{"movie":"3735","rate":"3","timeStamp":"978298814","uid":"2"}

{"movie":"1953","rate":"4","timeStamp":"978298775","uid":"2"}

{"movie":"1597","rate":"3","timeStamp":"978300025","uid":"2"}

{"movie":"3809","rate":"3","timeStamp":"978299712","uid":"2"}

{"movie":"1954","rate":"5","timeStamp":"978298841","uid":"2"}

{"movie":"1955","rate":"4","timeStamp":"978299200","uid":"2"}

{"movie":"235","rate":"3","timeStamp":"978299351","uid":"2"}

{"movie":"1124","rate":"5","timeStamp":"978299418","uid":"2"}

{"movie":"1957","rate":"5","timeStamp":"978298750","uid":"2"}

{"movie":"163","rate":"4","timeStamp":"978299809","uid":"2"}#创建t_json表

0: jdbc:hive2://localhost:10000> create table t_json(line string)

0: jdbc:hive2://localhost:10000> row format delimited;

#导入数据

0: jdbc:hive2://localhost:10000> load data local inpath '/home/hadoop/data.json' into table t_json;

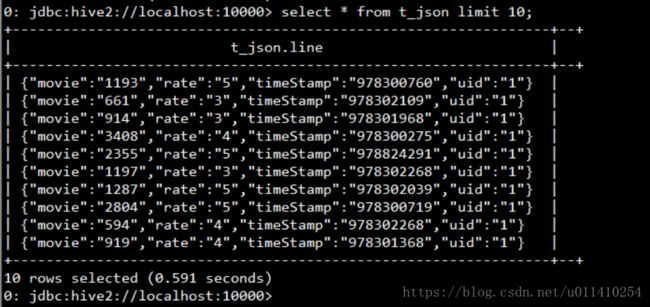

导入后结果:

以上数据初始化话完成。

1、创建java项目。不介绍,项目创建好后,可以加入pom依赖,或者直接把hive依赖的jar包拷贝项目里,

2、创建java类JsonParser

package com.zsy.hive.udf;

import java.io.IOException;

import org.apache.hadoop.hive.ql.exec.UDF;

import parquet.org.codehaus.jackson.map.ObjectMapper;

public class JsonParser extends UDF {

ObjectMapper objectMapper = new ObjectMapper();

public String evaluate(String jsonLine) {

MovieRateBean readValue;

try {

readValue = objectMapper.readValue(jsonLine, MovieRateBean.class);

return readValue.toString();

} catch (IOException e) {

}

return "";

}

}3、创建bean对象:

package com.zsy.hive.udf;

public class MovieRateBean {

private String movie;

private String rate;

private String timeStamp;

private String uid;

public String getMovie() {

return movie;

}

public void setMovie(String movie) {

this.movie = movie;

}

public String getRate() {

return rate;

}

public void setRate(String rate) {

this.rate = rate;

}

public String getTimeStamp() {

return timeStamp;

}

public void setTimeStamp(String timeStamp) {

this.timeStamp = timeStamp;

}

public String getUid() {

return uid;

}

public void setUid(String uid) {

this.uid = uid;

}

@Override

public String toString() {

return movie + "," + rate + "," + timeStamp + "," + uid;

}

}

4、打包,放到hive所在的服务器。

5、执行命令,把jar包加入classpath里:

0: jdbc:hive2://localhost:10000> add JAR /home/hadoop/udf.jar;6、创建函数:

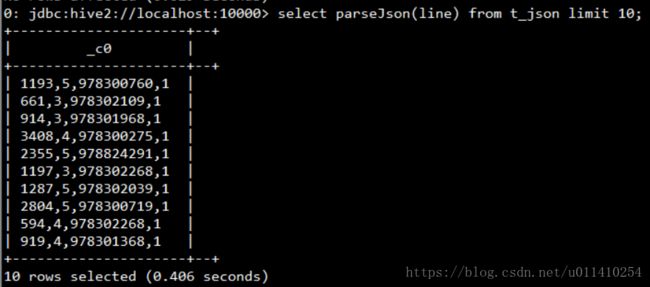

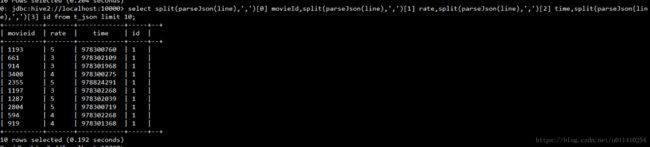

0: jdbc:hive2://localhost:10000> create temporary function parseJson as 'com.zsy.hive.udf.JsonParser';跟进逗号进行分割:select split(parseJson(line),',')[0] movieId,split(parseJson(line),',')[1] rate,split(parseJson(line),',')[2] time,split(parseJson(line),',')[3] id from t_json limit 10;

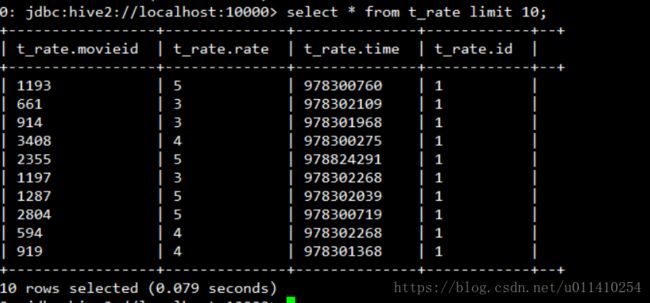

查询的结果保存到hive表里:

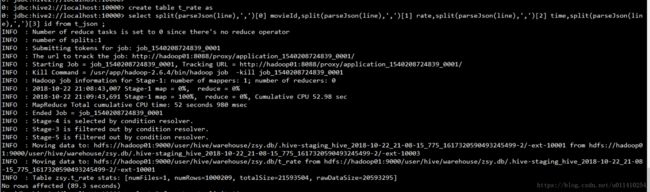

create table t_rate as select split(parseJson(line),',')[0] movieId,split(parseJson(line),',')[1] rate,split(parseJson(line),',')[2] time,split(parseJson(line),',')[3] id from t_json ;

运行过程:

结果:

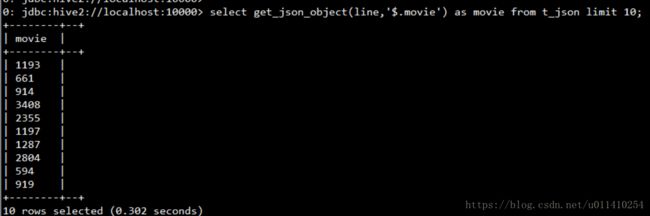

以上是通过自定义hive函数来解决的方式,其实hive有一个内置函数,接下来演示下内置函数:

select get_json_object(line,'$.movie') as movie from t_json limit 10;

内置的函数只能实现简单的json格式,复杂的json格式还是要定义函数来实现