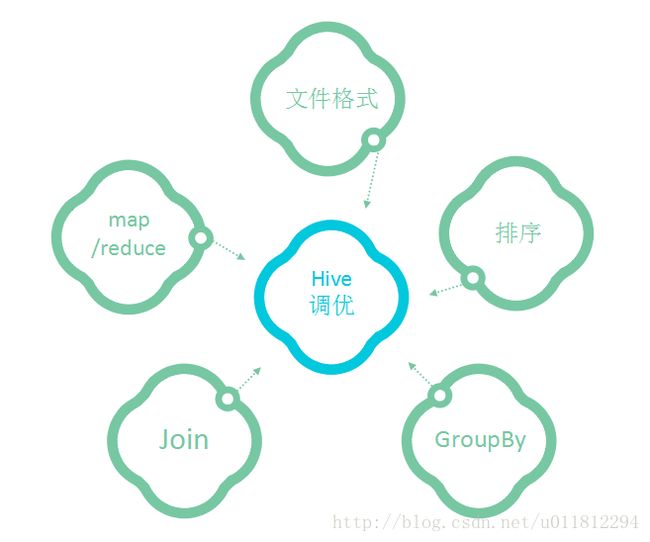

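Hive Tuning in Practice

1 Choosing a file format

ORC is a better fit for Hive than TextFile: in the tests below, queries on the ORC table used roughly 20-40% less CPU time.

Example 1:

youtube1 is stored as TextFile; youtube3 is stored as ORC.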

hive> select videoId,uploader,age,views from youtube1 order by views limit 10;

Query ID = hadoop_20170710085454_6768a540-a0b3-4d98-92a0-f97d4eff8b42

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0070, Tracking URL = http://master:8088/proxy/application_1499153664137_0070/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0070

Hadoop job information for Stage-1: number of mappers: 6; number of reducers: 1

2017-07-10 08:55:18,434 Stage-1 map = 0%, reduce = 0%

2017-07-10 08:55:56,924 Stage-1 map = 1%, reduce = 0%, Cumulative CPU 11.99 sec

...

2017-07-10 08:56:33,719 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 62.7 sec

MapReduce Total cumulative CPU time: 1 minutes 2 seconds 700 msec

Ended Job = job_1499153664137_0070

MapReduce Jobs Launched:

Stage-Stage-1: Map: 6 Reduce: 1 Cumulative CPU: 62.7 sec HDFS Read: 1086704175 HDFS Write: 323 SUCCESS

Total MapReduce CPU Time Spent: 1 minutes 2 seconds 700 msec

OK

P4c-EViSRsw ERNESTINEbrowning 1240 0

woMdGKHIg3o Maxwell739 1240 0

_jVH58-X4C4 nelenajolly 1240 0

k1iTl0Kh4DQ rachellelala 1240 0

8LdPZ_n1S4c GohanxVidel21 1240 0

PSex2TAkQC8 Qingy3 1254 0

YC20zP9p_wI aimeenmegan 1254 0

3XduLiQMMTM marshallgovindan 1240 0

IjeXG6yXXZ4 SenateurDupont1973 1254 0

sw4XgF1zkXE bablooian 1240 0

Time taken: 119.739 seconds, Fetched: 10 row(s)

hive> select videoId,uploader,age,views from youtube3 order by views limit 10;

Query ID = hadoop_20170710085959_e6d66799-0a8a-4696-bf93-a0abc3f00de0

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0071, Tracking URL = http://master:8088/proxy/application_1499153664137_0071/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0071

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 1

2017-07-10 08:59:43,472 Stage-1 map = 0%, reduce = 0%

2017-07-10 09:00:12,555 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 2.99 sec

...

2017-07-10 09:00:52,499 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 50.2 sec

MapReduce Total cumulative CPU time: 50 seconds 200 msec

Ended Job = job_1499153664137_0071

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 1 Cumulative CPU: 50.2 sec HDFS Read: 95047649 HDFS Write: 323 SUCCESS

Total MapReduce CPU Time Spent: 50 seconds 200 msec

OK

P4c-EViSRsw ERNESTINEbrowning 1240 0

woMdGKHIg3o Maxwell739 1240 0

_jVH58-X4C4 nelenajolly 1240 0

k1iTl0Kh4DQ rachellelala 1240 0

8LdPZ_n1S4c GohanxVidel21 1240 0

PSex2TAkQC8 Qingy3 1254 0

YC20zP9p_wI aimeenmegan 1254 0

3XduLiQMMTM marshallgovindan 1240 0

IjeXG6yXXZ4 SenateurDupont1973 1254 0

sw4XgF1zkXE bablooian 1240 0

Time taken: 109.776 seconds, Fetched: 10 row(s)

Example 2:

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube1 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710090404_46a79a3d-8863-4390-8898-7f82c5a3b7ab

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 5

Starting Job = job_1499153664137_0072, Tracking URL = http://master:8088/proxy/application_1499153664137_0072/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0072

Hadoop job information for Stage-1: number of mappers: 6; number of reducers: 5

2017-07-10 09:05:04,067 Stage-1 map = 0%, reduce = 0%

2017-07-10 09:05:40,498 Stage-1 map = 6%, reduce = 0%, Cumulative CPU 5.9 sec

...

2017-07-10 09:06:09,681 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 39.56 sec

MapReduce Total cumulative CPU time: 39 seconds 560 msec

Ended Job = job_1499153664137_0072

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0073, Tracking URL = http://master:8088/proxy/application_1499153664137_0073/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0073

Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

2017-07-10 09:06:45,360 Stage-2 map = 0%, reduce = 0%

...

2017-07-10 09:07:42,083 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 3.62 sec

MapReduce Total cumulative CPU time: 3 seconds 620 msec

Ended Job = job_1499153664137_0073

MapReduce Jobs Launched:

Stage-Stage-1: Map: 6 Reduce: 5 Cumulative CPU: 39.56 sec HDFS Read: 1086732303 HDFS Write: 1188 SUCCESS

Stage-Stage-2: Map: 2 Reduce: 1 Cumulative CPU: 3.62 sec HDFS Read: 8218 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 43 seconds 180 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

Blogs 447581

People 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Howto 124885

Style 124885

Pets 86444

Animals 86444

Travel 82068

Events 82068

Education 54133

Technology 50925

Science 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 201.127 seconds, Fetched: 25 row(s)

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710090909_bfddc50b-665d-4296-9475-0cee55058c85

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 3

Starting Job = job_1499153664137_0074, Tracking URL = http://master:8088/proxy/application_1499153664137_0074/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0074

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 09:09:46,075 Stage-1 map = 0%, reduce = 0%

...

2017-07-10 09:10:39,596 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 20.87 sec

MapReduce Total cumulative CPU time: 20 seconds 870 msec

Ended Job = job_1499153664137_0074

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0075, Tracking URL = http://master:8088/proxy/application_1499153664137_0075/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0075

Hadoop job information for Stage-2: number of mappers: 3; number of reducers: 1

2017-07-10 09:11:16,172 Stage-2 map = 0%, reduce = 0%

...

2017-07-10 09:12:01,694 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 4.43 sec

MapReduce Total cumulative CPU time: 4 seconds 430 msec

Ended Job = job_1499153664137_0075

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 20.87 sec HDFS Read: 47331548 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 3 Reduce: 1 Cumulative CPU: 4.43 sec HDFS Read: 9228 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 25 seconds 300 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Pets 86444

Animals 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

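Time taken: 177.463 seconds, Fetched: 25 row(s)

The comparison here is based on CPU time. Job initialization and similar overheads vary from run to run and would distort the comparison, so they are ignored for now.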

| Statement | youtube1 (TextFile) CPU time | youtube3 (ORC) CPU time |
|---|---|---|
| select videoId,uploader,age,views from youtube1/youtube3 order by views limit 10; | Total MapReduce CPU Time Spent: 1 minutes 2 seconds 700 msec (62.7 s) | Total MapReduce CPU Time Spent: 50 seconds 200 msec (50.2 s) |
| select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube1/youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc; | Total MapReduce CPU Time Spent: 43 seconds 180 msec (43.18 s) | Total MapReduce CPU Time Spent: 25 seconds 300 msec (25.3 s) |

…

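The table shows that, measured by CPU time, queries on the ORC table cost roughly 20-40% less than on the TextFile table: about 20% less for the first query and about 41% less for the second. The elapsed time improves by less (119.7 s to 109.8 s and 201.1 s to 177.5 s), because job startup takes the same time in both cases.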
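The 20-40% range can be checked with a few lines of arithmetic. The sketch below only re-computes the reduction from the "Total MapReduce CPU Time Spent" values logged above; the dictionary labels and the function name are made up for illustration.

```python
# CPU seconds copied from the "Total MapReduce CPU Time Spent" lines above.
cpu_seconds = {
    "order by views limit 10": {"textfile": 62.7, "orc": 50.2},
    "explode + group by + order by": {"textfile": 43.18, "orc": 25.3},
}


def cpu_reduction_percent(textfile_s: float, orc_s: float) -> float:
    """Return how much less CPU time the ORC run used, in percent of the TextFile run."""
    return (textfile_s - orc_s) / textfile_s * 100.0


reductions = {
    query: round(cpu_reduction_percent(t["textfile"], t["orc"]), 1)
    for query, t in cpu_seconds.items()
}

for query, pct in reductions.items():
    print(f"{query}: {pct}% less CPU time with ORC")
```

Two queries on one data set are a small sample, so the range is best read as an indication rather than a guarantee.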

2 order by vs. distribute by & sort by

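When the data volume is large, that is, when a single job runs for a long time, it pays to compute a local top N with distribute by and sort by first and only then apply order by to the much smaller intermediate result. If your job finishes within a few minutes anyway, this is not recommended: the distribute by / sort by variant needs three jobs, MapReduce job startup is slow, and that unnecessary overhead makes the query take longer than a plain order by.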
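The idea of a local top N followed by a global top N can be modelled outside Hive. The following is a minimal sketch, not Hive's execution plan: the function names, the `num_reducers` parameter and the generated rows are all hypothetical, and the view counts are made distinct so that both approaches must return exactly the same rows.

```python
import heapq
import random


def global_top_n(rows, n, key):
    """Model of a plain ORDER BY ... LIMIT n: one reducer sorts every row."""
    return sorted(rows, key=key)[:n]


def two_stage_top_n(rows, n, key, num_reducers=2):
    """Model of DISTRIBUTE BY + SORT BY + LIMIT n followed by ORDER BY + LIMIT n."""
    # DISTRIBUTE BY: route each row to a reducer by hashing the sort column.
    partitions = [[] for _ in range(num_reducers)]
    for row in rows:
        partitions[hash(key(row)) % num_reducers].append(row)

    # SORT BY ... LIMIT n: every reducer keeps only its own n smallest rows.
    candidates = []
    for partition in partitions:
        candidates.extend(heapq.nsmallest(n, partition, key=key))

    # The final ORDER BY ... LIMIT n sorts at most num_reducers * n rows.
    return heapq.nsmallest(n, candidates, key=key)


def by_views(row):
    """Sort key: the view count stored in the second field."""
    return row[1]


# Hypothetical data: 5000 videos with distinct view counts.
view_counts = random.Random(0).sample(range(1_000_000), 5000)
rows = [(f"video{i}", views) for i, views in enumerate(view_counts)]

top_global = global_top_n(rows, 10, by_views)
top_two_stage = two_stage_top_n(rows, 10, by_views, num_reducers=4)
print(top_global == top_two_stage)
```

When many rows tie on the sort column, as with the many videos that have 0 views in this data set, the two approaches may return different but equally valid rows.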

2.1 Using order by

hive> select * from youtube3 order by views limit 10;

Query ID = hadoop_20170710103535_4de243fb-f26b-42ab-8cc9-aef568b4a5cb

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0094, Tracking URL = http://master:8088/proxy/application_1499153664137_0094/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0094

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 1

2017-07-10 10:35:55,171 Stage-1 map = 0%, reduce = 0%

2017-07-10 10:36:33,748 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 24.59 sec

...

2017-07-10 10:37:45,584 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 114.3 sec

MapReduce Total cumulative CPU time: 1 minutes 54 seconds 300 msec

Ended Job = job_1499153664137_0094

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 1 Cumulative CPU: 114.3 sec HDFS Read: 574632526 HDFS Write: 2016 SUCCESS

Total MapReduce CPU Time Spent: 1 minutes 54 seconds 300 msec

OK

P4c-EViSRsw ERNESTINEbrowning 1240 ["Entertainment"] 66 0 0.0 0 0 ["b0b7yT9e7hM","UEq74FEphvc","PjQ2y_af08Y","myj6exBy5IQ","vKMljsGZluc","l4FzM1qImdg","mXw1UE2mOuk","gH_K5iqSdgA","InHt3_nkT7s","h3gMHd0XKnE","fXRt4ua3UsQ","T6-5K58vhl4","P1iwvBnOoHg","QAg12t6UvcQ","jy7zqy_Dzic","emUoDRc98ig","g66xEcC9kWY","AbYjCb1jHP8","RF0aRkdtiAM","BURRJkwFkqc"]

woMdGKHIg3o Maxwell739 1240 ["Pets","Animals"] 103 0 0.0 0 0 ["hSSqMOL6ThE","c2k9YCkcoaU","9idmedIj5pk","AUokp8o5aZc","Vhc9bGFKhds","LNQI20dunF0","BOlo7q7MSZA","9cGYnRGqgyM","_oO1WPlJUxc","XMqP8E6kfdY","qnR6BnVByVA","GkGd4RyWMYU","VHt8IZSDU1o","Z83w2lIJZVM","jmnugMwoP1Q","llfOxhnlrJU","JXUmvz4cIRA","Q8nHWUG7aAA","dqQOVI6hDCI","m80Qz8nwmHI"]

_jVH58-X4C4 nelenajolly 1240 ["Entertainment"] 205 0 0.0 0 0 ["VkNejMz7FeI","3AfW6V5EAWY","y8i8U9Ni6Dc","_I3Aeh_HHEg","sZBoBpxnoY8","fheFnYcUxLs","MzFWXWkmvz8","OQDRuqWsnp8","qGW43kf2QEU","u79DsR40LOA","X4TtK-3hryw","ANL8q16edOE","OMLKjvcs5EY","qohfnkV8J3Y","jLEFgyU6TdI","cEmyMSvPvVg","-1B8IkZkGOs","STUi3-cV4RQ","-IU8xCjTNW8","-OfnDq4HLPw"]

k1iTl0Kh4DQ rachellelala 1240 ["People","Blogs"] 48 0 0.0 0 0 []

8LdPZ_n1S4c GohanxVidel21 1240 ["Entertainment"] 271 0 0.0 0 0 ["Dt-0QZX5MII","K34GSodojaM","g6hYT815-9I","r5aYMYBEyPo","Bp_JAed6uTc","qe3PF-KyjCI","nWvwSYIUMpE","LxKS1beypxo","5ZmqPCjpmik","m_wYIbkkizc","9xDjgav1pkk","w5WJKrLR78M","nxj_3D3lIQ0","VSEV1IXg2hQ","gwEJPELAJiM","qDrBEST48_I","LymqYIU3E4U","2bhoPxDzU3w","nr_-AyOyfqk","Cg4qrZG1uVc"]

PSex2TAkQC8 Qingy3 1254 ["Entertainment"] 300 0 0.0 0 0 ["FOs2-r_Fikg","I2TrAyBGGcY","iHws_tdiK64","Yfr_wFmWHTI","FK-Z56YqTsU","Rmfy5-dPl6E","nsfOPbS2bSk","tz59v4WgXhA","ggPoCRixQvQ","hvtWJxJn_GI","qKD6VMiCSlI","O9pFAKOON7o","MZta_rn6o48","y2JV5Hs4WYg","kzP6HA-8MmA","60AR_R_UklQ","tNDVwd00Mow","N0lmZqXKJUw","dYxKJNYpjSA","EWBW5QuAedY"]

YC20zP9p_wI aimeenmegan 1254 ["Film","Animation"] 138 0 0.0 0 0 ["AuuCpfU1aNA","pfeU2WJPlnc","tdxYPHX7mhs","Vig624KxPPQ","mFWhAjQdjyI","7rbpfmqKfKI","Of8uv_etw1w","iuzYVo8L7KU","M_g220ZmoRo","oJ2YCvmg24M","PmxQP2h6Qvg","1u4HgPlGqrg","mGdoxToj5IA","hAnjWHP7KAc","rK66jzIvynM","RgK4BaBnIBU","B9Yp1lD4Ohg","vFRHyRmZ8c0","ZFoswQiXhwU","ptKLZlhz2SI"]

3XduLiQMMTM marshallgovindan 1240 ["Education"] 545 0 0.0 0 0 []

IjeXG6yXXZ4 SenateurDupont1973 1254 ["Howto","Style"] 467 0 0.0 0 0 []

sw4XgF1zkXE bablooian 1240 ["People","Blogs"] 47 0 0.0 0 0 []

Time taken: 153.26 seconds, Fetched: 10 row(s)

2.2 Using distribute by and sort by

hive> select a.* from (select * from youtube3 distribute by views sort by views limit 10) a order by views limit 10;

Query ID = hadoop_20170710104040_52c1a6e7-0dc7-4359-82d0-e566c536947a

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks not specified. Defaulting to jobconf value of: 2

Starting Job = job_1499153664137_0095, Tracking URL = http://master:8088/proxy/application_1499153664137_0095/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0095

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 2

2017-07-10 10:41:35,382 Stage-1 map = 0%, reduce = 0%

...

2017-07-10 10:44:04,783 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 116.58 sec

MapReduce Total cumulative CPU time: 1 minutes 56 seconds 580 msec

Ended Job = job_1499153664137_0095

Launching Job 2 out of 3

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0096, Tracking URL = http://master:8088/proxy/application_1499153664137_0096/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0096

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2017-07-10 10:44:39,421 Stage-2 map = 0%, reduce = 0%

2017-07-10 10:45:08,358 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.12 sec

2017-07-10 10:45:28,180 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.68 sec

MapReduce Total cumulative CPU time: 2 seconds 680 msec

Ended Job = job_1499153664137_0096

Launching Job 3 out of 3

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0097, Tracking URL = http://master:8088/proxy/application_1499153664137_0097/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0097

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 1

2017-07-10 10:46:05,072 Stage-3 map = 0%, reduce = 0%

2017-07-10 10:46:33,177 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.07 sec

2017-07-10 10:47:00,963 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 2.4 sec

MapReduce Total cumulative CPU time: 2 seconds 400 msec

Ended Job = job_1499153664137_0097

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 2 Cumulative CPU: 116.58 sec HDFS Read: 574635698 HDFS Write: 5128 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.68 sec HDFS Read: 11354 HDFS Write: 2314 SUCCESS

Stage-Stage-3: Map: 1 Reduce: 1 Cumulative CPU: 2.4 sec HDFS Read: 9143 HDFS Write: 1965 SUCCESS

Total MapReduce CPU Time Spent: 2 minutes 1 seconds 660 msec

OK

dTPF85D4tEs Antoinnette71 1240 ["Entertainment"] 73 0 0.0 0 0 []

5e_flEf6uoU alexandreetmartin 1254 ["Comedy"] 137 0 0.0 0 0 ["e8F9txsHzQI","nibCFP4OB74","tH-7yll1t24","T5K17x93aMM","KxSCMsBipAM","JYEsGC71bmo","PAgQcF7eOt0","-jpkLH4RQ7I","UsY-Iu8QsqA","ru48hMHNZ08","D4aHmwmEBkw","vVYTYnT8zXE","wBcaCI0UMAE","qs1Queu8tGE","zsLRrBHIlZc","8lmWTQZtOsY","ztunItmPYzM","O8KUVYl8_wI","yNYTg9aZ1FY","MPF3JwAgNTY"]

I3bMdoqH2g4 lapino50 1254 ["Comedy"] 131 0 0.0 0 0 []

AvdoZL0U0Ko kristianbreen 1240 ["Comedy"] 121 0 0.0 0 0 []

9SAe80p-U48 BobRucklepuckle 1240 ["Gaming"] 170 0 0.0 0 0 ["z04IyYFXfP0","jcaDekCI408","bV3XZe0Az34","O7aYaJ8PtCs","I07LmzF46a8","zEl2frWgqdU","zBA-h4uM3cY","0t_mN96jRb8","bahgug-PFPs","VGDOZiVRUtg","OaXE6pMEsZc","A2Is6NIEuso","hNtqpVP5st4","TxZ2lzPbuDA","UFSc3rrAYLQ","58DEKFeWh4I","YDEQIbcDO1M","o9dyNqactV8","Usb-fZm56GM","XvV1yvSPJ_s"]

P2cQ-W9J99A 636141 1254 ["Comedy"] 77 0 0.0 0 0 ["74sW9OHvkCg","onKIV_-f6ew","XtHYdkH1flg","-SM5P5lz86A","zpz3HFBQR2o","U9lOI7n8sB4","w99Q5PLtjjE","5N6Cchi9Gtw","p9Bn2NSfzHw","DR2IaxcAAUU","BiGZd4BPlqw","0GMzzp_nJbw","e2KVuzAcsv0","jEz_k247tws","OEt50_BdIpQ","MV95TjaXkX8","c8lyUi8QKWs","P1OXAQHv09E","gtp8fgYhp50","L5-0k1HHh_k"]

JobgU5-dFy4 singindancinfool 1254 ["Entertainment"] 184 0 0.0 0 0 ["7MFG_yaFlvk","c--loq6HsDQ","CyrZR3BSH5o","Wni8rmr7rzs","XvFfRpQS4GU","ENSiblZIMV0","0enG7lzuaqM","c8y-zzaI4g8","Czfk0W888V0","dc1ls-qQrYg","3ATYGqE6Hww","U9DPjKzumYw","esEiXSOjs2c","WRCpHFSYXdA","32YyZMxFrBA","Abg7uBdwGJM","BqELlVTMv8o","2tbj33kpBig","LronHxxGti8","NtSl2jdPezI"]

YC20zP9p_wI aimeenmegan 1254 ["Film","Animation"] 138 0 0.0 0 0 ["AuuCpfU1aNA","pfeU2WJPlnc","tdxYPHX7mhs","Vig624KxPPQ","mFWhAjQdjyI","7rbpfmqKfKI","Of8uv_etw1w","iuzYVo8L7KU","M_g220ZmoRo","oJ2YCvmg24M","PmxQP2h6Qvg","1u4HgPlGqrg","mGdoxToj5IA","hAnjWHP7KAc","rK66jzIvynM","RgK4BaBnIBU","B9Yp1lD4Ohg","vFRHyRmZ8c0","ZFoswQiXhwU","ptKLZlhz2SI"]

vBGEkIG8Zdk hachem44 1254 ["Entertainment"] 122 0 0.0 0 0 []

P4eiE33eqOU BsmStudio 1254 ["Comedy"] 287 0 0.0 0 0 ["1_ao3c5jicU","RRMAZj-msOc","X04hwJyaO_4","4oee8Zn3hHI","60W-uUYnj3w","FtoJoBp03r4","_IMSMYLM0zs","22Sz120MAT8","F-B35V6Sszk","1AExOXwL0Ag","owRgISGt_iw","Wz0ZfHbPC-c","b7uLUY-FdKM","yevHaMC10IE","jGvBLJfEIcs","Ma4Ozief4k8","ZwnLErETvKU","oE4oeyRMcKI","IQnEUxtk-Pw"]

Time taken: 368.489 seconds, Fetched: 10 row(s)

| Approach | Statement | CPU time and elapsed time |
|---|---|---|
| order by only | select * from youtube3 order by views limit 10; | Total MapReduce CPU Time Spent: 1 minutes 54 seconds 300 msec (114.3 s); Time taken: 153.26 seconds, Fetched: 10 row(s) |
| distribute by and sort by first, then order by | select a.* from (select * from youtube3 distribute by views sort by views limit 10) a order by views limit 10; | Total MapReduce CPU Time Spent: 2 minutes 1 seconds 660 msec (121.66 s); Time taken: 368.489 seconds, Fetched: 10 row(s) |

…

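As the table shows, using distribute by and sort by to optimize order by only pays off in the right scenario, namely large data volumes; it is not something to apply blindly. Otherwise the time spent launching two extra jobs alone is more than enough to hurt: here the elapsed time rose from about 153 s to about 368 s. For small data, just use order by.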

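3 Handling data skew in group by

- hive.map.aggr = true; map-side partial aggregation, comparable to a combiner

- hive.groupby.skewindata = true; runs the aggregation as two jobs. As with putting sort by and distribute by in front of order by, it is not worth enabling when the data volume is small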
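Why two jobs help with a skewed key can be shown with a small simulation. This is a sketch of the general two-stage aggregation idea (random distribution first, regrouping by key second), not Hive's implementation; the function name, the seed and the category counts are made up for illustration.

```python
import random
from collections import Counter


def two_stage_count(keys, num_reducers, seed=0):
    """Count rows per key in two stages so that no reducer receives a whole hot key."""
    rng = random.Random(seed)

    # Job 1: rows are spread over the reducers at random, so even a very
    # frequent key is split into several partial counts.
    partials = [Counter() for _ in range(num_reducers)]
    for key in keys:
        partials[rng.randrange(num_reducers)][key] += 1

    # Job 2: the (small) partial results are regrouped by key and summed.
    totals = Counter()
    for partial in partials:
        totals.update(partial)
    return partials, totals


# Hypothetical skewed input: one category dominates.
keys = ["Entertainment"] * 9000 + ["Music"] * 900 + ["Gaming"] * 100
partials, totals = two_stage_count(keys, num_reducers=4)

busiest = max(sum(p.values()) for p in partials)
print(f"rows on the busiest job-1 reducer: {busiest} of {len(keys)}")
print(totals.most_common(3))
```

With a plain group by, one reducer would receive all 9000 rows of the dominant key; in the two-stage version each first-stage reducer sees roughly a quarter of the input, at the price of a second job.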

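4 Join optimization

- map join: when the small table is small enough, Hive switches to a map join automatically, which can improve performance by about 30%

  - hive.auto.convert.join = true; lets Hive decide automatically whether to use a map join

  - hive.auto.convert.join.noconditionaltask = true; decides whether to use a map join based on the size of the small table

  - hive.auto.convert.join.noconditionaltask.size = 10000000; (10 MB) a map join is used when the small table is smaller than this value; only takes effect when the previous parameter is true

- bucket map join

  - hive.optimize.bucketmapjoin=true; applies when both tables are bucketed and the small table's bucket count is a multiple of the large table's; reduces the amount of data that has to be scanned

- filter the data before joining

- if the on conditions of a multi-table join are identical, writing the joins in one statement lets Hive turn them into a single job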
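A map join is essentially a hash join: the small table is loaded into an in-memory hash table and the large table is streamed past it, so no shuffle or reduce phase is needed. The sketch below illustrates that idea only; `map_join` and the row dictionaries are hypothetical, with a few sample values borrowed from the join output later in this article.

```python
def map_join(small_table, big_table, key):
    """Hash join: build a lookup table from the small side, stream the big side."""
    # Build phase: load the small table into an in-memory hash table.
    hashtable = {}
    for row in small_table:
        hashtable.setdefault(row[key], []).append(row)

    # Probe phase: each row of the big table is matched with a dictionary
    # lookup, so the rows never have to be shuffled to a reducer.
    joined = []
    for row in big_table:
        for match in hashtable.get(row[key], []):
            joined.append({**match, **row})
    return joined


users = [
    {"uploader": "a0000a", "friends": 7},
    {"uploader": "a042538", "friends": 14},
]
videos = [
    {"uploader": "a0000a", "videoId": "6NmdrPmWjSU"},
    {"uploader": "a042538", "videoId": "YHu_VlY_p_E"},
    {"uploader": "a042538", "videoId": "8ECefR4g0Tg"},
    {"uploader": "nobody", "videoId": "hypothetical"},  # no matching user
]

result = map_join(users, videos, "uploader")
for row in result:
    print(row["uploader"], row["friends"], row["videoId"])
```

The hash table has to fit in memory, which is why the size threshold above matters.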

4.1 Comparing MapJoin enabled and disabled

4.1.1 MapJoin disabled

hive> set hive.auto.convert.join = false;

hive> set hive.auto.convert.join.noconditionaltask = false;

hive> set hive.optimize.bucketmapjoin=false;

Table structure:

hive> desc formatted user;

# col_name data_type comment

uploader string

videos int

friends int

# Detailed Table Information

Database: default

Owner: hadoop

CreateTime: Fri Jul 07 13:46:53 CST 2017

LastAccessTime: UNKNOWN

Protect Mode: None

Retention: 0

Location: hdfs://cluster1/user/hive/warehouse/user

Table Type: MANAGED_TABLE

Table Parameters:

COLUMN_STATS_ACCURATE true

numFiles 1

numRows 1192676

rawDataSize 121652944

totalSize 9783258

transient_lastDdlTime 1499406528

# Storage Information

SerDe Library: org.apache.hadoop.hive.ql.io.orc.OrcSerde

InputFormat: org.apache.hadoop.hive.ql.io.orc.OrcInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat

Compressed: No

Num Buckets: 24

Bucket Columns: [uploader]

Sort Columns: []

Storage Desc Params:

field.delim \t

serialization.format \t

Time taken: 0.087 seconds, Fetched: 34 row(s)

hive> desc formatted youtube3;

# col_name data_type comment

videoid string

uploader string

age int

category array<string>

length int

views int

rate float

ratings int

comments int

relatedid array<string>

# Detailed Table Information

Database: default

Owner: hadoop

CreateTime: Fri Jul 07 13:28:56 CST 2017

LastAccessTime: UNKNOWN

Protect Mode: None

Retention: 0

Location: hdfs://cluster1/user/hive/warehouse/youtube3

Table Type: MANAGED_TABLE

Table Parameters:

COLUMN_STATS_ACCURATE true

numFiles 6

numRows 5134636

rawDataSize 7949154631

totalSize 574644356

transient_lastDdlTime 1499405660

# Storage Information

SerDe Library: org.apache.hadoop.hive.ql.io.orc.OrcSerde

InputFormat: org.apache.hadoop.hive.ql.io.orc.OrcInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat

Compressed: No

Num Buckets: 8

Bucket Columns: [uploader]

Sort Columns: []

Storage Desc Params:

colelction.delim &

field.delim \t

serialization.format \t

Time taken: 0.086 seconds, Fetched: 42 row(s)

Test run:

hive> select a.uploader,a.friends,b.videoId from user a join youtube3 b on a.uploader = b.uploader limit 10;

Query ID = hadoop_20170710092626_a2f303f3-8ee5-4747-a6c0-96ccb5d2f0d7

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 3

Starting Job = job_1499153664137_0077, Tracking URL = http://master:8088/proxy/application_1499153664137_0077/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0077

Hadoop job information for Stage-1: number of mappers: 5; number of reducers: 3

2017-07-10 09:26:41,012 Stage-1 map = 0%, reduce = 0%

...

2017-07-10 09:27:49,553 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 66.63 sec

MapReduce Total cumulative CPU time: 1 minutes 6 seconds 630 msec

Ended Job = job_1499153664137_0077

MapReduce Jobs Launched:

Stage-Stage-1: Map: 5 Reduce: 3 Cumulative CPU: 66.63 sec HDFS Read: 86211567 HDFS Write: 698 SUCCESS

Total MapReduce CPU Time Spent: 1 minutes 6 seconds 630 msec

OK

a0000a 7 6NmdrPmWjSU

a00393977 0 _EEwnI7pCCk

a007plan 0 VlDabTbGLNc

a02030203 0 AaY3pbdfbUM

a02030203 0 P28i2Xu4WB8

a02030203 0 LFzKFOWOHBg

a042538 14 YHu_VlY_p_E

a042538 14 8ECefR4g0Tg

a04297 0 2rqgJ1FNflo

a04297 0 iT_yac8DcQk

Time taken: 109.44 seconds, Fetched: 10 row(s)

4.1.2 Automatic MapJoin optimization enabled

hive> set hive.auto.convert.join = true;

hive> set hive.auto.convert.join.noconditionaltask = true;

hive> select a.uploader,a.friends,b.videoId from user a join youtube3 b on a.uploader = b.uploader limit 10;

Query ID = hadoop_20170710093232_6b9bf92d-5d02-4ce6-bb35-a58625a8f03b

Total jobs = 1

17/07/10 09:32:35 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Execution log at: /tmp/hadoop/hadoop_20170710093232_6b9bf92d-5d02-4ce6-bb35-a58625a8f03b.log

2017-07-10 09:32:37 Starting to launch local task to process map join; maximum memory = 518979584

2017-07-10 09:32:50 Processing rows: 200000 Hashtable size: 199999 Memory usage: 76725768 percentage: 0.148

...

2017-07-10 09:32:56 Processing rows: 1100000 Hashtable size: 1099999 Memory usage: 316646056 percentage: 0.61

2017-07-10 09:32:56 Dump the side-table for tag: 0 with group count: 1192676 into file: file:/tmp/hadoop/81308db8-add0-47bf-9a70-ae8e83f4f05c/hive_2017-07-10_09-32-30_881_1416539360570079360-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile160--.hashtable

2017-07-10 09:32:59 Uploaded 1 File to: file:/tmp/hadoop/81308db8-add0-47bf-9a70-ae8e83f4f05c/hive_2017-07-10_09-32-30_881_1416539360570079360-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile160--.hashtable (36232906 bytes)

2017-07-10 09:32:59 End of local task; Time Taken: 21.563 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1499153664137_0079, Tracking URL = http://master:8088/proxy/application_1499153664137_0079/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0079

Hadoop job information for Stage-3: number of mappers: 4; number of reducers: 0

2017-07-10 09:33:42,458 Stage-3 map = 0%, reduce = 0%

2017-07-10 09:34:16,719 Stage-3 map = 50%, reduce = 0%, Cumulative CPU 13.33 sec

2017-07-10 09:34:27,090 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 27.05 sec

MapReduce Total cumulative CPU time: 27 seconds 50 msec

Ended Job = job_1499153664137_0079

MapReduce Jobs Launched:

Stage-Stage-3: Map: 4 Cumulative CPU: 27.05 sec HDFS Read: 20408350 HDFS Write: 1094 SUCCESS

Total MapReduce CPU Time Spent: 27 seconds 50 msec

OK

maxmagpies 22 9UagGiEP_kU

lilsteps97 2 hKE3F0cLl_M

cokerish 6 mZpSi9Sfs2k

mikecrazy03 17 a-nkbgF3Wcw

xoPaiigexo 21 CmCl_hSXM80

r4nd0mUs3r 133 2Yke3hdSeJQ

popkorn615 39 sc88PbADsk8

popkorn615 39 bGNzyCCK2Uo

tuxkiller007 0 s1n2y3c2z9k

popkorn615 39 norcLxmpS7o

Time taken: 118.299 seconds, Fetched: 10 row(s)

4.1.3 BucketMapJoin optimization enabled

hive> set hive.optimize.bucketmapjoin=true;

hive> select a.uploader,a.friends,b.videoId from user a join youtube3 b on a.uploader = b.uploader limit 10;

Query ID = hadoop_20170710093535_dddfa9db-cae4-4a9d-bbe0-0dba73ee1cb5

Total jobs = 1

17/07/10 09:36:02 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Execution log at: /tmp/hadoop/hadoop_20170710093535_dddfa9db-cae4-4a9d-bbe0-0dba73ee1cb5.log

2017-07-10 09:36:05 Starting to launch local task to process map join; maximum memory = 518979584

2017-07-10 09:36:18 Processing rows: 200000 Hashtable size: 199999 Memory usage: 76897640 percentage: 0.148

...

2017-07-10 09:36:23 Processing rows: 1100000 Hashtable size: 1099999 Memory usage: 315574944 percentage: 0.608

2017-07-10 09:36:23 Dump the side-table for tag: 0 with group count: 1192676 into file: file:/tmp/hadoop/81308db8-add0-47bf-9a70-ae8e83f4f05c/hive_2017-07-10_09-35-58_131_4163936080161520499-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile170--.hashtable

2017-07-10 09:36:26 Uploaded 1 File to: file:/tmp/hadoop/81308db8-add0-47bf-9a70-ae8e83f4f05c/hive_2017-07-10_09-35-58_131_4163936080161520499-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile170--.hashtable (36232906 bytes)

2017-07-10 09:36:26 End of local task; Time Taken: 21.374 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1499153664137_0080, Tracking URL = http://master:8088/proxy/application_1499153664137_0080/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0080

Hadoop job information for Stage-3: number of mappers: 4; number of reducers: 0

2017-07-10 09:37:09,550 Stage-3 map = 0%, reduce = 0%

2017-07-10 09:37:43,845 Stage-3 map = 25%, reduce = 0%, Cumulative CPU 12.14 sec

2017-07-10 09:37:44,884 Stage-3 map = 50%, reduce = 0%, Cumulative CPU 12.58 sec

2017-07-10 09:37:54,187 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 25.41 sec

MapReduce Total cumulative CPU time: 25 seconds 410 msec

Ended Job = job_1499153664137_0080

MapReduce Jobs Launched:

Stage-Stage-3: Map: 4 Cumulative CPU: 25.41 sec HDFS Read: 20408350 HDFS Write: 1094 SUCCESS

Total MapReduce CPU Time Spent: 25 seconds 410 msec

OK

maxmagpies 22 9UagGiEP_kU

lilsteps97 2 hKE3F0cLl_M

cokerish 6 mZpSi9Sfs2k

mikecrazy03 17 a-nkbgF3Wcw

xoPaiigexo 21 CmCl_hSXM80

r4nd0mUs3r 133 2Yke3hdSeJQ

popkorn615 39 sc88PbADsk8

popkorn615 39 bGNzyCCK2Uo

tuxkiller007 0 s1n2y3c2z9k

popkorn615 39 norcLxmpS7o

Time taken: 117.119 seconds, Fetched: 10 row(s)

4.1.4 Summary

Statement used: select a.uploader,a.friends,b.videoId from user a join youtube3 b on a.uploader = b.uploader limit 10;

| Setting | Enabled | Disabled |
|---|---|---|
| map join | (the data is loaded into a cache) End of local task; Time Taken: 21.563 sec. Total MapReduce CPU Time Spent: 27 seconds 50 msec. Total: 21.563 + 27.05 ≈ 48.6 s | Total MapReduce CPU Time Spent: 1 minutes 6 seconds 630 msec. Total: 66.63 s |
| bucket map join | End of local task; Time Taken: 21.374 sec. Total MapReduce CPU Time Spent: 25 seconds 410 msec. Total: 21.374 + 25.41 ≈ 46.8 s | End of local task; Time Taken: 21.563 sec. Total MapReduce CPU Time Spent: 27 seconds 50 msec. Total: 21.563 + 27.05 ≈ 48.6 s |

…

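The figures above show that map join does reduce CPU time, while bucket map join brings little further improvement over map join, probably because the data set is too small. Map join is faster than an ordinary reduce-side join, and the advantage should become more visible as the data volume grows. With small data, however, the extra work of reading the small table and uploading the hash table file blurs the advantage and can even make the total elapsed time longer, as it did here (109.44 s without map join versus 118.299 s with it).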

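5 Parameter settings: changing the number of maps and reduces

5.1 Setting the number of maps

5.1.1 Too many small files lead to too many map tasks, and repeatedly creating and tearing down tasks is wasted overhead

- set hive.input.format = org.apache.hadoop.hive.ql.io.CombineHiveInputFormat combines small files into larger splits

- set mapred.max.split.size = 128000000 caps the size of a split

- set hive.merge.mapfiles = true; merges the output files at the end of a map-only job

- set hive.merge.mapredfiles = true; merges the output files at the end of a MapReduce job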
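The effect of combining small files on the number of map tasks can be estimated with a simple packing model. This is a deliberately simplified sketch: the real input format also takes data locality into account and splits files that are larger than the limit, both of which are ignored here. The function name and the file sizes are hypothetical; 128000000 is the split size from the list above.

```python
def combine_into_splits(file_sizes, max_split_size=128_000_000):
    """Greedily pack files into splits no larger than max_split_size bytes."""
    splits, current, current_size = [], [], 0
    for size in file_sizes:
        # Close the current split when the next file would overflow it.
        if current and current_size + size > max_split_size:
            splits.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        splits.append(current)
    return splits


# Hypothetical input: 1000 files of 1,000,000 bytes each.
small_files = [1_000_000] * 1000
splits = combine_into_splits(small_files)

print(f"map tasks without combining: {len(small_files)}")
print(f"map tasks with combining:    {len(splits)}")
```

In this model one map task per file becomes one map task per split, so the task count drops from the number of files to roughly total size divided by the split size.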

5.1.2 If there are too few map tasks, set the number of map tasks (map.tasks)

5.1.3 Comparing runs with and without file merging after map and MapReduce jobs

1) Settings with merging disabled

hive> set hive.merge.mapfiles=false;

hive> set hive.merge.mapredfiles = false;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710095252_9c97d548-c734-4df3-8d78-541c7e4ec66f

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 3

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0082, Tracking URL = http://master:8088/proxy/application_1499153664137_0082/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0082

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 09:53:26,240 Stage-1 map = 0%, reduce = 0%

2017-07-10 09:53:54,547 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 2.05 sec

...

2017-07-10 09:54:22,238 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 21.08 sec

MapReduce Total cumulative CPU time: 21 seconds 80 msec

Ended Job = job_1499153664137_0082

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0083, Tracking URL = http://master:8088/proxy/application_1499153664137_0083/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0083

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2017-07-10 09:54:59,413 Stage-2 map = 0%, reduce = 0%

...

2017-07-10 09:55:56,728 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.43 sec

MapReduce Total cumulative CPU time: 2 seconds 430 msec

Ended Job = job_1499153664137_0083

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 21.08 sec HDFS Read: 47331544 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.43 sec HDFS Read: 5826 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 23 seconds 510 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Animals 86444

Pets 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 196.576 seconds, Fetched: 25 row(s)

2) Settings with merging enabled

hive> set hive.merge.mapfiles=true;

hive> set hive.merge.mapredfiles=true;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710090909_bfddc50b-665d-4296-9475-0cee55058c85

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 3

Starting Job = job_1499153664137_0074, Tracking URL = http://master:8088/proxy/application_1499153664137_0074/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0074

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 09:09:46,075 Stage-1 map = 0%, reduce = 0%

2017-07-10 09:10:15,149 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 1.99 sec

...

2017-07-10 09:10:39,596 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 20.87 sec

MapReduce Total cumulative CPU time: 20 seconds 870 msec

Ended Job = job_1499153664137_0074

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

Starting Job = job_1499153664137_0075, Tracking URL = http://master:8088/proxy/application_1499153664137_0075/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0075

Hadoop job information for Stage-2: number of mappers: 3; number of reducers: 1

2017-07-10 09:11:16,172 Stage-2 map = 0%, reduce = 0%

...

2017-07-10 09:12:01,694 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 4.43 sec

MapReduce Total cumulative CPU time: 4 seconds 430 msec

Ended Job = job_1499153664137_0075

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 20.87 sec HDFS Read: 47331548 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 3 Reduce: 1 Cumulative CPU: 4.43 sec HDFS Read: 9228 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 25 seconds 300 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Pets 86444

Animals 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 177.463 seconds, Fetched: 25 row(s)

5.2 Configuring the number of reducers

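Too many reducers and too few reducers are both bad: too many produce many output files, so the downstream jobs end up with too many map tasks, while too few leave cluster resources unused.

The number of reducers is determined by these three parameters:

- set hive.exec.reducers.bytes.per.reducer=1024000000; the amount of input data per reducer. More than one reducer is used only when the input exceeds this value; otherwise there is a single reducer.

- set hive.exec.reducers.max=999; the upper limit on the number of reducers. The default here is 999.

- set mapreduce.job.reduces = 3; sets the number of reducers directly and overrides the two parameters above.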
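How the three parameters interact can be sketched in a few lines of Python. This is a simplified model, not Hive's actual code: the function name `estimate_reducers` and the input sizes in the usage lines are hypothetical, and the default values are the ones quoted in the list above.

```python
import math


def estimate_reducers(input_bytes,
                      bytes_per_reducer=1_024_000_000,
                      max_reducers=999,
                      fixed_reduces=-1):
    """Simplified model of how the reducer count is chosen.

    input_bytes       -- total input size of the stage (hypothetical values below)
    bytes_per_reducer -- models hive.exec.reducers.bytes.per.reducer
    max_reducers      -- models hive.exec.reducers.max
    fixed_reduces     -- models mapreduce.job.reduces (-1 means "not set")
    """
    # An explicit positive mapreduce.job.reduces overrides the estimate.
    if fixed_reduces > 0:
        return fixed_reduces
    # Otherwise use one reducer per bytes_per_reducer of input,
    # at least 1 and at most max_reducers.
    estimated = math.ceil(input_bytes / bytes_per_reducer)
    return max(1, min(max_reducers, estimated))


# Hypothetical input sizes, chosen only to show the three cases.
print(estimate_reducers(500_000_000))                     # below the threshold
print(estimate_reducers(3_000_000_000))                   # above the threshold
print(estimate_reducers(3_000_000_000, fixed_reduces=5))  # fixed value wins
```

The sketch only covers the first stage of a query. As the logs in this section show, the final `order by` stage always runs with a single reducer determined at compile time.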

Check the current reduces setting (-1 means no fixed value is set, so Hive estimates the count):

hive> set mapreduce.job.reduces;

mapreduce.job.reduces=-1

5.2.1 Reducer count not set: the number of reducers is estimated automatically from the input data size

The result is as follows:

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710095252_9c97d548-c734-4df3-8d78-541c7e4ec66f

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 3

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0082, Tracking URL = http://master:8088/proxy/application_1499153664137_0082/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0082

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 09:53:26,240 Stage-1 map = 0%, reduce = 0%

2017-07-10 09:53:54,547 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 2.05 sec

...

2017-07-10 09:54:22,238 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 21.08 sec

MapReduce Total cumulative CPU time: 21 seconds 80 msec

Ended Job = job_1499153664137_0082

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0083, Tracking URL = http://master:8088/proxy/application_1499153664137_0083/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0083

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2017-07-10 09:54:59,413 Stage-2 map = 0%, reduce = 0%

2017-07-10 09:55:27,659 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.1 sec

2017-07-10 09:55:56,728 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.43 sec

MapReduce Total cumulative CPU time: 2 seconds 430 msec

Ended Job = job_1499153664137_0083

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 21.08 sec HDFS Read: 47331544 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.43 sec HDFS Read: 5826 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 23 seconds 510 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Animals 86444

Pets 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 196.576 seconds, Fetched: 25 row(s)

hive> set hive.merge.mapfiles=true;

hive> set hive.merge.mapredfiles=true;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710095757_8fc6f5e5-6b3a-4618-b8b0-57ec16ea0225

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 3

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0084, Tracking URL = http://master:8088/proxy/application_1499153664137_0084/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0084

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 09:58:31,781 Stage-1 map = 0%, reduce = 0%

...

2017-07-10 09:59:24,606 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 20.81 sec

MapReduce Total cumulative CPU time: 20 seconds 810 msec

Ended Job = job_1499153664137_0084

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0085, Tracking URL = http://master:8088/proxy/application_1499153664137_0085/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0085

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2017-07-10 10:00:00,596 Stage-2 map = 0%, reduce = 0%

2017-07-10 10:00:29,530 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.08 sec

2017-07-10 10:00:58,684 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.67 sec

MapReduce Total cumulative CPU time: 2 seconds 670 msec

Ended Job = job_1499153664137_0085

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 20.81 sec HDFS Read: 47331712 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.67 sec HDFS Read: 5826 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 23 seconds 480 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Animals 86444

Pets 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 187.501 seconds, Fetched: 25 row(s)

5.2.2 Setting the number of reducers to 3

The result is as follows:

hive> set mapreduce.job.reduces=3;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710100303_395d684c-fdd7-4ac6-a1f1-d7aa9269dd2a

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Defaulting to jobconf value of: 3

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0086, Tracking URL = http://master:8088/proxy/application_1499153664137_0086/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0086

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 3

2017-07-10 10:04:03,317 Stage-1 map = 0%, reduce = 0%

2017-07-10 10:04:32,522 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 2.0 sec

2017-07-10 10:04:36,916 Stage-1 map = 34%, reduce = 0%, Cumulative CPU 6.11 sec

2017-07-10 10:04:41,375 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 7.62 sec

2017-07-10 10:04:48,683 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 16.05 sec

2017-07-10 10:04:50,754 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 17.43 sec

2017-07-10 10:04:53,926 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 19.1 sec

2017-07-10 10:04:57,047 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 20.75 sec

MapReduce Total cumulative CPU time: 20 seconds 750 msec

Ended Job = job_1499153664137_0086

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0087, Tracking URL = http://master:8088/proxy/application_1499153664137_0087/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0087

Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

2017-07-10 10:05:32,722 Stage-2 map = 0%, reduce = 0%

2017-07-10 10:05:59,576 Stage-2 map = 50%, reduce = 0%, Cumulative CPU 0.93 sec

2017-07-10 10:06:00,604 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 2.03 sec

2017-07-10 10:06:19,183 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 3.61 sec

MapReduce Total cumulative CPU time: 3 seconds 610 msec

Ended Job = job_1499153664137_0087

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 20.75 sec HDFS Read: 47331712 HDFS Write: 996 SUCCESS

Stage-Stage-2: Map: 2 Reduce: 1 Cumulative CPU: 3.61 sec HDFS Read: 7527 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 24 seconds 360 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

People 447581

Blogs 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Pets 86444

Animals 86444

Travel 82068

Events 82068

Education 54133

Technology 50925

Science 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 177.949 seconds, Fetched: 25 row(s)

5.2.3 Result with the number of reducers set to 5

hive> set mapreduce.job.reduces=5;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710100909_13293e63-288b-43d2-aa71-b50fd3992a9d

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Defaulting to jobconf value of: 5

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0088, Tracking URL = http://master:8088/proxy/application_1499153664137_0088/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0088

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 5

2017-07-10 10:10:23,022 Stage-1 map = 0%, reduce = 0%

2017-07-10 10:10:54,170 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 3.81 sec

2017-07-10 10:10:56,290 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 9.27 sec

2017-07-10 10:10:58,461 Stage-1 map = 75%, reduce = 0%, Cumulative CPU 11.33 sec

2017-07-10 10:11:05,880 Stage-1 map = 88%, reduce = 0%, Cumulative CPU 14.86 sec

2017-07-10 10:11:07,987 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 15.2 sec

2017-07-10 10:11:14,235 Stage-1 map = 100%, reduce = 20%, Cumulative CPU 16.87 sec

2017-07-10 10:11:18,373 Stage-1 map = 100%, reduce = 40%, Cumulative CPU 18.56 sec

2017-07-10 10:11:19,412 Stage-1 map = 100%, reduce = 60%, Cumulative CPU 20.24 sec

2017-07-10 10:11:21,495 Stage-1 map = 100%, reduce = 80%, Cumulative CPU 21.66 sec

2017-07-10 10:11:22,525 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 23.08 sec

MapReduce Total cumulative CPU time: 23 seconds 80 msec

Ended Job = job_1499153664137_0088

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0089, Tracking URL = http://master:8088/proxy/application_1499153664137_0089/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0089

Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

2017-07-10 10:12:00,374 Stage-2 map = 0%, reduce = 0%

2017-07-10 10:12:27,396 Stage-2 map = 50%, reduce = 0%, Cumulative CPU 0.91 sec

2017-07-10 10:12:28,425 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 2.03 sec

2017-07-10 10:12:46,169 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 3.61 sec

MapReduce Total cumulative CPU time: 3 seconds 610 msec

Ended Job = job_1499153664137_0089

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 5 Cumulative CPU: 23.08 sec HDFS Read: 47339903 HDFS Write: 1188 SUCCESS

Stage-Stage-2: Map: 2 Reduce: 1 Cumulative CPU: 3.61 sec HDFS Read: 8218 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 26 seconds 690 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

Blogs 447581

People 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Style 124885

Howto 124885

Animals 86444

Pets 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Nonprofits 16925

Activism 16925

Gaming 10182

Time taken: 184.918 seconds, Fetched: 25 row(s)

5.2.4 Setting the number of reducers to 2

hive> set mapreduce.job.reduces=2;

hive> select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

Query ID = hadoop_20170710101515_d3f7eb83-0edf-463b-90d6-306b6eed2079

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Defaulting to jobconf value of: 2

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0090, Tracking URL = http://master:8088/proxy/application_1499153664137_0090/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0090

Hadoop job information for Stage-1: number of mappers: 4; number of reducers: 2

2017-07-10 10:16:03,018 Stage-1 map = 0%, reduce = 0%

2017-07-10 10:16:31,593 Stage-1 map = 25%, reduce = 0%, Cumulative CPU 1.99 sec

2017-07-10 10:16:34,769 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 5.8 sec

2017-07-10 10:16:51,865 Stage-1 map = 63%, reduce = 0%, Cumulative CPU 14.03 sec

2017-07-10 10:16:52,986 Stage-1 map = 75%, reduce = 0%, Cumulative CPU 14.28 sec

2017-07-10 10:16:54,084 Stage-1 map = 84%, reduce = 0%, Cumulative CPU 15.01 sec

2017-07-10 10:16:55,138 Stage-1 map = 84%, reduce = 13%, Cumulative CPU 15.39 sec

2017-07-10 10:16:56,210 Stage-1 map = 100%, reduce = 13%, Cumulative CPU 16.39 sec

2017-07-10 10:16:57,298 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 17.73 sec

2017-07-10 10:16:58,330 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 19.42 sec

MapReduce Total cumulative CPU time: 19 seconds 420 msec

Ended Job = job_1499153664137_0090

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1499153664137_0091, Tracking URL = http://master:8088/proxy/application_1499153664137_0091/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1499153664137_0091

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2017-07-10 10:17:35,020 Stage-2 map = 0%, reduce = 0%

2017-07-10 10:18:01,896 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 0.9 sec

2017-07-10 10:18:31,798 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.45 sec

MapReduce Total cumulative CPU time: 2 seconds 450 msec

Ended Job = job_1499153664137_0091

MapReduce Jobs Launched:

Stage-Stage-1: Map: 4 Reduce: 2 Cumulative CPU: 19.42 sec HDFS Read: 47327614 HDFS Write: 900 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.45 sec HDFS Read: 5475 HDFS Write: 356 SUCCESS

Total MapReduce CPU Time Spent: 21 seconds 870 msec

OK

Entertainment 1304724

Music 1274825

Comedy 449652

Blogs 447581

People 447581

Film 442109

Animation 442109

Sports 390619

Politics 186753

News 186753

Autos 169883

Vehicles 169883

Howto 124885

Style 124885

Animals 86444

Pets 86444

Travel 82068

Events 82068

Education 54133

Science 50925

Technology 50925

UNA 42928

Activism 16925

Nonprofits 16925

Gaming 10182

Time taken: 190.953 seconds, Fetched: 25 row(s)

Query used: select tagId, count(a.videoid) as sum from (select videoid,tagId from youtube3 lateral view explode(category) catetory as tagId) a group by a.tagId order by sum desc;

The best number of reducers can only be found by adjusting it repeatedly and measuring each run.

| Number of reducers | CPU time | Details |
|---|---|---|
| Not set (estimated automatically as 3) | 23.51 s | Stage-Stage-1: Map: 4 Reduce: 3 Cumulative CPU: 21.08 sec HDFS Read: 47331544 HDFS Write: 996 SUCCESS Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.43 sec HDFS Read: 5826 HDFS Write: 356 SUCCESS Total MapReduce CPU Time Spent: 23 seconds 510 msec |
| Set to 5 | 26.69 s | Stage-Stage-1: Map: 4 Reduce: 5 Cumulative CPU: 23.08 sec HDFS Read: 47339903 HDFS Write: 1188 SUCCESS Stage-Stage-2: Map: 2 Reduce: 1 Cumulative CPU: 3.61 sec HDFS Read: 8218 HDFS Write: 356 SUCCESS Total MapReduce CPU Time Spent: 26 seconds 690 msec |
| Set to 2 | 21.87 s | Stage-Stage-1: Map: 4 Reduce: 2 Cumulative CPU: 19.42 sec HDFS Read: 47327614 HDFS Write: 900 SUCCESS Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 2.45 sec HDFS Read: 5475 HDFS Write: 356 SUCCESS Total MapReduce CPU Time Spent: 21 seconds 870 msec |

…

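So for this query, measured by total CPU time, the best number of reducers is 2. The elapsed times differ only slightly between runs (177.949 s with 3 reducers, 184.918 s with 5, 190.953 s with 2), so the gain is small.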
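A minimal sketch of how the comparison can be pulled out of the logs automatically, assuming the two summary lines always have the form shown in this section. The names `parse_run` and `runs` are illustrative, and the log excerpts are copied from the four runs in 5.2.1 to 5.2.4.

```python
import re

# Patterns for the two summary lines Hive prints at the end of a query.
CPU_RE = re.compile(
    r"Total MapReduce CPU Time Spent: (?:(\d+) minutes )?(\d+) seconds (\d+) msec"
)
ELAPSED_RE = re.compile(r"Time taken: ([\d.]+) seconds")


def parse_run(log_text):
    """Return (cpu_seconds, elapsed_seconds) parsed from one query's log."""
    cpu = CPU_RE.search(log_text)
    elapsed = ELAPSED_RE.search(log_text)
    if cpu is None or elapsed is None:
        raise ValueError("log does not contain both summary lines")
    minutes = int(cpu.group(1) or 0)
    cpu_seconds = minutes * 60 + int(cpu.group(2)) + int(cpu.group(3)) / 1000
    return cpu_seconds, float(elapsed.group(1))


# Summary lines copied from the runs in this section.
runs = {
    "not set (auto 3)": "Total MapReduce CPU Time Spent: 23 seconds 510 msec\n"
                        "Time taken: 196.576 seconds, Fetched: 25 row(s)",
    "reduces=3": "Total MapReduce CPU Time Spent: 24 seconds 360 msec\n"
                 "Time taken: 177.949 seconds, Fetched: 25 row(s)",
    "reduces=5": "Total MapReduce CPU Time Spent: 26 seconds 690 msec\n"
                 "Time taken: 184.918 seconds, Fetched: 25 row(s)",
    "reduces=2": "Total MapReduce CPU Time Spent: 21 seconds 870 msec\n"
                 "Time taken: 190.953 seconds, Fetched: 25 row(s)",
}

results = {label: parse_run(text) for label, text in runs.items()}
for label, (cpu_s, elapsed_s) in results.items():
    print(f"{label:18s} cpu={cpu_s:.2f}s elapsed={elapsed_s:.3f}s")

# Rank the settings by each metric separately.
best_cpu = min(results, key=lambda k: results[k][0])
best_elapsed = min(results, key=lambda k: results[k][1])
print("lowest CPU time:", best_cpu)
print("shortest elapsed time:", best_elapsed)
```

Ranking by CPU time and by elapsed time separately is deliberate: on a small cluster, scheduling and job start-up can dominate the elapsed time, so the two metrics need not agree.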