Crawling Zhihu user information with Scrapy

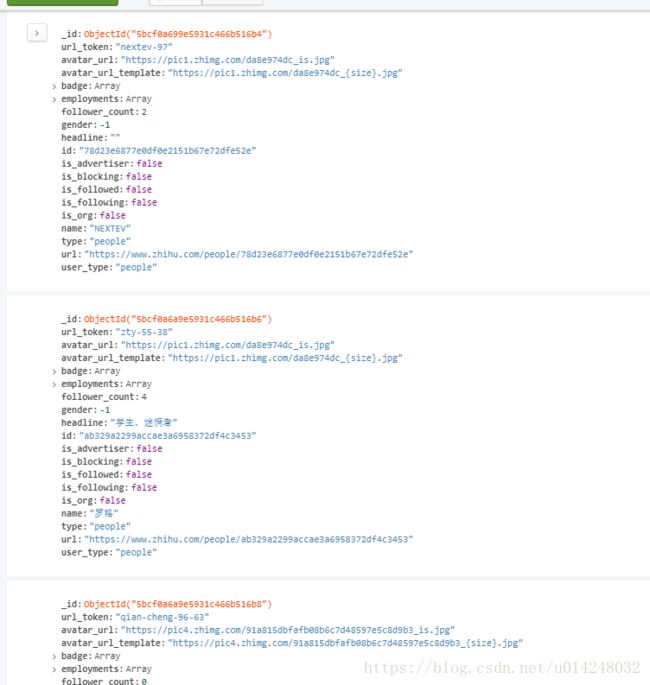

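The crawl starts from the Zhihu user 轮子哥 (vczh, url_token `excited-vczh`): fetch his follower list and the list of users he follows, then do the same for every user found in those lists, and so on recursively. Every user profile is stored in MongoDB.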
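The recursive crawl described above amounts to a graph traversal with de-duplication. A minimal sketch, assuming a small in-memory graph: the `FOLLOWERS` and `FOLLOWEES` dictionaries and the `crawl` function are hypothetical stand-ins for the Zhihu API responses, not part of the project.

```python
from collections import deque

# Hypothetical stand-ins for the follower / followee lists returned by the API.
FOLLOWERS = {"a": ["b", "c"], "b": ["a"], "c": [], "d": []}
FOLLOWEES = {"a": ["d"], "b": ["c"], "c": ["a"], "d": []}


def crawl(start_user, max_users=100):
    """Visit start_user, then everyone reachable through followers and followees."""
    seen = {start_user}
    queue = deque([start_user])
    visited = []
    while queue and len(visited) < max_users:
        user = queue.popleft()
        visited.append(user)  # in a real spider this is where the item would be stored
        for neighbour in FOLLOWERS.get(user, []) + FOLLOWEES.get(user, []):
            if neighbour not in seen:  # skip users that are already scheduled
                seen.add(neighbour)
                queue.append(neighbour)
    return visited
```

In Scrapy itself the scheduler's duplicate filter plays the role of `seen`: a request for a URL that has already been scheduled is dropped unless it is created with `dont_filter=True`.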

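I have not written a proxy pool yet, and crawling too frequently is easily detected by Zhihu, which then asks for a CAPTCHA. For now I set `AUTOTHROTTLE_ENABLED = True` in settings to delay requests, at the cost of a much lower crawl rate.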
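To make the throttling behaviour concrete, here is a simplified sketch of the delay-adjustment rule described in the Scrapy AutoThrottle documentation: the target delay is the response latency divided by the target concurrency, the next delay is the average of the previous delay and that target, and the result is clamped between a minimum and a maximum. The function name `next_delay` and its parameters are hypothetical, and Scrapy's real extension handles more cases (for example, non-200 responses are not allowed to lower the delay).

```python
def next_delay(previous_delay, latency, target_concurrency=1.0,
               min_delay=0.0, max_delay=10.0):
    """Return the download delay to use after one response (simplified)."""
    # min_delay mirrors DOWNLOAD_DELAY, max_delay mirrors AUTOTHROTTLE_MAX_DELAY
    target_delay = latency / target_concurrency
    new_delay = (previous_delay + target_delay) / 2.0
    return min(max(new_delay, min_delay), max_delay)


delay = 2.0  # AUTOTHROTTLE_START_DELAY used in this project
for latency in (0.5, 0.5, 4.0):  # made-up response latencies in seconds
    delay = next_delay(delay, latency)
```

Fast responses pull the delay down gradually, while a slow response pushes it back up, which is why the crawl slows down exactly when the server is struggling.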

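In the video I was following, both the request URL and the paging URL contained the string `/api/v4`. Zhihu has since removed `/api/v4` from the paging URL it returns, so every request for the next page came back as 404. The fix is to insert `/api/v4` right after `https://www.zhihu.com` in the paging URL before requesting it.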
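The URL fix can be sketched as a small standalone function. This is one possible way to do it, working on the parsed path with the standard library; `fix_paging_url` and `API_PREFIX` are hypothetical names, and the example URL in the usage line is illustrative rather than a captured response.

```python
from urllib.parse import urlsplit, urlunsplit

API_PREFIX = "/api/v4"


def fix_paging_url(url):
    """Re-insert /api/v4 at the start of the path if it is missing."""
    parts = urlsplit(url)
    if parts.path.startswith(API_PREFIX):
        return url  # already an API URL, leave it unchanged
    return urlunsplit(parts._replace(path=API_PREFIX + parts.path))


fixed = fix_paging_url("https://www.zhihu.com/members/excited-vczh/followers?offset=20&limit=20")
```

Working on the parsed path rather than on the raw string avoids surprises when the user token itself contains a substring of the host name, such as `com`.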

zhihu.py

# -*- coding: utf-8 -*-
import json

from scrapy import Request, Spider

from ..items import UserItem


class ZhihuSpider(Spider):
    name = 'zhihu'
    allowed_domains = ['zhihu.com']
    start_urls = ['http://zhihu.com/']
    start_user = "excited-vczh"  # user the crawl starts from

    # profile of a single user
    user_url = "https://www.zhihu.com/api/v4/members/{user}?include={include}"
    user_query = "allow_message%2Cis_followed%2Cis_following%2Cis_org%2Cis_blocking%2Cemployments%2Canswer_count%2Cfollower_count%2Carticles_count%2Cgender%2Cbadge%5B%3F(type%3Dbest_answerer)%5D.topics"
    # a user's follower list
    followers_url = "https://www.zhihu.com/api/v4/members/{user}/followers?include={include}&offset={offset}&limit={limit}"
    followers_query = "data%5B*%5D.answer_count%2Carticles_count%2Cgender%2Cfollower_count%2Cis_followed%2Cis_following%2Cbadge%5B%3F(type%3Dbest_answerer)%5D.topics"
    # the list of users this user follows
    follow_url = "https://www.zhihu.com/api/v4/members/{user}/followees?include={include}&offset={offset}&limit={limit}"
    follow_query = "data%5B*%5D.answer_count%2Carticles_count%2Cgender%2Cfollower_count%2Cis_followed%2Cis_following%2Cbadge%5B%3F(type%3Dbest_answerer)%5D.topics"

    def start_requests(self):
        yield Request(
            url=self.followers_url.format(user=self.start_user, include=self.followers_query, offset=0, limit=20),
            callback=self.followers_parse)  # parse the follower list
        yield Request(
            url=self.user_url.format(user=self.start_user, include=self.user_query),
            callback=self.user_parse)  # parse the user's profile
        yield Request(
            url=self.follow_url.format(user=self.start_user, include=self.follow_query, offset=0, limit=20),
            callback=self.follow_parse)  # parse the followee list

    @staticmethod
    def fix_paging_url(page):
        # Zhihu dropped /api/v4 from the paging URL; put it back right after the host
        if "/api/v4" in page:
            return page
        return page.replace("zhihu.com/", "zhihu.com/api/v4/", 1)

    def user_parse(self, response):  # parse one user
        result = json.loads(response.text)
        item = UserItem()
        for field in item.fields:
            if field in result:
                item[field] = result.get(field)
        print(item.get("name"))
        yield item
        yield Request(
            url=self.followers_url.format(user=result.get("url_token"), include=self.followers_query, offset=0,
                                          limit=20),
            callback=self.followers_parse)  # parse this user's follower list
        yield Request(
            url=self.follow_url.format(user=result.get("url_token"), include=self.follow_query, offset=0, limit=20),
            callback=self.follow_parse)  # parse this user's followee list

    def followers_parse(self, response):  # parse a page of the follower list
        results = json.loads(response.text)
        for result in results.get("data", []):
            yield Request(url=self.user_url.format(user=result.get("url_token"), include=self.user_query),
                          callback=self.user_parse)  # parse each follower
        paging = results.get("paging") or {}
        if paging.get("is_end") is False:  # more pages remain
            next_page = self.fix_paging_url(paging.get("next"))  # URL of the next page
            yield Request(url=next_page, callback=self.followers_parse)  # next page

    def follow_parse(self, response):  # parse a page of the followee list
        results = json.loads(response.text)
        for result in results.get("data", []):
            yield Request(url=self.user_url.format(user=result.get("url_token"), include=self.user_query),
                          callback=self.user_parse)  # parse each followed user
        paging = results.get("paging") or {}
        if paging.get("is_end") is False:  # more pages remain
            next_page = self.fix_paging_url(paging.get("next"))  # URL of the next page
            yield Request(url=next_page, callback=self.follow_parse)  # next page
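Both list callbacks do the same two things: collect the `url_token` of every user on the page, and decide whether another page exists. A minimal sketch of that logic as a plain function, so it can be exercised without a network request. `parse_member_page` is a hypothetical helper, and the sample payload is made up to mirror the `data` and `paging` keys the spider reads.

```python
import json


def parse_member_page(text):
    """Return (url_tokens, next_url); next_url is None on the last page."""
    payload = json.loads(text)
    tokens = [user["url_token"] for user in payload.get("data", [])
              if user.get("url_token")]  # ignore entries without a token
    paging = payload.get("paging") or {}
    next_url = paging.get("next") if paging.get("is_end") is False else None
    return tokens, next_url


# Made-up payload with the same shape as a list response.
sample = json.dumps({
    "data": [{"url_token": "user-a"}, {"url_token": "user-b"}, {"name": "no token"}],
    "paging": {"is_end": False, "next": "https://www.zhihu.com/members/x/followers?offset=20"},
})
tokens, next_url = parse_member_page(sample)
```

Keeping this logic in one place would also let the two callbacks share it, since they differ only in which callback handles the next page.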

pipelines.py

import pymongo


class MongoPipeline(object):
    collection_name = 'user'

    def __init__(self, mongo_uri, mongo_db):
        self.mongo_uri = mongo_uri
        self.mongo_db = mongo_db

    @classmethod
    def from_crawler(cls, crawler):
        # read the connection settings defined in settings.py
        return cls(
            mongo_uri=crawler.settings.get('MONGO_URI'),
            mongo_db=crawler.settings.get('MONGO_DATABASE')
        )

    def open_spider(self, spider):
        self.client = pymongo.MongoClient(self.mongo_uri)
        self.db = self.client[self.mongo_db]

    def close_spider(self, spider):
        self.client.close()

    def process_item(self, item, spider):
        # upsert keyed on url_token so that each user is stored only once
        self.db[self.collection_name].update_one(
            {"url_token": item["url_token"]}, {"$set": dict(item)}, upsert=True)
        return item

items.py

# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html
from scrapy import Item, Field


class UserItem(Item):
    avatar_url = Field()
    avatar_url_template = Field()
    badge = Field()
    employments = Field()
    follower_count = Field()
    gender = Field()
    headline = Field()
    id = Field()
    is_advertiser = Field()
    is_blocking = Field()
    is_followed = Field()
    is_following = Field()
    is_org = Field()
    name = Field()
    type = Field()
    url = Field()
    url_token = Field()
    user_type = Field()

settings.py

# -*- coding: utf-8 -*-
# Scrapy settings for spider1 project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
# https://doc.scrapy.org/en/latest/topics/settings.html
# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'spider1'

SPIDER_MODULES = ['spider1.spiders']
NEWSPIDER_MODULE = 'spider1.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'spider1 (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'spider1.middlewares.Spider1SpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'spider1.middlewares.Spider1DownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'spider1.pipelines.MongoPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
AUTOTHROTTLE_ENABLED = True
# The initial download delay
AUTOTHROTTLE_START_DELAY = 2
# The maximum download delay to be set in case of high latencies
AUTOTHROTTLE_MAX_DELAY = 10
# The average number of requests Scrapy should be sending in parallel to
# each remote server
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

MONGO_URI = "localhost"

MONGO_DATABASE = "zhihu"
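
Two details of how these settings reach MongoDB are easy to get wrong: the pipeline looks the keys up by exact name, and each user should be written with an upsert so that a re-crawled user overwrites the stored copy instead of duplicating it. A hedged sketch of both steps follows. `read_mongo_settings` and `upsert_user` are hypothetical helpers, the `collection` argument is assumed to be a pymongo collection (only its `update_one` method is used), and the fallback values are assumptions.

```python
def read_mongo_settings(settings):
    """Fetch the connection settings, falling back to local defaults."""
    # the defaults are assumptions for illustration, not values from the project
    uri = settings.get("MONGO_URI", "mongodb://localhost:27017")
    database = settings.get("MONGO_DATABASE", "zhihu")
    return uri, database


def upsert_user(collection, item):
    """Insert the user, or update the stored copy with the same url_token."""
    document = dict(item)  # convert the Scrapy item to a plain dict
    return collection.update_one(
        {"url_token": document["url_token"]},  # match on the unique user token
        {"$set": document},                    # overwrite the stored fields
        upsert=True,                           # insert when no match exists
    )
```

Inside a pipeline, `read_mongo_settings(crawler.settings)` could be called from `from_crawler`, and `upsert_user(self.db["user"], item)` from `process_item`.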