PyTorch Tutorial for Beginners (7): Convolution Basics

1. Basic convolution operations

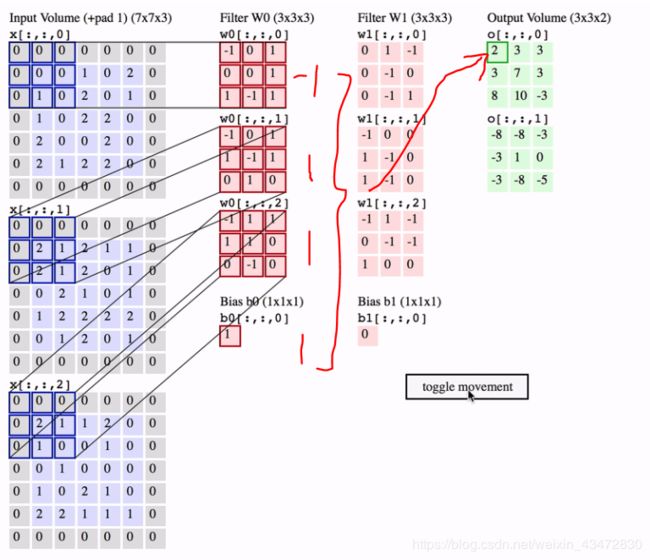

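When an image has several channels, each channel is convolved with its own slice of the kernel; the per-channel results are summed and a bias is added to produce each pixel of the output.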
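The "convolve each channel, sum, add bias" description can be checked numerically. The following is a minimal sketch, not part of the original tutorial: the tensor sizes and the names `ref` and `manual` are chosen here purely for illustration. It compares `F.conv2d` against an explicit loop over input channels.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 3, 5, 5)   # 1 image, 3 input channels, 5*5 pixels (illustrative sizes)
w = torch.randn(2, 3, 3, 3)   # 2 output channels, 3 input channels, 3*3 kernels
b = torch.randn(2)            # one bias per output channel

ref = F.conv2d(x, w, b)       # reference result, shape (1, 2, 3, 3)

# Convolve every input channel on its own, sum the results, then add the bias
manual = torch.zeros_like(ref)
for o in range(2):            # output channels
    for c in range(3):        # input channels
        manual[:, o:o+1] += F.conv2d(x[:, c:c+1], w[o:o+1, c:c+1])
    manual[:, o] += b[o]

print(torch.allclose(ref, manual, atol=1e-5))
```

If the two results agree up to floating-point error, the multi-channel convolution is indeed the sum of the single-channel convolutions plus the bias.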

import torch

import torch.nn as nn

x = torch.randn(10, 1, 28, 28) # 10 images, 1 channel, 28*28 pixels

# 1 input channel (must match the channel count of the input images),
# 3 kernels (the number of output channels), 3*3 kernel size, stride 1, no padding
layer = nn.Conv2d(1, 3, kernel_size=3, stride=1, padding=0)

out = layer(x) # 10 images, 3 output channels, 26*26 pixels

print("shape", out.shape) # output shape

print("weight", layer.weight) # kernel weights

print("bias", layer.bias) # bias

Output:

shape torch.Size([10, 3, 26, 26])

weight Parameter containing:

tensor([[[[ 0.0259, 0.0438, 0.1578],

[-0.2911, -0.1639, 0.1765],

[ 0.2297, -0.2030, -0.2391]]],

[[[-0.0350, -0.0117, 0.2991],

[ 0.3243, -0.2971, 0.1746],

[-0.0482, 0.1946, -0.1645]]],

[[[ 0.2764, -0.2696, -0.3317],

[ 0.1563, -0.1096, 0.1644],

[-0.0760, 0.2367, 0.2039]]]], requires_grad=True)

bias Parameter containing:

tensor([ 0.3205, 0.0316, -0.2219], requires_grad=True)
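The 26*26 output size follows from the usual formula `(n + 2 * padding - kernel_size) // stride + 1`. As a rough sketch, the helper `conv_out_size` below is a hypothetical name introduced here (it is not a PyTorch function) and it ignores dilation; the stride-2 layer is an extra configuration chosen only to exercise the formula.

```python
import torch
import torch.nn as nn

def conv_out_size(n, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution (hypothetical helper, dilation ignored)."""
    return (n + 2 * padding - kernel_size) // stride + 1

# The layer used above: 28 -> 26
print(conv_out_size(28, kernel_size=3, stride=1, padding=0))

# A different configuration, checked against a real layer: 28 -> 14
layer = nn.Conv2d(1, 3, kernel_size=3, stride=2, padding=1)
out = layer(torch.randn(10, 1, 28, 28))
print(out.shape, conv_out_size(28, kernel_size=3, stride=2, padding=1))

# The weight has one 3*3 kernel per (output channel, input channel) pair
print(layer.weight.shape)
```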

2. Pooling layer
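To make the operation concrete, here is a small sketch on a hand-sized input. The 4*4 tensor is an illustrative choice, not from the original tutorial. Max pooling with a 2*2 window and stride 2 keeps only the largest value in each non-overlapping 2*2 block.

```python
import torch
import torch.nn as nn

# A single 4*4 image with values 0..15, laid out row by row
x = torch.arange(16.0).reshape(1, 1, 4, 4)

pool = nn.MaxPool2d(2, stride=2)
out = pool(x)

print(x[0, 0])
print(out[0, 0])  # the maxima of the four 2*2 blocks: 5, 7, 13, 15
```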

x = torch.randn(10, 3, 28, 28) # 10 images, 3 channels, 28*28 pixels

layer = nn.MaxPool2d(2, stride=2) # max pooling with a 2*2 window and stride 2

out = layer(x)

print(out.shape) # torch.Size([10, 3, 14, 14]); height and width are halved

3. Upsampling

import torch

import torch.nn as nn

import torch.nn.functional as F

x = torch.randn(10, 3, 14, 14) # 10 images, 3 channels, 14*14 pixels

out = F.interpolate(x, scale_factor=2, mode="nearest") # upsample by a factor of 2 using nearest-neighbor interpolation

print(out.shape) # torch.Size([10, 3, 28, 28])

4. Batch Norm (batch normalization)

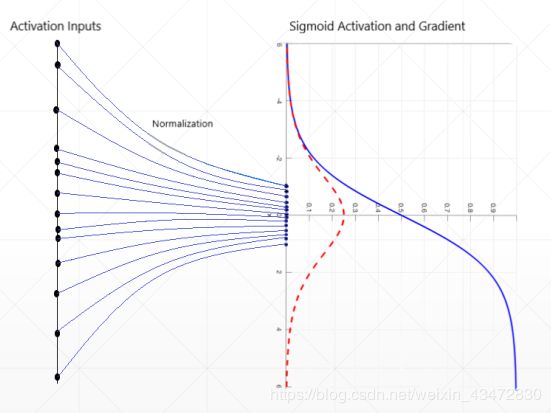

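How the data distribution affects activation functions:

For the sigmoid function, inputs that are very large or very small fall into the flat, saturated parts of the curve, where the gradient vanishes and parameter updates become very slow. The remedy is to change the distribution of the data: normalize it so that it is centered near zero with roughly unit variance, which keeps most values in the region where the gradient is still useful.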
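The saturation effect is easy to see numerically. This is a minimal sketch with input values picked here for illustration: it evaluates the gradient of the sigmoid at a very negative, a zero, and a very positive input.

```python
import torch

# Three sample inputs: saturated on the left, centered, saturated on the right
x = torch.tensor([-10.0, 0.0, 10.0], requires_grad=True)

# Summing lets backward() produce d sigmoid(x_i) / d x_i for each element
torch.sigmoid(x).sum().backward()

print(x.grad)  # 0.25 at x = 0, and on the order of 1e-5 at x = -10 and x = 10
```

The gradient at the center is several thousand times larger than at the saturated ends, which is why keeping activations near zero matters for training speed.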

x = torch.randn(10, 3, 28, 28) # 10 images, 3 channels, 28*28 pixels

layer = nn.BatchNorm2d(3) # normalizes each channel; the argument must equal the channel count of the input

out = layer(x)

print(out.shape) # torch.Size([10, 3, 28, 28])

print(layer.weight) # weight (scale)

print(layer.bias) # bias (shift)

Output:

torch.Size([10, 3, 28, 28])

Parameter containing:

tensor([0.9443, 0.8868, 0.1899], requires_grad=True)

Parameter containing:

tensor([0., 0., 0.], requires_grad=True)
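What the layer computes in training mode can be reproduced by hand. The sketch below is an illustration rather than the tutorial's own code: it uses `affine=False` so that the comparison does not depend on how the weight and bias are initialized, and it shifts and scales the input on purpose so that the normalization has something to correct.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(10, 3, 28, 28) * 5 + 2   # deliberately not zero-mean / unit-variance

layer = nn.BatchNorm2d(3, affine=False)  # no learnable weight/bias, pure normalization
out = layer(x)                           # a freshly built module is in training mode

# Per-channel statistics over the batch and spatial dimensions
mean = x.mean(dim=(0, 2, 3), keepdim=True)
var = x.var(dim=(0, 2, 3), unbiased=False, keepdim=True)
manual = (x - mean) / torch.sqrt(var + layer.eps)

print(torch.allclose(out, manual, atol=1e-5))
print(out.mean(dim=(0, 2, 3)))                  # close to 0 for every channel
print(out.std(dim=(0, 2, 3), unbiased=False))   # close to 1 for every channel
```

With the default `affine=True`, the normalized values are additionally multiplied by `layer.weight` and shifted by `layer.bias`, one value per channel.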