Exploring How Kafka Partition Assignment Works

The official Kafka documentation gives the following description:

In fact, the only metadata retained on a per-consumer basis is the offset or position of that consumer in the log. This offset is controlled by the consumer: normally a consumer will advance its offset linearly as it reads records, but, in fact, since the position is controlled by the consumer it can consume records in any order it likes. For example a consumer can reset to an older offset to reprocess data from the past or skip ahead to the most recent record and start consuming from "now".
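As a rough illustration of the behavior described in this quote, here is a toy model in plain Java. It is not Kafka code: the class ReplayableLogSketch and its methods append, seek and poll are hypothetical names made up for this sketch. It only shows the idea that records stay in the log after being read and that the reader alone decides its position.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model (not Kafka code): one retained log plus a reader-controlled position. */
final class ReplayableLogSketch {
    private final List<String> records = new ArrayList<>();
    // The only reader-side state: the next offset to read.
    private int position = 0;

    /** Appends a record and returns the offset it was stored at. */
    int append(String value) {
        records.add(value);
        return records.size() - 1;
    }

    /** Moves the reader to any valid offset, older or newer than the current one. */
    void seek(int offset) {
        if (offset < 0 || offset > records.size()) {
            throw new IllegalArgumentException("offset out of range: " + offset);
        }
        position = offset;
    }

    /** Returns all records from the current position onward; nothing is deleted. */
    List<String> poll() {
        List<String> batch = new ArrayList<>(records.subList(position, records.size()));
        position = records.size();
        return batch;
    }

    public static void main(String[] args) {
        ReplayableLogSketch log = new ReplayableLogSketch();
        log.append("a");
        log.append("b");
        log.append("c");
        System.out.println(log.poll()); // first pass over the log
        log.seek(1);                    // rewind to an older offset
        System.out.println(log.poll()); // the same records are read again from offset 1
    }
}
```

In this sketch, rewinding with seek(1) makes the next poll return the records at offsets 1 and 2 a second time, which is the "reprocess data from the past" case mentioned in the quote.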

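Unlike many other message queues, Kafka stores messages on disk and does not delete a record just because it has been consumed (records are removed only according to the retention policy). A consumer can therefore start reading from any offset it specifies. I ran the following experiment to explore this feature.

The producer code is omitted; the consumer code is as follows: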

    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092,localhost:9093,localhost:9094");
        props.put("group.id", "test");
        // offsets are not committed automatically
        props.put("enable.auto.commit", "false");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        //consumer.subscribe(Arrays.asList("my-topic"));
        final int minBatchSize = 1;
        List<ConsumerRecord<String, String>> buffer = new ArrayList<>();
        TopicPartition partition0 = new TopicPartition("ycx3", 0);
        TopicPartition partition1 = new TopicPartition("ycx3", 1);
        TopicPartition partition2 = new TopicPartition("ycx3", 2);
        // assign the three partitions manually instead of subscribing to the topic
        consumer.assign(Arrays.asList(partition0, partition1, partition2));
        // start each partition from an explicitly chosen offset
        consumer.seek(partition0, 220);
        consumer.seek(partition1, 160);
        consumer.seek(partition2, 180);
        //consumer.seekToEnd(Arrays.asList(partition1, partition2));
        while (true) {
            try {
                ConsumerRecords<String, String> records = consumer.poll(1000);
                // remaining code omitted...
    }

This raises two questions:

Are offsets shared across partitions?

How are partitions assigned to consumers?

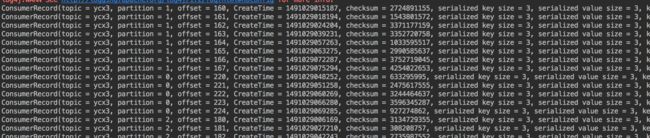

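Take the first question. The partition is Kafka's basic physical storage unit, so offsets are recorded inside each partition (in the segment index). Partitions are spread across machines, so does Kafka use ZooKeeper to manage the offsets of all partitions in one place? The experiment above shows that different partitions can contain the same offset value, so offsets are managed within each partition and not by any central manager. That is why a partition must always be given together with an offset.

Now the second question. Since offsets are stored per partition, how does a consumer that listens to several partitions know which partition to pull data from? (Because the consumer uses a poll model, my guess was that it polls the partitions in turn.)

The following code appears in Kafka's ZookeeperConsumerConnector: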
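To make the per-partition nature of offsets concrete, here is a minimal toy model in plain Java. It is not how Kafka is implemented: PartitionedLogSketch and its methods are hypothetical names used only for illustration, and each partition is simply an in-memory list with its own counter.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model (not Kafka code): every partition keeps its own independent offsets. */
final class PartitionedLogSketch {
    // partition number -> that partition's own append-only list of records
    private final Map<Integer, List<String>> partitions = new HashMap<>();

    /** Appends to one partition; the returned offset counts only within that partition. */
    long append(int partition, String value) {
        List<String> log = partitions.computeIfAbsent(partition, p -> new ArrayList<>());
        log.add(value);
        return log.size() - 1;
    }

    /** A record is addressed by the pair (partition, offset); an offset alone is ambiguous. */
    String read(int partition, long offset) {
        List<String> log = partitions.get(partition);
        if (log == null || offset < 0 || offset >= log.size()) {
            throw new IllegalArgumentException(
                "no record at partition " + partition + ", offset " + offset);
        }
        return log.get((int) offset);
    }

    public static void main(String[] args) {
        PartitionedLogSketch topic = new PartitionedLogSketch();
        long offsetInP0 = topic.append(0, "first record of partition 0");
        long offsetInP1 = topic.append(1, "first record of partition 1");
        // Both appends return the same offset value, yet refer to different records.
        System.out.println(offsetInP0 == offsetInP1);
        System.out.println(topic.read(0, offsetInP0));
        System.out.println(topic.read(1, offsetInP1));
    }
}
```

The read method takes both arguments for the same reason that consumer.seek in the experiment takes a TopicPartition as well as an offset.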

private def rebalance(cluster: Cluster): Boolean = {

val myTopicThreadIdsMap = TopicCount.constructTopicCount(

group, consumerIdString, zkUtils, config.excludeInternalTopics).getConsumerThreadIdsPerTopic

val brokers = zkUtils.getAllBrokersInCluster()

if (brokers.size == 0) {

// This can happen in a rare case when there are no brokers available in the cluster when the consumer is started.

// We log a warning and register for child changes on brokers/id so that rebalance can be triggered when the brokers

// are up.

warn("no brokers found when trying to rebalance.")

zkUtils.zkClient.subscribeChildChanges(BrokerIdsPath, loadBalancerListener)

true

}

else {

/**

* fetchers must be stopped to avoid data duplication, since if the current

* rebalancing attempt fails, the partitions that are released could be owned by another consumer.

* But if we don't stop the fetchers first, this consumer would continue returning data for released

* partitions in parallel. So, not stopping the fetchers leads to duplicate data.

*/

closeFetchers(cluster, kafkaMessageAndMetadataStreams, myTopicThreadIdsMap)

if (consumerRebalanceListener != null) {

info("Invoking rebalance listener before relasing partition ownerships.")

consumerRebalanceListener.beforeReleasingPartitions(

if (topicRegistry.size == 0)

new java.util.HashMap[String, java.util.Set[java.lang.Integer]]

else

topicRegistry.map(topics =>

topics._1 -> topics._2.keys // note this is incorrect, see KAFKA-2284

).toMap.asJava.asInstanceOf[java.util.Map[String, java.util.Set[java.lang.Integer]]]

)

}

releasePartitionOwnership(topicRegistry)

val assignmentContext = new AssignmentContext(group, consumerIdString, config.excludeInternalTopics, zkUtils)

val globalPartitionAssignment = partitionAssignor.assign(assignmentContext)

val partitionAssignment = globalPartitionAssignment.get(assignmentContext.consumerId)

val currentTopicRegistry = new Pool[String, Pool[Int, PartitionTopicInfo]](

valueFactory = Some((_: String) => new Pool[Int, PartitionTopicInfo]))

// fetch current offsets for all topic-partitions

val topicPartitions = partitionAssignment.keySet.toSeq

val offsetFetchResponseOpt = fetchOffsets(topicPartitions)

后续代码略........

}

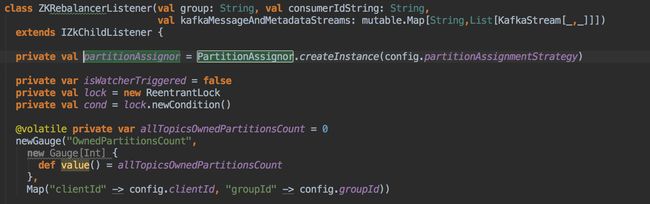

}val globalPartitionAssignment = partitionAssignor.assign(assignmentContext) 发现定义了这样一个类

继续跟踪...

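    val nPartsPerConsumer = curPartitions.size / curConsumers.size // minimum number of partitions every consumer thread is guaranteed
    val nConsumersWithExtraPart = curPartitions.size % curConsumers.size // leftover partitions, given one each to the first few threads
    ...
    for (consumerThreadId <- consumerThreadIdSet) { // for each consumer thread
      val myConsumerPosition = curConsumers.indexOf(consumerThreadId) // position of this thread among all threads, in [0, n-1]
      assert(myConsumerPosition >= 0)
      // startPart is the index of the first partition this thread consumes
      val startPart = nPartsPerConsumer * myConsumerPosition + myConsumerPosition.min(nConsumersWithExtraPart)
      // nParts is the total number of partitions this thread consumes
      val nParts = nPartsPerConsumer + (if (myConsumerPosition + 1 > nConsumersWithExtraPart) 0 else 1)
      ...
    }

Kafka provides two assignment strategies here, range and roundrobin, selected with the partition.assignment.strategy parameter; the default is range. This article only discusses the range strategy. Range simply splits the partitions into contiguous, roughly equal blocks, one block per consumer thread, with the first few threads receiving one extra partition when the division is not even.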
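The arithmetic in the range snippet quoted above can be tried out in isolation. The following is a minimal Java sketch that ports only the two formulas for startPart and nParts. The class RangeAssignmentSketch and the method partitionsFor are hypothetical names for this illustration, and partitions and consumer threads are reduced to plain integer indices, which is a simplification of what the real assignor works with.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of the range arithmetic only; partitions and threads are plain indices here. */
final class RangeAssignmentSketch {

    /** Index of the first partition owned by the thread at the given position. */
    static int startPart(int numPartitions, int numConsumers, int position) {
        int nPartsPerConsumer = numPartitions / numConsumers;
        int nConsumersWithExtraPart = numPartitions % numConsumers;
        return nPartsPerConsumer * position + Math.min(position, nConsumersWithExtraPart);
    }

    /** Number of partitions owned by the thread at the given position. */
    static int nParts(int numPartitions, int numConsumers, int position) {
        int nPartsPerConsumer = numPartitions / numConsumers;
        int nConsumersWithExtraPart = numPartitions % numConsumers;
        return nPartsPerConsumer + (position + 1 > nConsumersWithExtraPart ? 0 : 1);
    }

    /** The contiguous block of partition indices owned by the thread at the given position. */
    static List<Integer> partitionsFor(int numPartitions, int numConsumers, int position) {
        if (numConsumers <= 0 || position < 0 || position >= numConsumers) {
            throw new IllegalArgumentException("invalid consumer count or position");
        }
        int start = startPart(numPartitions, numConsumers, position);
        int count = nParts(numPartitions, numConsumers, position);
        List<Integer> owned = new ArrayList<>();
        for (int p = start; p < start + count; p++) {
            owned.add(p);
        }
        return owned;
    }

    public static void main(String[] args) {
        // 10 partitions shared by 3 consumer threads
        for (int position = 0; position < 3; position++) {
            System.out.println("consumer " + position + " -> " + partitionsFor(10, 3, position));
        }
        // prints:
        // consumer 0 -> [0, 1, 2, 3]
        // consumer 1 -> [4, 5, 6]
        // consumer 2 -> [7, 8, 9]
    }
}
```

With 10 partitions and 3 threads, 10 / 3 = 3 and 10 % 3 = 1, so only the first thread gets an extra partition. With the 3 partitions and single consumer of the experiment above, the same formulas give that consumer all of partitions 0, 1 and 2.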

This article only discusses the strategy for assigning partitions to consumers; the algorithm itself is not examined further here.