Expanding the Disk of a CentOS 6.4 VM

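When I created the virtual machine I didn't give it enough disk space, and later the disk filled up before I noticed. Expanding it was unavoidable. I searched online and followed the methods I found, but ran into all sorts of problems and got stuck halfway through. After putting it aside for a few days I started over and finally got it working.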

PS: If you can't solve a problem, come back to it in a couple of days; a different angle may make it easy.

Enough chatter, let's get started.

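PS2: I drew on many articles online along the way and have included links to the originals. If anything I describe is unclear, have a look at those posts; I hope they help.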

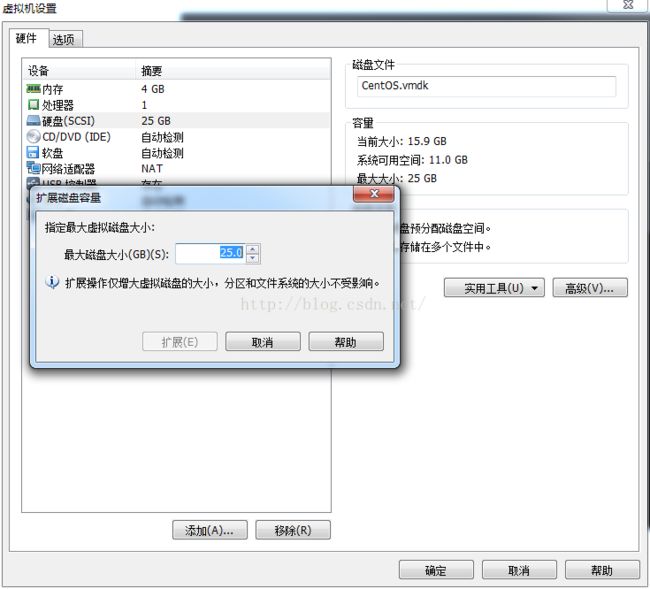

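1. Shut down the virtual machine. In the VM management interface, click [Hard Disk] → [Utilities] and enlarge the virtual disk.

2. Start the VM, log in to Linux as root, and add a new partition.

2.1 Check the current partition layout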

    [root@hadoop-senior conf]# fdisk -l

    Disk /dev/sda: 26.8 GB, 26843545600 bytes
    255 heads, 63 sectors/track, 3263 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x000adb00

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *           1          26      204800   83  Linux
    Partition 1 does not end on cylinder boundary.
    /dev/sda2              26        2089    16571392   83  Linux
    /dev/sda3            2089        2611     4194304   82  Linux swap / Solaris

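The last existing partition is sda3, which ends at cylinder 2611, while the disk now has 3263 cylinders. The new partition, created in the space after sda3, will be sda4.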
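As a rough cross-check of how much room sda4 will get, the geometry in the `fdisk -l` header can be turned into bytes. The sketch below hard-codes the numbers from the output above rather than reading the live disk, and it is only approximate, because the partitions on this disk are aligned to sectors rather than whole cylinders (hence the "does not end on cylinder boundary" warning).

```shell
#!/bin/sh
# Rough estimate of the space after sda3, using the numbers printed by
# `fdisk -l` above (hard-coded, not read from the disk).
heads=255
sectors_per_track=63
sector_size=512
disk_bytes=26843545600   # "Disk /dev/sda: 26.8 GB, 26843545600 bytes"
last_used_cyl=2611       # End cylinder of /dev/sda3

# One cylinder = heads * sectors per track * bytes per sector
cyl_bytes=$((heads * sectors_per_track * sector_size))
# Whole cylinders on the disk (integer division drops the partial last one)
total_cyl=$((disk_bytes / cyl_bytes))
# Cylinders after sda3, i.e. roughly what the new sda4 can use
free_cyl=$((total_cyl - last_used_cyl))
free_mib=$((free_cyl * cyl_bytes / 1024 / 1024))

echo "cylinder size:   $cyl_bytes bytes"
echo "total cylinders: $total_cyl"
echo "free cylinders:  $free_cyl"
echo "free space:      about $free_mib MiB"
```

This gives 652 free cylinders, about 5114 MiB (just under 5 GiB), which fits the 5.00g physical volume that pvs reports later. The same arithmetic puts the end of sda3 at roughly 20 GiB, consistent with the virtual disk having been grown from about 20 GiB to 25 GiB (26843545600 bytes is exactly 25 GiB).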

Reference:

http://www.linuxidc.com/Linux/2011-02/32083.htm

I didn't save a record of the following steps when I did them, so they are described in words only.

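2.2 Run `fdisk /dev/sda`.

2.3 At the command prompt, enter `m` to list the available commands.

2.4 Enter `n` to add a new partition.

2.5 Enter `p` to create a primary partition.

2.6 Press Enter to accept the default first (start) cylinder.

2.7 Press Enter again to accept the default last cylinder, so the partition takes all the remaining space and none is wasted.

2.8 Enter `w` to save the changes.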
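The answers in steps 2.4 to 2.8 can also be fed to fdisk non-interactively. This is a sketch, not what I actually ran: it assumes fdisk picks partition 4 by itself after `p` (it does this when only one primary slot is left), so compare the prompts with your own fdisk before relying on it. The function name `fdisk_keys` is hypothetical.

```shell
#!/bin/sh
# fdisk_keys: hypothetical helper that prints the answers from steps 2.4-2.8,
# one per line, for piping into fdisk.
# Assumption: fdisk selects partition 4 automatically after "p";
# if yours asks for a partition number, add a line with 4 after "p".
#   n  = add a new partition
#   p  = primary partition
#   '' = Enter: default first cylinder
#   '' = Enter: default last cylinder (use all remaining space)
#   w  = write the partition table and quit
fdisk_keys() {
    printf '%s\n' n p '' '' w
}
```

Usage would be `fdisk_keys | fdisk /dev/sda`, followed by `fdisk -l /dev/sda` to confirm that sda4 exists. Because other partitions on /dev/sda are mounted, fdisk may warn that re-reading the partition table failed (device busy); in that case `partprobe /dev/sda` or a reboot should make the kernel see sda4.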

3. Create a directory named disk4 under the root directory (/disk4) and mount the new partition on it.

3.1 The mount failed:

    [root@hadoop-senior conf]# mount /dev/sda4 /disk4
    mount: unknown filesystem type 'LVM2_member'

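mount found an LVM physical-volume signature (LVM2_member) on the partition instead of a filesystem. My guess at the time was that this happened because no volume group or logical volume had been created and nothing had been formatted yet.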
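Before deciding what to do with a partition that mount refuses, it helps to ask blkid which signature is on it. Below is a small hypothetical helper, `check_partition` (the name and messages are my own, not from the referenced articles), built on `blkid -o value -s TYPE`, which prints only the TYPE tag.

```shell
#!/bin/sh
# check_partition: hypothetical helper reporting what blkid finds on a device.
# `blkid -o value -s TYPE DEV` prints just the type, e.g. "ext4" or
# "LVM2_member", and prints nothing when no known signature is found.
check_partition() {
    dev=$1
    sig=$(blkid -o value -s TYPE "$dev")
    case $sig in
        LVM2_member)
            echo "$dev is an LVM physical volume: mount its logical volume, not the partition" ;;
        ext2|ext3|ext4|xfs)
            echo "$dev holds a filesystem ($sig) and can be mounted" ;;
        '')
            echo "$dev has no recognised signature: create a filesystem with mkfs first" ;;
        *)
            echo "$dev has signature $sig" ;;
    esac
}
```

Run as root, `check_partition /dev/sda4` would be expected to take the LVM2_member branch here, matching the mount error above.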

Reference:

http://blog.itpub.net/28602568/viewspace-1797429/

3.1.1 Check the physical volumes:

    [root@hadoop-senior conf]# pvs
      PV         VG   Fmt  Attr PSize PFree
      /dev/sda4       lvm2 a--  5.00g 5.00g

3.1.2 Check the logical volumes:

    [root@hadoop-senior conf]# lvdisplay
      No volume groups found
    [root@hadoop-senior conf]#

3.1.3 Check the volume groups:

    [root@hadoop-senior conf]# vgs
      No volume groups found
    [root@hadoop-senior conf]#
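Steps 3.1.1 to 3.1.3 together show a physical volume that belongs to no volume group: the VG column for /dev/sda4 is empty and vgs finds nothing. A hypothetical helper, `orphan_pvs`, that lists such PVs using the real `pvs --noheadings -o pv_name,vg_name` output fields:

```shell
#!/bin/sh
# orphan_pvs: hypothetical helper listing physical volumes not assigned to a VG.
# With -o pv_name,vg_name, a PV outside any VG prints only one field.
orphan_pvs() {
    pvs --noheadings -o pv_name,vg_name | awk 'NF == 1 { print $1 }'
}
```

On this machine, before step 3.1.4, `orphan_pvs` would be expected to print /dev/sda4.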

3.1.4 Create the volume group and logical volume

Create the volume group:

    [root@hadoop-senior conf]# vgcreate vgdata /dev/sda4    ## vgdata is the volume group name
      Volume group "vgdata" successfully created

Check the result:

    [root@hadoop-senior conf]# vgs
      VG     #PV #LV #SN Attr   VSize VFree
      vgdata   1   0   0 wz--n- 4.99g 4.99g
    [root@hadoop-senior conf]# vgdisplay
      --- Volume group ---
      VG Name               vgdata
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  1
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               4.99 GiB
      PE Size               4.00 MiB
      Total PE              1278
      Alloc PE / Size       0 / 0
      Free PE / Size        1278 / 4.99 GiB
      VG UUID               NY821V-62fa-8XUP-nn3i-2GJJ-0J0v-Bdo0zK
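The VG size in vgdisplay is simply Total PE times PE Size. A quick check with the numbers above, using integer shell arithmetic only:

```shell
#!/bin/sh
# Numbers copied from the vgdisplay output above.
total_pe=1278
pe_size_mib=4

vg_mib=$((total_pe * pe_size_mib))
# Hundredths of a GiB, truncated, so the result can be printed as X.YY
vg_centi_gib=$((vg_mib * 100 / 1024))
printf 'VG size: %d MiB = %d.%02d GiB\n' "$vg_mib" \
    $((vg_centi_gib / 100)) $((vg_centi_gib % 100))
```

This prints 5112 MiB = 4.99 GiB, matching "VG Size". The VG comes out slightly smaller than the 5.00g PV because LVM reserves some space at the start of the PV for its metadata and only counts whole 4 MiB extents.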

3.1.5 Create the logical volume:

    [root@hadoop-senior conf]# lvcreate -L4.9G vgdata -n lvolhome /dev/sda4
      Rounding up size to full physical extent 4.90 GiB
      Logical volume "lvolhome" created

Check the result:

    [root@hadoop-senior conf]# lvdisplay
      --- Logical volume ---
      LV Path                /dev/vgdata/lvolhome
      LV Name                lvolhome
      VG Name                vgdata
      LV UUID                fuugQB-rMIQ-m6Ud-Yfje-kGkM-utFC-0EcdWF
      LV Write Access        read/write
      LV Creation host, time hadoop-senior.ibeifeng.com, 2016-12-02 22:09:09 +0800
      LV Status              available
      # open                 0
      LV Size                4.90 GiB
      Current LE             1255
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           253:0
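`-L4.9G` does not land on an extent boundary: 4.9 GiB is 5017.6 MiB, or 1254.4 extents of 4 MiB, so lvcreate rounds up ("Rounding up size to full physical extent"). The arithmetic below reproduces that, working in tenths of a GiB so everything stays an integer:

```shell
#!/bin/sh
# Reproduce lvcreate's rounding for -L4.9G with a 4 MiB PE size.
size_tenths_gib=49        # 4.9 GiB expressed in tenths
pe_size_mib=4
vg_total_pe=1278          # "Total PE" from vgdisplay

# requested MiB = 49 * 1024 / 10; extents = ceil(requested MiB / 4)
num=$((size_tenths_gib * 1024))
den=$((10 * pe_size_mib))
extents=$(( (num + den - 1) / den ))          # ceiling division
lv_mib=$((extents * pe_size_mib))
lv_sectors=$((lv_mib * 1024 * 1024 / 512))   # 512-byte sectors
left_pe=$((vg_total_pe - extents))

echo "extents: $extents, LV size: $lv_mib MiB ($lv_sectors sectors), free PE left: $left_pe"
```

That gives 1255 extents (the "Current LE" above), 5020 MiB, and 10280960 sectors, the same length `dmsetup status` reports for vgdata-lvolhome in step 3.1.7; 23 extents (92 MiB) stay unused. To hand the whole group to one LV, `lvcreate -l 100%FREE -n lvolhome vgdata` sidesteps the rounding question.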

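3.1.6 Format the partition:

Formatting failed with an error saying the partition is in use. This is because the logical volume created in 3.1.5 is active on top of /dev/sda4, so device-mapper holds the partition (the dmsetup output in 3.1.7 shows the vgdata-lvolhome mapping).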

    [root@hadoop-senior conf]# mkfs.ext4 /dev/sda4
    mke2fs 1.41.12 (17-May-2010)
    /dev/sda4 is apparently in use by the system; will not make a filesystem here!

Reference:

http://www.myhack58.com/Article/54/93/2015/64408.htm
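To see what is holding a partition, the kernel lists stacked devices under /sys/block/<disk>/<partition>/holders. Below is a hypothetical helper, `show_holders`; the optional SYSFS variable is only there so the function can be tried against a fake directory.

```shell
#!/bin/sh
# show_holders: hypothetical helper listing devices stacked on a partition.
# Usage: show_holders sda sda4   (a dm-N entry means device-mapper/LVM holds it)
show_holders() {
    disk=$1
    part=$2
    dir="${SYSFS:-/sys}/block/$disk/$part/holders"
    if [ -n "$(ls -A "$dir" 2>/dev/null)" ]; then
        ls -A "$dir"
    else
        echo "no holders"
    fi
}
```

Here `show_holders sda sda4` would be expected to print dm-0 (lvdisplay reported "Block device 253:0"), and `cat /sys/block/dm-0/dm/name` should then give vgdata-lvolhome.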

3.1.7 Manually remove the device-mapper (DM) mappings:

    [root@hadoop-senior conf]# dmsetup status
    vgdata-lvolhome: 0 10280960 linear
    [root@hadoop-senior conf]# dmsetup remove_all
    [root@hadoop-senior conf]# dmsetup status
    No devices found
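`dmsetup remove_all` acts on every device-mapper device on the machine, not only the one in the way (its man page asks you to use it with care; open devices are left alone unless `--force` is given). It was harmless here because vgdata-lvolhome was the only mapping, but a narrower option is to deactivate just the volume group that owns /dev/sda4 with `vgchange -an`, or to remove the single mapping with `dmsetup remove vgdata-lvolhome`. A sketch of the first option; the wrapper name `release_vg` is hypothetical:

```shell
#!/bin/sh
# release_vg: hypothetical wrapper that frees the partitions of one volume group
# by deactivating its logical volumes (only that VG's DM mappings go away).
release_vg() {
    vg=$1
    vgchange -an "$vg" || return 1
    # List what device-mapper still has; vgdata-lvolhome should be gone.
    dmsetup ls
}
# Lower-level alternative for a single mapping:
#   dmsetup remove vgdata-lvolhome
```

Usage: `release_vg vgdata`, after which /dev/sda4 is no longer held and mkfs will accept it.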

3.1.8 Format and mount again:

    [root@hadoop-senior conf]# mkfs.ext4 /dev/sda4
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=0 blocks, Stripe width=0 blocks
    327680 inodes, 1309631 blocks
    65481 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=1342177280
    40 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 30 mounts or
    180 days, whichever comes first. Use tune2fs -c or -i to override.
    [root@hadoop-senior conf]# mount /dev/sda4 /disk4
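Note that `mkfs.ext4 /dev/sda4` puts the filesystem straight onto the partition, so the vgdata/lvolhome volume created in 3.1.4 and 3.1.5 is not what ends up mounted (df below lists /dev/sda4, not an LVM device). If you would rather keep the LVM layer, for example to grow the volume later, an alternative to 3.1.7 and 3.1.8 is to format and mount the logical volume itself. A sketch under that assumption; the function name is hypothetical and the device path is the "LV Path" from lvdisplay:

```shell
#!/bin/sh
# format_and_mount_lv: hypothetical alternative to steps 3.1.7-3.1.8 that keeps LVM.
# Formats the logical volume (not /dev/sda4) and mounts it on the given directory.
format_and_mount_lv() {
    lv=$1          # e.g. /dev/vgdata/lvolhome (LV Path from lvdisplay)
    mnt=$2         # e.g. /disk4
    mkdir -p "$mnt" || return 1
    mkfs.ext4 "$lv" || return 1
    mount "$lv" "$mnt"
}
```

Usage: `format_and_mount_lv /dev/vgdata/lvolhome /disk4` while the LV is still active (straight after lvcreate). df would then show /dev/mapper/vgdata-lvolhome on /disk4.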

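4. The mount succeeded, and the expansion is done. The added space shows up as a separate filesystem on /disk4; the root filesystem (/dev/sda2) keeps its original 16G, as the df output below shows.

Before mounting: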

    [root@hadoop-senior conf]# df -lh
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        16G   12G  3.3G  78% /
    tmpfs           1.9G   72K  1.9G   1% /dev/shm
    /dev/sda1       194M   29M  156M  16% /boot

After mounting:

    [root@hadoop-senior conf]# df -lh
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        16G   12G  3.3G  78% /
    tmpfs           1.9G   72K  1.9G   1% /dev/shm
    /dev/sda1       194M   29M  156M  16% /boot
    /dev/sda4       5.0G  138M  4.6G   3% /disk4
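Rather than comparing df listings by eye, a script can ask which device backs a directory. A hypothetical helper, `backing_device`, that parses `df -P` (the POSIX output format, which keeps each filesystem on a single line):

```shell
#!/bin/sh
# backing_device: hypothetical helper printing the device behind a path.
# Line 1 of `df -P` output is the header; line 2 describes the filesystem
# that contains the given path.
backing_device() {
    df -P "$1" | awk 'NR == 2 { print $1 }'
}

# Example check (expects the mount from step 3.1.8 to be in place):
#   [ "$(backing_device /disk4)" = /dev/sda4 ] && echo "/disk4 is on /dev/sda4"
```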