HBase 2.1.0 on a Hadoop 3.0.3 Cluster (CentOS 7.5)

Introduction:

HBase is a distributed database whose main job is storing massive datasets and serving real-time queries over them.

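I. HBase use cases

Typical workloads include surveillance camera snapshots, financial transaction records, e-commerce user-behavior data, and telephone call records.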

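II. HBase features

- Large capacity (suited to datasets of a terabyte and above)

- Column-oriented storage (new columns can be added as the data grows)

- Multi-versioning (for example, a person's home address may change over time)

- Scalability (data is stored on HDFS)

- Well suited to sparse data

- High-performance reads and writes

- High reliability with no single point of failure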

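For building the fully distributed Hadoop cluster itself, see:

Setting Up a Fully Distributed Hadoop 3.0.3 Cluster on CentOS 7.5

Current CentOS, JDK, and Hadoop versions: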

[root@master ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

[root@master ~]# java -version

openjdk version "1.8.0_191"

OpenJDK Runtime Environment (build 1.8.0_191-b12)

OpenJDK 64-Bit Server VM (build 25.191-b12, mixed mode)

[root@master ~]# hadoop version

Hadoop 3.0.3

Source code repository https://yjzhangal@git-wip-us.apache.org/repos/asf/hadoop.git -r 37fd7d752db73d984dc31e0cdfd590d252f5e075

Compiled by yzhang on 2018-05-31T17:12Z

Compiled with protoc 2.5.0

From source with checksum 736cdcefa911261ad56d2d120bf1fa

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.0.3.jar

JAVA_HOME can be checked with the following command:

[root@master ~]# echo $JAVA_HOME

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.191.b12-0.el7_5.x86_64/jre

| Hostname | HDFS | MapReduce/Yarn | IP | Zookeeper-3.4.13 | RegionServer | HMaster |
|---|---|---|---|---|---|---|
| master | NameNode | ResourceManager | 10.0.86.245 | √ | | √ |
| ceph1 | DataNode | NodeManager | 10.0.86.246 | √ | √ | backup |
| ceph2 | DataNode | NodeManager | 10.0.86.221 | √ | √ | |
| ceph3 | DataNode | NodeManager | 10.0.86.253 | √ | √ | |

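I. Download and extract HBase 2.1.0

The release tarball can be downloaded from the official site; I had already downloaded it, so I simply extract it with tar.

Mirror download page for the currently supported releases on the HBase site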

[root@master ~]# tar -zxvf hbase-2.1.0-bin.tar.gz

[root@master ~]# mv hbase-2.1.0/ /usr/local/hbase/

II. Configure hbase-env.sh

[root@master ~]# vim /usr/local/hbase/conf/hbase-env.sh

① Set JAVA_HOME

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.191.b12-0.el7_5.x86_64/jre

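② Set HBASE_MANAGES_ZK

HBase ships with a bundled ZooKeeper, but this cluster already runs ZooKeeper 3.4.13, so the parameter is set to false here. Otherwise HBase starts and manages its own bundled ZooKeeper by default.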

export HBASE_MANAGES_ZK=false

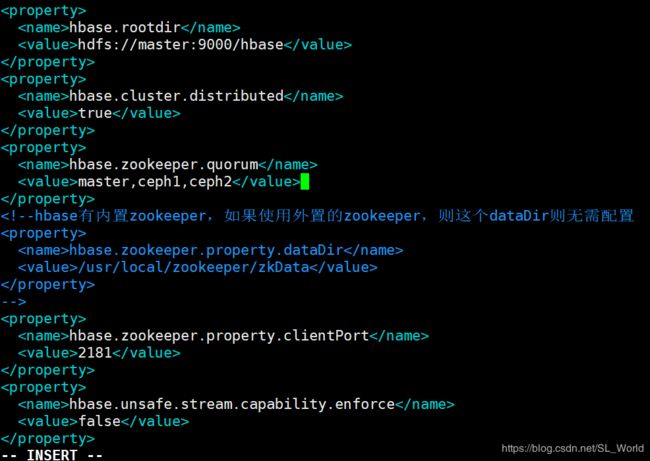

III. Configure hbase-site.xml

[root@master ~]# vim /usr/local/hbase/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,ceph1,ceph2,ceph3</value>

</property>

<!-- HBase bundles its own ZooKeeper; when an external ZooKeeper is used, this dataDir does not need to be configured

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/usr/local/zookeeper/zkData</value>

</property>

-->

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

</configuration>
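Because hbase.rootdir normally has to sit under the same NameNode URI that HDFS itself advertises, a quick consistency check can catch a wrong host or port before the first start. The following is only a sketch: the helper name rootdir_matches_fs is invented for this example, and the commented usage assumes the hdfs command is on the PATH.

```shell
# Hypothetical helper (not part of HBase or Hadoop): succeeds when the
# hbase.rootdir value lives under the given fs.defaultFS URI.
rootdir_matches_fs() {
  rootdir=$1
  default_fs=${2%/}          # drop one trailing slash, if any
  case "$rootdir" in
    "$default_fs"/*) return 0 ;;
    *)               return 1 ;;
  esac
}

# Example usage on the master node (assumes `hdfs` is on the PATH):
#   rootdir_matches_fs "hdfs://master:9000/hbase" \
#     "$(hdfs getconf -confKey fs.defaultFS)" && echo "rootdir OK"
```

The comparison is a plain string prefix match, so it treats a hostname and its IP address as different even if they resolve to the same NameNode.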

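Do not forget the hbase.unsafe.stream.capability.enforce property above; without it, starting HBase later fails with an IllegalStateException. Setting it to false disables the check that the underlying filesystem supports the hflush/hsync stream capabilities.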

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

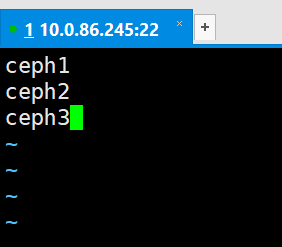

IV. Configure the regionservers and backup-masters files

[root@master ~]# vim /usr/local/hbase/conf/regionservers

[root@master ~]# vim /usr/local/hbase/conf/backup-masters

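The backup-masters file names the standby master: if the active master goes down, ceph1 takes over as master so the cluster stays usable.

Once the cluster is up, this backup node is visible in the web UI.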
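Both files are plain host lists with one hostname per line. As a rough sketch of writing them without an editor, the helper below (write_host_list, a name invented here) does just that. The host names in the usage comment are assumptions inferred from the manual start commands later in this article, not a verified copy of the author's files.

```shell
# Hypothetical helper: write one hostname per line to a host-list file,
# replacing whatever the file contained before.
write_host_list() {
  target=$1
  shift
  printf '%s\n' "$@" > "$target"
}

# Example usage (host names are assumptions, see the note above):
#   write_host_list /usr/local/hbase/conf/regionservers ceph1 ceph2 ceph3
#   write_host_list /usr/local/hbase/conf/backup-masters ceph1
```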

V. Distribute the files to the other nodes

[root@master ~]# rsync -avP /usr/local/hbase ceph1:/usr/local/

[root@master ~]# rsync -avP /usr/local/hbase ceph2:/usr/local/

[root@master ~]# rsync -avP /usr/local/hbase ceph3:/usr/local/
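The three rsync commands above differ only in the host name, so they could be folded into a loop. The sketch below is one possible shape. The function name distribute_dir and the SYNC_CMD override are assumptions added for illustration (SYNC_CMD=echo gives a dry run that only prints the arguments); they are not part of rsync or HBase.

```shell
# Hypothetical helper: copy a directory to the same parent path on each host.
# SYNC_CMD defaults to rsync; set SYNC_CMD=echo to preview the arguments
# without copying anything.
distribute_dir() {
  src=$1
  dest_parent=$2
  shift 2
  for host in "$@"; do
    ${SYNC_CMD:-rsync} -avP "$src" "${host}:${dest_parent}" || return 1
  done
}

# Example usage:
#   distribute_dir /usr/local/hbase /usr/local/ ceph1 ceph2 ceph3
```

Stopping at the first failed host keeps a partial distribution from going unnoticed.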

While we are at it, set the environment variables:

[root@master ~]# vim ~/.bashrc

# Hbase

export HBASE_HOME=/usr/local/hbase

export HBASE_CONF_DIR=/usr/local/hbase/conf

export PATH=$PATH:$HBASE_HOME/bin

Apply the changes immediately:

[root@master ~]# source ~/.bashrc

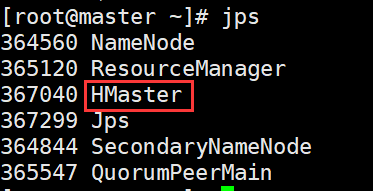

VI. Start HBase

Prerequisite: ZooKeeper and HDFS must already be running.

In the jps output, QuorumPeerMain is the ZooKeeper process.
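The prerequisite can also be checked from a script rather than by eye. The sketch below is a minimal illustration: missing_daemons is a made-up helper that compares the class names in jps output against a list of expected processes, and which names to expect on which node is an assumption based on the role table earlier in this article.

```shell
# Hypothetical helper: print each expected JVM process name that does not
# appear in the given `jps` output (the second column of each line).
missing_daemons() {
  jps_output=$1
  shift
  for name in "$@"; do
    # -x matches whole lines only, so SecondaryNameNode does not count
    # as NameNode.
    printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$name" \
      || printf '%s\n' "$name"
  done
}

# Example usage on the master node; no output means both are running:
#   missing_daemons "$(jps)" QuorumPeerMain NameNode
```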

Start HBase with:

[root@master ~]# start-hbase.sh

Alternatively, log in to each node and start the daemons individually (RegionServers first, then HMaster):

[root@ceph1 ~]# hbase-daemon.sh start regionserver

[root@ceph2 ~]# hbase-daemon.sh start regionserver

[root@ceph3 ~]# hbase-daemon.sh start regionserver

[root@master ~]# hbase-daemon.sh start master

After a successful start, the processes can be checked with jps (ceph1 is the backup master, so it also shows an HMaster process):

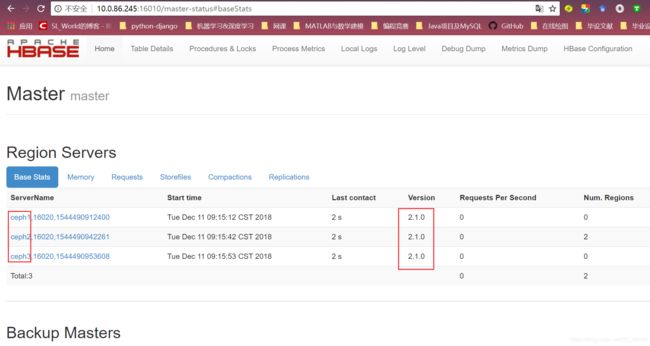

The cluster can also be checked in a browser.

For my HBase 2.1.0 cluster the address is: http://10.0.86.245:16010

For HBase table commands, see:

HBase 2.1.0 Table Commands

Pitfalls encountered during startup

Problem 1:

Symptom: starting HBase prints the following "Class path contains multiple SLF4J bindings" warning:

[root@master ~]# start-hbase.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

...

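Solution: the message means slf4j-log4j12-1.7.25.jar appears twice on the classpath, once under Hadoop and once under HBase, so one of the copies should be removed.

Log in to each node and delete HBase's copy of the jar.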

[root@master ~]# rm -rf /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar

[root@ceph1 ~]# rm -rf /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar

[root@ceph2 ~]# rm -rf /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar

[root@ceph3 ~]# rm -rf /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar
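Before deleting anything, it can help to list where the binding jars actually live on a node. The sketch below is one way to do that with find. The helper name find_slf4j_bindings is invented for this example, and the directories in the usage comment are the ones that appear in the SLF4J message above.

```shell
# Hypothetical helper: list every slf4j-log4j12 binding jar found under the
# given directories, so duplicates are visible before anything is removed.
find_slf4j_bindings() {
  find "$@" -type f -name 'slf4j-log4j12-*.jar' 2>/dev/null | sort
}

# Example usage:
#   find_slf4j_bindings /usr/local/hadoop/share/hadoop/common/lib \
#                       /usr/local/hbase/lib
```

If the listing shows more than one jar, renaming HBase's copy (for instance by appending .bak) instead of deleting it would keep the change easy to undo.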

Problem 2:

The RegionServer process disappears a few seconds after starting. The log shows the following error:

[root@ceph1 ~]# vim /usr/local/hbase/logs/hbase-root-regionserver-ceph1.log

2018-12-10 21:26:11,474 ERROR [main] regionserver.HRegionServer: Failed construction RegionServer

java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:635)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:619)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2669)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:94)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2703)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2685)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:373)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)

at org.apache.hadoop.hbase.util.CommonFSUtils.getRootDir(CommonFSUtils.java:358)

at org.apache.hadoop.hbase.util.CommonFSUtils.isValidWALRootDir(CommonFSUtils.java:407)

at org.apache.hadoop.hbase.util.CommonFSUtils.getWALRootDir(CommonFSUtils.java:383)

at org.apache.hadoop.hbase.regionserver.HRegionServer.initializeFileSystem(HRegionServer.java:691)

at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:600)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hbase.regionserver.HRegionServer.constructRegionServer(HRegionServer.java:2991)

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:63)

at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)

at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:3009)

Caused by: java.lang.ClassNotFoundException: org.apache.htrace.SamplerBuilder

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 24 more

The key information is in two lines:

1)java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

2)Caused by: java.lang.ClassNotFoundException: org.apache.htrace.SamplerBuilder

The fix is to copy the htrace jar into HBase's lib directory on every node that hits this error:

[root@ceph1 ~]# cp $HBASE_HOME/lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar $HBASE_HOME/lib/

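With HBASE_HOME set to /usr/local/hbase as above, the full command is:

[root@ceph1 ~]# cp /usr/local/hbase/lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar /usr/local/hbase/lib/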
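Since the same copy is needed on several nodes, it may be convenient to wrap it so that it can be rerun safely. The following is a sketch under that assumption: ensure_htrace_jar is a hypothetical helper, and the jar name and directories are the ones used in the cp command above.

```shell
# Hypothetical helper: copy htrace-core-3.1.0-incubating.jar from
# lib/client-facing-thirdparty into lib/ unless it is already there.
ensure_htrace_jar() {
  hbase_home=$1
  jar=htrace-core-3.1.0-incubating.jar
  src="$hbase_home/lib/client-facing-thirdparty/$jar"
  if [ -f "$hbase_home/lib/$jar" ]; then
    return 0                      # already in place, nothing to do
  fi
  if [ ! -f "$src" ]; then
    echo "missing: $src" >&2      # report the absent source jar and fail
    return 1
  fi
  cp "$src" "$hbase_home/lib/"
}

# Example usage on each node:
#   ensure_htrace_jar "${HBASE_HOME:-/usr/local/hbase}"
```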

Start the RegionServers again; no matter how many times jps is rerun, HRegionServer no longer disappears:

[root@ceph1 ~]# hbase-daemon.sh start regionserver

[root@ceph2 ~]# hbase-daemon.sh start regionserver

[root@ceph3 ~]# hbase-daemon.sh start regionserver

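Problem 3:

Right after fixing Problem 2 and getting HRegionServer running, starting HMaster on the master node failed as well  ̄へ ̄

The HMaster process disappears a few seconds after starting. The log shows the following error: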

2018-02-08 17:26:54,256 ERROR [Thread-14] master.HMaster: Failed to become active master

java.lang.IllegalStateException: The procedure WAL relies on the ability to hsync for proper

operation during component failures, but the underlying filesystem does not support doing so.

Please check the config value of 'hbase.procedure.store.wal.use.hsync' to set the desired

level of robustness and ensure the config value of 'hbase.wal.dir' points to a FileSystem

mount that can provide it.

at org.apache.hadoop.hbase.procedure2.store.wal.WALProcedureStore.rollWriter(WALProcedureStore.java:1044)

at org.apache.hadoop.hbase.procedure2.store.wal.WALProcedureStore.rollWriter(WALProcedureStore.java:1044)

2018-12-10 22:06:39,256 ERROR [Thread-14] master.HMaster: Failed to become active master

at org.apache.hadoop.hbase.procedure2.store.wal.WALProcedureStore.rollWriter(WALProcedureStore.java:1044)

at org.apache.hadoop.hbase.procedure2.store.wal.WALProcedureStore.rollWriter(WALProcedureStore.java:1044)

at java.lang.Thread.run(Thread.java:748)

Solution:

Add the following property to hbase-site.xml:

[root@master ~]# vim /usr/local/hbase/conf/hbase-site.xml

This property disables the check for stream capabilities (hflush/hsync):

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>
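To confirm that the property really made it into the file on a given node, the value can be read back from the command line. The sketch below is a deliberately simple illustration and not a real XML parser: get_site_property is a hypothetical helper that assumes each <name> and <value> element sits on its own line, as in the file shown in this article, and it does not skip properties inside XML comments.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop-style XML config file. Line-based; assumes <name> and <value> are
# each on their own line and ignores XML comment markers.
get_site_property() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && match($0, /<value>.*<\/value>/) {
      # strip the 7-character opening tag and the 8-character closing tag
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Example usage:
#   get_site_property /usr/local/hbase/conf/hbase-site.xml \
#     hbase.unsafe.stream.capability.enforce
```

An empty result means the property was not found in that file.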

Start HMaster again; no matter how many times jps is rerun, it no longer disappears:

[root@master ~]# hbase-daemon.sh start master