问题1

问题:org.apache.hadoop.hdfs.server.common.Storage: java.io.IOException: Incompatible clusterIDs in /data/program/hadoop/hdfs/data: namenode clusterID = CID-715d917d-2477-41a8-97fe-6b22ae9bad6e; datanode clusterID = CID-11a94f7e-0ba2-4e00-8057-23de4244f219

2017-02-26 00:26:46,150 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool (Datanode Uuid unassigned) service to /192.168.1.131:9000. Exiting.

java.io.IOException: All specified directories are failed to load.

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.

2017-02-26 00:26:46,152 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool (Datanode Uuid unassigned) service to /192.168.1.131:9000

2017-02-26 00:26:46,255 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool (Datanode Uuid unassigned)

2017-02-26 00:26:48,258 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode

2017-02-26 00:26:48,261 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 0

解决办法:每次namenode format会重新创建一个namenodeId,而data目录包含了上次format时的id,namenode format清空了namenode下的数据,但是没有清空datanode下的数据,导致启动时失败,所要做的就是每次fotmat前,清空data下的所有目录。

方法1:停掉集群,删除问题节点的data目录下的所有内容。即hdfs-site.xml文件中配置的dfs.data.dir目录。重新格式化namenode。

方法2:先停掉集群,然后将datanode节点目录/dfs/data/current/VERSION中的修改为与namenode一致即可。

问题2

问题:在配置免密登录的时候,配置的步骤没有错误,但却无法免密登录。

解决办法:首先查看登录的日志:cat /var/log/secure,然后分析原因。在日志中显示如下:

Authentication refused:bad ownership or modes for directory /root/.ssh

该问题是因为权限问题,sshd为了安全,对属主的目录和文件权限有所要求,如果权限不对,则ssh的免密码登录不生效。.ssh目录的权限一般为755或者700。rsa_id.pub以及authorized_keys的权限一般为644,rsa权限必须为600。

问题3

问题:2017-02-26 00:37:12,419 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool BP-1117540795-127.0.0.1-1488040411210 (Datanode Uuid null) service to 192.168.1.131/192.168.1.131:9000 Datanode denied communication with namenode because hostname cannot be resolved (ip=192.168.1.133, hostname=192.168.1.133): DatanodeRegistration(0.0.0.0:50010, datanodeUuid=6bc06fed-eec5-482b-9e2b-e74483edb50f, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-56;cid=CID-24c1246c-c1eb-4152-a856-f114c169c884;nsid=1820489334;c=0)

at org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager.registerDatanode(DatanodeManager.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.registerDatanode(FSNamesystem.

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.registerDatanode(NameNodeRpcServer.

at org.apache.hadoop.hdfs.protocolPB.DatanodeProtocolServerSideTranslatorPB.registerDatanode(DatanodeProtocolServerSideTranslatorPB.

at org.apache.hadoop.hdfs.protocol.proto.DatanodeProtocolProtos$DatanodeProtocolService$2.callBlockingMethod(DatanodeProtocolProtos.

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.

at org.apache.hadoop.ipc.RPC$Server.call(RPC.

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.

at org.apache.hadoop.ipc.Server$Handler.run(Server.

解决办法:该问题是由于未设置host的缘故,之后重新设置好host即可。

问题4

问题:FATAL org.apache.hadoop.yarn.server.nodemanager.NodeManager: Error starting NodeManager

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Recieved SHUTDOWN signal from Resourcemanager ,Registration of NodeManager failed, Message from ResourceManager: NodeManager fromhadoop133 doesn't satisfy minimum allocations, Sending SHUTDOWN signal to the NodeManager.

at org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl.serviceStart(NodeStatusUpdaterImpl.

at org.apache.hadoop.service.AbstractService.start(AbstractService.

at org.apache.hadoop.service.CompositeService.serviceStart(CompositeService.

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.serviceStart(NodeManager.

at org.apache.hadoop.service.AbstractService.start(AbstractService.

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.initAndStartNodeManager(NodeManager.

at org.apache.hadoop.yarn.server.nodemanager.NodeManager.main(NodeManager.

Caused by: org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Recieved SHUTDOWN signal from Resourcemanager ,Registration of NodeManager failed, Message from ResourceManager: NodeManager fromhadoop133 doesn't satisfy minimum allocations, Sending SHUTDOWN signal to the NodeManager.

at org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl.registerWithRM(NodeStatusUpdaterImpl.

at org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl.serviceStart(NodeStatusUpdaterImpl.

... 6 more

解决办法:yarn-site.xml中的内存参数(yarn.nodemanager.resource.memory-mb)设置的问题,好像不能设置1G以下的(2g最好)。

问题5

问题:hadoop No FileSystem for scheme hdfs

解决办法:这个很有可能是客户端Hadoop版本和服务端版本不一致导致的,或者导入的jar包缺失,要确保导入的依赖包完整。

问题6

问题:Hadoop Permission denied: user=GavinCee, access=WRITE, inode="/test":root:supergroup:drwxr-xr-x

解决办法:到服务器上修改hadoop的配置文件:conf/hdfs-core.xml, 找到 dfs.permissions 的配置项 , 将value值改为 false。

dfs.permissions

false

If "true", enable permission checking in HDFS.If "false", permission checking is turned off,but all other behavior is unchanged.Switching from one parameter value to the other does not change the mode,owner or group of files or directories.

修改完重启下hadoop的进程才能生效。

ps,个人开发方便故如此设置,谨慎的还是要创建个用户并授予权限。

问题7

问题:将本地文件复制到hdfs上去或者在hafs上新建文件时会出现以下错误:Exception in thread "main" org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /test. Name node is in safe mode.

解决办法:hdfs在启动开始时会进入安全模式,这时文件系统中的内容不允许修改也不允许删除,直到安全模式结束。安全模式主要是为了系统启动的时候检查各个DataNode上数据块的有效性,同时根据策略必要的复制或者删除部分数据块。运行期通过命令也可以进入安全模式。在实践过程中,系统启动的时候去修改和删除文件也会有安全模式不允许修改的出错提示,只需要等待一会儿即可。

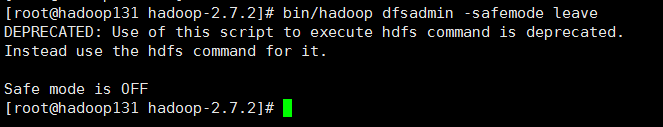

可以等待其自动退出安全模式,也可以使用手动命令来离开安全模式,如下:

关闭成功。

分享: