BERT Text Classification with PyTorch (Part 1)

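This article is an entry-level introduction to using BERT: the first goal is simply to get a model to perform text classification. Later articles will cover the more streamlined huggingface BERT workflow, including both English and Chinese text classification.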

英文部分使用

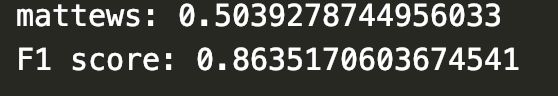

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding中的Cola数据集,任务如下图

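The dataset has four columns: ['sentence_source', 'label', 'label_notes', 'sentence']

The first column is the source of the sentence and the third is mostly missing. Since our goal is text classification, these two columns can be ignored.

The second column, label, takes values in [0, 1] (0 = grammatically unacceptable, 1 = acceptable).

The fourth column, sentence, is the English text.

Let's get started.

Here we process the dataset with our own quick method in order to train the model. Later articles will use the huggingface tooling to process the dataset.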

https://github.com/huggingface/transformers

First, define the paths:

    data_path = 'cola_public/raw/'  # dataset directory
    bert_pre_model = 'bert-base-uncased/pytorch_model.bin'  # pretrained model weights
    bert_config = 'bert-base-uncased/bert_config.json'  # model configuration file
    bert_pre_tokenizer = 'bert-base-uncased/bert-base-uncased-vocab.txt'  # vocabulary

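Next, read the data with pandas and convert it into BERT's input format:

- input ids: the processed sentence, mapped to vocabulary indices

- segment ids, [0,0,0…,1,1,1…]: which sentence each token belongs to. For single-sentence input this can be omitted, in which case [0,0,0…0] is generated by default

- attention mask: the valid (non-padding) length of each sequence, i.e. all positions the model is allowed to attend to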
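To make the three inputs concrete, here is a minimal pure-Python sketch for a single sentence. The vocabulary `TOY_VOCAB` and the helper `build_inputs` are hypothetical and only illustrate the shapes; the real ids come from the BERT vocabulary file.

```python
# Toy vocabulary with made-up ids (NOT the real bert-base-uncased vocabulary).
TOY_VOCAB = {"[PAD]": 0, "[CLS]": 1, "[SEP]": 2, "the": 3, "cat": 4, "sat": 5}


def build_inputs(tokens, max_len):
    """Return (input_ids, token_type_ids, attention_mask) for one sentence."""
    tokens = ["[CLS]"] + list(tokens) + ["[SEP]"]
    if len(tokens) > max_len:
        raise ValueError("sentence is longer than max_len")
    input_ids = [TOY_VOCAB[t] for t in tokens]
    attention_mask = [1] * len(input_ids)        # 1 marks a real token
    n_pad = max_len - len(input_ids)
    input_ids += [TOY_VOCAB["[PAD]"]] * n_pad    # pad ids up to max_len
    attention_mask += [0] * n_pad                # 0 marks padding
    token_type_ids = [0] * max_len               # single sentence: all zeros
    return input_ids, token_type_ids, attention_mask


ids, segments, mask = build_inputs(["the", "cat", "sat"], max_len=8)
print(ids)       # [1, 3, 4, 5, 2, 0, 0, 0]
print(segments)  # [0, 0, 0, 0, 0, 0, 0, 0]
print(mask)      # [1, 1, 1, 1, 1, 0, 0, 0]
```

All three lists have the same length, and the mask is 1 exactly where `input_ids` holds a real token.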

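    import os
    import pandas as pd
    # The import path of pad_sequences differs across Keras versions
    from keras.preprocessing.sequence import pad_sequences
    # Assumed package: the original post does not show its imports
    from transformers import BertTokenizer

    # Read the training data
    df = pd.read_csv(os.path.join(data_path, "in_domain_train.tsv"), delimiter='\t', header=None,
                     names=['sentence_source', 'label', 'label_notes', 'sentence'])

    # Extract the sentences and add BERT's special tokens
    sentences = ['[CLS] ' + sent + ' [SEP]' for sent in df.sentence.values]
    labels = df.label.values
    print("First sentence:", sentences[0])

    tokenizer = BertTokenizer.from_pretrained(bert_pre_tokenizer, do_lower_case=True)
    tokenized_sents = [tokenizer.tokenize(sent) for sent in sentences]
    print("First tokenized sentence:", tokenized_sents[0])

    # Maximum sequence length (BERT supports at most 512; 128 is used here)
    MAX_LEN = 128

    # Convert the tokens to vocabulary ids: word --> idx
    input_ids = [tokenizer.convert_tokens_to_ids(sent) for sent in tokenized_sents]
    print("First sentence as ids:", input_ids[0])

    # Pad with the Keras helper (padding by hand works too).
    # Sequences longer than 128 are truncated at the end, shorter ones are zero-padded at the end.
    input_ids = pad_sequences(input_ids, maxlen=MAX_LEN, dtype="long", truncating="post", padding="post")
    print("First sentence after padding:", input_ids[0])

    # Build the attention masks: 1.0 for real tokens, 0.0 for padding (id 0)
    attention_masks = []
    for seq in input_ids:
        seq_mask = [float(i > 0) for i in seq]
        attention_masks.append(seq_mask)
    print("First attention mask:", attention_masks[0])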
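Padding by hand, as mentioned in the comments, takes only a few lines. The sketch below is a pure-Python stand-in for post-truncation plus post-padding and for the mask construction; the helper names `pad_or_truncate` and `build_masks` and the sample ids are illustrative assumptions.

```python
def pad_or_truncate(seqs, max_len, pad_id=0):
    """Cut each id sequence at the end and pad it at the end to exactly max_len."""
    out = []
    for seq in seqs:
        seq = list(seq)[:max_len]                         # truncate at the end
        out.append(seq + [pad_id] * (max_len - len(seq)))  # pad at the end
    return out


def build_masks(padded, pad_id=0):
    """1.0 for real tokens, 0.0 for padding positions."""
    return [[float(tok != pad_id) for tok in seq] for seq in padded]


# Illustrative id sequences of different lengths
batch = [[101, 2023, 102], [101, 2023, 2003, 1037, 3231, 102]]
padded = pad_or_truncate(batch, max_len=4)
masks = build_masks(padded)
print(padded)  # [[101, 2023, 102, 0], [101, 2023, 2003, 1037]]
print(masks)   # [[1.0, 1.0, 1.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
```

Note that truncating at the end also cuts off the closing [SEP] id of the longer sequence, which is one reason to pick a maximum length that covers most sentences.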


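Split off a validation set. sklearn's train_test_split is used here, but you can also split the data by hand.

    from sklearn.model_selection import train_test_split

    # Split into training and validation sets
    train_inputs, validation_inputs, train_labels, validation_labels = train_test_split(
        input_ids, labels, random_state=2018, test_size=0.1)
    # The same random_state yields the same split, so the masks stay aligned with the inputs
    train_masks, validation_masks, _, _ = train_test_split(
        attention_masks, input_ids, random_state=2018, test_size=0.1)

    print("One training input:", train_inputs[0])
    print("One training mask:", train_masks[0])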
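For a manual split, one option is to shuffle a list of indices once and reuse it for inputs, masks and labels so they stay aligned. This is a sketch under that assumption; `split_indices` and `take` are hypothetical helpers, and the shuffle order will not match sklearn's.

```python
import random


def split_indices(n, test_size=0.1, seed=2018):
    """Shuffle 0..n-1 with a fixed seed and return (train_idx, val_idx)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_val = int(round(n * test_size))
    return idx[n_val:], idx[:n_val]


def take(items, indices):
    """Select the items at the given positions, in that order."""
    return [items[i] for i in indices]


# Toy data: 10 examples whose label can be recomputed from the input
inputs = [[i, i] for i in range(10)]
masks = [[1.0, 1.0] for _ in range(10)]
labels = [i % 2 for i in range(10)]

train_idx, val_idx = split_indices(len(inputs))
train_inputs, val_inputs = take(inputs, train_idx), take(inputs, val_idx)
train_masks, val_masks = take(masks, train_idx), take(masks, val_idx)
train_labels, val_labels = take(labels, train_idx), take(labels, val_idx)
print(len(train_inputs), len(val_inputs))  # 9 1
```

Because one index list drives all three selections, no second split with a matching seed is needed.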

At this point we can build the DataLoaders that feed the model during training.

    import torch
    from torch.utils.data import TensorDataset, DataLoader, RandomSampler, SequentialSampler

    # Convert the training and validation sets to tensors
    train_inputs = torch.tensor(train_inputs)
    validation_inputs = torch.tensor(validation_inputs)
    train_labels = torch.tensor(train_labels)
    validation_labels = torch.tensor(validation_labels)
    train_masks = torch.tensor(train_masks)
    validation_masks = torch.tensor(validation_masks)

    # Build the DataLoaders
    batch_size = 32

    # Training batches are drawn in random order
    train_data = TensorDataset(train_inputs, train_masks, train_labels)
    train_sampler = RandomSampler(train_data)
    train_dataloader = DataLoader(train_data, sampler=train_sampler, batch_size=batch_size)

    # Validation batches are read in their original order
    validation_data = TensorDataset(validation_inputs, validation_masks, validation_labels)
    validation_sampler = SequentialSampler(validation_data)
    validation_dataloader = DataLoader(validation_data, sampler=validation_sampler, batch_size=batch_size)
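Conceptually, the dataset, sampler and loader together do three things: choose an order, cut it into chunks of `batch_size`, and regroup each chunk into one batch per field. The pure-Python generator below is only a sketch of that idea, not PyTorch's implementation; `iter_batches` is a hypothetical name.

```python
import random


def iter_batches(dataset, batch_size, shuffle, seed=None):
    """Yield (ids_batch, mask_batch, label_batch) tuples from a list of examples."""
    order = list(range(len(dataset)))
    if shuffle:                                   # random order for training
        random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        chunk = [dataset[i] for i in order[start:start + batch_size]]
        # Transpose [(ids, mask, label), ...] into (ids..., mask..., label...)
        yield tuple(list(column) for column in zip(*chunk))


# Five toy examples of the form (input_ids, attention_mask, label)
dataset = [([i, 0], [1.0, 0.0], i % 2) for i in range(5)]
batches = list(iter_batches(dataset, batch_size=2, shuffle=False))
print(len(batches))  # 3
print(batches[-1])   # ([[4, 0]], [[1.0, 0.0]], [0])
```

The last batch is smaller than `batch_size` whenever the dataset size is not a multiple of it, which matters later when per-batch metrics are averaged.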

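Load the pretrained model: first load BERT's configuration file, then use the BertForSequenceClassification class for text classification.

    import torch
    # Assumed package: the original post does not show its imports
    from transformers import BertConfig, BertForSequenceClassification

    modelConfig = BertConfig.from_pretrained(bert_config)
    model = BertForSequenceClassification.from_pretrained(bert_pre_model, config=modelConfig)
    # Move the model to the GPU when one is available, otherwise stay on the CPU
    model.to("cuda" if torch.cuda.is_available() else "cpu")
    print(model)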

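Define the optimizer. BertAdam and AdamW are Adam variants shipped with different library versions; the APIs change quickly, so it is enough to know how to use one of them. We also define which parameters receive weight decay.

The LayerNorm parameters and all bias terms are trained without decay; every other parameter is trained with weight decay. The original TensorFlow checkpoints call the LayerNorm parameters 'gamma' and 'beta', but in the loaded PyTorch model they are named 'LayerNorm.weight' and 'LayerNorm.bias', so the filter matches on those names.

Weight decay can be loosely understood as L2 regularization layered on top of Adam; AdamW applies the decay to the weights directly rather than through the gradient.

    # Assumed source of BertAdam: the original post does not show its imports
    from pytorch_pretrained_bert import BertAdam

    param_optimizer = list(model.named_parameters())
    # 'bias' also matches 'LayerNorm.bias'
    no_decay = ['bias', 'LayerNorm.weight']
    optimizer_grouped_parameters = [
        # Parameters trained with weight decay
        {'params': [p for n, p in param_optimizer if not any(nd in n for nd in no_decay)],
         'weight_decay': 0.01},
        # Parameters trained without weight decay
        {'params': [p for n, p in param_optimizer if any(nd in n for nd in no_decay)],
         'weight_decay': 0.0}
    ]
    # BertAdam reads the per-group key 'weight_decay'; a 'weight_decay_rate' key would be ignored.
    # warmup is a fraction of t_total, so it only takes effect when t_total is passed as well.
    optimizer = BertAdam(optimizer_grouped_parameters,
                         lr=2e-5,
                         warmup=.1)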
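The grouping rule is a plain substring match on parameter names, so it can be checked without loading a model. The names below are illustrative of how BERT parameters are typically named in PyTorch (an assumption; inspect `model.named_parameters()` on your own model), and `group_names` is a hypothetical helper.

```python
def group_names(names, no_decay):
    """Split parameter names into (decayed, not_decayed) by substring match."""
    decayed = [n for n in names if not any(nd in n for nd in no_decay)]
    not_decayed = [n for n in names if any(nd in n for nd in no_decay)]
    return decayed, not_decayed


# Illustrative parameter names, not read from a real checkpoint
names = [
    "bert.encoder.layer.0.attention.self.query.weight",
    "bert.encoder.layer.0.attention.self.query.bias",
    "bert.encoder.layer.0.attention.output.LayerNorm.weight",
    "bert.encoder.layer.0.attention.output.LayerNorm.bias",
    "classifier.weight",
    "classifier.bias",
]

decayed, not_decayed = group_names(names, no_decay=["bias", "LayerNorm.weight"])
print(decayed)
# ['bert.encoder.layer.0.attention.self.query.weight', 'classifier.weight']

# Patterns that match no name leave the LayerNorm weight in the decayed group
legacy_decayed, _ = group_names(names, no_decay=["bias", "gamma", "beta"])
print("bert.encoder.layer.0.attention.output.LayerNorm.weight" in legacy_decayed)  # True
```

Printing both groups for the real model is a cheap way to confirm that the patterns in `no_decay` match the names actually in use.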

Next comes training. Note that when labels are passed in during training, the model returns the loss directly; without labels it returns only the logits.

- (loss, logits): train

- (logits): dev or test
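For a classification head with more than one label, the returned loss is a cross-entropy computed from the logits and the labels. A minimal pure-Python sketch of that computation follows, assuming the loss is averaged over the batch; `cross_entropy` and `batch_loss` are illustrative names, not library functions.

```python
import math


def cross_entropy(logits, label):
    """Negative log-probability of the true class under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[label]


def batch_loss(batch_logits, batch_labels):
    """Average the per-example cross-entropy over the batch."""
    losses = [cross_entropy(l, y) for l, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


# Two examples with two classes each: one confident and correct, one undecided
logits = [[2.0, 0.0], [0.0, 0.0]]
labels = [0, 1]
print(batch_loss(logits, labels))
```

The undecided example contributes log(2) to the loss, while the confident correct one contributes much less, so the loss falls as the logits separate in favour of the true class.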

#定义一个计算准确率的函数

def flat_accuracy(preds, labels):

pred_flat = np.argmax(preds, axis=1).flatten()

labels_flat = labels.flatten()

return np.sum(pred_flat == labels_flat) / len(labels_flat)

#训练开始

train_loss_set = []#可以将loss加入到列表中,后期画图使用

epochs = 10

for _ in trange(epochs, desc="Epoch"):

#训练开始

model.train()

tr_loss = 0

nb_tr_examples, nb_tr_steps = 0, 0

for step, batch in enumerate(train_dataloader):

batch = tuple(t.to(device) for t in batch)

b_input_ids, b_input_mask, b_labels = batch

optimizer.zero_grad()

#取第一个位置,BertForSequenceClassification第一个位置是Loss,第二个位置是[CLS]的logits

loss = model(b_input_ids, token_type_ids=None, attention_mask=b_input_mask, labels=b_labels)[0]

train_loss_set.append(loss.item())

loss.backward()

optimizer.step()

tr_loss += loss.item()

nb_tr_examples += b_input_ids.size(0)

nb_tr_steps += 1

print("Train loss: {}".format(tr_loss / nb_tr_steps))

#模型评估

model.eval()

eval_loss, eval_accuracy = 0, 0

nb_eval_steps, nb_eval_examples = 0, 0

for batch in validation_dataloader:

batch = tuple(t.to(device) for t in batch)

b_input_ids, b_input_mask, b_labels = batch

with torch.no_grad():

logits = model(b_input_ids, token_type_ids=None, attention_mask=b_input_mask)[0]

logits = logits.detach().cpu().numpy()

label_ids = b_labels.to('cpu').numpy()

tmp_eval_accuracy = flat_accuracy(logits, label_ids)

eval_accuracy += tmp_eval_accuracy

nb_eval_steps += 1

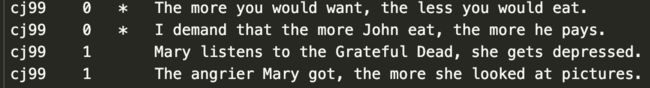

print("Validation Accuracy: {}".format(eval_accuracy / nb_eval_steps))
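One detail of the evaluation loop: it averages the per-batch accuracies, which gives a smaller final batch the same weight as a full one. The toy comparison below, with made-up logits and a hypothetical `count_correct` helper, shows how that can differ from counting correct predictions over all examples.

```python
def predict(logits):
    """Index of the largest logit in each row."""
    return [row.index(max(row)) for row in logits]


def count_correct(logits, labels):
    """Number of rows whose predicted class equals the label."""
    return sum(p == y for p, y in zip(predict(logits), labels))


# Made-up batches of (logits, labels): a full batch of 4 and a final batch of 1
batches = [
    ([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]], [1, 0, 1, 0]),  # 4 of 4 correct
    ([[0.9, 0.1]], [1]),                                               # 0 of 1 correct
]

per_batch = [count_correct(l, y) / len(y) for l, y in batches]
mean_of_batches = sum(per_batch) / len(per_batch)
overall = sum(count_correct(l, y) for l, y in batches) / sum(len(y) for _, y in batches)
print(mean_of_batches, overall)  # 0.5 0.8
```

With equally sized batches the two numbers coincide; on a real validation set the gap is usually small, but counting over all examples gives the exact accuracy.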

[1] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

[2] https://github.com/huggingface/transformers