[SeetaFace] Testing the open-source SeetaFace face recognition engine from Prof. Shiguang Shan (Chinese Academy of Sciences)

SeetaFace has released a second version, SeetaFace2:

https://github.com/seetaface/SeetaFaceEngine2

SeetaFace2 Engine contains three key parts, i.e., SeetaFace2 Detection, SeetaFace2 Alignment and SeetaFace2 Identification. It runs on Android, Windows and Linux.

-

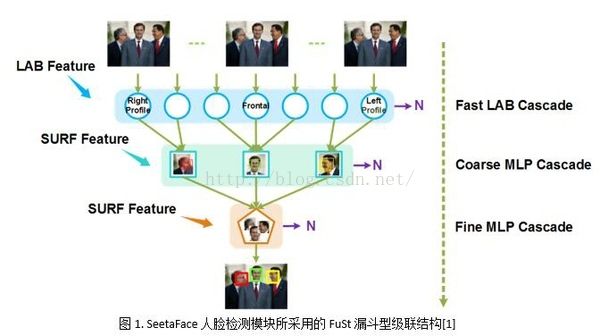

SeetaFace2 Detection. See SeetaFace Detection for more details.

-

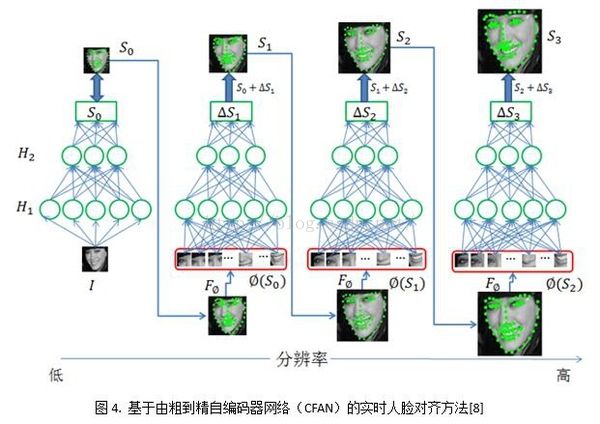

SeetaFace2 Alignment. See SeetaFace Alignment for more details.

-

SeetaFace2 Identification. See SeetaFace Identification for more details.

[Zhihu] How do you rate the SeetaFace face recognition engine open-sourced by Prof. Shiguang Shan of the Chinese Academy of Sciences?

[Source code download] seetaface/SeetaFaceEngine

[Test source code downloads]

中科院山世光老师开源的Seetaface人脸识别引擎SeetaFacesTest.rar

中科院山世光老师开源的Seetaface人脸识别引擎测试SeetaFacesTest_面部五点特征.rar

中科院山世光老师开源的Seetaface人脸识别引擎SeetaFacesTest人脸识别Identification.rar

SeetaFace summary

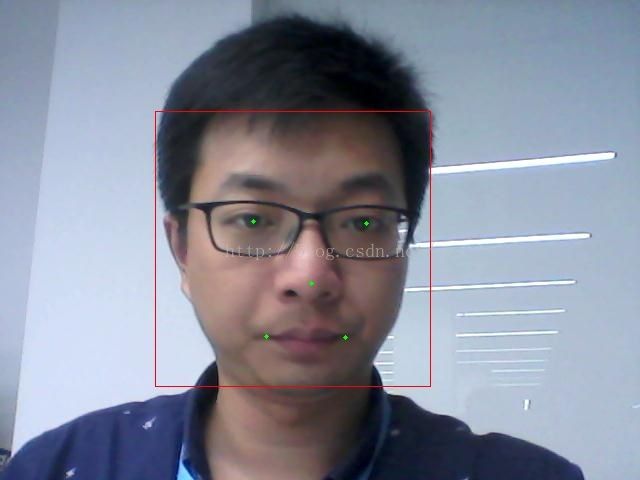

Face detection result:

(image downloaded from Baidu)

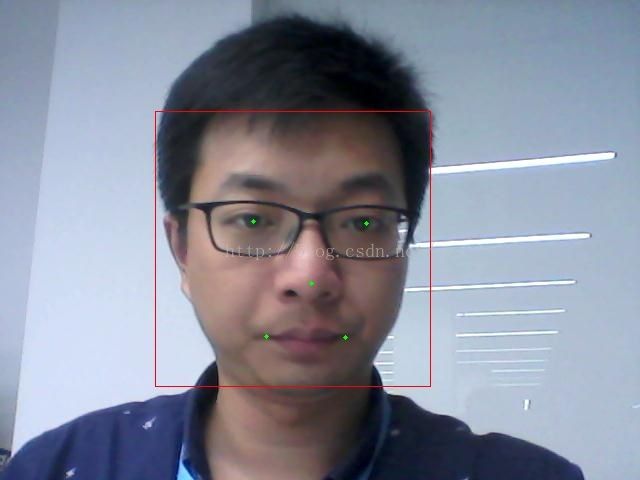

Facial landmark detection result:

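// SeetaFacesTest.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

#include <cstdint>

#include <iostream>

#include <string>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp> // Gaussian Blur

#include "face_detection.h"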

using namespace cv;

using namespace std;

int main()

{

Mat frame = imread("02.jpg"); // input image

namedWindow("Test00"); // create a window named "Test00"

imshow("Test00", frame); // show the image in the window

const char* img_path = "02.jpg";

seeta::FaceDetection detector(".\\Model\\seeta_fd_frontal_v1.0.bin");

detector.SetMinFaceSize(40);

detector.SetScoreThresh(2.f);

detector.SetImagePyramidScaleFactor(0.8f);

detector.SetWindowStep(4, 4);

cv::Mat img = cv::imread(img_path, cv::IMREAD_UNCHANGED);

cv::Mat img_gray;

if (img.channels() != 1)

cv::cvtColor(img, img_gray, cv::COLOR_BGR2GRAY);

else

img_gray = img;

seeta::ImageData img_data;

img_data.data = img_gray.data;

img_data.width = img_gray.cols;

img_data.height = img_gray.rows;

img_data.num_channels = 1;

int64 t0 = cv::getTickCount(); // getTickCount() returns a 64-bit tick count

std::vector<seeta::FaceInfo> faces = detector.Detect(img_data);

int64 t1 = cv::getTickCount();

double secs = (t1 - t0) / cv::getTickFrequency();

cout << "Detections takes " << secs << " seconds " << endl;

#ifdef USE_OPENMP

cout << "OpenMP is used." << endl;

#else

cout << "OpenMP is not used. " << endl;

#endif

#ifdef USE_SSE

cout << "SSE is used." << endl;

#else

cout << "SSE is not used." << endl;

#endif

cout << "Image size (wxh): " << img_data.width << "x"

<< img_data.height << endl;

cv::Rect face_rect;

int32_t num_face = static_cast<int32_t>(faces.size());

for (int32_t i = 0; i < num_face; i++) {

face_rect.x = faces[i].bbox.x;

face_rect.y = faces[i].bbox.y;

face_rect.width = faces[i].bbox.width;

face_rect.height = faces[i].bbox.height;

cv::rectangle(img, face_rect, CV_RGB(0, 0, 255), 4, 8, 0);

}

cv::namedWindow("Test", cv::WINDOW_AUTOSIZE);

cv::imshow("Test", img);

cv::waitKey(0);

cv::destroyAllWindows();

return 0;

}
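The listing above times `Detect` with `cv::getTickCount()`. For a timing helper that does not depend on OpenCV, a minimal sketch built on `std::chrono::steady_clock` might look like the following. `time_seconds` is a hypothetical name introduced here for illustration; it is not part of SeetaFace or OpenCV.

```cpp
#include <chrono>
#include <utility>

// Hypothetical helper: runs `work` once and returns the elapsed
// wall-clock time in seconds, measured with a monotonic clock.
template <typename Work>
double time_seconds(Work&& work) {
    const auto t0 = std::chrono::steady_clock::now();
    std::forward<Work>(work)();
    const auto t1 = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(t1 - t0).count();
}
```

With such a helper, the detection call could be wrapped in a lambda, for example `time_seconds([&] { faces = detector.Detect(img_data); })`, instead of taking two tick counts by hand.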

//---------------------------------------------------------

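// SeetaFacesTest.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

#include <cstdint>

#include <cstring> // memcpy

#include <iostream>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp> // Gaussian Blur

#include "face_detection.h"

#include "face_alignment.h"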

using namespace cv;

using namespace std;

int main()

{

// Initialize face detection model

seeta::FaceDetection detector("seeta_fd_frontal_v1.0.bin");

detector.SetMinFaceSize(40);

detector.SetScoreThresh(2.f);

detector.SetImagePyramidScaleFactor(0.8f);

detector.SetWindowStep(4, 4);

// Initialize face alignment model

seeta::FaceAlignment point_detector("seeta_fa_v1.1.bin");

//load image

const char* picturename = "11.jpg";

IplImage *img_grayscale = NULL;

img_grayscale = cvLoadImage(picturename, 0);

if (img_grayscale == NULL)

{

return 0;

}

IplImage *img_color = cvLoadImage(picturename, 1);

int pts_num = 5;

int im_width = img_grayscale->width;

int im_height = img_grayscale->height;

unsigned char* data = new unsigned char[im_width * im_height];

unsigned char* data_ptr = data;

unsigned char* image_data_ptr = (unsigned char*)img_grayscale->imageData;

int h = 0;

for (h = 0; h < im_height; h++) {

memcpy(data_ptr, image_data_ptr, im_width);

data_ptr += im_width;

image_data_ptr += img_grayscale->widthStep;

}

seeta::ImageData image_data;

image_data.data = data;

image_data.width = im_width;

image_data.height = im_height;

image_data.num_channels = 1;

// Detect faces

std::vector<seeta::FaceInfo> faces = detector.Detect(image_data);

int32_t face_num = static_cast<int32_t>(faces.size());

if (face_num == 0)

{

delete[]data;

cvReleaseImage(&img_grayscale);

cvReleaseImage(&img_color);

return 0;

}

// Detect 5 facial landmarks

seeta::FacialLandmark points[5];

point_detector.PointDetectLandmarks(image_data, faces[0], points);

// Visualize the results

cvRectangle(img_color, cvPoint(faces[0].bbox.x, faces[0].bbox.y), cvPoint(faces[0].bbox.x + faces[0].bbox.width - 1, faces[0].bbox.y + faces[0].bbox.height - 1), CV_RGB(255, 0, 0));

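// Loop body reconstructed after the upstream SeetaFace alignment sample (draws the 5 landmarks).

for (int i = 0; i < pts_num; i++)

{

cvCircle(img_color, cvPoint(points[i].x, points[i].y), 2, CV_RGB(0, 255, 0), CV_FILLED);

}

// The rest of this listing, including a second loop beginning "for (int m = 0; m", is truncated in the original post.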
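The row-by-row `memcpy` in the listing above is needed because each `IplImage` row occupies `widthStep` bytes, which may be more than `width` because of padding, while the buffer handed to `seeta::ImageData` is tightly packed. The sketch below shows the same copy on a plain byte buffer, without OpenCV. `pack_rows` is a hypothetical helper name used only for illustration.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper: copies `height` rows of `width` bytes each from a
// source buffer whose rows start every `stride` bytes (stride >= width)
// into a tightly packed buffer of width * height bytes.
std::vector<unsigned char> pack_rows(const unsigned char* src,
                                     int width, int height, int stride) {
    if (src == nullptr || width <= 0 || height <= 0 || stride < width) {
        return {};
    }
    std::vector<unsigned char> packed(
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    unsigned char* dst = packed.data();
    for (int row = 0; row < height; ++row) {
        std::memcpy(dst, src, static_cast<std::size_t>(width));
        dst += width;   // packed destination: no gap between rows
        src += stride;  // padded source: skip the padding bytes
    }
    return packed;
}
```

Returning a `std::vector` also removes the need for the manual `delete[]` calls that the listing has to repeat on every exit path.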

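When building the source code in FaceAlignment, the compiler reports an error at std::max......

This happens because the two arguments of max must have the same type; casting both to double fixes it:

#include <algorithm>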

using namespace std;

int extend_lx = max((double)(floor(left_x - extend_factor*bbox_w)), double(0));

int extend_rx = min((double)(floor(right_x + extend_factor*bbox_w)), double(im_width - 1));

int extend_ly = max((double)(floor(left_y - (extend_factor - extend_revised_y)*bbox_h)), double(0));

int extend_ry = min((double)(floor(right_y + (extend_factor + extend_revised_y)*bbox_h)), double(im_height - 1));
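The type mismatch can be reproduced outside SeetaFace. `std::max` and `std::min` are function templates with a single type parameter, so mixing a `double` (the result of `floor`) with an `int` such as `0` makes template deduction fail. A minimal sketch of two ways around it follows; `clamp_left` and `clamp_right` are hypothetical helper names, and the parameter names simply mirror the variables in the lines above.

```cpp
#include <algorithm>
#include <cmath>

// std::max(std::floor(x), 0) does not compile: the template parameter
// would have to be both double and int.

// Option 1: make both arguments the same type (0.0 is a double).
int clamp_left(double left_x, double extend_factor, double bbox_w) {
    return static_cast<int>(
        std::max(std::floor(left_x - extend_factor * bbox_w), 0.0));
}

// Option 2: name the template argument, so the int is converted to double.
int clamp_right(double right_x, double extend_factor, double bbox_w,
                int im_width) {
    return static_cast<int>(std::min<double>(
        std::floor(right_x + extend_factor * bbox_w), im_width - 1));
}
```

Either form keeps the comparison in `double` and converts to `int` only once at the end, which is what the casts in the lines above achieve.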

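// SeetaFacesTest.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

#include <cstdint>

#include <cstdio>

#include <ctime>

#include <fstream>

#include <iostream>

#include <string>

#include "face_identification.h"

#include "face_detection.h"

#include <opencv2/opencv.hpp>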

//#pragma comment(lib, "SeetaTest.lib")

using namespace cv;

using namespace std;

using namespace seeta;

#define TEST(major, minor) major##_##minor##_Tester()

#define EXPECT_NE(a, b) if ((a) == (b)) std::cout << "ERROR: "

#define EXPECT_EQ(a, b) if ((a) != (b)) std::cout << "ERROR: "

void TEST(FaceRecognizerTest, CropFace) {

FaceIdentification face_recognizer(".\\model\\seeta_fr_v1.0.bin");

std::string test_dir = ".\\data\\";

/* data initialize */

std::ifstream ifs;

std::string img_name;

FacialLandmark pt5[5];

ifs.open(".\\data\\test_file_list.txt", std::ifstream::in);

clock_t start, count = 0;

int img_num = 0;

while (ifs >> img_name) {

img_num++;

// read image

std::string imgpath = test_dir + img_name;

cv::Mat src_img = cv::imread(imgpath, 1);

EXPECT_NE(src_img.data, nullptr) << "Load image error!";

// ImageData stores the data of an image without memory alignment.

ImageData src_img_data(src_img.cols, src_img.rows, src_img.channels());

src_img_data.data = src_img.data;

// 5 located landmark points (left eye, right eye, nose, left and right

// corners of the mouth).

for (int i = 0; i < 5; ++i) {

ifs >> pt5[i].x >> pt5[i].y;

}

// Create a image to store crop face.

cv::Mat dst_img(face_recognizer.crop_height(), face_recognizer.crop_width(), CV_8UC(face_recognizer.crop_channels()));

ImageData dst_img_data(dst_img.cols, dst_img.rows, dst_img.channels());

dst_img_data.data = dst_img.data;

/* Crop Face */

start = clock();

uint8_t rst_cropface = face_recognizer.CropFace(src_img_data, pt5, dst_img_data);

cout << "CropFace : " << static_cast<int>(rst_cropface) << endl; // a uint8_t would otherwise print as a character

count += clock() - start;

// Show crop face

/*cv::imshow("Crop Face", dst_img);

cv::waitKey(0);

cv::destroyWindow("Crop Face");*/

}

ifs.close();

std::cout << "Test successful! \nAverage crop face time: "

<< 1000.0 * count / CLOCKS_PER_SEC / img_num << "ms" << std::endl;

}

void TEST(FaceRecognizerTest, ExtractFeature) {

FaceIdentification face_recognizer(".\\model\\seeta_fr_v1.0.bin");

std::string test_dir = ".\\data\\";

int feat_size = face_recognizer.feature_size();

EXPECT_EQ(feat_size, 2048);

FILE* feat_file = NULL;

// Load features extracted with Caffe

fopen_s(&feat_file, ".\\data\\feats.dat", "rb");

if (feat_file == NULL) {

std::cout << "ERROR: cannot open feats.dat" << std::endl;

return;

}

int n, c, h, w;

EXPECT_EQ(fread(&n, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&c, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&h, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&w, sizeof(int), 1, feat_file), (unsigned int)1);

float* feat_caffe = new float[n * c * h * w];

float* feat_sdk = new float[n * c * h * w];

EXPECT_EQ(fread(feat_caffe, sizeof(float), n * c * h * w, feat_file), n * c * h * w);

fclose(feat_file); // all reference features are in memory now

EXPECT_EQ(feat_size, c * h * w);

int cnt = 0;

/* Data initialize */

std::ifstream ifs(".\\data\\crop_file_list.txt");

std::string img_name;

clock_t start, count = 0;

int img_num = 0, lb;

double average_sim = 0.0;

while (ifs >> img_name >> lb) {

// read image

std::string imgpath = test_dir + img_name;

cv::Mat src_img = cv::imread(imgpath, 1);

EXPECT_NE(src_img.data, nullptr) << "Load image error!";

// cv::Size takes (width, height)

cv::resize(src_img, src_img, cv::Size(face_recognizer.crop_width(), face_recognizer.crop_height()));

// ImageData stores the data of an image without memory alignment.

ImageData src_img_data(src_img.cols, src_img.rows, src_img.channels());

src_img_data.data = src_img.data;

/* Extract feature */

start = clock();

face_recognizer.ExtractFeature(src_img_data, feat_sdk + img_num * feat_size);

count += clock() - start;

/* Calculate similarity */

float* feat1 = feat_caffe + img_num * feat_size;

float* feat2 = feat_sdk + img_num * feat_size;

float sim = face_recognizer.CalcSimilarity(feat1, feat2);

std::cout << "CalcSimilarity : " << sim << endl;

average_sim += sim;

img_num++;

}

ifs.close();

average_sim /= img_num;

if (1.0 - average_sim > 0.01) {

std::cout << "average similarity: " << average_sim << std::endl;

}

else {

std::cout << "Test successful!\nAverage extract feature time: "

<< 1000.0 * count / CLOCKS_PER_SEC / img_num << "ms" << std::endl;

}

delete[]feat_caffe;

delete[]feat_sdk;

}

void TEST(FaceRecognizerTest, ExtractFeatureWithCrop) {

FaceIdentification face_recognizer(".\\Model\\seeta_fr_v1.0.bin");

std::string test_dir = ".\\data\\";

int feat_size = face_recognizer.feature_size();

EXPECT_EQ(feat_size, 2048);

FILE* feat_file = NULL;

// Load features extracted with Caffe

fopen_s(&feat_file, ".\\data\\feats.dat", "rb");

if (feat_file == NULL) {

std::cout << "ERROR: cannot open feats.dat" << std::endl;

return;

}

int n, c, h, w;

EXPECT_EQ(fread(&n, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&c, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&h, sizeof(int), 1, feat_file), (unsigned int)1);

EXPECT_EQ(fread(&w, sizeof(int), 1, feat_file), (unsigned int)1);

float* feat_caffe = new float[n * c * h * w];

float* feat_sdk = new float[n * c * h * w];

EXPECT_EQ(fread(feat_caffe, sizeof(float), n * c * h * w, feat_file),

n * c * h * w);

fclose(feat_file); // all reference features are in memory now

EXPECT_EQ(feat_size, c * h * w);

int cnt = 0;

/* Data initialize */

std::ifstream ifs(".\\data\\test_file_list.txt");

std::string img_name;

FacialLandmark pt5[5];

clock_t start, count = 0;

int img_num = 0;

double average_sim = 0.0;

while (ifs >> img_name) {

// read image

std::string imgpath = test_dir + img_name;

cv::Mat src_img = cv::imread(imgpath, 1);

EXPECT_NE(src_img.data, nullptr) << "Load image error!";

// ImageData stores the data of an image without memory alignment.

ImageData src_img_data(src_img.cols, src_img.rows, src_img.channels());

src_img_data.data = src_img.data;

// 5 located landmark points (left eye, right eye, nose, left and right

// corners of the mouth).

for (int i = 0; i < 5; ++i) {

ifs >> pt5[i].x >> pt5[i].y;

}

/* Extract feature: ExtractFeatureWithCrop */

start = clock();

face_recognizer.ExtractFeatureWithCrop(src_img_data, pt5,

feat_sdk + img_num * feat_size);

count += clock() - start;

/* Calculate similarity */

float* feat1 = feat_caffe + img_num * feat_size;

float* feat2 = feat_sdk + img_num * feat_size;

float sim = face_recognizer.CalcSimilarity(feat1, feat2);

average_sim += sim;

std::cout << "CalcSimilarity : " << sim << std::endl;

img_num++;

}

ifs.close();

average_sim /= img_num;

if (1.0 - average_sim > 0.02) {

std::cout << "average similarity: " << average_sim << std::endl;

}

else {

std::cout << "Test successful!\nAverage extract feature time: "

<< 1000.0 * count / CLOCKS_PER_SEC / img_num << "ms" << std::endl;

}

delete[]feat_caffe;

delete[]feat_sdk;

}

int main()

{

TEST(FaceRecognizerTest, CropFace);

TEST(FaceRecognizerTest, ExtractFeature);

TEST(FaceRecognizerTest, ExtractFeatureWithCrop);

/*

const char* img_path = "12.jpg";

//seeta::FaceDetection detector(".\\Model\\seeta_fd_frontal_v1.0.bin");

seeta::FaceDetection detector(".\\Model\\seeta_fd_frontal_v1.0.bin");

detector.SetMinFaceSize(100);

detector.SetScoreThresh(2.f);

detector.SetImagePyramidScaleFactor(1.0f);

detector.SetWindowStep(4, 4);

cv::Mat img = cv::imread(img_path, cv::IMREAD_UNCHANGED);

cv::Mat img_gray;

if (img.channels() != 1)

cv::cvtColor(img, img_gray, cv::COLOR_BGR2GRAY);

else

img_gray = img;

seeta::ImageData img_data;

img_data.data = img_gray.data;

img_data.width = img_gray.cols;

img_data.height = img_gray.rows;

img_data.num_channels = 1;

int64 t0 = cv::getTickCount();

std::vector<seeta::FaceInfo> faces = detector.Detect(img_data);

int64 t1 = cv::getTickCount();

double secs = (t1 - t0) / cv::getTickFrequency();

cv::Rect face_rect;

int32_t num_face = static_cast<int32_t>(faces.size());

for (int32_t i = 0; i < num_face; i++) {

face_rect.x = faces[i].bbox.x;

face_rect.y = faces[i].bbox.y;

face_rect.width = faces[i].bbox.width;

face_rect.height = faces[i].bbox.height;

cv::rectangle(img, face_rect, CV_RGB(0, 0, 255), 10, 8, 0);

}

cout << "face count: " << num_face << endl;

Size size(img_data.width/5 , img_data.height/5 );

Mat dst;

cv::resize(img, dst, size);

cv::namedWindow("Test", cv::WINDOW_AUTOSIZE);

cv::imshow("Test", dst);

cv::waitKey(0);

cv::destroyAllWindows();*/

return 0;

}
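The two ExtractFeature tests above accept the SDK features when the average `CalcSimilarity` score is within 0.01 (or 0.02) of 1.0. Assuming the score behaves like a cosine similarity between two feature vectors (an assumption made here; the listing itself does not say how the score is computed), the same acceptance check can be sketched without the SeetaFace library. `cosine_similarity` and `features_match` are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Cosine similarity of two n-dimensional vectors; returns 0 when either
// vector has zero length.
float cosine_similarity(const float* a, const float* b, std::size_t n) {
    double dot = 0.0, norm_a = 0.0, norm_b = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        dot += static_cast<double>(a[i]) * b[i];
        norm_a += static_cast<double>(a[i]) * a[i];
        norm_b += static_cast<double>(b[i]) * b[i];
    }
    if (norm_a == 0.0 || norm_b == 0.0) return 0.0f;
    return static_cast<float>(dot / (std::sqrt(norm_a) * std::sqrt(norm_b)));
}

// Hypothetical acceptance check: `ref` and `test` each hold several
// feature vectors of length `feat_size` stored back to back. Returns true
// when the average similarity is within `tolerance` of 1.0.
bool features_match(const std::vector<float>& ref,
                    const std::vector<float>& test,
                    std::size_t feat_size, double tolerance) {
    if (feat_size == 0 || ref.empty() || ref.size() != test.size() ||
        ref.size() % feat_size != 0) {
        return false;
    }
    const std::size_t count = ref.size() / feat_size;
    double sum = 0.0;
    for (std::size_t i = 0; i < count; ++i) {
        sum += cosine_similarity(ref.data() + i * feat_size,
                                 test.data() + i * feat_size, feat_size);
    }
    return 1.0 - sum / static_cast<double>(count) <= tolerance;
}
```

Checking the sizes up front also guards against dividing by zero when no images are read, a case the listings above do not handle.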

————————————————————————————————————————————————————————

// face_detection.h: the constructor no longer takes a model path; the model is loaded in a separate call.

class FaceDetection {

public:

SEETA_API explicit FaceDetection();

//SEETA_API FaceDetection(const char* model_path);

SEETA_API ~FaceDetection();

SEETA_API void LoadFaceDetectionModel(const char* model_path);

// face_detection.cpp: the matching definitions.

FaceDetection::FaceDetection()

: impl_(new seeta::FaceDetection::Impl()) {

}

void FaceDetection::LoadFaceDetectionModel(const char* model_path){

impl_->detector_->LoadModel(model_path);

}
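The fragment above swaps the path-taking constructor for a default constructor plus a separate `LoadFaceDetectionModel` call, so a detector object can exist before a model file has been chosen. A minimal, library-independent sketch of that two-phase pattern follows. `Detector`, `LoadModel` and `IsLoaded` are hypothetical names, and the "model" here is just the raw bytes of a file, not SeetaFace's real model format.

```cpp
#include <fstream>
#include <iterator>
#include <memory>
#include <string>
#include <vector>

// Hypothetical two-phase detector: construct first, load the model later.
class Detector {
 private:
    // Implementation details hidden behind a pointer (pimpl idiom).
    struct Impl {
        std::vector<char> model_bytes;
        bool loaded = false;
    };
    std::unique_ptr<Impl> impl_;

 public:
    Detector() : impl_(new Impl()) {}

    // Reads the whole file into memory; returns false and leaves the
    // object unchanged if the file cannot be opened.
    bool LoadModel(const std::string& model_path) {
        std::ifstream in(model_path, std::ios::binary);
        if (!in) return false;
        impl_->model_bytes.assign(std::istreambuf_iterator<char>(in),
                                  std::istreambuf_iterator<char>());
        impl_->loaded = true;
        return true;
    }

    bool IsLoaded() const { return impl_->loaded; }
};
```

Unlike the `void` method in the fragment, this sketch returns a status so the caller can tell whether the load succeeded.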

https://blog.csdn.net/taily_duan/article/details/81214815