Parameter settings for the MATLAB Neural Network Toolbox

1. Common parameters

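net.trainParam.epochs  maximum number of training epochs

net.trainParam.goal  performance goal (target training error)

net.trainParam.lr  learning rate

net.trainParam.show  number of epochs between progress displays

net.trainParam.time  maximum training time (in seconds)

These are the ones most commonly used.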

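2. Changing related parameters

Many other properties can also be changed. For details, see the book 《神经网络模型及其matlab仿真程序设计》 (Neural Network Models and Their MATLAB Simulation Program Design).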

3. Questions about the toolbox

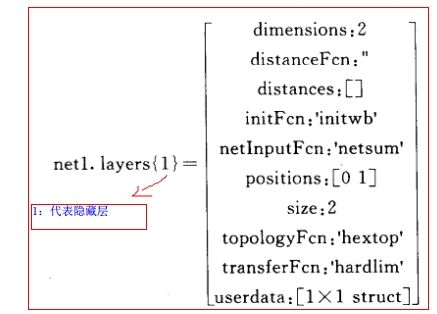

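In the figure above, whichever progress bar reaches its end identifies the criterion that stopped training. In this example, training stopped because the validation error failed to decrease for 6 consecutive validation checks.

The value shown on each progress bar is the value of that quantity when training stopped.

The value to the right of each progress bar is the stopping threshold.

The value on the left: ???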
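The stopping criterion can also be read from code rather than from the progress bars, by inspecting the training record returned by train. The sketch below is only an illustration on made-up data: the sine target, the 10 hidden neurons and the variable names are assumptions, not taken from the original notes. tr.stop, tr.num_epochs and tr.best_epoch are fields of the training record.

```matlab
% Toy data (assumed for illustration): learn t = sin(pi*x) on [-1, 1].
x = linspace(-1, 1, 200);
t = sin(pi * x);

net = feedforwardnet(10);            % 10 hidden neurons, default trainlm
net.trainParam.showWindow = false;   % suppress the training window
[net, tr] = train(net, x, t);

% tr.stop holds the reason training ended (for example a validation stop,
% reaching the goal, or hitting the maximum number of epochs).
fprintf('Stopped because: %s\n', tr.stop);
fprintf('Epochs run: %d, best validation epoch: %d\n', ...
        tr.num_epochs, tr.best_epoch);
```

Because the data division is random, the reported reason and epoch counts can differ from run to run.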

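3.1 Data division

The default is random division (dividerand).

B.D. Ripley (1996), in his classic monograph Pattern Recognition and Neural Networks, gives definitions of these three terms.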

Training set: A set of examples used for learning, which is to fit the parameters of the classifier.

Validation set: A set of examples used to tune the parameters of a classifier, for example to choose the number of hidden units in a neural network.

Test set: A set of examples used only to assess the performance of a fully specified classifier.

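Clearly, the training set is used to train the model, that is, to determine the model parameters, such as the weights of an ANN. The validation set is used for model selection, that is, for the final tuning and choice of the model, such as the structure of the ANN. The test set is used purely to measure how well the trained model generalizes. Of course, the test set does not guarantee that the model is correct; it only indicates that similar data will give similar results with this model.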

1. The error rate estimate of the final model on validation data will be biased (smaller than the true error rate) since the validation set is used to select the final model.

2. After assessing the final model with the test set, YOU MUST NOT tune the model any further.

For a neural network, the model to select is the one with the smallest error on the validation set.
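As a rough sketch of what such a three-way split looks like in the toolbox, dividerand can be called directly to produce the index sets. The sample count Q = 100 is an assumption chosen for illustration; the ratios are the 70/15/15 values used in these notes.

```matlab
Q = 100;                                   % number of samples (assumed)
[trainInd, valInd, testInd] = dividerand(Q, 0.70, 0.15, 0.15);

% The three index sets are disjoint and together cover all Q samples.
allInd = sort([trainInd, valInd, testInd]);
fprintf('train/val/test sizes: %d/%d/%d\n', ...
        numel(trainInd), numel(valInd), numel(testInd));
disp(isequal(allInd, 1:Q));                % true when the split is a partition
```

When train is called, the same division happens internally, controlled by net.divideFcn and net.divideParam.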

net.divideParam.trainRatio = 70/100;

net.divideParam.valRatio = 15/100;

net.divideParam.testRatio = 15/100;

3.2 Training: the training method

It can be specified when creating the network:

net = feedforwardnet(50,'trainlm'); % hidden layer with 50 neurons
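The training function can also be inspected or changed after creation through net.trainFcn (the property that appears in the net listing in section 4). A minimal sketch; the choice of 'trainscg' and 'traingd' here is only an example, not a recommendation from the original notes.

```matlab
net = feedforwardnet(50, 'trainscg');   % 50 hidden neurons, scaled conjugate gradient
disp(net.trainFcn);                     % training function chosen at creation

net.trainFcn = 'traingd';               % switch to plain gradient descent
disp(net.trainParam.lr);                % traingd exposes a learning rate parameter
```

Changing trainFcn resets net.trainParam to the defaults of the new training function, so parameters such as epochs or goal should be set after the training function is chosen.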

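3.3 Validation checks:

The data are automatically divided into three parts: the training set, the validation set and the test set, which do not overlap. Training uses the training set. After each training epoch, the toolbox automatically feeds the validation set through the network and computes the validation error. A number of allowed failures is set for the validation set beforehand (the default is 6 epochs): the toolbox checks whether the validation error has failed to decrease for 6 consecutive checks. If it has not decreased, or has even risen, the validation error is no longer improving, so further training brings no benefit and is stopped; otherwise the network may overfit. That is the purpose of this setting.

How the samples are divided depends on the MATLAB version; in R2009, for example, the division appears to be automatic.

Once validation checks reaches 6, further training no longer improves the network's ability to generalize.

net.trainParam.max_fail = 100; % maximum number of validation failures before stopping (default 6)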
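The counting rule described above can be sketched in plain MATLAB, without the toolbox. This is a simplified illustration, not the toolbox's own code: the validation errors are made up, and maxFail, bestErr, failCount and the other variable names are hypothetical.

```matlab
valErr  = [0.9 0.7 0.5 0.6 0.55 0.52 0.51 0.58 0.6 0.4];  % made-up validation errors
maxFail = 6;                 % plays the role of net.trainParam.max_fail

bestErr = inf; bestEpoch = 0; failCount = 0; stopEpoch = numel(valErr);
for epoch = 1:numel(valErr)
    if valErr(epoch) < bestErr
        bestErr = valErr(epoch);   % new best: remember it and reset the counter
        bestEpoch = epoch;
        failCount = 0;
    else
        failCount = failCount + 1; % no improvement over the best so far
    end
    if failCount >= maxFail
        stopEpoch = epoch;         % maxFail checks in a row without improvement
        break
    end
end
fprintf('stopped at epoch %d, best epoch %d\n', stopEpoch, bestEpoch);
% prints: stopped at epoch 9, best epoch 3
```

The last error value (0.4) is never reached because the counter hits 6 at epoch 9. In the toolbox, the network returned after a validation stop is the one from the best validation epoch.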

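3.4 mu

mu is not a training precision; it is the damping parameter of the training algorithm.

The trainlm function uses the Levenberg-Marquardt training algorithm, and mu is a parameter of that algorithm: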

% Each variable is adjusted according to Levenberg-Marquardt,

% jj = jX * jX

% je = jX * E

% dX = -(jj+I*mu) \ je

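An answer from a forum:

The variable mu determines whether learning proceeds by Newton's method or by gradient descent. The L-M rule for updating the parameters is: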

% jj = jX * jX

% je = jX * E

% dX = -(jj+I*mu) \ je

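As mu grows, the jj term becomes negligible, so learning is dominated by gradient descent, with a step of roughly dX = -je/mu. Whenever an iteration increases the error, mu is increased, until the error no longer increases. If mu becomes too large, however, the steps become so small that learning effectively stops. This happens when a minimum of the error has already been found, which is why training stops when mu reaches its maximum value.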
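The two limits can be checked numerically. The sketch below uses the standard form of the update with explicit transposes, dX = -(J'*J + mu*I) \ (J'*E); the Jacobian J and error vector E are made-up numbers, chosen only to make the comparison visible.

```matlab
J = [1 2; 3 4; 5 6];        % made-up Jacobian (3 errors, 2 parameters)
E = [1; -1; 2];             % made-up error vector
jj = J' * J;
je = J' * E;

gaussNewton = -(jj \ je);                    % limit mu -> 0
for mu = [1e-6 1e6]
    dX = -(jj + mu * eye(2)) \ je;           % L-M step
    gradStep = -je / mu;                     % small gradient-descent step
    fprintf('mu = %g: |dX - GN| = %.2e, |dX - grad step| = %.2e\n', ...
            mu, norm(dX - gaussNewton), norm(dX - gradStep));
end
```

For the small mu the step is close to the Gauss-Newton step; for the large mu it is close to -je/mu, a very short step along the negative gradient.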

**********

net.trainParam.mu 0.001 Initial Mu

net.trainParam.mu_dec 0.1 Mu decrease factor

net.trainParam.mu_inc 10 Mu increase factor

net.trainParam.mu_max 1e10 Maximum Mu
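How these four parameters interact can be sketched with a small Levenberg-Marquardt loop in plain MATLAB. This is a simplified illustration under stated assumptions, not the trainlm source: the curve-fitting problem, the function handles resid and jac, and the stopping tolerance are all made up for the example; only the four mu values come from the list above.

```matlab
% Toy problem (assumed): fit y = a*exp(b*x) to noise-free data, true a = 2, b = -1.
x = (0:0.5:3)';
y = 2 * exp(-1 * x);
p = [1; 0];                                        % initial guess [a; b]

mu = 0.001; mu_dec = 0.1; mu_inc = 10; mu_max = 1e10;
resid = @(p) p(1) * exp(p(2) * x) - y;             % error vector E
jac   = @(p) [exp(p(2) * x), p(1) * x .* exp(p(2) * x)];  % Jacobian of E w.r.t. p

sse = sum(resid(p).^2);
for epoch = 1:100
    E = resid(p);
    J = jac(p);
    while mu <= mu_max
        dp = -(J' * J + mu * eye(2)) \ (J' * E);
        newSse = sum(resid(p + dp).^2);
        if newSse < sse
            p = p + dp; sse = newSse;
            mu = mu * mu_dec;      % step helped: trust the Newton-like direction more
            break
        end
        mu = mu * mu_inc;          % step hurt: move toward small gradient steps
    end
    if mu > mu_max || sse < 1e-20
        break                      % mu exhausted or error essentially zero
    end
end
fprintf('a = %.4f, b = %.4f, final mu = %g\n', p(1), p(2), mu);
```

A step is accepted only if it reduces the error, so the error never increases; mu rising past mu_max is then the signal that no useful step remains.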

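3.5 Performance

Performance is the stopping criterion specified in your program; here it is the Mean Squared Error (MSE). 1.84e03 is the initial value and 0.01 is the stopping threshold, which is the goal in the program:

net.trainParam.goal = 0.01; % performance goal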
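As a small worked example of what is being compared against the goal, the mean squared error can be computed by hand. The targets and outputs below are made-up numbers, and perf and goalReached are hypothetical variable names.

```matlab
t = [1.0 2.0 3.0 4.0];          % made-up targets
y = [1.1 1.9 3.2 3.9];          % made-up network outputs
goal = 0.01;                    % same value as net.trainParam.goal above

perf = mean((t - y).^2);        % mean squared error: (0.01+0.01+0.04+0.01)/4
goalReached = perf <= goal;
fprintf('mse = %.4f, goal reached: %d\n', perf, goalReached);
% prints: mse = 0.0175, goal reached: 0
```

Training would continue in this situation, because 0.0175 is still above the goal of 0.01.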

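3.6 Gradient

The gradient of the performance function. Training stops when its magnitude falls below net.trainParam.min_grad.

All of the properties above can be set.

Another good example: https://zhidao.baidu.com/question/561438493672659524.html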

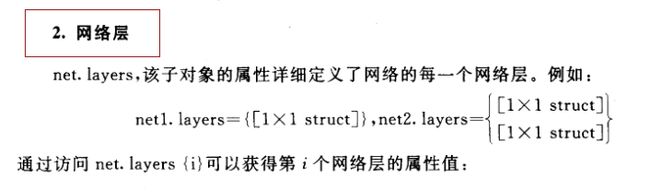

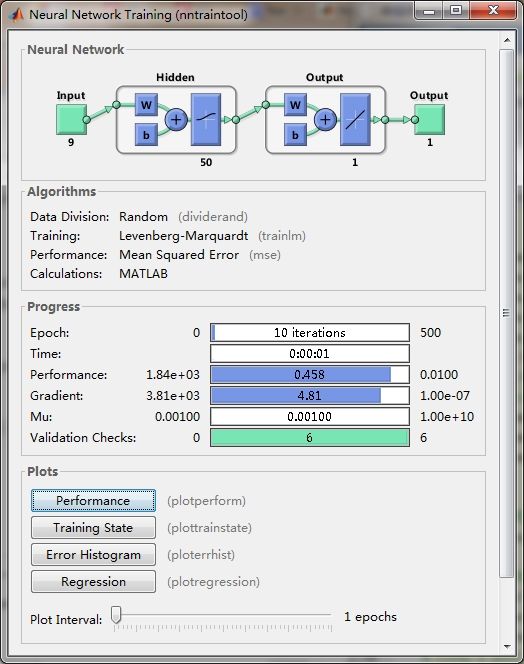

4. Inside the net object:

net =

Neural Network

name: 'Feed-Forward Neural Network'

userdata: (your custom info)

dimensions:

numInputs: 1

numLayers: 2

numOutputs: 1

numInputDelays: 0

numLayerDelays: 0

numFeedbackDelays: 0

numWeightElements: 20

sampleTime: 1

connections:

biasConnect: [1; 1]

inputConnect: [1; 0]

layerConnect: [0 0; 1 0]

outputConnect: [0 1]

subobjects:

input: Equivalent to inputs{1}

output: Equivalent to outputs{2}

inputs: {1x1 cell array of 1 input}

layers: {2x1 cell array of 2 layers}

outputs: {1x2 cell array of 1 output}

biases: {2x1 cell array of 2 biases}

inputWeights: {2x1 cell array of 1 weight}

layerWeights: {2x2 cell array of 1 weight}

functions:

adaptFcn: 'adaptwb'

adaptParam: (none)

derivFcn: 'defaultderiv'

divideFcn: 'dividerand'

divideParam: .trainRatio, .valRatio, .testRatio

divideMode: 'sample'

initFcn: 'initlay'

performFcn: 'mse'

performParam: .regularization, .normalization

plotFcns: {'plotperform', plottrainstate, ploterrhist,

plotregression}

plotParams: {1x4 cell array of 4 params}

trainFcn: 'trainlm'

trainParam: .showWindow, .showCommandLine, .show, .epochs,

.time, .goal, .min_grad, .max_fail, .mu, .mu_dec,

.mu_inc, .mu_max, .lr

weight and bias values:

IW: {2x1 cell} containing 1 input weight matrix

LW: {2x2 cell} containing 1 layer weight matrix

b: {2x1 cell} containing 2 bias vectors

methods:

adapt: Learn while in continuous use

configure: Configure inputs & outputs

gensim: Generate Simulink model

init: Initialize weights & biases

perform: Calculate performance

sim : Evaluate network outputs given inputs

train: Train network with examples

view: View diagram

unconfigure: Unconfigure inputs & outputs
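The fields in this listing can be read directly from code. The following sketch shows how the weight and bias cell arrays are indexed; the data, the 20 hidden neurons and the use of configure to size the network are assumptions made for illustration.

```matlab
x = linspace(-1, 1, 50);        % 1 input, 50 samples (made-up data)
t = x.^2;                       % 1 output

net = feedforwardnet(20);       % 20 hidden neurons
net = configure(net, x, t);     % size the weights to match the data

disp(size(net.IW{1,1}));        % input -> hidden weights: 20 x 1
disp(size(net.LW{2,1}));        % hidden -> output weights: 1 x 20
disp(size(net.b{1}));           % hidden biases: 20 x 1
disp(size(net.b{2}));           % output bias: 1 x 1
disp(net.layers{1}.transferFcn);  % hidden layer transfer function ('tansig' by default)
```

The indices follow the connection matrices in the listing: IW{i,j} is the weight from input j to layer i, and LW{i,j} is the weight from layer j to layer i.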

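A typical program:

% Create the network.

% Xtrain, Ytrain and Xtest hold one sample per row; they are transposed below because the toolbox expects one sample per column.

net = feedforwardnet(50,'trainlm'); % hidden layer with 50 neurons

net.trainParam.epochs = 500; % maximum number of training epochs

net.trainParam.show = 50; % epochs between progress displays

net.trainParam.goal = 0.01; % performance goal

net.trainParam.lr = 0.01; % learning rate

net.layers{1}.transferFcn = 'logsig'; % hidden layer uses 'logsig'

net.layers{2}.transferFcn = 'purelin'; % output layer uses 'purelin'

% Setup Division of Data for Training, Validation, Testing

net.divideParam.trainRatio = 80/100;

net.divideParam.valRatio = 20/100;

net.divideParam.testRatio = 0/100;

% Train the network.

[net,tr] = train(net,Xtrain',Ytrain');

% Apply the trained network to the test inputs; the error is computed from y.

y = net(Xtest');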
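The program above leaves Xtrain, Ytrain and Xtest undefined and stops before the error is computed. A self-contained variant might look like the sketch below; the synthetic data, the 240/60 split and the names Ytest and testMse are assumptions added for illustration, and perform is the toolbox method listed under methods in section 4.

```matlab
% Synthetic data (assumed): one sample per row, as in the program above.
rng(0);                                   % reproducible random numbers
X = rand(300, 2);
Y = sin(2*pi*X(:,1)) + X(:,2).^2;
Xtrain = X(1:240, :);   Ytrain = Y(1:240);
Xtest  = X(241:end, :); Ytest  = Y(241:end);

net = feedforwardnet(50, 'trainlm');
net.trainParam.showWindow = false;        % suppress the training window
net.divideParam.trainRatio = 80/100;
net.divideParam.valRatio   = 20/100;
net.divideParam.testRatio  = 0/100;       % the test set is held out by hand instead
[net, tr] = train(net, Xtrain', Ytrain');

y = net(Xtest');                          % predictions, 1 x 60
testMse = perform(net, Ytest', y);        % mean squared error on held-out data
fprintf('test MSE: %.4g\n', testMse);
```

Keeping the test samples out of train entirely means they influence neither the weights nor the early-stopping decision, which matches the role of the test set described in section 3.1.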

Related links:

http://blog.csdn.net/superdont/article/details/5506164

Parameter overview: http://blog.csdn.net/h2008066215019910120/article/details/16805987

A fairly complete reference: http://blog.sina.com.cn/s/blog_48ee23c80100rmkx.html

On weights and thresholds (biases): http://muchong.com/html/200906/1359847.html