day06_hive和hbase

一. hive-2.3.2

下载:http://mirrors.hust.edu.cn/apache/hive/hive-2.3.2/apache-hive-2.3.2-bin.tar.gz

需要配置mysql 的root用户其他IP的访问权限

conf/hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURLname>

<value>jdbc:mysql://hadoop1:3306/hive?createDatabaseIfNotExist=true&useSSL=falsevalue>

property>

<property>

<name>javax.jdo.option.ConnectionDriverNamename>

<value>com.mysql.jdbc.Drivervalue>

property>

<property>

<name>javax.jdo.option.ConnectionUserNamename>

<value>rootvalue>

property>

<property>

<name>javax.jdo.option.ConnectionPasswordname>

<value>123456value>

property>

<property>

<name>datanucleus.autoCreateSchemaname>

<value>truevalue>

property>

<property>

<name>datanucleus.fixedDatastorename>

<value>truevalue>

property>

<property>

<name>datanucleus.autoCreateTablesname>

<value>Truevalue>

property>

<property>

<name>hive.metastore.schema.verificationname>

<value>falsevalue>

property>

configuration>启动HIVE时 也要把hadoop启动起来

基本使用

创建表

hive> create table t_order(id int, name string, rongliang string, price double)

> row format delimited

> fields terminated by '\t'

> ;文本文件 xxx.data

0000101 iphone6plus 64G 6288

0000102 xiaominote 64G 3288

0000103 iphone5s 64G 2288

0000104 mi3 64G 6611

0000105 xiaomi 128G 628

0000106 huawei 64G 4244

0000107 zhongxing 64G 6288数据既可以从本地导入, 也可以从hdfs中导入, 下面是本地倒入

hive>load data local inpath '/home/hadoop/hivetestdata/xxx.data' into table t_order;查询数据

hive> select * from t_order;

OK

101 iphone6plus 64G 6288.0

102 xiaominote 64G 3288.0

103 iphone5s 64G 2288.0

104 mi3 64G 6611.0

105 xiaomi 128G 628.0

106 huawei 64G 4244.0

107 zhongxing 64G 6288.0

Time taken: 2.169 seconds, Fetched: 7 row(s)

hive> select count(1) from t_order;hive> select count(1) from t_order;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20180401142812_93965d7d-c675-495e-8f68-1e5820dc70dc

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1522238071822_0001, Tracking URL = http://hadoop1:8088/proxy/application_1522238071822_0001/

Kill Command = /data/hadoop-2.7.5/bin/hadoop job -kill job_1522238071822_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2018-04-01 14:28:36,974 Stage-1 map = 0%, reduce = 0%

2018-04-01 14:28:55,665 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.19 sec

2018-04-01 14:29:13,731 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.57 sec

MapReduce Total cumulative CPU time: 8 seconds 570 msec

Ended Job = job_1522238071822_0001

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 8.57 sec HDFS Read: 8041 HDFS Write: 101 SUCCESS

Total MapReduce CPU Time Spent: 8 seconds 570 msec

OK

7

Time taken: 64.647 seconds, Fetched: 1 row(s)

二. hbase-1.2.6

hbase是一个类似Nosql数据库,是一个hdfs文件系统上的数据库,比文件系统更适合随机读写

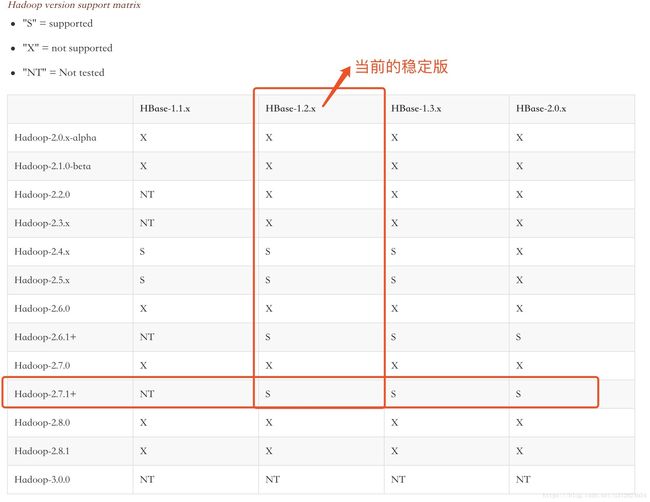

因为是使用hdfs作为基础 所以 hbase和hadoop的版本需要要很好的支持

根据官方给出的测试, 我是使用的Hadoop-2.7.5, 所以可以使用hbase1.2.6

hbase-env.sh

export HBASE_MANAGES_ZK=falsehbase-site.xml

<configuration>

<property>

<name>hbase.rootdirname>

<value>hdfs://ns1/hbasevalue>

property>

<property>

<name>hbase.cluster.distributedname>

<value>truevalue>

property>

<property>

<name>hbase.zookeeper.quorumname>

<value>hadoop1:2181,hadoop2:2181,hadoop3:2181value>

property>

configuration>core-site.xml hdfs-site.xml

把hadoop下的 core-site.xml 和 hdfs-site.xml 拷贝过来

regionservers

hadoop1

hadoop2

hadoop3部署

scp -r hbase-1.2.6/ hadoop2:/data/

scp -r hbase-1.2.6/ hadoop3:/data/机器及角色分配如下:

| hadoop1 | hadoop2 | hadoop3 |

|---|---|---|

| HMaster | HMaster | |

| HRegionServer | HRegionServer | HRegionServer |

hadoop1

# ./start-hbase.sh #启动master和 HRegionServerhadoop2

# ./hbase-daemon.sh start master #单独启动master