Udacity: Predicting Bike-Sharing Usage with a Neural Network (Code Walkthrough)

Tags: machine learning

The code comes from the first project of Udacity's Deep Learning Nanodegree:

https://cn.udacity.com/course/deep-learning-nanodegree-foundation–nd101-cn

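In this project we use nearly two years of bike usage data to train a neural network that predicts future bike-sharing demand.

To set up the environment beforehand, follow the tutorial provided by Udacity: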

https://classroom.udacity.com/nanodegrees/nd101-cn/parts/e7f2a11a-4da3-4deb-8d5c-635907a09460/modules/b710d7cd-83a7-48c5-8b43-63beebd97369/lessons/4c03fd28-20ca-40e6-89cc-e72ae96141c2/project

Now let's start the project.

I. Import the required libraries

%matplotlib inline
%config InlineBackend.figure_format = 'retina'

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

II. Load the bike data provided by Udacity

data_path = 'Bike-Sharing-Dataset/hour.csv'
rides = pd.read_csv(data_path)

III. Inspect the data

rides.head()

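About the data

This dataset contains the number of riders for each hour of each day from January 1, 2011 to December 31, 2012. Riders are split into casual and registered users, and the cnt column is the total of the two. You can see the first few rows above.

The plot below shows the number of riders over roughly the first 10 days of the dataset (some days do not have exactly 24 entries, so it is not exactly 10 days). You can see the hourly rentals here. This data is pretty complicated! Weekends have lower ridership, and there are spikes on weekdays when people commute to and from work. The data above also includes temperature, humidity, and wind speed, all of which affect the number of riders. The model will need to capture all of this.

IV. Plot the data

rides[:24*10].plot(x='dteday', y='cnt')

This plots the total ride count for each hour over roughly the first 10 days.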

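V. Dummy variables

Some of the columns are categorical variables, such as season, weather, and month. To include them in the model we need to create binary dummy variables, which is easy to do with get_dummies() from the Pandas library.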
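As a minimal sketch of what get_dummies() produces, the toy frame below (made-up values, not rows from hour.csv) one-hot encodes a single season column. The `dtype=int` argument is only there so the result shows 0/1 values.

```python
import pandas as pd

# Toy data for illustration only; not taken from hour.csv.
toy = pd.DataFrame({'season': [1, 2, 2, 3]})

# One column per distinct category value, named '<prefix>_<value>'.
dummies = pd.get_dummies(toy['season'], prefix='season', dtype=int)
print(dummies)
```

Each row has exactly one 1, in the column that matches its original category.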

dummy_fields = ['season', 'weathersit', 'mnth', 'hr', 'weekday']
for each in dummy_fields:
    # dtype=float keeps the dummy columns numeric (recent pandas versions default to bool)
    dummies = pd.get_dummies(rides[each], prefix=each, drop_first=False, dtype=float)
    rides = pd.concat([rides, dummies], axis=1)

fields_to_drop = ['instant', 'dteday', 'season', 'weathersit',
                  'weekday', 'atemp', 'mnth', 'workingday', 'hr']
data = rides.drop(fields_to_drop, axis=1)
data.head()

VI. Scale the target variables

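To make training the network easier, we standardize each continuous variable: we shift and scale it so that it has a mean of 0 and a standard deviation of 1.

We save the scaling factors so that we can convert the network's predictions back to the original units.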
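The round trip can be sketched on a toy array (made-up values). pandas' Series.std() uses the sample standard deviation (ddof=1), so the NumPy call below passes ddof=1 to match it.

```python
import numpy as np

# Made-up ride counts for illustration only.
cnt = np.array([16.0, 40.0, 32.0, 13.0, 1.0])

# Standardize: subtract the mean, divide by the sample standard deviation.
mean, std = cnt.mean(), cnt.std(ddof=1)
scaled = (cnt - mean) / std

# Invert the scaling with the stored factors, as is done for predictions later.
restored = scaled * std + mean
print(scaled)
print(restored)
```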

quant_features = ['casual', 'registered', 'cnt', 'temp', 'hum', 'windspeed']
# Store scalings in a dictionary so we can convert back later
scaled_features = {}
for each in quant_features:
    mean, std = data[each].mean(), data[each].std()
    scaled_features[each] = [mean, std]
    data.loc[:, each] = (data[each] - mean)/std

VII. Split the data into training, test, and validation sets

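We hold out roughly the last 21 days of data as the test set, to be used once the network has been trained. We will make predictions on this set and compare them with the actual ride counts.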
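A minimal sketch of the hold-out arithmetic, using a plain integer array as a stand-in for the hourly rows. The length 5000 is an arbitrary assumption for illustration; only the slice sizes matter. Negative slices count from the end, so the most recent hours become the test set. The split is only approximately 21 days because some days have fewer than 24 entries.

```python
import numpy as np

# Stand-in for the hourly rows; 5000 is an arbitrary illustrative length.
rows = np.arange(5000)

test_size = 21 * 24             # about the last 21 days of hourly records

test_rows = rows[-test_size:]   # most recent hours, held out for testing
remaining = rows[:-test_size]   # everything before them

print(len(test_rows), len(remaining))
```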

# Save data for approximately the last 21 days
test_data = data[-21*24:]

# Now remove the test data from the data set
data = data[:-21*24]

# Separate the data into features and targets
target_fields = ['cnt', 'casual', 'registered']
features, targets = data.drop(target_fields, axis=1), data[target_fields]
test_features, test_targets = test_data.drop(target_fields, axis=1), test_data[target_fields]

VIII. Build the neural network

class NeuralNetwork(object):
    def __init__(self, input_nodes, hidden_nodes, output_nodes, learning_rate):
        # Set number of nodes in input, hidden and output layers.
        self.input_nodes = input_nodes
        self.hidden_nodes = hidden_nodes
        self.output_nodes = output_nodes

        # Initialize weights
        self.weights_input_to_hidden = np.random.normal(0.0, self.input_nodes**-0.5,
                                                        (self.input_nodes, self.hidden_nodes))
        self.weights_hidden_to_output = np.random.normal(0.0, self.hidden_nodes**-0.5,
                                                         (self.hidden_nodes, self.output_nodes))
        self.lr = learning_rate

        # Sigmoid activation function, defined with a lambda expression
        self.activation_function = lambda x: 1 / (1 + np.exp(-x))

    def train(self, features, targets):
        ''' Train the network on batch of features and targets.

            Arguments
            ---------
            features: 2D array, each row is one data record, each column is a feature
            targets: 1D array of target values
        '''
        n_records = features.shape[0]
        delta_weights_i_h = np.zeros(self.weights_input_to_hidden.shape)
        delta_weights_h_o = np.zeros(self.weights_hidden_to_output.shape)
        for X, y in zip(features, targets):
            ### Forward pass ###
            hidden_inputs = np.dot(X, self.weights_input_to_hidden)  # signals into hidden layer
            hidden_outputs = self.activation_function(hidden_inputs)  # signals from hidden layer

            final_inputs = np.dot(hidden_outputs, self.weights_hidden_to_output)  # signals into final output layer
            final_outputs = final_inputs  # signals from final output layer (identity activation)

            ### Backward pass ###
            # Output layer error is the difference between desired target and actual output.
            error = y - final_outputs

            # The output activation is the identity, so the error term equals the error.
            # It must be computed before it is used for the hidden layer below.
            output_error_term = error

            # Hidden layer's contribution to the error
            hidden_error = np.dot(self.weights_hidden_to_output, output_error_term)
            hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)

            # Weight step (input to hidden)
            delta_weights_i_h += hidden_error_term * X[:, None]
            # Weight step (hidden to output)
            delta_weights_h_o += output_error_term * hidden_outputs[:, None]

        # Update each weight matrix with its own averaged gradient descent step
        self.weights_hidden_to_output += self.lr * delta_weights_h_o / n_records
        self.weights_input_to_hidden += self.lr * delta_weights_i_h / n_records

    def run(self, features):
        ''' Run a forward pass through the network with input features

            Arguments
            ---------
            features: 1D array of feature values
        '''
        hidden_inputs = np.dot(features, self.weights_input_to_hidden)  # signals into hidden layer
        hidden_outputs = self.activation_function(hidden_inputs)  # signals from hidden layer

        final_inputs = np.dot(hidden_outputs, self.weights_hidden_to_output)  # signals into final output layer
        final_outputs = final_inputs  # signals from final output layer

        return final_outputs
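The backward pass relies on the fact that the sigmoid's derivative is g(z)(1 - g(z)), which is why `hidden_outputs * (1 - hidden_outputs)` appears in `hidden_error_term`. Below is a small numerical check of that identity; `sigmoid` is a standalone helper name introduced here for illustration, not part of the class.

```python
import numpy as np

def sigmoid(x):
    """Standalone copy of the network's activation function (illustrative helper)."""
    return 1 / (1 + np.exp(-x))

z = np.array([-2.0, 0.0, 2.0])
g = sigmoid(z)

# Analytic derivative, in the form used by the backward pass.
analytic = g * (1 - g)

# Central finite difference as an independent check.
eps = 1e-6
numeric = (sigmoid(z + eps) - sigmoid(z - eps)) / (2 * eps)

print(np.allclose(analytic, numeric))
```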

该段需要添加的代码:

1.

self.activation_function = lambda x : 1 / ( 1 + np.exp(-x) )

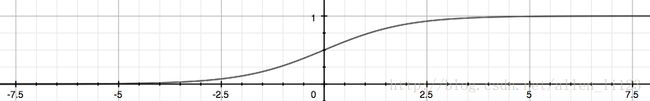

该代码表示了激活函数为S型函数:

该函数的函数图像如下

在预测时输入x变量所得的g(z)即结果为1的概率值

2.用输入层乘以权重矩阵获得隐藏层的数据

然后把隐藏层的数据用激活函数(S型函数)进行转化

hidden_inputs = np.dot(X,weights_input_to_hidden) # signals into hidden layer

hidden_outputs = self.activation_function( hidden_inputs ) # signals from hidden layer

3.隐藏层的数据乘以权重矩阵得到输出层的数据

输出层的数据不需要用激活函数进行转化

final_inputs = np.dot(hidden_outputs,weights_hidden_to_output) # signals into final output layer

final_outputs = final_inputs # signals from final output layer

4.误差为真实值与预测值的差值

反向传播的误差就是真实误差

error = y - final_outputs

output_error_term = error

5.用反向传播计算隐藏层的误差

hidden_error = np.dot( self.weights_hidden_to_output, output_error_term )

hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)

6.将每一项的输出层与隐藏层误差进行累加

delta_weights_i_h += hidden_error_term * X[:,None]

delta_weights_h_o += output_error_term * hidden_outputs[:,None]

7.对权重进行更新

self.weights_hidden_to_output += self.lr * delta_weights_i_h/n_record

self.weights_input_to_hidden += self.lr * delta_weights_h_o/n_record

8.用前向传播对数据进行计算

hidden_inputs = np.dot( features, self.weights_input_to_hidden )

hidden_outputs = self.activation_function( hidden_inputs )

final_inputs = np.dot( hidden_output, self.weights_hidden_to_output )

final_outputs = final_inputs # signals from final output layer
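The steps can be replayed by hand on the single record used in the article's unit tests. In this standalone sketch the inputs, target, starting weights, and learning rate are copied from those tests; the variable names mirror the class, but the code does not use the class itself, and `new_w_h_o` / `new_w_i_h` are names introduced here for illustration.

```python
import numpy as np

# Inputs, target, and starting weights copied from the article's unit tests.
X = np.array([0.5, -0.2, 0.1])
y = np.array([0.4])
w_i_h = np.array([[0.1, -0.2], [0.4, 0.5], [-0.3, 0.2]])
w_h_o = np.array([[0.3], [-0.1]])
lr, n_records = 0.5, 1

# Steps 2-3: forward pass (sigmoid hidden layer, identity output layer).
hidden_outputs = 1 / (1 + np.exp(-np.dot(X, w_i_h)))
final_outputs = np.dot(hidden_outputs, w_h_o)

# Steps 4-5: output error term, then the error propagated back to the hidden layer.
output_error_term = y - final_outputs
hidden_error = np.dot(w_h_o, output_error_term)
hidden_error_term = hidden_error * hidden_outputs * (1 - hidden_outputs)

# Steps 6-7: weight steps for this record, then the gradient descent update.
new_w_h_o = w_h_o + lr * output_error_term * hidden_outputs[:, None] / n_records
new_w_i_h = w_i_h + lr * hidden_error_term * X[:, None] / n_records

print(final_outputs)
print(new_w_h_o)
print(new_w_i_h)
```

The results should agree with the values asserted in test_run and test_train, which is a convenient way to confirm that each weight matrix is paired with the correct delta.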

IX. Compute the mean squared error

def MSE(y, Y):
    return np.mean((y-Y)**2)
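As a quick sanity check of the metric, here it is on a three-element toy example (made-up numbers): the squared errors are 0, 0, and 4, so the mean is 4/3.

```python
import numpy as np

def MSE(y, Y):
    """Mean squared error between predictions y and targets Y."""
    return np.mean((y - Y) ** 2)

predictions = np.array([1.0, 2.0, 3.0])   # made-up predictions
targets = np.array([1.0, 2.0, 5.0])       # made-up targets

print(MSE(predictions, targets))
```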

X. Use the last 60 days as the validation set

# Hold out the last 60 days or so of the remaining data as a validation set
train_features, train_targets = features[:-60*24], targets[:-60*24]
val_features, val_targets = features[-60*24:], targets[-60*24:]

XI. Unit tests

import unittest

inputs = np.array([[0.5, -0.2, 0.1]])
targets = np.array([[0.4]])
test_w_i_h = np.array([[0.1, -0.2],
                       [0.4, 0.5],
                       [-0.3, 0.2]])
test_w_h_o = np.array([[0.3],
                       [-0.1]])

class TestMethods(unittest.TestCase):

    ##########
    # Unit tests for data loading
    ##########

    def test_data_path(self):
        # Test that file path to dataset has been unaltered
        self.assertTrue(data_path.lower() == 'bike-sharing-dataset/hour.csv')

    def test_data_loaded(self):
        # Test that data frame loaded
        self.assertTrue(isinstance(rides, pd.DataFrame))

    ##########
    # Unit tests for network functionality
    ##########

    def test_activation(self):
        network = NeuralNetwork(3, 2, 1, 0.5)
        # Test that the activation function is a sigmoid
        self.assertTrue(np.all(network.activation_function(0.5) == 1/(1+np.exp(-0.5))))

    def test_train(self):
        # Test that weights are updated correctly on training
        network = NeuralNetwork(3, 2, 1, 0.5)
        network.weights_input_to_hidden = test_w_i_h.copy()
        network.weights_hidden_to_output = test_w_h_o.copy()

        network.train(inputs, targets)
        self.assertTrue(np.allclose(network.weights_hidden_to_output,
                                    np.array([[ 0.37275328],
                                              [-0.03172939]])))
        self.assertTrue(np.allclose(network.weights_input_to_hidden,
                                    np.array([[ 0.10562014, -0.20185996],
                                              [0.39775194, 0.50074398],
                                              [-0.29887597, 0.19962801]])))

    def test_run(self):
        # Test correctness of run method
        network = NeuralNetwork(3, 2, 1, 0.5)
        network.weights_input_to_hidden = test_w_i_h.copy()
        network.weights_hidden_to_output = test_w_h_o.copy()

        self.assertTrue(np.allclose(network.run(inputs), 0.09998924))

# Load the tests directly from the TestCase class
suite = unittest.TestLoader().loadTestsFromTestCase(TestMethods)
unittest.TextTestRunner().run(suite)

XII. Train the network and tune the hyperparameters

import sys

### Set the hyperparameters here ###
iterations = 1000
learning_rate = 0.5
hidden_nodes = 10
output_nodes = 1

N_i = train_features.shape[1]
network = NeuralNetwork(N_i, hidden_nodes, output_nodes, learning_rate)

losses = {'train':[], 'validation':[]}
for ii in range(iterations):
    # Go through a random batch of 128 records from the training data set
    batch = np.random.choice(train_features.index, size=128)
    X, y = train_features.iloc[batch].values, train_targets.iloc[batch]['cnt']

    network.train(X, y)

    # Printing out the training progress
    train_loss = MSE(network.run(train_features).T, train_targets['cnt'].values)
    val_loss = MSE(network.run(val_features).T, val_targets['cnt'].values)
    sys.stdout.write("\rProgress: {:2.1f}".format(100 * ii/float(iterations)) \
                     + "% ... Training loss: " + str(train_loss)[:5] \
                     + " ... Validation loss: " + str(val_loss)[:5])
    sys.stdout.flush()

    losses['train'].append(train_loss)
    losses['validation'].append(val_loss)
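The `losses` dictionary is filled in during training but not inspected in the article. One possible use, sketched here with made-up loss values, is to locate the iteration where the validation loss was lowest, which gives a rough signal for whether training ran too long. The names `best_iter` and `overfitting` are introduced for this sketch only.

```python
import numpy as np

# Made-up loss curves for illustration; real values come from the training loop.
losses = {'train': [0.90, 0.50, 0.30, 0.25, 0.22],
          'validation': [0.95, 0.60, 0.45, 0.47, 0.52]}

# Iteration with the lowest validation loss.
best_iter = int(np.argmin(losses['validation']))
print(best_iter, losses['validation'][best_iter])

# Validation loss rising while training loss keeps falling suggests overfitting.
overfitting = losses['validation'][-1] > losses['validation'][best_iter]
print(overfitting)
```

If the validation loss keeps rising after its minimum, fewer iterations or fewer hidden nodes may be worth trying.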

XIII. Check the predictions

fig, ax = plt.subplots(figsize=(8,4))

mean, std = scaled_features['cnt']
predictions = network.run(test_features).T*std + mean
ax.plot(predictions[0], label='Prediction')
ax.plot((test_targets['cnt']*std + mean).values, label='Data')
ax.set_xlim(right=len(predictions))
ax.legend()

dates = pd.to_datetime(rides.loc[test_data.index]['dteday'])
dates = dates.apply(lambda d: d.strftime('%b %d'))
ax.set_xticks(np.arange(len(dates))[12::24])
_ = ax.set_xticklabels(dates[12::24], rotation=45)