不同Hive SQL下转换成MapReduce的情况

@Author : Spinach | GHB

@Link : http://blog.csdn.net/bocai8058

文章目录

- Hive概念

- MapReduce实现基本SQL操作的原理

- join实现

- group by实现

- distinct实现

- 多个distinct字段的实现

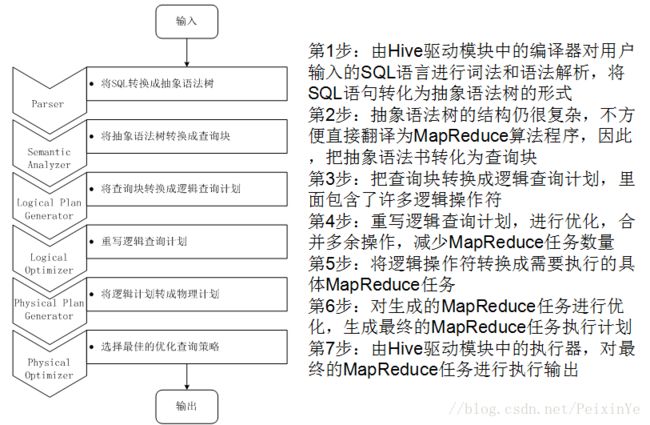

- SQL转换为MapReduce的过程

- 执行顺序解析(部分示例)

- mysql语句执行顺序

- hive sql语句执行顺序

- explain查看执行计划

- 示例1:select...from...where...group by...

- 示例2:select...from...where...group by...

Hive概念

Hive是基于Hadoop的一个数据仓库系统,在各大公司都有广泛的应用。美团数据仓库也是基于Hive搭建,每天执行近万次的Hive ETL计算流程,负责每天数百GB的数据存储和分析。Hive的稳定性和性能对我们的数据分析非常关键。

MapReduce实现基本SQL操作的原理

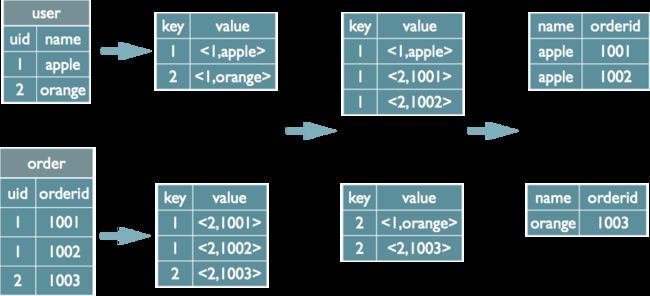

join实现

select u.name, o.orderid from order o join user u on o.uid = u.uid;

在map的输出value中为不同表的数据打上tag标记,在reduce阶段根据tag判断数据来源。MapReduce的过程如下(这里只是说明最基本的Join的实现,还有其他的实现方式)

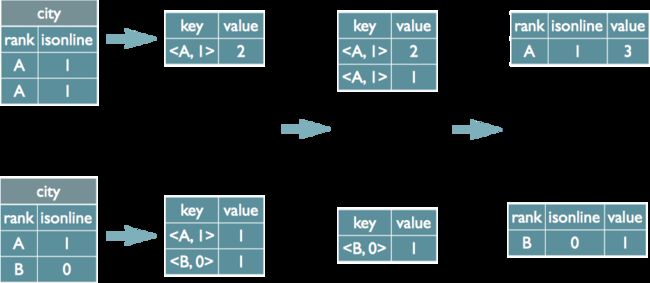

group by实现

select rank, isonline, count(*) from city group by rank, isonline;

将GroupBy的字段组合为map的输出key值,利用MapReduce的排序,在reduce阶段保存LastKey区分不同的key。MapReduce的过程如下(当然这里只是说明Reduce端的非Hash聚合过程)

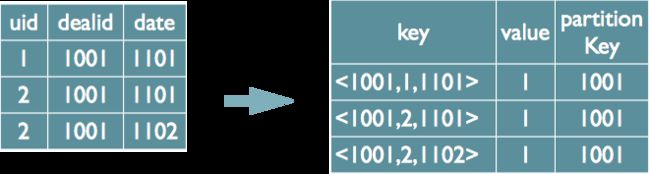

distinct实现

select dealid, count(distinct uid) num from order group by dealid;

当只有一个distinct字段时,如果不考虑Map阶段的Hash GroupBy,只需要将GroupBy字段和Distinct字段组合为map输出key,利用mapreduce的排序,同时将GroupBy字段作为reduce的key,在reduce阶段保存LastKey即可完成去重

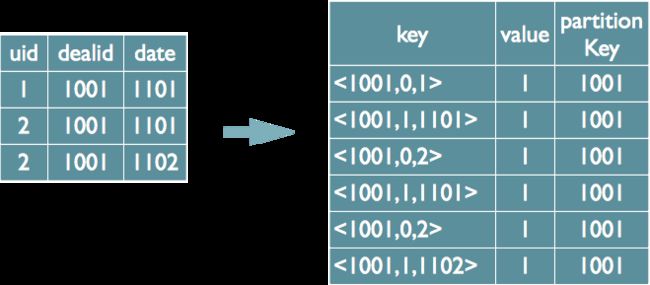

多个distinct字段的实现

select dealid, count(distinct uid), count(distinct date) from order group by dealid;

实现方式一:

如果仍然按照上面一个distinct字段的方法,即下图这种实现方式,无法跟据uid和date分别排序,也就无法通过LastKey去重,仍然需要在reduce阶段在内存中通过Hash去重。

实现方式二:

可以对所有的distinct字段编号,每行数据生成n行数据,那么相同字段就会分别排序,这时只需要在reduce阶段记录LastKey即可去重。

这种实现方式很好的利用了MapReduce的排序,节省了reduce阶段去重的内存消耗,但是缺点是增加了shuffle的数据量。

需要注意的是,在生成reduce value时,除第一个distinct字段所在行需要保留value值,其余distinct数据行value字段均可为空。

SQL转换为MapReduce的过程

说明:

- 当启动MapReduce程序时,Hive本身是不会生成MapReduce算法程序的

- 需要通过一个表示“Job执行计划”的XML文件驱动执行内置的、原生的Mapper和Reducer模块

- Hive通过和JobTracker通信来初始化MapReduce任务,不必直接部署在JobTracker所在的管理节点上执行

- 通常在大型集群上,会有专门的网关机来部署Hive工具。网关机的作用主要是远程操作和管理节点上的JobTracker通信来执行任务

- 数据文件通常存储在HDFS上,HDFS由名称节点管理

执行顺序解析(部分示例)

mysql语句执行顺序

# 代码写的顺序

select ... from... where.... group by... having... order by..

或者

from ... select ...

# 代码的执行顺序

from... where...group by... having.... select ... order by...

hive sql语句执行顺序

# 代码写的顺序

select ... from... where.... group by... having... order by..

或者

from ... select ...

# 代码的执行顺序

from... where.... select...group by... having ... order by...

explain查看执行计划

示例1:select…from…where…group by…

explain

select city,ad_type,device,sum(cnt) as cnt

from tb_pmp_raw_log_basic_analysis

where day = '2016-05-28' and type = 0 and media = 'sohu' and (deal_id = '' or deal_id = '-' or deal_id is NULL)

group by city,ad_type,device

显示执行计划如下:

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: tb_pmp_raw_log_basic_analysis

Statistics: Num rows: 8195357 Data size: 580058024 Basic stats: COMPLETE Column stats: NONE

Filter Operator

predicate: (((deal_id = '') or (deal_id = '-')) or deal_id is null) (type: boolean)

Statistics: Num rows: 8195357 Data size: 580058024 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: city (type: string), ad_type (type: string), device (type: string), cnt (type: bigint)

outputColumnNames: city, ad_type, device, cnt

Statistics: Num rows: 8195357 Data size: 580058024 Basic stats: COMPLETE Column stats: NONE

Group By Operator

aggregations: sum(cnt)

keys: city (type: string), ad_type (type: string), device (type: string)

mode: hash

outputColumnNames: _col0, _col1, _col2, _col3

Statistics: Num rows: 8195357 Data size: 580058024 Basic stats: COMPLETE Column stats: NONE

Reduce Output Operator

key expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string)

sort order: +++

Map-reduce partition columns: _col0 (type: string), _col1 (type: string), _col2 (type: string)

Statistics: Num rows: 8195357 Data size: 580058024 Basic stats: COMPLETE Column stats: NONE

value expressions: _col3 (type: bigint)

Reduce Operator Tree:

Group By Operator

aggregations: sum(VALUE._col0)

keys: KEY._col0 (type: string), KEY._col1 (type: string), KEY._col2 (type: string)

mode: mergepartial

outputColumnNames: _col0, _col1, _col2, _col3

Statistics: Num rows: 4097678 Data size: 290028976 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: _col0 (type: string), _col1 (type: string), _col2 (type: string), _col3 (type: bigint)

outputColumnNames: _col0, _col1, _col2, _col3

Statistics: Num rows: 4097678 Data size: 290028976 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 4097678 Data size: 290028976 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0

Fetch Operator

limit: -1

# 具体介绍如下:

stage1的map阶段

TableScan:from加载表,描述中有行数和大小等

Filter Operator:where过滤条件筛选数据,描述有具体筛选条件和行数、大小等

Select Operator:筛选列,描述中有列名、类型,输出类型、大小等。

Group By Operator:分组,描述了分组后需要计算的函数,keys描述用于分组的列,outputColumnNames为输出的列名,可以看出列默认使用固定的别名_col0,以及其他信息

Reduce Output Operator:map端本地的reduce,进行本地的计算,然后按列映射到对应的reduce

stage1的reduce阶段Reduce Operator Tree

Group By Operator:总体分组,并按函数计算。map计算后的结果在reduce端的合并。描述类似。mode: mergepartial是说合并map的计算结果。map端是hash映射分组

Select Operator:最后过滤列用于输出结果

File Output Operator:输出结果到临时文件中,描述介绍了压缩格式、输出文件格式。

stage0第二阶段没有,这里可以实现limit 100的操作。

示例2:select…from…where…group by…

引用:https://blog.csdn.net/longshenlmj/article/details/51569892 | http://www.cnblogs.com/Dhouse/p/7132476.html | https://blog.csdn.net/PeixinYe/article/details/79587164