02 Big Data Learning: Setting Up Hadoop (hadoop-2.6.1)

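Preface: setting up Hadoop 2.x differs from 1.x, and this post does not go into those differences. It walks through the 2.6.1 setup, focusing mainly on the parameters in the configuration files.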

Required packages: jdk1.7 and hadoop-2.6.1. The cluster environment is the virtual cluster I built myself in the previous post:

1. Download the installation packages: find them on your own.

2. Install the JDK first:

- Extract the archive: tar zxvf jdk-7u67-linux-x64.tar.gz

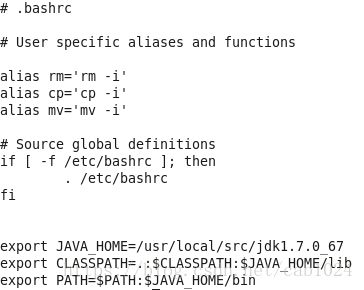

- Configure the JDK environment variables on master, slave1, and slave2 (vim ~/.bashrc), and copy the JDK from master to the slaves:

scp -r /usr/local/src/jdk1.7.0_67 root@slave1:/usr/local/src/jdk1.7.0_67

scp -r /usr/local/src/jdk1.7.0_67 root@slave2:/usr/local/src/jdk1.7.0_67
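The post does not show what actually goes into ~/.bashrc for the JDK. A minimal sketch, assuming the JDK path used in the scp commands above; the helper name `append_java_env` and its skip-if-present check are illustrative and not from the original:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append JDK variables to a shell rc file (default ~/.bashrc).
append_java_env() {
    local rc_file="${1:-$HOME/.bashrc}"
    local java_home="${2:-/usr/local/src/jdk1.7.0_67}"   # path taken from the scp commands
    # Skip if JAVA_HOME is already exported there, so reruns do not duplicate lines.
    if grep -q '^export JAVA_HOME=' "$rc_file" 2>/dev/null; then
        return 0
    fi
    {
        echo "export JAVA_HOME=$java_home"
        echo 'export PATH=$PATH:$JAVA_HOME/bin'
    } >> "$rc_file"
}

# Usage on each node (master, slave1, slave2):
#   append_java_env ~/.bashrc
#   source ~/.bashrc
#   java -version
```

Running `java -version` after sourcing the file is a quick way to confirm that the PATH change took effect on every node.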

3. Install Hadoop:

- Extract the package into the target directory: tar zxvf hadoop-2.6.1.tar.gz

- Edit the Hadoop configuration files on master: cd hadoop-2.6.1/etc/hadoop

vim hadoop-env.sh

export JAVA_HOME=/usr/local/src/jdk1.7.0_67

vim yarn-env.sh

export JAVA_HOME=/usr/local/src/jdk1.7.0_67

vim slaves

slave1

slave2

vim core-site.xml

vim hdfs-site.xml

vim mapred-site.xml

vim yarn-site.xml
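The contents of these four XML files are not reproduced in this post. The sketch below writes one plausible minimal set into a scratch directory so it can be reviewed before anything is copied into etc/hadoop. The property names are standard Hadoop 2.x keys; the values (port 9000, a replication factor of 2, and tmp/dfs paths under the install directory) and the `CONF_DIR` variable are my assumptions, not the settings actually used here:

```shell
#!/usr/bin/env bash
# Sketch only: writes example config files into a scratch directory, not into etc/hadoop.
CONF_DIR="${CONF_DIR:-./hadoop-conf-sketch}"
HADOOP_DIR="/usr/local/src/hadoop-2.6.1"   # install path used throughout this post
mkdir -p "$CONF_DIR"

# core-site.xml: default filesystem URI (port 9000 is an assumption) and base temp dir.
cat > "$CONF_DIR/core-site.xml" <<EOF
<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://master:9000</value></property>
  <property><name>hadoop.tmp.dir</name><value>$HADOOP_DIR/tmp</value></property>
</configuration>
EOF

# hdfs-site.xml: NameNode/DataNode storage dirs; replication 2 assumes two DataNodes.
cat > "$CONF_DIR/hdfs-site.xml" <<EOF
<configuration>
  <property><name>dfs.replication</name><value>2</value></property>
  <property><name>dfs.namenode.name.dir</name><value>file://$HADOOP_DIR/dfs/name</value></property>
  <property><name>dfs.datanode.data.dir</name><value>file://$HADOOP_DIR/dfs/data</value></property>
</configuration>
EOF

# mapred-site.xml: run MapReduce jobs on YARN.
cat > "$CONF_DIR/mapred-site.xml" <<EOF
<configuration>
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
</configuration>
EOF

# yarn-site.xml: ResourceManager host and the shuffle service MapReduce needs.
cat > "$CONF_DIR/yarn-site.xml" <<EOF
<configuration>
  <property><name>yarn.resourcemanager.hostname</name><value>master</value></property>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
</configuration>
EOF
```

As far as I know, the 2.6.x tarball ships mapred-site.xml.template rather than mapred-site.xml, so that file usually has to be created by copying the template before editing it.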

- Create the temporary directory and the HDFS storage directories:

mkdir /usr/local/src/hadoop-2.6.1/tmp

mkdir -p /usr/local/src/hadoop-2.6.1/dfs/name

mkdir -p /usr/local/src/hadoop-2.6.1/dfs/data

- Configure the Hadoop environment variables on master, slave1, and slave2: vim ~/.bashrc

HADOOP_HOME=/usr/local/src/hadoop-2.6.1

export PATH=$PATH:$HADOOP_HOME/bin

- Copy the Hadoop installation from master to the slave nodes:

scp -r /usr/local/src/hadoop-2.6.1 root@slave1:/usr/local/src/hadoop-2.6.1

scp -r /usr/local/src/hadoop-2.6.1 root@slave2:/usr/local/src/hadoop-2.6.1

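Start the cluster

# On master

# Format the NameNode

hadoop namenode -format

Format only once. If the installation went wrong and you need to format again, first delete everything under the dfs directory on all three nodes: rm -rf /home/hadoop/hadoop/dfs (substitute your own dfs path; the directories created above live under /usr/local/src/hadoop-2.6.1/dfs).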

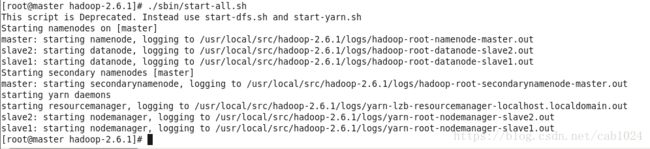

Start the Hadoop cluster (run from /usr/local/src/hadoop-2.6.1): ./sbin/start-all.sh

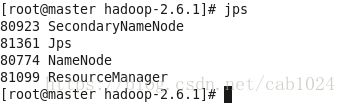

Check the process status on master: jps

Check the process status on slave1 and slave2: jps
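With the slaves file used here (slave1 and slave2 only), a healthy cluster typically shows NameNode, SecondaryNameNode, and ResourceManager on master, and DataNode and NodeManager on each slave. A small sketch for checking that from the jps output; the function name `missing_daemons` is hypothetical, and the expected lists assume the default daemon placement:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each expected daemon that does not appear in jps output.
# Usage: missing_daemons "<jps output>" Daemon1 Daemon2 ...
missing_daemons() {
    local jps_output="$1"
    shift
    local daemon
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; anchor the match so that
        # "NameNode" does not accidentally match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" | grep -Eq "^[0-9]+ ${daemon}\$"; then
            echo "$daemon"
        fi
    done
}

# On master:
#   missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager
# On slave1 / slave2:
#   missing_daemons "$(jps)" DataNode NodeManager
# Empty output means every expected daemon is running.
```

If a daemon is reported missing, the logs directory under the Hadoop install path on that node is usually the first place to look.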

Stop the cluster: ./sbin/stop-all.sh

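Summary: be meticulous throughout this process. Configure the master node first, then copy everything to the slave nodes. The problem I ran into was SSH mutual trust: because it was broken, some nodes would not start. After I set up the trust again, the "connection timed out" and "connection refused" errors went away. Once the cluster is running, check that you can put a file into HDFS: hadoop fs -put filename /filedir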
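Since broken SSH trust was the main problem here, it can help to verify passwordless login before starting the daemons. A minimal sketch, assuming the root user and the host names slave1 and slave2 from this post; `check_ssh_trust` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report hosts that cannot be reached over SSH without a password.
# BatchMode=yes makes ssh fail instead of prompting; ConnectTimeout bounds the wait.
check_ssh_trust() {
    local user="$1"
    shift
    local host failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true </dev/null; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}

# Usage from master:
#   check_ssh_trust root slave1 slave2
# Then, once the cluster is up, a put/ls round trip confirms HDFS accepts writes:
#   hadoop fs -mkdir -p /filedir
#   hadoop fs -put filename /filedir
#   hadoop fs -ls /filedir
```

The function returns a non-zero status if any host fails, so it can gate a start script.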

That is the whole process of setting up the Hadoop cluster!