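A Summary of the Bagging and Boosting Ensemble Learning Algorithms

I. Overview of Ensemble Learning

- 1.Ensemble methods, also called meta-algorithms, are a way of combining other algorithms. This post focuses mainly on the AdaBoost meta-algorithm. Several classifiers are combined, and the combination is what we call an ensemble method or meta-algorithm. Ensembles can take many forms, for example:

- an ensemble of different algorithms

- an ensemble of the same algorithm under different settings

- an ensemble in which different parts of the dataset are assigned to different classifiers

- 2.Pros and cons of AdaBoost

- Pros: low generalization error, easy to code, works with most classifiers, no parameters to tune

- Cons: sensitive to outliers

- Works with: numeric and nominal data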

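II. Classifiers Built by Resampling the Dataset

- 1.Bagging (bootstrap aggregating)

- Main idea:

- (1). Draw new training sets from the original dataset. Each draw samples n examples from the original dataset with replacement, where n is the size of the original dataset, so some examples may be drawn several times and others not at all. Repeating this k times gives k new datasets that are independent of one another, each the same size as the original.

- (2). Train one model on each new training set, so the k training sets yield k models.

- (3). For classification, the k models from (2) vote on the class. For regression, the final result is the mean of the k models' outputs. In both cases every model carries the same importance.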
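The three steps above can be sketched in a few lines of NumPy. This is only an illustration for the classification case: the helper names (`bootstrap_sample`, `fit_stump`, `bagging_fit`, `bagging_predict`) and the threshold-rule base learner are assumptions made for this sketch, not part of the original post.

```python
import numpy as np


def bootstrap_sample(X, y, rng):
    """Step (1): draw n rows with replacement from an n-row dataset."""
    n = X.shape[0]
    idx = rng.integers(0, n, size=n)
    return X[idx], y[idx]


def fit_stump(X, y):
    """Hypothetical base learner: the best single-feature threshold rule."""
    best = None
    for dim in range(X.shape[1]):
        for thresh in np.unique(X[:, dim]):
            for sign in (1.0, -1.0):
                pred = np.where(X[:, dim] <= thresh, -sign, sign)
                err = np.mean(pred != y)
                if best is None or err < best[0]:
                    best = (err, dim, thresh, sign)
    return best[1:]


def predict_stump(stump, X):
    dim, thresh, sign = stump
    return np.where(X[:, dim] <= thresh, -sign, sign)


def bagging_fit(X, y, k, seed=0):
    """Step (2): train one model per bootstrap sample."""
    rng = np.random.default_rng(seed)
    return [fit_stump(*bootstrap_sample(X, y, rng)) for _ in range(k)]


def bagging_predict(models, X):
    """Step (3): unweighted majority vote over labels in {-1, +1}."""
    votes = np.sum([predict_stump(m, X) for m in models], axis=0)
    return np.where(votes >= 0, 1.0, -1.0)
```

Using an odd k avoids tied votes; for regression the last step would average the model outputs instead of voting.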


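- 2.Boosting

- In both bagging and boosting, the classifiers being combined are all of the same type. In boosting, however, the classifiers are trained sequentially, and each new classifier is trained in light of how the classifiers before it performed. Boosting obtains each new classifier by concentrating on the data that the existing classifiers got wrong. There are many versions of boosting; the one described below is the most popular, AdaBoost.

- Main idea:

- (1). In every round each training sample is given a weight, and the weight distribution for a round depends on the classification results of the previous round.

- (2). The base classifiers are combined sequentially as a weighted linear sum.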

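III. Bagging versus Boosting

- 1.Sample selection:

- Bagging: each new training set is sampled from the original training set with replacement, and the new training sets are independent of one another.

- Boosting: the training set stays the same in every round. Only the weight each sample carries in the classifier changes, and the weights are adjusted according to the previous round's classification results.

- 2.Sample weights:

- Bagging: samples are drawn uniformly, so every sample has the same weight.

- Boosting: sample weights are adjusted continually according to the errors made; misclassified samples receive larger weights.

- 3.Prediction function:

- Bagging: all prediction functions have equal weight.

- Boosting: each weak classifier has its own weight, and classifiers with a smaller classification error get a larger weight.

- 4.Parallel computation:

- Bagging: the prediction functions can be built in parallel.

- Boosting: the prediction functions can only be built in sequence, because each model needs the results of the one before it.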
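The difference in how the two families combine predictions (point 3 above) can be seen on a tiny made-up example. The prediction matrix and the alpha values below are illustrative numbers chosen for this sketch, not results from the post.

```python
import numpy as np

# Rows: predictions of three hypothetical classifiers on four samples (+1 / -1).
preds = np.array([
    [1.0, 1.0, -1.0, -1.0],
    [1.0, -1.0, -1.0, 1.0],
    [-1.0, -1.0, 1.0, 1.0],
])

# Bagging-style combination: every classifier counts equally.
equal_vote = np.sign(preds.sum(axis=0))

# Boosting-style combination: classifier t is scaled by its weight alpha_t.
alphas = np.array([1.5, 0.4, 0.3])  # illustrative values only
weighted_vote = np.sign(alphas @ preds)

print(equal_vote)     # [ 1. -1. -1.  1.]
print(weighted_vote)  # [ 1.  1. -1. -1.]
```

With equal votes the two weaker classifiers can outvote the first one; with weights, the classifier that was given the largest alpha dominates.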

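IV. Common Applications of Ensemble Learning

- 1.Common algorithms

- Bagging + decision trees = random forest

- AdaBoost + decision trees = boosting tree

- Gradient Boosting + decision trees = GBDT

- 2.Improving classifier performance by focusing on errors (how AdaBoost works)

- 2.1 Introduction to AdaBoost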

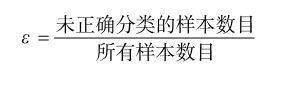

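The idea behind this ensemble method is to build a strong classifier out of weak classifiers trained over multiple rounds. AdaBoost is short for adaptive boosting, and it runs as follows. First, every sample in the training set is given a weight; together these weights form a vector D. Initially all the weights are equal. Next, a weak classifier is trained on the training data and its error rate is computed. A weak classifier is then trained again on the same training data, but for this second round the sample weights are readjusted: samples the first classifier got right have their weights decreased, and samples it got wrong have their weights increased. To produce one final answer from all the weak classifiers, AdaBoost also assigns each weak classifier a weight alpha, which is computed from that classifier's error rate.

- 2.2 The error rate ε is defined as follows: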
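A weighted form that matches the line `weightedError = D.T * errArr` in the code later in this post can be written as below. The symbols $h$, $x_i$, $y_i$ and $m$ are notation introduced here for illustration and do not come from the original.

$$
\varepsilon = \sum_{i=1}^{m} D_i \,\mathbb{1}\left[h(x_i) \neq y_i\right], \qquad \sum_{i=1}^{m} D_i = 1
$$

With uniform weights $D_i = 1/m$ this reduces to the number of misclassified samples divided by the total number of samples.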

- 2.3 Formula for alpha
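The training code later in this post computes alpha as `0.5 * np.log((1.0 - error) / max(error, 1e-16))`, which corresponds to:

$$
\alpha = \frac{1}{2}\ln\left(\frac{1-\varepsilon}{\varepsilon}\right)
$$

So alpha is positive whenever ε is below 0.5 and grows as ε shrinks. The `max(error, 1e-16)` in the code only guards against division by zero when ε = 0.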

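- 2.4 The AdaBoost algorithm flow is as follows

- 2.5 The flow is explained as follows:

- First, a weight is initialized for every sample in the training set. At this point all samples have the same weight, and these weights make up the weight vector D. Then the first weak classifier is trained, and its error rate ε is computed from its classification results. Next, alpha is computed. Once alpha is known, the weight vector D can be updated so that samples the first classifier misclassified gain weight and samples it classified correctly lose weight. D is updated as follows: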
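Read off the update lines in the training code later in this post (`expon = -alpha * label * classEst`, `D = D * exp(expon)`, `D = D / D.sum()`), the update can be written as below. The round index $t$ and the symbols $y_i$ and $h_t(x_i)$ are notation assumed here.

$$
D_i^{(t+1)} = \frac{D_i^{(t)}\, e^{-\alpha_t\, y_i\, h_t(x_i)}}{\sum_{j=1}^{m} D_j^{(t)}\, e^{-\alpha_t\, y_j\, h_t(x_j)}}
$$

Because $y_i h_t(x_i)$ is $+1$ for a correctly classified sample and $-1$ for a misclassified one, correct samples are scaled by $e^{-\alpha_t}$ and misclassified samples by $e^{\alpha_t}$ before normalization.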

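- Once D has been computed, AdaBoost starts the next iteration. It keeps repeating the train-and-reweight cycle until the training error reaches 0 or the number of weak classifiers reaches the limit specified by the user.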
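One round of this cycle can be worked through by hand. The sketch below uses the labels of the five-point toy dataset from this post and the first-round predictions printed in its log (`classEst: [[-1. 1. -1. -1. 1.]]`); the variable names are chosen for this illustration.

```python
import numpy as np

labels = np.array([1.0, 1.0, -1.0, -1.0, 1.0])
preds = np.array([-1.0, 1.0, -1.0, -1.0, 1.0])  # first sample is misclassified
D = np.full(5, 1 / 5)                           # equal initial weights

eps = np.sum(D[preds != labels])                # weighted error rate
alpha = 0.5 * np.log((1 - eps) / eps)           # weight of this weak classifier
D_new = D * np.exp(-alpha * labels * preds)     # raise wrong, lower right
D_new /= D_new.sum()                            # renormalize to sum to 1

print(eps)     # 0.2
print(alpha)   # about 0.6931
print(D_new)   # about [0.5, 0.125, 0.125, 0.125, 0.125]
```

After one round the single misclassified sample carries half of the total weight, which is what pushes the next weak classifier to get it right.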


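- 3.1 Plotting the dataset (see the code below) shows that it is impossible to pick a single value on one coordinate axis, that is, a straight line parallel to an axis, that separates all the blue dots from the orange dots. This is a well-known kind of problem that a single decision stump cannot handle. By combining several decision stumps, however, we can build a classifier that classifies this dataset perfectly.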

```python
# ---------------- Dataset visualization ----------------
import numpy as np
import matplotlib.pyplot as plt


def loadSimData():
    """Create the toy dataset used for the decision-stump examples."""
    dataMat = np.matrix([[1., 2.1],
                         [1.5, 1.6],
                         [1.3, 1.],
                         [1., 1.],
                         [2., 1.]])
    classLabels = [1.0, 1.0, -1.0, -1.0, 1.0]
    return dataMat, classLabels


def showDataSet(dataMat, labelMat):
    """Scatter-plot the positive and negative samples."""
    dataArr = np.asarray(dataMat)  # plain 2-D array, so each row is 1-D
    data_plus = []   # positive samples
    data_minus = []  # negative samples
    for i in range(len(dataArr)):
        if labelMat[i] > 0:
            data_plus.append(dataArr[i])
        else:
            data_minus.append(dataArr[i])
    data_plus_np = np.array(data_plus)
    data_minus_np = np.array(data_minus)
    plt.scatter(data_plus_np[:, 0], data_plus_np[:, 1])
    plt.scatter(data_minus_np[:, 0], data_minus_np[:, 1])
    plt.title("Dataset Visualize")
    plt.xlabel("x1")
    plt.ylabel("x2")
    plt.show()


if __name__ == '__main__':
    data_Arr, classLabels = loadSimData()
    showDataSet(data_Arr, classLabels)
```

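- 3.2 Points above the blue horizontal line belong to one class and points below it to the other. Clearly one blue point is misclassified here, so the classification error rate is 1/5 = 0.2. The point where this horizontal line crosses the y-axis is the threshold we set. By varying the threshold we look for the value that minimizes the decision stump's classification error. Vertical lines work the same way. Once the best threshold has been found, we have the best decision stump.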

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Date : 2019-05-12 21:31:41
# @Author : cdl
# @Link : https://github.com/cdlwhm1217096231/python3_spider

import numpy as np
import matplotlib.pyplot as plt


# Dataset visualization
def loadSimpleData():
    dataMat = np.matrix([[1., 2.1],
                         [1.5, 1.6],
                         [1.3, 1.],
                         [1., 1.],
                         [2., 1.]])
    classLabels = [1.0, 1.0, -1.0, -1.0, 1.0]
    return dataMat, classLabels


def showDataSet(dataMat, labelMat):
    dataArr = np.asarray(dataMat)  # plain 2-D array, so each row is 1-D
    data_plus = []
    data_minus = []
    for i in range(len(dataArr)):
        if labelMat[i] > 0:
            data_plus.append(dataArr[i])
        else:
            data_minus.append(dataArr[i])
    data_plus_np = np.array(data_plus)
    data_minus_np = np.array(data_minus)
    plt.scatter(data_plus_np[:, 0], data_plus_np[:, 1])
    plt.scatter(data_minus_np[:, 0], data_minus_np[:, 1])
    plt.title("dataset visualize")
    plt.xlabel("x")
    plt.ylabel("y")
    plt.show()


# Classification function of a single decision stump
def stumpClassify(dataMat, dimen, threshval, threshIneq):
    """
    dataMat: data matrix
    dimen: column index, i.e. which feature to split on
    threshval: threshold
    threshIneq: "lt" or "gt", the side of the threshold that is labeled -1
    Returns retArray: the predicted labels
    """
    retArray = np.ones((np.shape(dataMat)[0], 1))  # start with every label set to +1
    if threshIneq == "lt":
        retArray[dataMat[:, dimen] <= threshval] = -1.0  # at or below the threshold -> -1
    else:
        retArray[dataMat[:, dimen] > threshval] = -1.0   # above the threshold -> -1
    return retArray


# Find the best decision stump on the dataset. A decision stump looks at a single
# feature and classifies on that feature alone, so we only need the threshold with
# the lowest error. For example, splitting on the first column with threshold 1.3
# and labeling values > 1.3 as -1 and values < 1.3 as +1 gives a binary classifier.
def buildStump(dataMat, classLabels, D):
    """
    dataMat: data matrix
    classLabels: class labels
    D: sample weights
    Returns bestStump: info on the best stump; minError: its weighted error;
    bestClasEst: its predictions
    """
    dataMat = np.matrix(dataMat)
    labelMat = np.matrix(classLabels).T
    m, n = np.shape(dataMat)
    numSteps = 10.0
    bestStump = {}                             # info on the best stump
    bestClasEst = np.matrix(np.zeros((m, 1)))  # predictions of the best stump
    minError = float("inf")
    for i in range(n):  # loop over all features
        rangeMin = dataMat[:, i].min()
        rangeMax = dataMat[:, i].max()
        stepSize = (rangeMax - rangeMin) / numSteps  # step between thresholds
        for j in range(-1, int(numSteps) + 1):
            for inequal in ["lt", "gt"]:
                threshval = rangeMin + float(j) * stepSize  # candidate threshold
                predictVals = stumpClassify(dataMat, i, threshval, inequal)
                errArr = np.matrix(np.ones((m, 1)))  # 1 marks an error
                errArr[predictVals == labelMat] = 0  # correct predictions -> 0
                # The stump is scored by the D-weighted error, not by plain accuracy
                weightedError = float((D.T * errArr)[0, 0])
                print("split: dim %d, thresh %.2f, thresh ineqal: %s, the weighted error is %.3f" % (
                    i, threshval, inequal, weightedError))
                if weightedError < minError:
                    minError = weightedError
                    bestClasEst = predictVals.copy()
                    bestStump["dim"] = i
                    bestStump["thresh"] = threshval
                    bestStump["ineq"] = inequal
    return bestStump, minError, bestClasEst
```

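- 3.3 By looping over the candidate thresholds and computing the classification error for each, we find the split with the smallest error, which is the best decision stump we are looking for. Here `lt` means less than: samples at or below the threshold are assigned -1. `gt` means greater than: samples above the threshold are assigned -1. The search shows that the best decision stump has a minimum classification error of 0.2. In other words, for this dataset no single decision stump can do better than 0.2. This is our trained weak classifier. Next, we use AdaBoost to boost the classifier and bring the classification error down to 0. Let's see how AdaBoost does it.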
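The 0.2 floor can be checked by brute force. The sketch below is independent of the post's `buildStump`: it uses plain arrays, unweighted error, and thresholds placed at every observed feature value (plus one below the minimum), which covers every distinct split of this dataset. The function names are hypothetical.

```python
import numpy as np

X = np.array([[1.0, 2.1], [1.5, 1.6], [1.3, 1.0], [1.0, 1.0], [2.0, 1.0]])
y = np.array([1.0, 1.0, -1.0, -1.0, 1.0])


def stump_predict(X, dim, thresh, ineq):
    """'lt': values <= thresh get -1; 'gt': values > thresh get -1."""
    mask = X[:, dim] <= thresh if ineq == "lt" else X[:, dim] > thresh
    return np.where(mask, -1.0, 1.0)


def best_unweighted_error(X, y):
    """Smallest fraction of misclassified samples over all single-feature stumps."""
    best = 1.0
    for dim in range(X.shape[1]):
        values = np.unique(X[:, dim])
        thresholds = np.concatenate(([values[0] - 1.0], values))
        for thresh in thresholds:
            for ineq in ("lt", "gt"):
                err = np.mean(stump_predict(X, dim, thresh, ineq) != y)
                best = min(best, err)
    return best


print(best_unweighted_error(X, y))  # 0.2
```

A perfect stump is impossible here because each feature has a value shared by a positive and a negative sample (x1 = 1.0 and x2 = 1.0), so at least one of the five points is always misclassified.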

```python
# Use AdaBoost to boost the weak classifier
def adbBoostTrainDS(dataMat, classLabels, numIt=40):
    """
    dataMat: data matrix
    classLabels: class labels
    numIt: maximum number of iterations
    Returns weakClassArr: the trained weak classifiers;
    aggClassEst: the accumulated class estimate
    """
    weakClassArr = []
    m = np.shape(dataMat)[0]
    D = np.matrix(np.ones((m, 1)) / m)  # initial sample weights, all equal
    aggClassEst = np.matrix(np.zeros((m, 1)))
    labelMat = np.matrix(classLabels).T
    for i in range(numIt):
        # Build one decision stump under the current weights
        bestStump, error, classEst = buildStump(dataMat, classLabels, D)
        # Weight of this weak classifier; max() keeps the denominator away from 0
        alpha = float(0.5 * np.log((1.0 - error) / max(error, 1e-16)))
        bestStump["alpha"] = alpha      # store alpha with the stump
        weakClassArr.append(bestStump)  # store the stump
        print("classEst: ", classEst.T)
        expon = np.multiply(-1 * alpha * labelMat, classEst)  # exponent of e
        D = np.multiply(D, np.exp(expon))
        D = D / D.sum()
        # Accumulate the weighted votes of all stumps trained so far
        aggClassEst += alpha * classEst
        print("aggClassEst: ", aggClassEst.T)
        # Errors of the ensemble built so far
        aggErrors = np.multiply(np.sign(aggClassEst) != labelMat, np.ones((m, 1)))
        errorRate = aggErrors.sum() / m
        print("total error: ", errorRate)
        if errorRate == 0.0:  # stop as soon as the training error reaches 0
            break
    return weakClassArr, aggClassEst
```

- 3.4 Using AdaBoost to boost classifier performance

```python
# AdaBoost classification function
def adaClassify(dataToClass, classifier):
    """
    dataToClass: samples to classify
    classifier: the trained strong classifier (list of weak classifiers)
    """
    dataMat = np.matrix(dataToClass)
    m = np.shape(dataMat)[0]
    aggClassEst = np.matrix(np.zeros((m, 1)))
    for i in range(len(classifier)):  # apply every weak classifier in turn
        classEst = stumpClassify(
            dataMat, classifier[i]["dim"], classifier[i]["thresh"], classifier[i]["ineq"])
        aggClassEst += classifier[i]["alpha"] * classEst
        print(aggClassEst)
    return np.sign(aggClassEst)
```

- 3.5 Putting it all together, the AdaBoost example is run as follows:


```python
if __name__ == "__main__":
    dataMat, classLabels = loadSimpleData()
    showDataSet(dataMat, classLabels)
    weakClassArr, aggClassEst = adbBoostTrainDS(dataMat, classLabels)
    print(adaClassify([[0, 0], [5, 5]], weakClassArr))
```

- The output is as follows. Each round prints one `split:` line for every candidate stump (12 thresholds × 2 directions × 2 features = 48 lines); only the winning split of each round is kept here, and `...` marks the omitted search lines.

```text
...
split: dim 0, thresh 1.30, thresh ineqal: lt, the weighted error is 0.200
...
classEst:  [[-1.  1. -1. -1.  1.]]
aggClassEst:  [[-0.69314718  0.69314718 -0.69314718 -0.69314718  0.69314718]]
total error:  0.2
...
split: dim 1, thresh 1.00, thresh ineqal: lt, the weighted error is 0.125
...
classEst:  [[ 1.  1. -1. -1. -1.]]
aggClassEst:  [[ 0.27980789  1.66610226 -1.66610226 -1.66610226 -0.27980789]]
total error:  0.2
split: dim 0, thresh 0.90, thresh ineqal: lt, the weighted error is 0.143
...
classEst:  [[1. 1. 1. 1. 1.]]
aggClassEst:  [[ 1.17568763  2.56198199 -0.77022252 -0.77022252  0.61607184]]
total error:  0.0
[[-0.69314718]
 [ 0.69314718]]
[[-1.66610226]
 [ 1.66610226]]
[[-2.56198199]
 [ 2.56198199]]
[[-1.]
 [ 1.]]
```
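As a sanity check, the numbers printed by `adaClassify` can be reproduced from the three per-round weighted errors alone. The sketch below assumes the third error, printed as 0.143, is exactly 1/7; that value is inferred from the weight update rule and is not printed in the log.

```python
import numpy as np

# Weighted error of the stump picked in each round, taken from the log.
# 0.143 is shown to three decimals; 1/7 is assumed to be the exact value.
errors = [0.2, 0.125, 1 / 7]

# alpha = 0.5 * ln((1 - error) / error), as in the training code
alphas = [0.5 * np.log((1 - e) / e) for e in errors]

# Running sum of alphas = magnitude of the aggregate vote after each stump
running = np.cumsum(alphas)
print(running)
```

The running sums come out at about 0.6931, 1.6661 and 2.5620, matching the magnitudes printed for the test points `[0, 0]` and `[5, 5]`. All three stumps agree on those two points, so each alpha is added with the same sign.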
weighted error is 0.143 split: dim 1, thresh 1.00, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.00, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.11, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.11, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.22, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.22, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.33, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.33, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.44, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.44, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.55, thresh ineqal: lt, the weighted error is 0.500 split: dim 1, thresh 1.55, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.66, thresh ineqal: lt, the weighted error is 0.571 split: dim 1, thresh 1.66, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.77, thresh ineqal: lt, the weighted error is 0.571 split: dim 1, thresh 1.77, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.88, thresh ineqal: lt, the weighted error is 0.571 split: dim 1, thresh 1.88, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 1.99, thresh ineqal: lt, the weighted error is 0.571 split: dim 1, thresh 1.99, thresh ineqal: gt, the weighted error is 0.143 split: dim 1, thresh 2.10, thresh ineqal: lt, the weighted error is 0.857 split: dim 1, thresh 2.10, thresh ineqal: gt, the weighted error is 0.143 classEst: [[1. 1. 1. 1. 1.]] aggClassEst: [[ 1.17568763 2.56198199 -0.77022252 -0.77022252 0.61607184]] total error: 0.0 [[-0.69314718] [ 0.69314718]] [[-1.66610226] [ 1.66610226]] [[-2.56198199] [ 2.56198199]] [[-1.] [ 1.]] [Finished in 2.5s]