What's New in HAProxy 1.8

The monumental stable release of HAProxy 1.8 is coming! The HAProxy 1.8 release candidate 1 (RC1) has been published by the R&D team here at HAProxy Technologies, and in this blog post we are going to take you through some of the release highlights, new features, and configuration examples to get you up to speed.

For HAProxy 1.8, our development team was primarily focused on two areas:

- Performance and Application Acceleration: HAProxy has always been known for its reliability and performance, but in HAProxy 1.8 we’ve managed to make further improvements and include some features to speed up your applications (like HTTP/2 support).

- Cloud and Microservices: more settings can now be changed during runtime, and we now support a couple of different ways to do so (Runtime API, DNS).

Performance and Application Acceleration

HTTP/2 Protocol Support

The HTTP/2 protocol has been seeing fast adoption, and HAProxy 1.8 now supports HTTP/2 on the client side (in the frontend sections) and can act as a gateway between HTTP/2 clients and your HTTP/1.1 and HTTP/1.0 applications.

To enable support for HTTP/2, the bind line in the frontend section must be configured as an SSL endpoint and alpn must announce h2:

    frontend f_myapp
        bind :443 ssl crt /path/to/cert.crt alpn h2,http/1.1
        mode http

This is all you need to enable HTTP/2 support on a particular frontend, but a complete and more detailed blog post related to the HTTP/2 support is also coming out soon, so stay tuned!

Multithreading

Since version 1.4, HAProxy has supported the multi process mode. This allowed users to get more performance out of a single physical machine at the cost of a few acceptable drawbacks related to each process having its own memory area, such as:

- stick-tables were per-process and data couldn’t be shared between multiple processes

- health checks were performed per process

- maxconn values (global, frontend, server) were counted per process

Sometimes these limitations got in our users’ way, and as a workaround we used to configure a bunch of “client side processes” pointing to a single “server side process” on the same machine. It was a bit hackish, but it worked very well with the Runtime API and the proxy protocol.

In HAProxy 1.8 we’ve added support for an additional mode — using threads instead of processes, which has an advantage in that it solves all of the limitations listed above.

The implementation and challenges involved here were very interesting (e.g. concurrent access to data in memory), and a complete blog post on this subject will be published in the near future.
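As a minimal sketch of what enabling the threaded mode could look like: the `nbthread` directive in the global section sets the number of threads. This assumes a HAProxy 1.8 binary built with thread support, and the value 4 is purely illustrative.

```haproxy
global
    # Illustrative value; assumes HAProxy was compiled with thread support
    nbthread 4
```

Because all threads live in a single process, stick-tables, health checks, and maxconn accounting are shared rather than duplicated per process.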

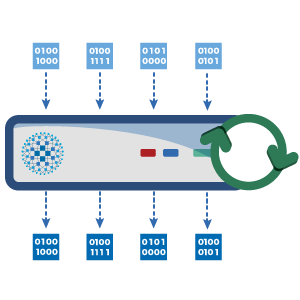

Hitless Reloads

This was definitely one of the more interesting problems that the HAProxy Technologies R&D team had a chance to identify and fix! Initially, we wanted to make absolutely sure that configuration reloads or version upgrades of the HAProxy processes did not break any client connections in the process.

We believed that this required some improvements in HAProxy, which were yet to be determined. But after a thorough investigation, it turned out that the issue was unrelated to HAProxy itself. The issue was present in the kernel and it was affecting HAProxy as well as all other software that was regularly pushed to the limits as HAProxy was.

We published a blog post detailing the complete problem, titled “Truly Seamless Reloads with HAProxy – No More Hacks!”. It contains a description of what improvements were done in HAProxy to solve the issue and what effective workarounds can be applied for other software.

HTTP Small Object Caching

HTTP response caching is a very handy feature when it comes to web application acceleration. The main use for it is offloading some percentage of static content delivery from the application servers themselves, and letting them more easily perform their primary duty — delivering the application.

HAProxy is staying true to its principle of not accessing the disks during runtime and so all objects are cached in memory. The maximum object size is as big as the value of the global parameter “tune.bufsize”, which defaults to 16KB.

This is a general-purpose caching mechanism that makes HAProxy usable as a small object accelerator in front of web applications or other layers like Varnish. The cool thing here is that you can control how large the objects to cache and accelerate delivery should be.
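A minimal sketch of a cache configuration follows. The cache name `mycache`, the backend name, the server address, and the size and age values are all hypothetical; `total-max-size` is expressed in megabytes and `max-age` in seconds.

```haproxy
# Hypothetical cache named "mycache"
cache mycache
    total-max-size 4    # total memory for the cache, in megabytes
    max-age 240         # maximum lifetime of a cached object, in seconds

backend be_myapp
    mode http
    # Serve from the cache when possible, store eligible responses otherwise
    http-request cache-use mycache
    http-response cache-store mycache
    server srv1 127.0.0.1:80
```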

Backends in Cloud and Microservices Environments

Cloud and microservices environments have redefined the way modern load balancers should work and the way they should expose functionality during runtime.

Historically, HAProxy’s configuration was pretty static and changing the list of backend servers was only possible by reloading the configuration. Over time, this approach became unsuitable for environments where backend servers are scaled up and down very often or where VMs and containers often change their IP addresses.

With HAProxy 1.7, it was possible to change the servers’ IP addresses and ports during runtime by using the Runtime API, and DNS resolution during runtime was also possible.

But HAProxy 1.8 contains a whole new set of features that can be combined together to allow robust backend servers scaling, IP address changes, and much more. Here are just a couple of pointers:

Server-template Configuration Directive

The server-template configuration directive allows users to instantiate a number of placeholder backend servers in a single configuration line, instead of having to add tens or hundreds of configuration lines explicitly.

For example, if a service needs to scale up to 200 nodes, it can be configured as follows:

server-template www 200 10.0.0.1:8080 check

This would create 200 placeholder backend servers named www1 to www200 with the same IP address and port. A third party “controller” can then use the Runtime API to keep the list up to date with correct backend server data and settings, and this information would often come from an orchestration system.
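The following sketch shows one way the pieces could fit together. The socket path, the backend name `be_www`, and the addresses are assumptions made for illustration. The placeholders start in maintenance mode (`disabled`) and a controller fills them in through an admin-level Runtime API socket.

```haproxy
global
    # Hypothetical socket path; "level admin" is needed to change servers
    stats socket /var/run/haproxy.sock mode 600 level admin

backend be_www
    mode http
    # 200 placeholders, kept in maintenance until the controller enables them
    server-template www 200 10.0.0.1:8080 check disabled

# A controller could then send Runtime API commands such as:
#   set server be_www/www1 addr 10.0.0.5 port 8080
#   set server be_www/www1 state ready
```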

DNS for Service Discovery

HAProxy can keep the list of backend servers up to date by using the DNS SRV or A records.

As long as the orchestration system supports DNS SRV records (and Kubernetes and Consul do), the server-template directive could be configured as follows:

server-template www 200 _http._tcp.red.default.svc.cluster.local:8080 check resolvers kube

If the orchestration system does not support DNS SRV records (such as Docker swarm mode), then A records can now be used as well. HAProxy’s DNS support has been improved so that it does not duplicate IP addresses when different DNS record types are encountered, and it automatically adjusts the number of backend servers to the number of records provided in the DNS responses.

We have described this functionality in detail in a recent blog post titled “DNS for Service Discovery in HAProxy”.
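The `resolvers kube` reference in the example above needs a matching resolvers section. A minimal sketch is shown here; the nameserver name and address are hypothetical and the timing values are only illustrative.

```haproxy
resolvers kube
    # Hypothetical DNS server address; use your cluster's DNS service
    nameserver dns1 10.96.0.10:53
    resolve_retries 3
    timeout retry 1s
    # Keep using a valid answer for 10 seconds before resolving again
    hold valid 10s
    # Allow larger responses, useful when many records are returned
    accepted_payload_size 8192
```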

Dynamic Cookies

One of the challenges our users faced with scaling the backend servers was the cookie-based session persistence. Even though applications developed for dynamic environments were supposed to be as stateless as possible, some of them still required persistence or had additional benefits when it was enabled or present. Furthermore, two HAProxy processes load balancing the same application had to be able to apply the same persistence and consistency in the order the servers were provided.

A new keyword for the directive cookie now exists for this purpose: dynamic. The cookie value is a hash of the backend server’s IP and port, since this is the only unique criterion available in many environments. And in order to prevent reversing the cookie value, we must also use the dynamic-cookie-key option to add a key:

    dynamic-cookie-key MYKEY
    cookie SRVID insert dynamic
    server-template www 20 _http._tcp.red.default.svc.cluster.local:8080 check resolvers kube

Using the above configuration, a response sent back to the client would look like the following:

Set-Cookie: SRVID=8eefd5695557c25f; path=/

Improved Runtime API

The Runtime API allows administrators and automation systems to interact with HAProxy during runtime. HAProxy version 1.8 comes with further improvements and new directives:

- disable dynamic-cookie backend <backend>: disable dynamic cookie for backend

- enable dynamic-cookie backend <backend>: enable dynamic cookie for backend

- set dynamic-cookie-key backend <backend> <value>: set cookie key hash

- set server <backend>/<server> agent-addr <addr>: change server agent address

- set server <backend>/<server> agent-send <value>: change server agent string

- set server <backend>/<server> fqdn <FQDN>: change server’s FQDN

- show acl [<acl>]: show list of ACLs in HAProxy or dump ACL contents

- show cli sockets: show list of Runtime API clients

- show fd: (for debugging only) show list of open file descriptors

- show info json: famous “show info” in JSON output format

- show stat [{<iid>|<proxy>} <type> <sid>] json: famous “show stat” in JSON output format and displayed per frontend or backend

- show schema json: dump JSON schema used for “show stat” and “show info” commands

We explained in detail how the Runtime API can be used to make changes during runtime in the blog post titled “Dynamic Scaling for Microservices with the HAProxy Runtime API”.

Other Improvements

Master/Worker Mode

Historically, HAProxy always started as many processes as were requested by configuration – one by default, and more if the setting nbproc was set to higher than 1. All processes were then used to manage the traffic and the PID of each process was written at startup to pidfile. But this wasn’t really convenient in cases where 3rd party software was monitoring and controlling the HAProxy processes (e.g. needing to reload them) as it had to be aware of HAProxy’s individual processes and control each one separately. One of the examples in this category was certainly systemd.

To address these problems in a general way, a new master/worker mode was implemented in HAProxy 1.8. HAProxy now launches one master process and a number of additional worker processes (one or more, as specified in the configuration). The new master process monitors all the worker processes and controls them from a single instance as you would expect. This relieves systemd or any other software from having to manage individual HAProxy worker processes.

To enable the master/worker mode in HAProxy 1.8, please use one of the following approaches:

- Use the new directive in the global configuration section named “master-worker”

- Start the haproxy process with the command line argument -W

Configuration example:

    global
        master-worker

In the master/worker mode, in the output of ‘ps axf’ it will be possible to distinguish the master process from its worker processes. Here is an example for nbproc = 2:

    $ ps axf -o pid,command
    15012 \_ haproxy -f ./master.cfg -d
    15013 \_ haproxy -f ./master.cfg -d
    15014 \_ haproxy -f ./master.cfg -d

In the example above, sending a signal USR2 to the master process (kill -SIGUSR2 15012) would trigger a reload of all worker processes automatically.

This mode is now the preferred mode for systemd-based installations and for configurations with nbproc greater than 1.

TLS 1.3 0-RTT

There was a lot of general demand for 0-RTT support (often called just TLS 1.3), but it is important to know that by default 0-RTT makes TLS vulnerable to replay attacks and that a higher protocol must prevent that.

The IETF HTTP working group has been working on a draft to define how to safely map HTTP on top of 0-RTT to provide both performance and security. HAProxy 1.8 is the first server-side component to implement this draft, and we will soon be running interoperability tests with a well known browser which has just implemented it on the client side.

In HAProxy, this feature can be enabled by compiling with openssl-1.1.1 and adding the “allow-0rtt” directive to the bind line.
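As a short sketch, a bind line with 0-RTT enabled might look like the following. The frontend name and certificate path are placeholders, and the example assumes HAProxy was built against an OpenSSL version with TLS 1.3 support.

```haproxy
frontend f_myapp
    # allow-0rtt accepts TLS 1.3 early data on this listener
    bind :443 ssl crt /path/to/cert.crt allow-0rtt
    mode http
```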

SSL/TLS Mode Async

The OpenSSL library version 1.1.0 and above supports asynchronous I/O operations. This means that the key computation phase (the heaviest part of the TLS protocol) can be run in a non-blocking way in HAProxy.

To make this work, you must compile HAProxy with the latest openssl library version (1.1.0f at the time of writing this article), enable the TLS engine that supports asynchronous TLS operations, and add the following configuration to your global section:

    global
        ssl-engine rdrand  # intel engine available in openssl
        ssl-mode-async

Your HAProxy will then become more responsive at processing traffic while SSL keys are being computed.

New Sample Fetches

HAProxy is able to extract data from traffic passing through it. We call these functions “sample fetches”. More information about sample fetches can be found under “Fetching Data Samples” in the HAPEE documentation.

HAProxy 1.8 comes with a group of new sample fetches:

- distcc_body: parses distcc message and returns body associated to it

- distcc_param: parses distcc message and returns parameter associated to it

- hostname: returns system hostname

- srv_queue: returns number of connections currently pending in server’s queue

- ssl_fc_cipherlist_bin: returns binary form of client hello cipher list

- ssl_fc_cipherlist_hex: same as above, but encoded as hexadecimal

- ssl_fc_cipherlist_str: returns decoded text form of client hello cipher list

- ssl_fc_cipherlist_xxh: returns xxh64 of client hello cipher list

- req.hdrs: returns current request headers

- req.hdrs_bin: returns current request headers in preparsed binary form
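To illustrate how a couple of these fetches could be used, here is a hedged sketch. The header names and the capture size are arbitrary choices, not recommendations. Note that the ssl_fc_cipherlist_* fetches only return data when cipher list capture is enabled with `tune.ssl.capture-cipherlist-size`.

```haproxy
global
    # Enable capture of the client hello cipher list (illustrative size)
    tune.ssl.capture-cipherlist-size 800

frontend f_myapp
    bind :443 ssl crt /path/to/cert.crt
    mode http
    # Pass a hash of the client's cipher list to the application
    http-request set-header X-Cipherlist-Hash %[ssl_fc_cipherlist_xxh]
    # Tell the client which HAProxy host served the response
    http-response set-header X-Served-By %[hostname]
```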

New Converters

HAProxy 1.8 comes with a group of new converters:

- b64dec: decodes base64 encoded string into binary

- nbsrv: returns number of available servers in a backend

- sha1: computes SHA1 digest of binary input

- xxh32: hashes binary input into unsigned 32 bit

- xxh64: hashes binary input into unsigned 64 bit
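The sketch below chains some of these converters onto sample fetches. The frontend, the backend names `be_app` and `be_fallback`, and the header names are hypothetical, and both backends are assumed to be defined elsewhere in the configuration.

```haproxy
frontend f_myapp
    bind :80
    mode http
    # SHA1 digest of the request path, rendered as hexadecimal
    http-request set-header X-Path-SHA1 %[path,sha1,hex]
    # 32-bit xxHash of the Host header
    http-request set-header X-Host-Hash %[req.hdr(host),xxh32]
    # nbsrv treats its input string as a backend name
    use_backend be_fallback if { str(be_app),nbsrv lt 1 }
    default_backend be_app
```

Since `sha1` produces binary output, it is followed by the `hex` converter so the value can be placed safely in a header.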

Lua API Improvements

The following improvements now exist in the Lua API:

- Core Class:
  - core.backends: array of backends
  - core.frontends: array of frontends

- Proxy Class:
  - proxy.name: string containing proxy name

- HTTP Applet Class:
  - set_var(applet, var, value): set HAProxy variable
  - unset_var(applet, var): unset HAProxy variable
  - get_var(applet, var): return content of HAProxy variable

- TCP Applet Class:
  - set_var(applet, var, value): set HAProxy variable
  - unset_var(applet, var): unset HAProxy variable
  - get_var(applet, var): return content of HAProxy variable

The example Lua code below creates a converter which converts a backend UUID into the corresponding proxy name:

    core.register_converters("get_backend_name", function(id)
        for bename,be in pairs(core.backends) do
            if id == be.uuid then
                return bename
            end
        end
        return "notfound"
    end)

To use it, simply set an HTTP rule like this one:

http-response set-header X-backend %[be_id,lua.get_backend_name]

With the above Lua code and configuration, HAProxy will set header “X-backend” in all responses sent to the clients. The value of the header will contain the backend name. The same approach could be used for frontends as well, just by replacing “backend” with “frontend” in appropriate places.

Conclusion

We hope you have enjoyed this quick tour of the notable features coming up in HAProxy 1.8. The HAProxy Technologies R&D team has been working tirelessly to deliver them, and we thank the HAProxy community members who have also made contributions to this release. We hope to get many community members testing out HAProxy 1.8 release candidate 1 (RC1) to make sure it’s the best release of HAProxy yet.

If you would like to make use of some of the new features before waiting for the stable release of HAProxy 1.8 (late November 2017), please see our HAProxy Enterprise Edition – Trial Version or contact HAProxy Technologies for expert advice. HAProxy Enterprise Edition is our commercially supported version of HAProxy based on the HAProxy Community Edition stable branch where we backport many features from the dev branch and package it to make the most stable, reliable, advanced and secure version of HAProxy. It also comes with high performance modules, specialized tools and scripts, and optimized third party software to make it a true enterprise ADC. The best part is that you get enterprise support directly from the authoritative experts here at HAProxy Technologies.

In parallel with preparations for the stable release of HAProxy 1.8, we will also be publishing further blog posts describing the new features in detail written directly by members of our HAProxy Technologies R&D team.

Stay tuned!