Using vSphereVolume on Kubernetes

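When Kubernetes is deployed on an existing vSphere platform, vSphereVolume can serve as the backend storage, which avoids having to deploy and maintain a separate distributed storage service.

1. Environment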

- Kubernetes

[root@master-0 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master-0 Ready master 19d v1.9.2+coreos.0 192.168.112.240 CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://1.13.1

worker-0 Ready <none> 19d v1.9.2+coreos.0 192.168.112.241 CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://1.13.1

worker-1 Ready <none> 19d v1.9.2+coreos.0 192.168.112.242 CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://1.13.1

- vSphere

- VirtualCenter:vcenter.dameng.com

- DataCenter:vdatacenter

- DataStore:YPTSTORE

- Version:

[root@master-0 ~]# govc about

Name: VMware vCenter Server

Vendor: VMware, Inc.

Version: 6.0.0

Build: 5318203

OS type: linux-x64

API type: VirtualCenter

API version: 6.0

Product ID: vpx

UUID: bef8467d-4716-40b8-b381-210248a29a56

2. Configure vSphere

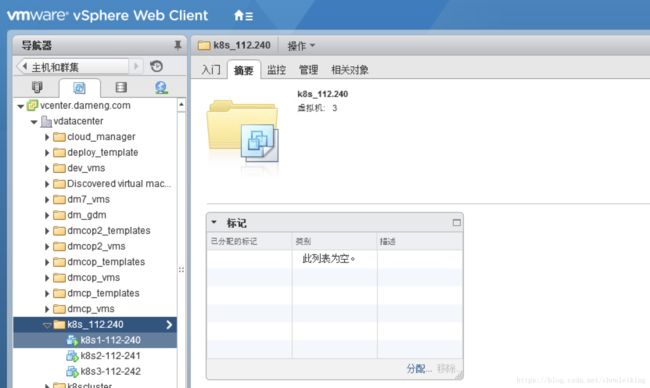

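1. Make sure the virtual machines of all Kubernetes nodes are in the same folder. In this article the folder is k8s_112.240.

2. Set disk.EnableUUID to enabled on the virtual machines of all Kubernetes nodes.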
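Requirement 2 has to be applied to every node VM. Once govc is installed and configured as described in the steps that follow, a loop can replace the per-VM commands. The sketch below is only an illustration: the function name `enable_disk_uuid` is made up for this example, and it assumes the folder contains nothing but the node VMs.

```shell
# Enable disk.enableUUID on every VM listed directly under an inventory folder.
# Usage (hypothetical helper): enable_disk_uuid /vdatacenter/vm/k8s_112.240
enable_disk_uuid() {
    local folder="$1" vm
    # govc ls prints one inventory path per line
    govc ls "$folder" | while IFS= read -r vm; do
        echo "enabling disk.enableUUID on ${vm}"
        # stdin is redirected so the command cannot consume the remaining list
        govc vm.change -e="disk.enableUUID=1" -vm="$vm" < /dev/null || exit 1
    done
}
```

The function stops at the first VM that cannot be changed and returns a non-zero status in that case.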

### Install the govc client tool ###

[root@master-0 ~]# wget https://github.com/vmware/govmomi/releases/download/v0.18.0/govc_linux_amd64.gz

[root@master-0 ~]# gunzip govc_linux_amd64.gz

[root@master-0 ~]# mv govc_linux_amd64 /usr/local/bin/govc

[root@master-0 ~]# chmod +x /usr/local/bin/govc

### Configure environment variables ###

export GOVC_URL='vcenter.dameng.com'

export GOVC_USERNAME='administrator@dameng.com'

export GOVC_PASSWORD='awesomePassword'

export GOVC_INSECURE=1

### Test the configuration ###

[root@master-0 ~]# govc env

GOVC_USERNAME=administrator@dameng.com

GOVC_PASSWORD=awesomePassword

GOVC_URL=vcenter.dameng.com

GOVC_INSECURE=1

### Set disk.EnableUUID ###

[root@master-0 ~]# govc ls /vdatacenter/vm/k8s_112.240

/vdatacenter/vm/k8s_112.240/k8s2-112-241

/vdatacenter/vm/k8s_112.240/k8s3-112-242

/vdatacenter/vm/k8s_112.240/k8s1-112-240

[root@master-0 ~]# govc vm.change -e="disk.enableUUID=1" -vm='/vdatacenter/vm/k8s_112.240/k8s1-112-240'

[root@master-0 ~]# govc vm.change -e="disk.enableUUID=1" -vm='/vdatacenter/vm/k8s_112.240/k8s2-112-241'

[root@master-0 ~]# govc vm.change -e="disk.enableUUID=1" -vm='/vdatacenter/vm/k8s_112.240/k8s3-112-242'

3. Create the VMDK

[root@master-0 ~]# govc datastore.disk.create -ds YPTSTORE -size 10G k8sData/MySQLDisk.vmdk

1. The directory must be created beforehand; otherwise the command fails.

2. The initial size of the VMDK file after creation is 0 KB.
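Because the target directory has to exist first, the two steps can be wrapped together. The following is a minimal sketch, not the author's procedure: `create_vmdk` is a hypothetical helper name, and it relies on `govc datastore.mkdir -p`, which to my knowledge creates missing parent folders and tolerates a folder that already exists.

```shell
# Create the datastore folder if needed, then create the VMDK inside it.
# Usage (hypothetical helper): create_vmdk YPTSTORE 10G k8sData/MySQLDisk.vmdk
create_vmdk() {
    local ds="$1" size="$2" path="$3" dir
    dir="$(dirname "$path")"
    # Skip mkdir when the VMDK lives at the datastore root
    if [ "$dir" != "." ]; then
        govc datastore.mkdir -p -ds "$ds" "$dir" || return 1
    fi
    govc datastore.disk.create -ds "$ds" -size "$size" "$path"
}
```

With the values used in this article the call would be `create_vmdk YPTSTORE 10G k8sData/MySQLDisk.vmdk`.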

4. Configure vSphere users and roles (omitted)

3. Configure Kubernetes

3.1. Configure the Kubernetes Cloud Provider

- Create the vsphere.conf configuration file on the master node

[root@master-0 ~]# mkdir /etc/kubernetes/cloud-config

[root@master-0 ~]# cat > /etc/kubernetes/cloud-config/vsphere.conf << EOF

[Global]

user = "administrator@dameng.com"

password = "awesomePassword"

port = "443"

insecure-flag = "1"

datacenters = "vdatacenter"

[VirtualCenter "vcenter.dameng.com"]

[Workspace]

server = "vcenter.dameng.com"

datacenter = "vdatacenter"

default-datastore = "YPTSTORE"

resourcepool-path = "vnewcluster/resource-pool"

folder = "k8s_112.240"

[Disk]

scsicontrollertype = pvscsi

[Network]

public-network = "VM Network"

EOF

- On the master node, edit the kubelet startup parameter file and add --cloud-provider=vsphere --cloud-config=/etc/kubernetes/cloud-config/vsphere.conf

[root@master-0 ~]# vi /etc/kubernetes/kubelet.env

### Upstream source https://github.com/kubernetes/release/blob/master/debian/xenial/kubeadm/channel/stable/etc/systemd/system/kubelet.service.d/10-kubeadm.conf

### All upstream values should be present in this file

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=2"

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=192.168.112.240 --node-ip=192.168.112.240"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=master-0"

KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf \

--kubeconfig=/etc/kubernetes/kubelet.conf \

--authorization-mode=Webhook \

--client-ca-file=/etc/kubernetes/ssl/ca.crt \

--pod-manifest-path=/etc/kubernetes/manifests \

--cadvisor-port=0 \

--pod-infra-container-image=192.168.101.88:5000/k8s1.9/pause-amd64:3.0 \

--kube-reserved cpu=100m,memory=256M \

--node-status-update-frequency=10s \

--cgroup-driver=cgroupfs \

--docker-disable-shared-pid=True \

--anonymous-auth=false \

--fail-swap-on=True \

--cluster-dns=10.233.0.3 --cluster-domain=cluster.local --resolv-conf=/etc/resolv.conf --kube-reserved cpu=200m,memory=512M "

KUBELET_NETWORK_PLUGIN="--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

KUBELET_CLOUDPROVIDER="--cloud-provider=vsphere --cloud-config=/etc/kubernetes/cloud-config/vsphere.conf"

PATH=/usr/local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

- On the master node, edit the apiserver manifest file

[root@master-0 ~]# vi /etc/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1

kind: Pod

metadata:

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

creationTimestamp: null

labels:

component: kube-apiserver

tier: control-plane

name: kube-apiserver

namespace: kube-system

spec:

containers:

- command:

- kube-apiserver

- --bind-address=0.0.0.0

- --insecure-bind-address=127.0.0.1

- --admission-control=Initializers,NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ValidatingAdmissionWebhook,ResourceQuota

- --allow-privileged=true

- --apiserver-count=1

- --insecure-port=8080

- --runtime-config=admissionregistration.k8s.io/v1alpha1

- --service-node-port-range=30000-32767

- --storage-backend=etcd3

- --proxy-client-cert-file=/etc/kubernetes/ssl/front-proxy-client.crt

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --service-account-key-file=/etc/kubernetes/ssl/sa.pub

- --tls-private-key-file=/etc/kubernetes/ssl/apiserver.key

- --kubelet-client-key=/etc/kubernetes/ssl/apiserver-kubelet-client.key

- --secure-port=6443

- --requestheader-client-ca-file=/etc/kubernetes/ssl/front-proxy-ca.crt

- --requestheader-allowed-names=front-proxy-client

- --advertise-address=192.168.112.240

- --client-ca-file=/etc/kubernetes/ssl/ca.crt

- --tls-cert-file=/etc/kubernetes/ssl/apiserver.crt

- --proxy-client-key-file=/etc/kubernetes/ssl/front-proxy-client.key

- --requestheader-group-headers=X-Remote-Group

- --requestheader-extra-headers-prefix=X-Remote-Extra-

- --service-cluster-ip-range=10.233.0.0/18

- --enable-bootstrap-token-auth=true

- --requestheader-username-headers=X-Remote-User

- --kubelet-client-certificate=/etc/kubernetes/ssl/apiserver-kubelet-client.crt

- --authorization-mode=Node,RBAC

- --etcd-servers=https://192.168.112.240:2379

- --etcd-cafile=/etc/kubernetes/ssl/etcd/ca.pem

- --etcd-certfile=/etc/kubernetes/ssl/etcd/node-master-0.pem

- --etcd-keyfile=/etc/kubernetes/ssl/etcd/node-master-0-key.pem

- --cloud-provider=vsphere

- --cloud-config=/etc/kubernetes/cloud-config/vsphere.conf

image: 192.168.101.88:5000/k8s1.9/hyperkube:v1.9.2_coreos.0

livenessProbe:

failureThreshold: 8

httpGet:

host: 192.168.112.240

path: /healthz

port: 6443

scheme: HTTPS

initialDelaySeconds: 15

timeoutSeconds: 15

name: kube-apiserver

resources:

requests:

cpu: 250m

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/pki

name: ca-certs-etc-pki

readOnly: true

- mountPath: /etc/kubernetes/ssl

name: k8s-certs

readOnly: true

- mountPath: /etc/kubernetes/cloud-config

name: cloud-config

readOnly: true

hostNetwork: true

volumes:

- hostPath:

path: /etc/kubernetes/ssl

type: DirectoryOrCreate

name: k8s-certs

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: ca-certs-etc-pki

- hostPath:

path: /etc/kubernetes/cloud-config

type: DirectoryOrCreate

name: cloud-config

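status: {}

Note: I placed vsphere.conf in the /etc/kubernetes/cloud-config directory, which the apiserver container does not mount by default. Besides adding the startup flags, the corresponding volumeMounts and volumes entries therefore have to be added as well.

- On the master node, edit the controller-manager manifest file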

[root@master-0 ~]# vi /etc/kubernetes/manifests/kube-controller-manager.yaml

apiVersion: v1

kind: Pod

metadata:

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

creationTimestamp: null

labels:

component: kube-controller-manager

tier: control-plane

name: kube-controller-manager

namespace: kube-system

spec:

containers:

- command:

- kube-controller-manager

- --feature-gates=Initializers=False,PersistentLocalVolumes=False

- --node-monitor-grace-period=40s

- --node-monitor-period=5s

- --pod-eviction-timeout=5m0s

- --cluster-signing-key-file=/etc/kubernetes/ssl/ca.key

- --address=127.0.0.1

- --leader-elect=true

- --use-service-account-credentials=true

- --controllers=*,bootstrapsigner,tokencleaner

- --kubeconfig=/etc/kubernetes/controller-manager.conf

- --root-ca-file=/etc/kubernetes/ssl/ca.crt

- --service-account-private-key-file=/etc/kubernetes/ssl/sa.key

- --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.crt

- --allocate-node-cidrs=true

- --cluster-cidr=10.233.64.0/18

- --node-cidr-mask-size=24

- --cloud-provider=vsphere

- --cloud-config=/etc/kubernetes/cloud-config/vsphere.conf

image: 192.168.101.88:5000/k8s1.9/hyperkube:v1.9.2_coreos.0

livenessProbe:

failureThreshold: 8

httpGet:

host: 127.0.0.1

path: /healthz

port: 10252

scheme: HTTP

initialDelaySeconds: 15

timeoutSeconds: 15

name: kube-controller-manager

resources:

requests:

cpu: 200m

volumeMounts:

- mountPath: /etc/kubernetes/ssl

name: k8s-certs

readOnly: true

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/kubernetes/controller-manager.conf

name: kubeconfig

readOnly: true

- mountPath: /etc/pki

name: ca-certs-etc-pki

readOnly: true

- mountPath: /etc/kubernetes/cloud-config

name: cloud-config

readOnly: true

hostNetwork: true

volumes:

- hostPath:

path: /etc/kubernetes/ssl

type: DirectoryOrCreate

name: k8s-certs

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/kubernetes/controller-manager.conf

type: FileOrCreate

name: kubeconfig

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: ca-certs-etc-pki

- hostPath:

path: /etc/kubernetes/cloud-config

type: DirectoryOrCreate

name: cloud-config

status: {}

- On all worker nodes, edit the kubelet startup parameter file

[root@worker-0 ~]# vi /etc/kubernetes/kubelet.env

### Upstream source https://github.com/kubernetes/release/blob/master/debian/xenial/kubeadm/channel/stable/etc/systemd/system/kubelet.service.d/10-kubeadm.conf

### All upstream values should be present in this file

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=2"

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=192.168.112.241 --node-ip=192.168.112.241"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=worker-0"

KUBELET_ARGS="--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf \

--kubeconfig=/etc/kubernetes/kubelet.conf \

--authorization-mode=Webhook \

--client-ca-file=/etc/kubernetes/ssl/ca.crt \

--pod-manifest-path=/etc/kubernetes/manifests \

--cadvisor-port=0 \

--pod-infra-container-image=192.168.101.88:5000/k8s1.9/pause-amd64:3.0 \

--kube-reserved cpu=100m,memory=256M \

--node-status-update-frequency=10s \

--cgroup-driver=cgroupfs \

--docker-disable-shared-pid=True \

--anonymous-auth=false \

--fail-swap-on=True \

--cluster-dns=10.233.0.3 --cluster-domain=cluster.local --resolv-conf=/etc/resolv.conf --kube-reserved cpu=100m,memory=256M "

KUBELET_NETWORK_PLUGIN="--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

KUBELET_CLOUDPROVIDER="--cloud-provider=vsphere"

PATH=/usr/local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

Note: on worker nodes only --cloud-provider=vsphere needs to be added; no other configuration is required.

- Create the ClusterRole and ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: vsphere-cloud-provider

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: vsphere-cloud-provider

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: vsphere-cloud-provider

subjects:

- kind: ServiceAccount

name: vsphere-cloud-provider

namespace: kube-system

This step should not be necessary. It works around what is probably a bug: the default vsphere-cloud-provider service account has no permission to query nodes. The error message is:

E0912 05:25:40.720017 1 reflector.go:205] k8s.io/kubernetes/pkg/cloudprovider/providers/vsphere/vsphere.go:227: Failed to list *v1.Node: nodes is forbidden: User "system:serviceaccount:kube-system:vsphere-cloud-provider" cannot list nodes at the cluster scope

- Restart the kubelet service on all nodes

[root@master-0 ~]# systemctl daemon-reload

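[root@master-0 ~]# systemctl restart kubelet

Normally the apiserver and controller-manager restart automatically after their manifest files are modified. If in doubt, delete the corresponding pods and Kubernetes will bring up new ones.

3.2. Test vSphereVolume

- Deploy MySQL using a volume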
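Since the restart has to happen on every node, it can be scripted from one machine. This sketch assumes root SSH access to the nodes by hostname, which the article does not state; `restart_kubelets` is a hypothetical helper name.

```shell
# Reload systemd units and restart kubelet on each node given as an argument.
# Usage (hypothetical helper): restart_kubelets master-0 worker-0 worker-1
restart_kubelets() {
    local node
    for node in "$@"; do
        echo "restarting kubelet on ${node}"
        # -n keeps ssh from reading stdin, so the helper is safe inside pipelines
        ssh -n "root@${node}" 'systemctl daemon-reload && systemctl restart kubelet' || return 1
    done
}
```

Afterwards `kubectl get nodes` should again report every node as Ready.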

apiVersion: v1

kind: Secret

metadata:

name: hive-metadata-mysql-secret

labels:

app: hive-metadata-mysql

type: Opaque

data:

mysql-root-password: RGFtZW5nQDc3Nw==

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: hive-metadata-mysql

template:

metadata:

labels:

app: hive-metadata-mysql

spec:

initContainers:

- name: remove-lost-found

image: 192.168.101.88:5000/k8s1.9/busybox:1.29.2

imagePullPolicy: IfNotPresent

command: ["rm", "-rf", "/var/lib/mysql/lost+found"]

volumeMounts:

- name: data

mountPath: /var/lib/mysql

containers:

- name: mysql

image: 192.168.101.88:5000/dmcop2/mysql:5.7

volumeMounts:

- name: data

mountPath: /var/lib/mysql

ports:

- containerPort: 3306

protocol: TCP

env:

- name: MYSQL_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: hive-metadata-mysql-secret

key: mysql-root-password

volumes:

- name: data

vsphereVolume:

volumePath: "[YPTSTORE] k8sData/MySQLDisk.vmdk"

fsType: ext4

---

kind: Service

apiVersion: v1

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql-service

spec:

ports:

- name: tcp

port: 3306

targetPort: 3306

selector:

app: hive-metadata-mysql

type: NodePort

[root@master-0 vsphere]# kubectl get pods

NAME READY STATUS RESTARTS AGE

hive-metadata-mysql-7f8f4d959-dhc7f 1/1 Running 0 1h

[root@master-0 vsphere]# kubectl exec hive-metadata-mysql-7f8f4d959-dhc7f -ti bash

root@hive-metadata-mysql-7f8f4d959-dhc7f:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 50G 10G 41G 20% /

tmpfs 16G 0 16G 0% /dev

tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/mapper/centos-root 50G 10G 41G 20% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/sdb 9.8G 246M 9.0G 3% /var/lib/mysql

tmpfs 16G 12K 16G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 16G 0 16G 0% /proc/scsi

tmpfs 16G 0 16G 0% /sys/firmware

- Deploy MySQL using a PV

[root@master-0 vsphere]# govc datastore.disk.create -ds YPTSTORE -size 10G k8sData/MySQLDisk-PV.vmdk

[12-09-18 16:22:29] Creating [YPTSTORE] k8sData/MySQLDisk-PV.vmdk...OK

[root@master-0 vsphere]# cat > mysql-pv.yml << EOF

apiVersion: v1

kind: PersistentVolume

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql-pv

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

vsphereVolume:

volumePath: "[YPTSTORE] k8sData/MySQLDisk-PV.vmdk"

fsType: ext4

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: hive-metadata-mysql-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

selector:

matchLabels:

app: hive-metadata-mysql

---

apiVersion: v1

kind: Secret

metadata:

name: hive-metadata-mysql-secret

labels:

app: hive-metadata-mysql

type: Opaque

data:

mysql-root-password: RGFtZW5nQDc3Nw==

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: hive-metadata-mysql

template:

metadata:

labels:

app: hive-metadata-mysql

spec:

initContainers:

- name: remove-lost-found

image: 192.168.101.88:5000/k8s1.9/busybox:1.29.2

imagePullPolicy: IfNotPresent

command: ["rm", "-rf", "/var/lib/mysql/lost+found"]

volumeMounts:

- name: data

mountPath: /var/lib/mysql

containers:

- name: mysql

image: 192.168.101.88:5000/dmcop2/mysql:5.7

volumeMounts:

- name: data

mountPath: /var/lib/mysql

ports:

- containerPort: 3306

protocol: TCP

env:

- name: MYSQL_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: hive-metadata-mysql-secret

key: mysql-root-password

volumes:

- name: data

persistentVolumeClaim:

claimName: hive-metadata-mysql-pvc

---

kind: Service

apiVersion: v1

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql-service

spec:

ports:

- name: tcp

port: 3306

targetPort: 3306

selector:

app: hive-metadata-mysql

type: NodePort

EOF

[root@master-0 vsphere]# kubectl apply -f mysql-pv.yml

persistentvolume "hive-metadata-mysql-pv" created

persistentvolumeclaim "hive-metadata-mysql-pvc" created

secret "hive-metadata-mysql-secret" created

deployment "hive-metadata-mysql" created

service "hive-metadata-mysql-service" created

[root@master-0 vsphere]# kubectl get pv,pvc,pod

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv/hive-metadata-mysql-pv 10Gi RWO Retain Bound default/hive-metadata-mysql-pvc 50s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc/hive-metadata-mysql-pvc Bound hive-metadata-mysql-pv 10Gi RWO 50s

NAME READY STATUS RESTARTS AGE

po/hive-metadata-mysql-7947b6dbfd-728dd 1/1 Running 0 49s

[root@master-0 vsphere]# kubectl exec hive-metadata-mysql-7947b6dbfd-728dd -ti bash

root@hive-metadata-mysql-7947b6dbfd-728dd:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 50G 8.8G 42G 18% /

tmpfs 16G 0 16G 0% /dev

tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/mapper/centos-root 50G 8.8G 42G 18% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/sdb 9.8G 246M 9.0G 3% /var/lib/mysql

tmpfs 16G 12K 16G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 16G 0 16G 0% /proc/scsi

tmpfs 16G 0 16G 0% /sys/firmware

- Deploy MySQL using a StorageClass

[root@master-0 vsphere]# cat > mysql-sc.yml << EOF

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: vsphere-fast-sc

provisioner: kubernetes.io/vsphere-volume

parameters:

diskformat: thin

fsType: ext4

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: hive-metadata-mysql-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

storageClassName: vsphere-fast-sc

---

apiVersion: v1

kind: Secret

metadata:

name: hive-metadata-mysql-secret

labels:

app: hive-metadata-mysql

type: Opaque

data:

mysql-root-password: RGFtZW5nQDc3Nw==

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: hive-metadata-mysql

template:

metadata:

labels:

app: hive-metadata-mysql

spec:

initContainers:

- name: remove-lost-found

image: 192.168.101.88:5000/k8s1.9/busybox:1.29.2

imagePullPolicy: IfNotPresent

command: ["rm", "-rf", "/var/lib/mysql/lost+found"]

volumeMounts:

- name: data

mountPath: /var/lib/mysql

containers:

- name: mysql

image: 192.168.101.88:5000/dmcop2/mysql:5.7

volumeMounts:

- name: data

mountPath: /var/lib/mysql

ports:

- containerPort: 3306

protocol: TCP

env:

- name: MYSQL_ROOT_PASSWORD

valueFrom:

secretKeyRef:

name: hive-metadata-mysql-secret

key: mysql-root-password

volumes:

- name: data

persistentVolumeClaim:

claimName: hive-metadata-mysql-pvc

---

kind: Service

apiVersion: v1

metadata:

labels:

app: hive-metadata-mysql

name: hive-metadata-mysql-service

spec:

ports:

- name: tcp

port: 3306

targetPort: 3306

selector:

app: hive-metadata-mysql

type: NodePort

EOF

[root@master-0 vsphere]# kubectl apply -f mysql-sc.yml

storageclass "vsphere-fast-sc" created

persistentvolumeclaim "hive-metadata-mysql-pvc" created

secret "hive-metadata-mysql-secret" created

deployment "hive-metadata-mysql" created

service "hive-metadata-mysql-service" created

[root@master-0 vsphere]# kubectl get sc,pv,pvc,pod

NAME PROVISIONER AGE

storageclasses/vsphere-fast-sc kubernetes.io/vsphere-volume 1m

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv/pvc-c85e69ba-b66c-11e8-a78e-0050568eded2 10Gi RWO Delete Bound default/hive-metadata-mysql-pvc vsphere-fast-sc 1m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc/hive-metadata-mysql-pvc Bound pvc-c85e69ba-b66c-11e8-a78e-0050568eded2 10Gi RWO vsphere-fast-sc 1m

NAME READY STATUS RESTARTS AGE

po/hive-metadata-mysql-7947b6dbfd-ck4rh 1/1 Running 0 1m

Reference: https://kubernetes.io/docs/concepts/storage/storage-classes/#vsphere

Note: automatically created VMDK files are stored in the kubevols directory.
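To see which VMDK backs each PersistentVolume, the volume path can be read from the PV objects. The helper name `list_pv_vmdks` is invented for this sketch; the JSONPath expression uses the `vsphereVolume.volumePath` field that appears in the PV manifest earlier in this article.

```shell
# Print each PersistentVolume name together with the VMDK path that backs it.
list_pv_vmdks() {
    kubectl get pv -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.vsphereVolume.volumePath}{"\n"}{end}'
}
```

On the vSphere side, `govc datastore.ls -ds YPTSTORE kubevols` should list the same files for dynamically provisioned volumes.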

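4. Troubleshooting notes

Troubleshooting methods:

- View the kubelet log: journalctl -xe -u kubelet -f

- View the apiserver and controller-manager logs: kubectl logs -f -n xxxx

- Set the log level: set --v=2 in kubelet.env and in the manifest files; the highest level is 9

- When a container fails to start, view its log as follows: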

### Using apiserver as an example ###

[root@master-0 ~]# docker ps -a | grep apiserver

996f698e37bb 192.168.101.88:5000/k8s1.9/hyperkube@sha256:487ab0f671b81a428f70f7cc3dd44dd36adebed1ac85df78ef03a15a377e1737 "kube-apiserver --..." 4 hours ago Up 4 hours k8s_kube-apiserver_kube-apiserver-master-0_kube-system_48d38fc3566742ce246a1106b1a1b6a8_0

73f7d8aaeb3e 192.168.101.88:5000/k8s1.9/pause-amd64:3.0 "/pause" 4 hours ago Up 4 hours k8s_POD_kube-apiserver-master-0_kube-system_48d38fc3566742ce246a1106b1a1b6a8_0

[root@master-0 ~]# docker inspect 996f698e37bb | grep Id

"Id": "996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468",

[root@master-0 ~]# cd /var/lib/docker/containers/996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468/

[root@master-0 996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468]# ll

total 44

-rw-r-----. 1 root root 24985 Sep 12 15:21 996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468-json.log

drwx------. 2 root root 6 Sep 12 11:31 checkpoints

-rw-r--r--. 1 root root 8258 Sep 12 11:31 config.v2.json

-rw-r--r--. 1 root root 1734 Sep 12 11:31 hostconfig.json

drwxr-xr-x. 2 root root 6 Sep 12 11:31 secrets

[root@master-0 996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468]# more 996f698e37bb2d750cebfb4fe0a42d3aaa174f0be838ad4e516f082d97250468-json.log

Reference: https://vmware.github.io/vsphere-storage-for-kubernetes/documentation/troubleshooting.html
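The manual lookup above can be shortened with `docker logs`, which reads the same JSON log file when the default json-file logging driver is in use (the -json.log file suggests it is). This is a sketch under that assumption; `component_logs` is a hypothetical helper name.

```shell
# Print the last N log lines of the newest container whose name contains k8s_COMPONENT.
# Usage (hypothetical helper): component_logs kube-apiserver 100
component_logs() {
    local component="$1" lines="${2:-50}" id
    # docker ps lists the newest container first; -a includes exited containers
    id="$(docker ps -a --filter "name=k8s_${component}" --format '{{.ID}}' | head -n 1)"
    if [ -z "$id" ]; then
        echo "no container found for ${component}" >&2
        return 1
    fi
    docker logs --tail "$lines" "$id"
}
```

Because exited containers are included, this also works while the apiserver is crash-looping and `kubectl logs` is unavailable.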

4.1. kubelet fails to start

- Symptom:

[root@master-0 ~]# journalctl -xe -u kubelet -f

Sep 12 15:44:57 master-0 kubelet[130860]: Error: failed to run Kubelet: could not init cloud provider "vsphere": 8:16: expected subsection name or right bracket

- Cause: the vsphere.conf file is usually malformed. Check the file at the reported position (8:16, most likely line 8, column 16) and pay attention to quotation marks.

- Fix: change [VirtualCenter vcenter.dameng.com] to [VirtualCenter "vcenter.dameng.com"]
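This mistake can be caught before restarting kubelet. The sketch below is a plain text check, not a full parser of the config format; `check_vsphere_conf` is a hypothetical helper name. It flags section headers whose subsection name is not wrapped in double quotes.

```shell
# Print every section header whose subsection name lacks double quotes.
# Returns 0 when none is found, 1 otherwise.
# Usage (hypothetical helper): check_vsphere_conf /etc/kubernetes/cloud-config/vsphere.conf
check_vsphere_conf() {
    local conf="$1"
    # Matches e.g. [VirtualCenter vcenter.dameng.com]: a space followed by a non-quote character
    if grep -nE '^\[[A-Za-z]+[[:space:]]+[^"[:space:]]' "$conf"; then
        return 1
    fi
    return 0
}
```

grep prints the offending lines with their line numbers, which can be compared with the position in the kubelet error.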

4.2. apiserver or controller-manager fails to start

- Symptom:

[root@master-0 manifests]# kubectl get pods --all-namespaces

The connection to the server 192.168.112.240:6443 was refused - did you specify the right host or port?

[root@master-0 manifests]# docker ps -a | grep apiserver

61548cb79298 192.168.101.88:5000/k8s1.9/hyperkube@sha256:487ab0f671b81a428f70f7cc3dd44dd36adebed1ac85df78ef03a15a377e1737 "kube-apiserver --..." 2 seconds ago Exited (255) 1 second ago k8s_kube-apiserver_kube-apiserver-master-0_kube-system_cb4413f233cf5900254d45241234af7f_1

d824060344d7 192.168.101.88:5000/k8s1.9/pause-amd64:3.0 "/pause" 3 seconds ago Up 3 seconds k8s_POD_kube-apiserver-master-0_kube-system_cb4413f233cf5900254d45241234af7f_0

[root@master-0 manifests]# docker inspect 61548cb79298 | grep Id

"Id": "61548cb792980c49a2be46ab9a62a06a46120a882738f71c9b152466706fdb30",

[root@master-0 ~]# more /var/lib/docker/containers/61548cb792980c49a2be46ab9a62a06a46120a882738f71c9b152466706fdb30/61548cb792980c49a2be46ab9a62a06a46120a882738f71c9b152466706fdb30-json.log

{"log":"I0912 08:07:40.778966 1 server.go:121] Version: v1.9.2+coreos.0\n","stream":"stderr","time":"2018-09-12T08:07:40.779255235Z"}

{"log":"I0912 08:07:40.779121 1 cloudprovider.go:59] --external-hostname was not specified. Trying to get it from the cloud provider.\n","stream":"stderr","time":"2018-09-12T08:07:40.779335163Z"}

{"log":"F0912 08:07:40.779160 1 plugins.go:114] Couldn't open cloud provider configuration /etc/kubernetes/cloud-config/vsphere.conf: \u0026os.PathError{Op:\"open\", Path:\"/etc/kubernetes/cloud-config/vsphere.conf\", Err:0x2}\n","stream":"stderr","time":"2018-09-12T08:07:40.779444798Z"}

- Cause: when the cluster is deployed with kubeadm, or the apiserver and controller-manager otherwise run in containers, the containers do not mount the directory that holds vsphere.conf by default, so the apiserver and controller-manager cannot load the file at startup.

- Fix: edit the apiserver and controller-manager manifest files and mount the directory that holds vsphere.conf into the containers.
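A quick way to confirm the fix is to check that a manifest contains all three pieces: the flag, the volumeMounts entry, and the hostPath volume. The sketch below is a text-based heuristic rather than a YAML parser; `check_cloud_config_mount` is a hypothetical helper name, and the directory argument is assumed to contain no regular-expression metacharacters.

```shell
# Check that a static pod manifest passes --cloud-config and mounts its directory.
# Usage (hypothetical helper):
#   check_cloud_config_mount /etc/kubernetes/manifests/kube-apiserver.yaml /etc/kubernetes/cloud-config
check_cloud_config_mount() {
    local manifest="$1" dir="$2" rc=0
    # 1. the flag must point at a file inside the directory
    grep -q -- "--cloud-config=${dir}/" "$manifest" \
        || { echo "missing --cloud-config flag" >&2; rc=1; }
    # 2. the container must mount the directory
    grep -qE "mountPath:[[:space:]]*${dir}[[:space:]]*\$" "$manifest" \
        || { echo "missing volumeMounts entry" >&2; rc=1; }
    # 3. the pod must expose the host directory as a hostPath volume
    grep -qE "(^|[[:space:]])path:[[:space:]]*${dir}[[:space:]]*\$" "$manifest" \
        || { echo "missing hostPath volume" >&2; rc=1; }
    return "$rc"
}
```

Running it against both kube-apiserver.yaml and kube-controller-manager.yaml covers the two components named above.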

4.3. controller-manager keeps reporting errors

- Symptom:

[root@master-0 ~]# kubectl describe pod hive-metadata-mysql-7f8f4d959-dhc7f

......attachdetach-controller AttachVolume.Attach failed for volume "data" : No VM found

[root@master-0 ~]# kubectl logs -n kube-system kube-controller-manager-master-0 -f

E0912 05:25:40.720017 1 reflector.go:205] k8s.io/kubernetes/pkg/cloudprovider/providers/vsphere/vsphere.go:227: Failed to list *v1.Node: nodes is forbidden: User "system:serviceaccount:kube-system:vsphere-cloud-provider" cannot list nodes at the cluster scope

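- Cause: the automatically created vsphere-cloud-provider service account lacks the required permissions

- Fix: grant permissions to vsphere-cloud-provider by creating the ClusterRole and ClusterRoleBinding

5. References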
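Whether the binding took effect can be checked by impersonating the service account with `kubectl auth can-i`. This is a minimal sketch: `check_node_access` is a hypothetical helper name, and the caller is assumed to have impersonation rights (a cluster admin does).

```shell
# Succeed only if the vsphere-cloud-provider service account may get, list and watch nodes.
check_node_access() {
    local sa="system:serviceaccount:kube-system:vsphere-cloud-provider" verb answer
    for verb in get list watch; do
        # kubectl prints "yes" or "no" for the impersonated account
        answer="$(kubectl auth can-i "$verb" nodes --as="$sa" 2>/dev/null)"
        echo "${verb} nodes: ${answer:-unknown}"
        [ "$answer" = "yes" ] || return 1
    done
}
```

The three verbs match the rules of the ClusterRole defined in section 3.1.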

- https://vmware.github.io/vsphere-storage-for-kubernetes/documentation/index.html

- https://github.com/kubernetes/kubeadm/issues/484

- https://vanderzee.org/linux/article-170626-141044/article-170620-144221

- https://github.com/kubernetes/kubernetes/issues/57279

- https://github.com/kubernetes/examples/blob/master/staging/volumes/vsphere/README.md