第十二天 - Hive基本操作 - Hive导入数据、统计数据 - HiveJDBC操作Hive

第十二天 - Hive基本操作 - Hive导入数据、统计数据 - HiveJDBC操作Hive

- 第十二天 - Hive基本操作 - Hive导入数据、统计数据 - HiveJDBC操作Hive

-

- 一、Hive基础知识补充

-

- 概念

- Hive基本操作(二)

-

- 二、Hive SQL操作案例一:统计dataflow.log文件中的流量数据

-

- 未指定分隔符

- 指定分隔符

-

- 三、Hive SQL操作案例二:

-

- 通过navicat将sql文件转为txt

- 将数据文件.txt导入到hive中

- 对库中的信息进行查询操作

-

- 四、HiveJDBC操作Hive

-

- 准备工作

- 编写代码

-

- 一、Hive基础知识补充

-

一、Hive基础知识补充

概念

- Hive可以通过HiveJDBC进行操作,方便应用的封装

- Hive有内置函数和语法,也可以编写自定义函数

- Hive的数据类型,大部分与java中一致,其中long在hive中为bigint

- 创建数据表时,需要和数据文件结构(数据类型、列数等)对应,特别是分隔符要正确

- Hive默认不支持update和delete语句,但是可以通过函数实现,insert语句执行较慢,一般采用导入文件数据进行导入

- Hive主要用于数据计算

Hive基本操作(二)

切换数据库

use {dbName};

删除数据库

drop database [if exists] {dbName} [cascade];

查看当前库的所有表

show tables [like ‘*’] [in database];

创建表

create database.table({col_name} {data_type},…)[row format delimited fields terminated by ‘\t’ lines terminated by ‘\n’ ];

[]中用于指定分隔符

增加字段

alter table {tableName} add columns (new_colName data_type);

查看表结构

desc {tableName};

重命名表名

alter table {tableName} rename to {newName};

删除表

drop table if exists {tableName};

导入数据

从本地导入:load data local inpath ‘{localPath}’ into table {dbName.tableName};

从hdfs导入:load data inpath ‘{hdfsPath}’ into table {dbName.tableName};

内部表的数据如果从hdfs中导入,相当于移动操作,原文件会被移动到hive对应的表的文件夹下

二、Hive SQL操作案例一:统计dataflow.log文件中的流量数据

未指定分隔符

在命令行中输入hive进入hive客户端

hive

查看数据库列表

show databases;

切换数据库

use test;

查看当前数据库下的所有表

show tables;

将昨天测试用的两个表删除

drop table t1;

drop table t2;

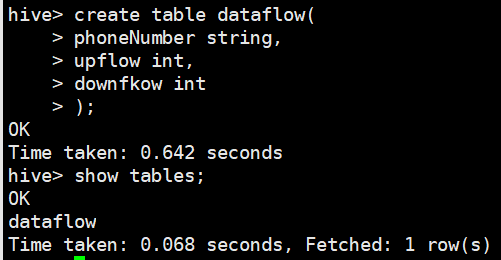

创建内部表,本次未指定分隔符

create table dataflow(

phoneNumber string,

upflow int,

downflow int

);

从本地导入数据文件“dataflow.log”

load data local inpath ‘/home/bigdata/hadoopTest/dataflow.log’ into table dataflow;

查看表,使用limit关键字限定读取的行数

由于创建表时没有指定正确的列分隔符,所以所有数据都分在了第一列,具体可见对hive的数据基本操作一

指定分隔符

删除表dataflow

drop dataflow;

创建表dataflow,指定分隔符

create table dataflow(

phoneNumber string ,

upflow int,

downflow int

)row format delimited fields terminated by ‘,’;

导入数据

load data local inpath ‘/home/bigdata/hadoopTest/dataflow.log’ into table dataflow;

查看表,由于创建表时指定了正确的分隔符,此时能正确读取

当使用聚合函数、group by 等时,hql将转换成MapReduce进行计算,即涉及到数据计算时才会触发MapReduce转换。

group by相当于MapReduce的mapper阶段,输出keyout,valueout;

聚合函数相当于MapReduce的reducer阶段,执行reduce()方法中的迭代计算。

select phoneNumber,sum(upflow),sum(downflow)

from dataflow

group by phoneNumber;

如果运行进程卡死或者出了问题,可以使用此命令强制结束

运行结果

加上总计流量

三、Hive SQL操作案例二:

通过navicat将sql文件转为txt

将mysql中已有的数据导入到hive中

将数据文件.txt导入到hive中

使用Xftp将文件上传至Linux中

在导入数据文件前需要先创建表,根据表结构创建make、orders、purchaser、type四个表

创建表时需要注意指定分隔符

表make

创建

create table make(

id int,

makeName string

)row format delimited fields terminated by ‘\t’;

导入数据

load data local inpath ‘/home/bigdata/data/make.txt’ into table make;

查看数据

select * from make limit 10;

表orders

创建

id int,

typeId int,

retail int,

purchaserId int

)row format delimited fields terminated by ‘\t’;

导入数据

load data local inpath ‘/home/bigdata/data/orders.txt’ into table orders;

查看数据

select * from orders limit 10;

表purchaser

创建

create table purchaser(

id int,age int,

income bigint,

deposit float

)row format delimited fields terminated by ‘\t’;

导入数据

load data local inpath ‘/home/bigdata/data/purchaser.txt/’ into table purchaser;

查看数据

select * from purchaser limit 10;

表type

创建

create table type(

id int,

typeName string,

energyConsumption float,

makeId int

)row format delimited fields terminated by ‘\t’;

导入数据

load data local inpath ‘/home/bigdata/data/type.txt’ into table type;

查看数据

select * from type

对库中的信息进行查询操作

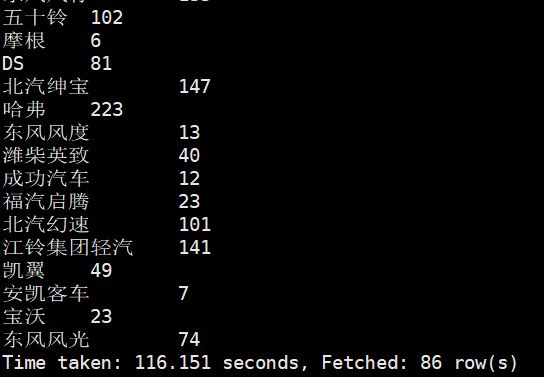

统计每种车型(type)的成交量,显示车型名称、成交量

SELECT t.typeName,COUNT(o.id) as count

FROM orders o,type t

WHERE o.typeId = t.id

GROUP BY o.typeId;左连接:

SELECT t.typeName,COUNT(o.id) as count

from orders o

LEFT JOIN type t

ON o.typeId = t.id

GROUP BY t.typeName,t.id;统计每种车型(type)的成交额,显示车型名称、成交额

select typeName,SUM(o.retail) as sum

from orders o,type t

where o.typeId = t.id

GROUP BY o.typeId;左连接:

SELECT t.typeName,SUM(o.retail) as sum

FROM orders o LEFT JOIN type t

ON o.typeId = t.id

GROUP BY t.typeName,o.typeId;统计每种品牌(make)的成交量,显示品牌名称、成交量

SELECT makeName,SUM(a.typeCount) as count

FROM

(SELECT COUNT(o.id) as typeCount,makeId

FROM

orders o,type t

WHERE o.typeId = t.id

GROUP BY o.typeId) a,make m

WHERE a.makeId = m.id

GROUP BY makeName,m.id;左连接:

select m.makeName,SUM(a.count)

FROM

(SELECT t.makeId,t.typeName,COUNT(o.id) as count

from orders o

LEFT JOIN type t

ON o.typeId = t.id

GROUP BY t.makeId,t.typeName,t.id) as a LEFT JOIN make m

on a.makeId = m.id

GROUP BY m.makeName,m.id;统计每种品牌(make)的成交额,显示品牌名称、成交额

SELECT makeName,SUM(a.typeSum) as sum

FROM

(SELECT sum(o.retail) as typeSum,makeId

FROM

orders o,type t

WHERE o.typeId = t.id

GROUP BY o.typeId,makeId) a,make m

WHERE a.makeId = m.id

GROUP BY makeName,m.id;左连接:

select m.makeName,SUM(a.sum)

FROM

(SELECT t.makeId,t.typeName,SUM(o.retail) as sum

from orders o

LEFT JOIN type t

ON o.typeId = t.id

GROUP BY t.id,t.makeId,t.typeName) as a LEFT JOIN make m

on a.makeId = m.id

GROUP BY m.id,m.makeName;统计每个年龄段成交量,显示年龄段和成交量

select FLOOR(p.age/10) ,count(o.retail)

from purchaser p,orders o

where p.id = o.purchaserId

GROUP BY FLOOR(p.age/10)

;左连接:

select FLOOR(p.age/10) ,count(o.retail)

from purchaser p

LEFT JOIN orders o

on p.id = o.purchaserId

GROUP BY FLOOR(p.age/10)

;统计每个年龄段成交额,显示年龄段和成交额

select FLOOR(p.age/10) ,sum(o.retail)

from purchaser p,orders o

where p.id = o.purchaserId

GROUP BY FLOOR(p.age/10)

;左连接:

select FLOOR(p.age/10) ,sum(o.retail)

from purchaser p

LEFT JOIN orders o

on p.id = o.purchaserId

GROUP BY FLOOR(p.age/10)

;统计每种汽车排量的成交量,显示汽车排量和成交量

select t.energyConsumption ,count(o.retail)

from orders o,type t

where o.typeId = t.id

GROUP BY t.energyConsumption;左连接:

select t.energyConsumption ,count(o.retail)

from orders o

LEFT JOIN type t

on o.typeId = t.id

GROUP BY t.energyConsumption;统计每种汽车排量的成交额,显示汽车排量和成交额

select t.energyConsumption ,sum(o.retail)

from orders o,type t

where o.typeId = t.id

GROUP BY t.energyConsumption;左连接:

select t.energyConsumption ,sum(o.retail)

from orders o

LEFT JOIN type t

on o.typeId = t.id

GROUP BY t.energyConsumption;

四、HiveJDBC操作Hive

准备工作

打开HiveJDBC的远程监控服务端口

前台运行,需要一直停留在该命令

hive –service hiveserver2 –hiveconf hive.server2.thrift.port=10010

执行此命令后,会话窗口光标将会一直停留,无法执行新命令,如果此时ctrl+c强行停止命令或者关闭了会话窗口,远程连接的监控服务端口也会关闭

设置为后台运行

hive –service hiveserver2 –hiveconf hive.server2.thrift.port=10010 &

执行此命令后,远程连接的监控服务端口将会在后台运行,但是如果关闭了会话窗口,此端口也会关闭

设置为始终运行状态,此时关闭会话也可以继续运行,但是如果重启机器就需要重新启动服务

nohup hive –service hiveserver2 –hiveconf hive.server2.thrift.port=10010 &

在Eclipse中新建普通Java项目,右键项目新建文件夹lib

导入共11个jar包到lib目录中,并且添加至构建路径

从CentOS中的hive安装路径的conf目录下下载hive-log4j.properties至本地,放在src目录下并重命名为log4j.properties

修改log4j.properties第19行为hive.root.logger=DEBUG,console,作用是在运行时能在控制台输出hive的运行状态信息

控制台输出的信息不全面,如果要查看详细信息需要在CentOS中查看日志文件。日志文件的目录在hive安装路径的conf目录下hive-log4j.properties中配置的路径,

编写代码

Test01.java

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.Statement;

public class Test01 {

public static void main(String[] args) throws Exception{

// 1.加载驱动

Class.forName("org.apache.hive.jdbc.HiveDriver");

// 2.打开连接

Connection connection = DriverManager.getConnection("jdbc:hive2://SZ01:10010/test");

// 3.获得操作对象

Statement statement = connection.createStatement();

// 4.操作Hive

String sql = "select a.energyConsumption,sum(b.retail) from orders b LEFT JOIN type a on a.id = b.typeId GROUP BY a.energyConsumption";

// 5.接受结果

ResultSet rs = statement.executeQuery(sql);

while(rs.next()) {

System.out.println(rs.getFloat(1) + " " + rs.getString(2));

}

// 6.关闭连接

rs.close();

statement.close();

connection.close();

}

}运行结果