第四天 -- Accumulator累加器 -- Spark SQL -- DataFrame -- Hive on Spark

第四天 – Accumulator累加器 – Spark SQL – DataFrame – Hive on Spark

文章目录

- 第四天 -- Accumulator累加器 -- Spark SQL -- DataFrame -- Hive on Spark

- 一、Accumulator(累加器):

- 二、Spark SQL

- Spark SQL简介

- Spark SQL特点

- 三、DataFrame

- DataFrame简介

- DataFrame创建

- DataFrame常用操作

- DSL(领域特定语言)风格语法

- SQL风格语法

- 四、通过编程实现Spark SQL查询

- pom.xml添加Spark SQL依赖

- 通过反射推断Schema

- 通过StructType直接指定Schema

- 五、与MySQL交互

- 从MySQL加载数据

- 将数据写入到mysql中

- 六、案例

- 七、Spark SQL自定义函数

- 简介

- UDF

- UDAF

- 八、Hive on Spark

- 简介

- 配置

- 启动

- 操作

一、Accumulator(累加器):

Spark提供了Accumulator累加器,用于分布式计算过程中对一个变量进行共享操作,其实就是提供了多个task对一个变量并行操作的过程,task只能对Accumulator做累加操作的操作,不能读取其值,只有Driver端才能读取其值。

import org.apache.spark.{Accumulator, SparkConf, SparkContext}

object AccumulatorDemo {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("AccumulatorDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val numbers = sc.parallelize(Array(1,2,3,4,5,6),3)

var sum = 0

numbers.foreach(x => sum += x)

println(sum)

}

}

输出结果为0,并不是预期的结果。需要使用Spark提供的Accumulator累加器对变量进行定义。

import org.apache.spark.{Accumulator, SparkConf, SparkContext}

object AccumulatorDemo {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("AccumulatorDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val numbers = sc.parallelize(Array(1,2,3,4,5,6),3)

// 使用累加器定义变量

val sum:Accumulator[Int] = sc.accumulator(0)

numbers.foreach(x => sum += x)

println(sum)

}

}

输出结果为21

二、Spark SQL

Spark SQL简介

Spark SQL是Spark用来处理结构化数据的一个模块,它提供了一个编程抽象叫做DataFrame并且提供分布式SQL查询引擎的作用。Hive是将Hive SQL转换成MapReduce然后提交到集群上执行,大大简化了编写MapReduce程序的复杂性,由于MapReduce这种计算模型的执行效率较慢,所以有了Spark SQL,它是将Spark SQL转换成RDD,然后提交到集群执行,大大提高了执行效率。spark sql操做的结果数据,保存时默认的格式为parquet格式

Spark SQL的性能优化方面做了很多工作:

1.内存列存储:优点是使用列存储可以大大优化内存的使用效率,减少了对内存的消耗,避免了gc大量数据的性能开销。

2.字节码生成技术:底层动态地用非常简单的代码逻辑进行计算。

Spark SQL特点

- 易整合

- 统一的数据访问方式

- 兼容Hive

- 标准的数据连接

三、DataFrame

DataFrame简介

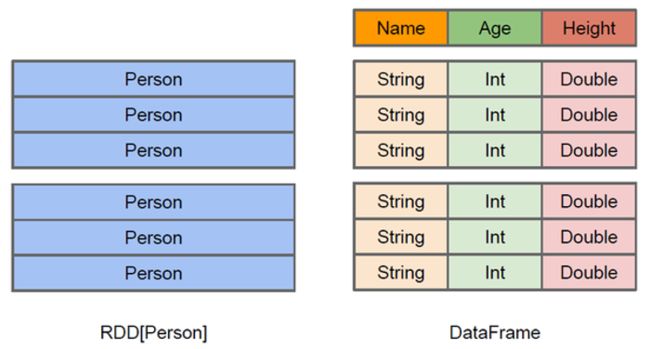

与RDD类似,DataFrame也是一个分布式数据容器。然而DataFrame更像传统数据库的二维表格,除了数据之外,还记录数据的结构信息,即schema。同时,与Hive类似,DataFrame也支持嵌套数据类型(struct、array和map)。从API易用性的角度上看,DataFrame API提供的是一套高层的关系操作,比函数式的RDD API要更加友好,门槛更低。

DataFrame创建

在Spark SQL中SQLContext是创建DataFrames和执行SQL的入口,进入spark shell时已经自动创建了一个SQLContext对象sqlContext,可以直接使用。

-

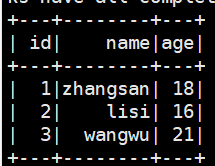

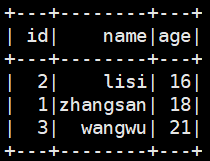

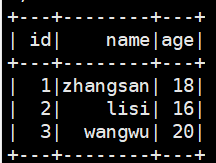

创建文件person.txt数据文件,共三列,分别是id、name、age,使用","分割,上传至hdfs上

1,zhangsan,18 2,lisi,16 3,wangwu,21hdfs dfs -put person.txt /userdata

-

启动spark shell

spark-shell \ --master spark://cdhnocms01:7077 \ --executor-memory 1g \ --total-executor-cores 2 -

读取数据,将每一行数据使用","分割

val lineRDD = sc.textFile(“hdfs://cdhnocms01:8020/userdata/person.txt”).map(_.split(","))

-

定义case class(相当于表的schema)

case class Person(id:Int, name:String, age:Int)

-

将RDD与case class关联

val personRDD = lineRDD.map(x => Person(x(0).toInt, x(1), x(2).toInt))

-

将RDD转换成DataFrame

val personDF = personRDD.toDF

-

对DataFrame进行处理

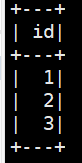

personDF.show

DataFrame常用操作

DSL(领域特定语言)风格语法

-

查看DataFrame中的内容

personDF.show

-

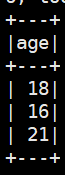

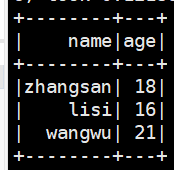

查看DataFrame部分列的内容

personDF.select(personDF.col(“age”)).show

personDF.select(col(“name”),col(“age”)).show

personDF.select(“id”).show

-

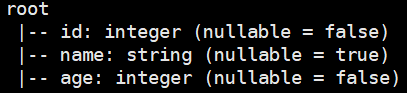

打印DataFrame的schema信息

personDF.printSchema

-

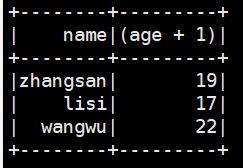

查询所有的name和age,并且将age+1

personDF.select(col(“name”),col(“age”)+1).show

personDF.select(personDF(“name”),personDF(“age”)+1).show

-

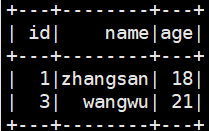

过滤age大于等于18的信息

personDF.filter(col(“age”) >= 18).show

-

按年龄进行分组并统计相同年龄的人数

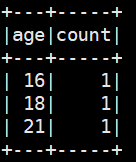

personDF.groupBy(“age”).count().show

-

按照年龄升序排序

personDF.sort(col(“age”)).show

-

按照年龄降序排序

personDF.orderBy(col(“age”).desc).show

SQL风格语法

如果要使用SQL风格的语法,需要将DataFrame注册成表

personDF.registerTempTable(“t_person”)

-

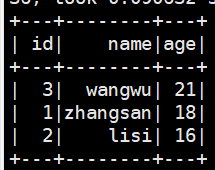

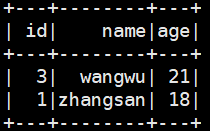

查询年龄最大的前两名

sqlContext.sql(“select * from t_person order by age desc limit 2”).show

-

显示表的schema信息

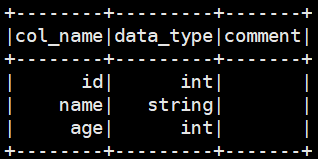

sqlContext.sql(“desc t_person”).show

四、通过编程实现Spark SQL查询

pom.xml添加Spark SQL依赖

org.apache.spark

spark-sql_2.10

1.6.3

1,mimi,34,90

2,yuanyuan,32,85

3,bingbing,35,90

通过反射推断Schema

InferSchemaDemo.scala

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, SQLContext}

import org.apache.spark.{SparkConf, SparkContext}

object InferSchemaDemo {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 获取数据并切分

val linesRDD: RDD[Array[String]] = sc.textFile("hdfs://cdhnocms01:8020/userdata/p1.txt").map(_.split(","))

// 将RDD和case class关联

val personRDD: RDD[Person] = linesRDD.map(p => Person(p(0).toLong,p(1),p(2).toInt,p(3).toInt))

// 引入SQLContext对象里的隐式转换函数

import sqlContext.implicits._

// 将personRDD转换为DataFrame

val personDF: DataFrame = personRDD.toDF()

// 注册一张临时表

personDF.registerTempTable("t_person")

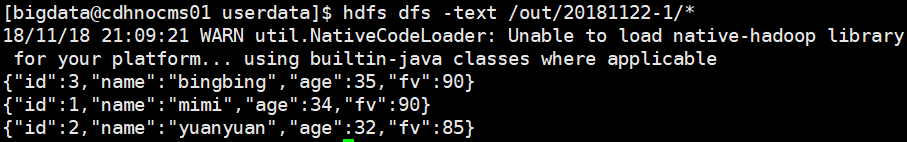

val sql = "select id, name, age, fv from t_person where fv > 60 order by age desc"

// 开始查询

val res: DataFrame = sqlContext.sql(sql)

//res.show()

// 以json方式保存结果数据,可以通过hdfs dfs -text /out/20181122-1/*查看结果文件数据

//res.write.mode("append").json("hdfs://cdhnocms01:8020/out/20181122-1")

// 使用save时保存的数据文件是经过压缩的文件,无法直接查看

res.write.mode("append").save("hdfs://cdhnocms01:8020/out/20181122-2")

sc.stop()

}

}

case class Person(id:Long, name:String, age:Int, fv:Int)

通过StructType直接指定Schema

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{DataFrame, Row, SQLContext}

import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

/**

* 通过StructType指定schema

*/

object StructTypeSchemaDemo {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 获取数据并切分

val linesRDD: RDD[Array[String]] = sc.textFile("hdfs://cdhnocms01:8020/userdata/p1.txt").map(_.split(","))

// 用StructType指定schema

val schema = StructType(

Array(

StructField("id", IntegerType, false),

StructField("name", StringType, false),

StructField("age", IntegerType, false),

StructField("fv", IntegerType, false)

)

)

// 开始映射

val rowRDD: RDD[Row] = linesRDD.map(p => Row(p(0).toInt, p(1), p(2).toInt, p(3).toInt))

// 将rowRDD转换为DataFrame

val personDF: DataFrame = sqlContext.createDataFrame(rowRDD, schema)

// 注册一张临时表

personDF.registerTempTable("t_person")

val sql = "select id, name, age, fv from t_person where fv > 60 order by age desc"

// 开始查询

val res: DataFrame = sqlContext.sql(sql)

res.show()

sc.stop()

}

}

五、与MySQL交互

Spark SQL可以通过jdbc从关系型数据库中读取数据的方式创建DataFrame,通过对DataFrame一系列的计算后,还可以将数据再写回关系型数据库中。

从MySQL加载数据

-

spark shell方式

启动spark shell,启动时需要指定mysql连接的驱动jar包

spark-shell \ --master spark://cdhnocms01:7077 \ --executor-memory 1g \ --total-executor-cores 2 \ --jars /home/bigdata/userjars/mysql-connector-java-5.1.46-bin.jar \ --driver-class-path /home/bigdata/userjars/mysql-connector-java-5.1.46-bin.jar从mysql中加载数据

val jdbcDF = sqlContext.read.format("jdbc").options(Map("url" -> "jdbc:mysql://cdhnocms01:3306/test","driver" -> "com.mysql.jdbc.Driver","dbtable" -> "person","user" -> "root","password" -> "root")).load()执行查询

jdbcDF.show()

-

编程方式

import org.apache.spark.{SparkConf, SparkContext} import org.apache.spark.sql.SQLContext object jdbcTest { def main(args: Array[String]): Unit = { val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]") val sc = new SparkContext(conf) val sqlContext = new SQLContext(sc) // 创建sqlCOntext val jdbcDF = sqlContext.read.format("jdbc").options(Map("url" -> "jdbc:mysql://cdhnocms01:3306/test","driver" -> "com.mysql.jdbc.Driver","dbtable" -> "person","user" -> "root","password" -> "root")).load() jdbcDF.show() sc.stop() } }

将数据写入到mysql中

InsertDataToMysql.scala

import java.util.Properties

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{Row, SQLContext}

import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

object InsertDataToMysql {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 获取数据并切分

val linesRDD: RDD[Array[String]] = sc.textFile("hdfs://cdhnocms01:8020/userdata/person.txt").map(_.split(","))

val schema = StructType(Array(

StructField("name",StringType,true),

StructField("age",IntegerType,true)

))

val rowRDD = linesRDD.map(p => Row(p(1),p(2).toInt))

val personDF = sqlContext.createDataFrame(rowRDD,schema)

// 准备用于清酒数据库的配置信息

val prop = new Properties()

prop.put("user","root")

prop.put("password","root")

prop.put("driver","com.mysql.jdbc.Driver")

val url = "jdbc:mysql://cdhnocms01:3306/test"

val table = "person"

// 写数据到mysql

personDF.write.mode("append").jdbc(url,table,prop)

sc.stop()

}

}

六、案例

需求:查询成绩为85分及以上的学生的基本信息和成绩信息

数据:

stu_base.json:

{"name":"zhangsan","age":18}

{"name":"lisi","age":16}

{"name":"wangwu","age":20}

stu_scores.json:

{"name":"zhangsan","score":80}

{"name":"lisi","score":90}

{"name":"wangwu","score":85}

实现思路:

- 查询stu_score得到成绩大于等于85分的信息,并存放在集合中

- 根据以上查到的姓名信息,查询stu_base得到该同学的年龄信息

- 将查询到的DataFrame转换为RDD,进行join操作

- 通过StructType指定schema,将join后的RDD转换为DataFrame

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.types.{LongType, StringType, StructField, StructType}

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{DataFrame, Row, SQLContext}

/**

* 需求:查询成绩为85分以上的学生的基本信息和成绩信息

*/

object SparkSQLTest {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 获取学生成绩信息

val studentScoresDF: DataFrame = sqlContext.read.json("hdfs://cdhnocms01:8020/userdata/stu_scores.json")

studentScoresDF.registerTempTable("student_scores")

val goodStudentScoresDF: DataFrame = sqlContext.sql("select name,score from student_scores where score >= 85")

// 获取到分数大于等于85分的学生姓名

val goodStudentNames = goodStudentScoresDF.rdd.map(row => row(0)).collect

// 获取学生基本信息

val studentInfoDF: DataFrame = sqlContext.read.json("hdfs://cdhnocms01:8020/userdata/stu_base.json")

// 拼接用于查询大于等于85分学生的基本信息的sql语句

studentInfoDF.registerTempTable("student_info")

var sql = "select name, age from student_info where name in ("

for(i <- 0 until goodStudentNames.length){

sql += "'" + goodStudentNames(i) + "'"

// 如果不是最后一个下标,需要加","

if (i < goodStudentNames.length - 1){

sql += ","

}

}

sql += ")"

val goodStudentInfoDF: DataFrame = sqlContext.sql(sql)

// 将分数大于等于85分的学生基本信息和成绩信息进行join

val goodStudentScoreRDD: RDD[(String, Long)] = goodStudentScoresDF.rdd.map(row => (row.getAs[String]("name"),row.getAs[Long]("score")))

val goodStudentInfoRDD: RDD[(String, Long)] = goodStudentInfoDF.rdd.map(row => (row.getAs[String]("name"),row.getAs[Long]("age")))

// 进行join

val goodStudentScoreAndInfoRDD: RDD[(String, (Long, Long))] = goodStudentScoreRDD.join(goodStudentInfoRDD)

// 将RDD转换为DataFrame

val goodStudentRowsRDD: RDD[Row] = goodStudentScoreAndInfoRDD.map(info => Row(info._1,info._2._2.toLong,info._2._1.toLong))

val schema = StructType(Array(

StructField("name", StringType, true),

StructField("age", LongType, true),

StructField("score", LongType, true)

))

val goodStudentDF: DataFrame = sqlContext.createDataFrame(goodStudentRowsRDD,schema)

goodStudentDF.show()

sc.stop()

}

}

七、Spark SQL自定义函数

简介

spark sql也可以实现自定义函数,目的是有sql不好实现的逻辑,可以用自定义函数来实现,其中有UDF、UDAF

UDF

需求:实现统计字符串的长度

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.types.{StringType, StructField, StructType}

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{Row, SQLContext}

object UDFTest {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 模拟数据

val names = Array("zhangsan","lisi","wangwu")

val namesRDD: RDD[String] = sc.parallelize(names,3)

val namesRowRDD: RDD[Row] = namesRDD.map(name => Row(name))

val schema = StructType(Array(StructField("name",StringType,true)))

val namesDF = sqlContext.createDataFrame(namesRowRDD,schema)

namesDF.registerTempTable("names")

// 自定义和注册自定义函数

sqlContext.udf.register("strlen", (str:String) => str.length)

// 使用自定义函数

val res = sqlContext.sql("select name, strlen(name) from names")

res.show()

sc.stop()

}

}

UDAF

需求:用UDAF和sql实现wordcount

UDAFTest.scala

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{Row, SQLContext}

import org.apache.spark.sql.types.{StringType, StructField, StructType}

object UDAFTest {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("InferSchemaDemo").setMaster("local[2]")

val sc = new SparkContext(conf)

val sqlContext = new SQLContext(sc) // 创建sqlCOntext

// 模拟数据

val names = Array("zhangsan","zhangsan","lisi","wangwu","lisi","lisi")

val namesRDD: RDD[String] = sc.parallelize(names,3)

val namesRowRDD: RDD[Row] = namesRDD.map(name => Row(name))

val schema = StructType(Array(StructField("name",StringType,true)))

val namesDF = sqlContext.createDataFrame(namesRowRDD,schema)

namesDF.registerTempTable("names")

// 定义和注册UDAF

sqlContext.udf.register("strcount",new StringCount)

// 使用自定义函数

val res = sqlContext.sql("select name, strcount(name) from names group by name")

res.show()

sc.stop()

}

}

StringCount.scala

import org.apache.spark.sql.Row

import org.apache.spark.sql.expressions.{MutableAggregationBuffer, UserDefinedAggregateFunction}

import org.apache.spark.sql.types._

class StringCount extends UserDefinedAggregateFunction{

// 输入数据的类型

override def inputSchema: StructType = {

StructType(Array(StructField("str",StringType,true)))

}

// 在进行聚合的过程中,缓冲的数据类型

override def bufferSchema: StructType = {

StructType(Array(StructField("count",IntegerType,true)))

}

// 返回值的类型

override def dataType: DataType = IntegerType

// 是否是确定性的,如果为true,即给定的相同的输入,返回相同的输出

override def deterministic: Boolean = true

// 初始化操作

override def initialize(buffer: MutableAggregationBuffer): Unit = {

buffer(0) = 0

}

// 局部聚合过程中使用的方法,当每个分组有新的值进来的时候,如何进行分组对应的聚合值的计算

override def update(buffer: MutableAggregationBuffer, input: Row): Unit = {

buffer(0) = buffer.getAs[Int](0) + 1

}

// 全局聚合,把各个节点上的聚合值进行merge,就是全局合并

override def merge(buffer1: MutableAggregationBuffer, buffer2: Row): Unit = {

buffer1(0) = buffer1.getAs[Int](0) + buffer2.getAs[Int](0)

}

// 指在这个缓存中还可以进行其他的操作,比如加上一个值,返回最终聚合的值

override def evaluate(buffer: Row): Any = {

buffer.getAs[Int](0)

}

}

八、Hive on Spark

简介

操作层用Hive来操作,计算层用Spark Core来进行计算

配置

将 H A D O O P H O M E / e t c / h a d o o p 目 录 下 的 c o r e − s i t e . x m l 和 HADOOP_HOME/etc/hadoop目录下的core-site.xml和 HADOOPHOME/etc/hadoop目录下的core−site.xml和HIVE_HOME/conf目录下的hive-site.xml复制到Spark主节点的conf目录下

启动

spark-sql \

--master spark://cdhnocms01:7077 \

--executor-memory 1g \

--total-executor-cores 2 \

--driver-class-path /home/bigdata/userjars/mysql-connector-java-5.1.46-bin.jar

操作

操作跟在hive端中的操作相同

-

创建表

create table person(id int, name string, age int, fv int) row format delimited fields terminated by ‘,’;

-

加载数据

load data local inpath “/home/bigdata/userdata/p1.txt” into table person

-

查询

select * from person

-

删除表

drop table person