- Scrapy入门学习

晚睡早起₍˄·͈༝·͈˄*₎◞ ̑̑

Pythonscrapy学习python开发语言笔记

文章目录Scrapy一.Scrapy简介二.Scrapy的安装1.进入项目所在目录2.安装软件包Scrapy3.验证是否安装成功三.Scrapy的基础使用1.创建项目2.在tutorial/spiders目录下创建保存爬虫代码的项目文件3.运行爬虫4.利用css选择器+ScrapyShell提取数据例如:Scrapy一.Scrapy简介Scrapy是一个用于抓取网站和提取结构化数据的应用程序框架,

- 爬虫学习笔记-scrapy爬取电影天堂(双层网址嵌套)

DevCodeMemo

爬虫学习笔记

1.终端运行scrapystartprojectmovie,创建项目2.接口查找3.终端cd到spiders,cdscrapy_carhome/scrapy_movie/spiders,运行scrapygenspidermvhttps://dy2018.com/4.打开mv,编写代码,爬取电影名和网址5.用爬取的网址请求,使用meta属性传递name,callback调用自定义的parse_sec

- 爬虫学习笔记-scrapy爬取当当网

DevCodeMemo

爬虫学习笔记

1.终端运行scrapystartprojectscrapy_dangdang,创建项目2.接口查找3.cd100个案例/Scrapy/scrapy_dangdang/scrapy_dangdang/spiders到文件夹下,创建爬虫程序4.items定义ScrapyDangdangItem的数据结构(要爬取的数据)src,name,price5.爬取src,name,price数据导入items

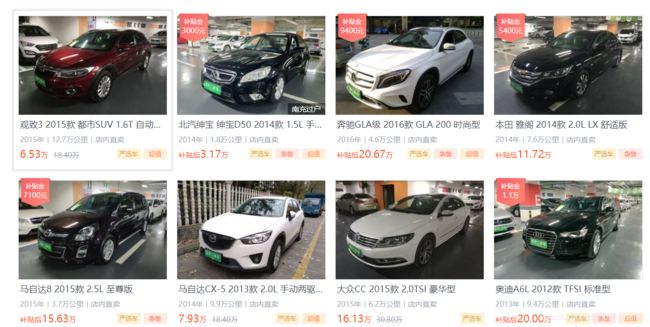

- 爬虫学习笔记-scrapy爬取汽车之家

DevCodeMemo

爬虫学习笔记

1.终端运行scrapystartprojectscrapy_carhome,创建项目2.接口查找3.终端cd到spiders,cdscrapy_carhome/scrapy_carhome/spiders,运行scrapygenspideraudihttps://car.autohome.com.cn/price/brand-33.html4.打开audi,编写代码,xpath获取页面车型价格列

- Python爬虫学习之scrapy库

蜀道之南718

python爬虫学习笔记scrapy

一、scrapy库安装pipinstallscrapy-ihttps://pypi.douban.com/simple二、scrapy项目的创建1、创建爬虫项目打开cmd输入scrapystartproject项目的名字注意:项目的名字不允许使用数字开头也不能包含中文2、创建爬虫文件要在spiders文件夹中去创建爬虫文件cd项目的名字\项目的名字\spiderscdscrapy_baidu_09

- 爬虫学习笔记-scrapy安装及第一个项目创建问题及解决措施

DevCodeMemo

爬虫学习笔记

1.安装scrapypycharm终端运行pipinstallscrapy-ihttps://pypi.douban.com/simple2.终端运行scrapystartprojectscrapy_baidu,创建项目问题1:lxml版本低导致无法找到解决措施:更新或者重新安装lxml3.项目创建成功4.终端cd到项目的spiders文件夹下,cdscrapy_baidu\scrapy_baid

- Python爬虫学习之scrapy库

蜀道之南718

python爬虫笔记学习

一、scrapy库安装pipinstallscrapy-ihttps://pypi.douban.com/simple二、scrapy项目的创建1、创建爬虫项目打开cmd输入scrapystartproject项目的名字注意:项目的名字不允许使用数字开头也不能包含中文2、创建爬虫文件要在spiders文件夹中去创建爬虫文件cd项目的名字\项目的名字\spiderscdscrapy_baidu_09

- python爬虫框架Scrapy

逛逛_堆栈

爬虫框架Scrapy(三)使用框架Scrapy开发一个爬虫只需要四步:创建项目:scrapystartprojectproname(项目名字,不区分大小写)明确目标(编写items.py):明确你想要抓取的目标制作爬虫(spiders/xxspider.py):制作爬虫开始爬取网页存储内容(pipelines.py):设计管道存储爬取内容1、新建项目在开始爬取之前,必须创建一个新的Scrapy项目

- python基于scrapy框架爬取数据并写入到MySQL和本地

阿里多多酱a

pythonscrapy爬虫

目录1.安装scrapy2.创建项目3.工程目录结构4.工程目录结构详情5.创建爬虫文件6.编写对应的代码在爬虫文件中7.执行工程8.scrapy数据解析9.持久化存储10.管道完整代码1.安装scrapypipinstallscrapy2.创建项目scrapystartprojectproname#proname就是你的项目名称3.工程目录结构4.工程目录结构详情spiders:存放爬虫代码目录

- IDEA 使用Git推送项目报错Push failed: Could not read from remote repository

叫我胖虎大人

Can’tfinishGitHubsharingprocessSuccessfullycreatedproject‘Python-Spiders’onGitHub,butinitialpushfailed:Couldnotreadfromremoterepository.上面是抛出的错误:解决方案:setting->VersionControl->Github取消勾选Clonegitreposit

- Scrapy的基本使用(一)

NiceBlueChai

产生步骤(一)应用Scrapy爬虫框架主要时编写配置型代码步骤1:建立一个Scrapy爬虫工程选取一个目录(G:\pycodes\),然后执行以下命令生成的工程目录:产生步骤(二)步骤2:在工程中生成一个Scrapy爬虫进入工程目录然后执行以下命令该命令作用:(1)生成一个名为demo的spider(2)在spiders目录下增加demo.py文件(该命令仅用于生成demo.py,该文件也可以手工

- Scrapy入门到放弃06:Spider中间件

叫我阿柒啊

Scrapy爬虫中间件scrapyspidermiddleware

前言写一写Spider中间件吧,都凌晨了,一点都不想写,主要是也没啥用…哦不,是平时用得少。因为工作上的事情,已经拖更好久了,这次就趁着半夜写一篇。Scrapy-deltafetch插件是在Spider中间件实现的去重逻辑,开发过程中个人用的还是比较少一些的。作用依旧是那张熟悉的架构图,不出意外,这张图是最后一次出现在Scrapy系列文章中了。如架构图所示,Spider中间件位于Spiders(程

- Scrapy下载图片并修改为OSS地址

Az_plus

Scrapy框架scrapypython网络爬虫阿里云

Scrapy下载图片并修改为OSS地址新建爬虫•创建项目#spiderzt为项目名scrapystartprojectspiderzt项目目录如下:•创建爬虫文件doyo.py在spiders文件中创建新的爬虫文件importscrapyclassDoyonewsSpider(scrapy.Spider):name="doyo"#spider名称allowed_domains=["www.doyo

- 【爬虫】Python Scrapy 基础概念 —— 请求和响应

栗子ma

爬虫ScrapyPython爬虫ScrapyPython

【原文链接】https://doc.scrapy.org/en/latest/topics/request-response.htmlScrapyusesRequestandResponse对象来爬网页.Typically,spiders中会产生Request对象,然后传递acrossthesystem,直到他们到达Downloader,which执行请求并返回一个Response对象whicht

- 数据收集与处理(爬虫技术)

没有难学的知识

爬虫

文章目录1前言2网络爬虫2.1构造自己的Scrapy爬虫2.1.1items.py2.1.2spiders子目录2.1.3pipelines.py2.2构造可接受参数的Scrapy爬虫2.3运行Scrapy爬虫2.3.1在命令行运行2.3.2在程序中调用2.4运行Scrapy的一些要点3大规模非结构化数据的存储与分析4全部代码1前言介绍几种常见的数据收集、存储、组织以及分析的方法和工具首先介绍如何

- scrapy框架大致流程介绍

一朋

爬虫scrapypython

scrapy框架介绍:scrapy框架是以python作为基础语言,实现网页数据的抓取,提取信息,保存的一个应用框架,可应用于数据提取、数据挖掘、信息处理和存储数据等一系列的程序中。基本流程:新建项目明确目标制作爬虫模块并开始爬取提取目标数据存储内容流程架构图(注:下列绿线表示数据流向):对于上述scrapy框架图解的基本工作流程,可以简单的理解为:Spiders(爬虫)将需要发送请求的url(R

- scrapy框架搭建

西界M

scrapy

安装scrapypipinstallscrapy-i镜像源创建项目scrapystartproject项目名字创建爬取的单个小项目cd项目名字scrapygenspiderbaidubaidu.com"""spiders文件夹下生成baidu.py文件"""开启一个爬虫scrapycrawlbaidu

- Scrapy 框架

陈其淼

网络爬虫scrapy

介绍Scrapy是一个基于Twisted的异步处理框架,是纯Python实现的开源爬虫框架,其架构清晰,模块之间的耦合程度低,可扩展性极强,可以灵活完成各种需求。Scrapy框架的架构如下图所示:其中各个组件含义如下:ScrapyEngine(引擎):负责Spiders、ItemPipeline、Downloader、Scheduler之间的通信,包括信号和数据传输等。Item(项目):定义爬取结

- python爬虫-scrapy五大核心组件和中间件

小王子爱上玫瑰

python爬虫python爬虫中间件

文章目录一、scrapy五大核心组件Spiders(爬虫)ScrapyEngine(Scrapy引擎)Scheduler(调度器)Downloader(下载器)ItemPipeline(项目管道)二、工作流程三、中间件3.1下载中间件3.1.1UA伪装3.1.2代理IP3.1.3集成selenium3.2爬虫中间件一、scrapy五大核心组件下面这张图我们在python爬虫-scrapy基本使用见

- Python知识点之Python爬虫

燕山588

python程序员编程python爬虫数据库pycharmweb开发

1.scrapy框架有哪几个组件/模块?ScrapyEngine:这是引擎,负责Spiders、ItemPipeline、Downloader、Scheduler中间的通讯,信号、数据传递等等!(像不像人的身体?)Scheduler(调度器):它负责接受引擎发送过来的requests请求,并按照一定的方式进行整理排列,入队、并等待ScrapyEngine(引擎)来请求时,交给引擎。Download

- scrapy框架——架构介绍、安装、项目创建、目录介绍、使用、持久化方案、集成selenium、去重规则源码分析、布隆过滤器使用、redis实现分布式爬虫

山上有个车

爬虫scrapy架构selenium

文章目录前言一、架构介绍引擎(EGINE)调度器(SCHEDULER)下载器(DOWLOADER)爬虫(SPIDERS)项目管道(ITEMPIPLINES)下载器中间件(DownloaderMiddlewares)爬虫中间件(SpiderMiddlewares)一、安装一、项目创建1创建scrapy项目2创建爬虫3启动爬虫,爬取数据二、目录介绍三、解析数据四、配置1.基础配置2.增加爬虫的爬取效率

- 一文秒懂Scrapy原理

小帆芽芽

scrapy爬虫python

scrapy架构图解Spiders(爬虫):它负责处理所有Responses,从中分析提取数据,获取Item字段需要的数据,并将需要跟进的URL提交给引擎,再次进入Scheduler(调度器)Engine(引擎):负责Spider、ItemPipeline、Downloader、Scheduler中间的通讯,信号、数据传递等。Scheduler(调度器):它负责接受引擎发送过来的Request请求

- python scrapy爬取网站数据(一)

Superwwz

Pythonpythonscrapy开发语言

框架介绍scrapy中文文档scrapy是用python实现的一个框架,用于爬取网站数据,使用了twisted异步网络框架,可以加快下载的速度。scrapy的架构图,可以看到主要包括scheduler、Downloader、Spiders、pipline、ScrapyEngine和中间件。各个部分的功能如下:Schduler:调度器,负责接受引擎发送过来的request,并按照一定的方式进行整理排

- scrapy 学习笔记

孤傲的天狼

爬虫scrapypython

1创建项目:$scrapystartprojectproject_name2创建蜘蛛在spiders文件夹下,创建一个文件,my_spiders.py3写蜘蛛:my_spiders.py文件下1创建类,继承scrapy的一个子类2定义一个蜘蛛名字,name="youname"3定义我们要爬取的网址4继承scrapy的方法:start_requests(selfimportscrapyfroms

- Scrapy爬虫框架学习笔记

pippaa

Python爬虫python数据挖掘

Scrapy爬虫框架结构为:5+2式结构,即5个主体和两个关键链用户只用编写spiders和itempipelines即可requests库适合爬取几个页面,scrapy适和批量爬取网站scrapy常用命令1,建立scrapy爬虫工程scrapystartprojectpython123demo2,在工程中产生一个爬虫输入一行命令,用genspider生成demo.pyscrapygenspide

- 2023scrapy教程,超详细(附案例)

TIO程序志

python开发语言

Scrapy教程文章目录Scrapy教程1.基础2.安装Windows安装方式3.创建项目4.各个文件的作用1.Spiders详细使用:2.items.py3.middlewares.py4.pipelines.py5.settings.py6.scrapy.cfg5.项目实现(爬取4399网页的游戏信息)1.基础ScrapyEngine(引擎):负责Spider、ItemPipeline、Dow

- Scrapy 使用教程

Lucky_JimSir

Pythonscrapy

1.使用Anaconda下载condainstallscrapy2.使用scrapy框架创建工程,或者是启动项目scrapystartproject工程名工程目录,下图是在pycharm下的工程目录这里的douban是我自己的项目名爬虫的代码都写在spiders目录下,spiders->testdouban.py是创建的其中一个爬虫的名称。1)、spiders文件夹:爬虫文件主目录2)、init.

- python爬虫框架scrapy基本使用

d34skip

安装scrapypipinstallscrapypipinstallpypiwin32(windows环境下需要安装)创建项目scrapystartproject[项目名称]使用命令创建爬虫(在spiders目录下执行)scrapygenspider[名字][域名]运行代码scrapycrawl[spiders目录下名称]项目结构1,item.py用来存放爬虫爬取下来数据的模型2,middlewa

- 使用scrapy爬虫出错:AttributeError: ‘AsyncioSelectorReactor‘ object has no attribute ‘_handleSignals‘

andux

出错修复scrapy爬虫

使用scrapy爬虫框架时出错:PSD:\Python\Project\爬虫基础\scrapy_01\scrapy_01\spiders>scrapycrawlappTraceback(mostrecentcalllast):File"",line88,in_run_codeFile"C:\Users\Administrator\AppData\Local\Programs\Python\Pyth

- scrapy项目入门指南

BatFor、布衣

爬虫python爬虫

Scrapy简介一种纯python实现的,基于twisted异步爬虫处理框架。优点基本组件概念Scrapy主要包含5大核心组件:引擎(scrapy)调度器(Scheduler)下载器(Downloader)爬虫(Spiders)项目管道(Pipeline)项目实践开发环境:win10+python3.6+scrapy2.4.11、项目创建首先进入CMD命令窗口,输入如下命令:scrapystart

- java封装继承多态等

麦田的设计者

javaeclipsejvmcencapsulatopn

最近一段时间看了很多的视频却忘记总结了,现在只能想到什么写什么了,希望能起到一个回忆巩固的作用。

1、final关键字

译为:最终的

&

- F5与集群的区别

bijian1013

weblogic集群F5

http请求配置不是通过集群,而是F5;集群是weblogic容器的,如果是ejb接口是通过集群。

F5同集群的差别,主要还是会话复制的问题,F5一把是分发http请求用的,因为http都是无状态的服务,无需关注会话问题,类似

- LeetCode[Math] - #7 Reverse Integer

Cwind

java题解MathLeetCodeAlgorithm

原题链接:#7 Reverse Integer

要求:

按位反转输入的数字

例1: 输入 x = 123, 返回 321

例2: 输入 x = -123, 返回 -321

难度:简单

分析:

对于一般情况,首先保存输入数字的符号,然后每次取输入的末位(x%10)作为输出的高位(result = result*10 + x%10)即可。但

- BufferedOutputStream

周凡杨

首先说一下这个大批量,是指有上千万的数据量。

例子:

有一张短信历史表,其数据有上千万条数据,要进行数据备份到文本文件,就是执行如下SQL然后将结果集写入到文件中!

select t.msisd

- linux下模拟按键输入和鼠标

被触发

linux

查看/dev/input/eventX是什么类型的事件, cat /proc/bus/input/devices

设备有着自己特殊的按键键码,我需要将一些标准的按键,比如0-9,X-Z等模拟成标准按键,比如KEY_0,KEY-Z等,所以需要用到按键 模拟,具体方法就是操作/dev/input/event1文件,向它写入个input_event结构体就可以模拟按键的输入了。

linux/in

- ContentProvider初体验

肆无忌惮_

ContentProvider

ContentProvider在安卓开发中非常重要。与Activity,Service,BroadcastReceiver并称安卓组件四大天王。

在android中的作用是用来对外共享数据。因为安卓程序的数据库文件存放在data/data/packagename里面,这里面的文件默认都是私有的,别的程序无法访问。

如果QQ游戏想访问手机QQ的帐号信息一键登录,那么就需要使用内容提供者COnte

- 关于Spring MVC项目(maven)中通过fileupload上传文件

843977358

mybatisspring mvc修改头像上传文件upload

Spring MVC 中通过fileupload上传文件,其中项目使用maven管理。

1.上传文件首先需要的是导入相关支持jar包:commons-fileupload.jar,commons-io.jar

因为我是用的maven管理项目,所以要在pom文件中配置(每个人的jar包位置根据实际情况定)

<!-- 文件上传 start by zhangyd-c --&g

- 使用svnkit api,纯java操作svn,实现svn提交,更新等操作

aigo

svnkit

原文:http://blog.csdn.net/hardwin/article/details/7963318

import java.io.File;

import org.apache.log4j.Logger;

import org.tmatesoft.svn.core.SVNCommitInfo;

import org.tmateso

- 对比浏览器,casperjs,httpclient的Header信息

alleni123

爬虫crawlerheader

@Override

protected void doGet(HttpServletRequest req, HttpServletResponse res) throws ServletException, IOException

{

String type=req.getParameter("type");

Enumeration es=re

- java.io操作 DataInputStream和DataOutputStream基本数据流

百合不是茶

java流

1,java中如果不保存整个对象,只保存类中的属性,那么我们可以使用本篇文章中的方法,如果要保存整个对象 先将类实例化 后面的文章将详细写到

2,DataInputStream 是java.io包中一个数据输入流允许应用程序以与机器无关方式从底层输入流中读取基本 Java 数据类型。应用程序可以使用数据输出流写入稍后由数据输入流读取的数据。

- 车辆保险理赔案例

bijian1013

车险

理赔案例:

一货运车,运输公司为车辆购买了机动车商业险和交强险,也买了安全生产责任险,运输一车烟花爆竹,在行驶途中发生爆炸,出现车毁、货损、司机亡、炸死一路人、炸毁一间民宅等惨剧,针对这几种情况,该如何赔付。

赔付建议和方案:

客户所买交强险在这里不起作用,因为交强险的赔付前提是:“机动车发生道路交通意外事故”;

如果是交通意外事故引发的爆炸,则优先适用交强险条款进行赔付,不足的部分由商业

- 学习Spring必学的Java基础知识(5)—注解

bijian1013

javaspring

文章来源:http://www.iteye.com/topic/1123823,整理在我的博客有两个目的:一个是原文确实很不错,通俗易懂,督促自已将博主的这一系列关于Spring文章都学完;另一个原因是为免原文被博主删除,在此记录,方便以后查找阅读。

有必要对

- 【Struts2一】Struts2 Hello World

bit1129

Hello world

Struts2 Hello World应用的基本步骤

创建Struts2的Hello World应用,包括如下几步:

1.配置web.xml

2.创建Action

3.创建struts.xml,配置Action

4.启动web server,通过浏览器访问

配置web.xml

<?xml version="1.0" encoding="

- 【Avro二】Avro RPC框架

bit1129

rpc

1. Avro RPC简介 1.1. RPC

RPC逻辑上分为二层,一是传输层,负责网络通信;二是协议层,将数据按照一定协议格式打包和解包

从序列化方式来看,Apache Thrift 和Google的Protocol Buffers和Avro应该是属于同一个级别的框架,都能跨语言,性能优秀,数据精简,但是Avro的动态模式(不用生成代码,而且性能很好)这个特点让人非常喜欢,比较适合R

- lua set get cookie

ronin47

lua cookie

lua:

local access_token = ngx.var.cookie_SGAccessToken

if access_token then

ngx.header["Set-Cookie"] = "SGAccessToken="..access_token.."; path=/;Max-Age=3000"

end

- java-打印不大于N的质数

bylijinnan

java

public class PrimeNumber {

/**

* 寻找不大于N的质数

*/

public static void main(String[] args) {

int n=100;

PrimeNumber pn=new PrimeNumber();

pn.printPrimeNumber(n);

System.out.print

- Spring源码学习-PropertyPlaceholderHelper

bylijinnan

javaspring

今天在看Spring 3.0.0.RELEASE的源码,发现PropertyPlaceholderHelper的一个bug

当时觉得奇怪,上网一搜,果然是个bug,不过早就有人发现了,且已经修复:

详见:

http://forum.spring.io/forum/spring-projects/container/88107-propertyplaceholderhelper-bug

- [逻辑与拓扑]布尔逻辑与拓扑结构的结合会产生什么?

comsci

拓扑

如果我们已经在一个工作流的节点中嵌入了可以进行逻辑推理的代码,那么成百上千个这样的节点如果组成一个拓扑网络,而这个网络是可以自动遍历的,非线性的拓扑计算模型和节点内部的布尔逻辑处理的结合,会产生什么样的结果呢?

是否可以形成一种新的模糊语言识别和处理模型呢? 大家有兴趣可以试试,用软件搞这些有个好处,就是花钱比较少,就算不成

- ITEYE 都换百度推广了

cuisuqiang

GoogleAdSense百度推广广告外快

以前ITEYE的广告都是谷歌的Google AdSense,现在都换成百度推广了。

为什么个人博客设置里面还是Google AdSense呢?

都知道Google AdSense不好申请,这在ITEYE上也不是讨论了一两天了,强烈建议ITEYE换掉Google AdSense。至少,用一个好申请的吧。

什么时候能从ITEYE上来点外快,哪怕少点

- 新浪微博技术架构分析

dalan_123

新浪微博架构

新浪微博在短短一年时间内从零发展到五千万用户,我们的基层架构也发展了几个版本。第一版就是是非常快的,我们可以非常快的实现我们的模块。我们看一下技术特点,微博这个产品从架构上来分析,它需要解决的是发表和订阅的问题。我们第一版采用的是推的消息模式,假如说我们一个明星用户他有10万个粉丝,那就是说用户发表一条微博的时候,我们把这个微博消息攒成10万份,这样就是很简单了,第一版的架构实际上就是这两行字。第

- 玩转ARP攻击

dcj3sjt126com

r

我写这片文章只是想让你明白深刻理解某一协议的好处。高手免看。如果有人利用这片文章所做的一切事情,盖不负责。 网上关于ARP的资料已经很多了,就不用我都说了。 用某一位高手的话来说,“我们能做的事情很多,唯一受限制的是我们的创造力和想象力”。 ARP也是如此。 以下讨论的机子有 一个要攻击的机子:10.5.4.178 硬件地址:52:54:4C:98

- PHP编码规范

dcj3sjt126com

编码规范

一、文件格式

1. 对于只含有 php 代码的文件,我们将在文件结尾处忽略掉 "?>" 。这是为了防止多余的空格或者其它字符影响到代码。例如:<?php$foo = 'foo';2. 缩进应该能够反映出代码的逻辑结果,尽量使用四个空格,禁止使用制表符TAB,因为这样能够保证有跨客户端编程器软件的灵活性。例

- linux 脱机管理(nohup)

eksliang

linux nohupnohup

脱机管理 nohup

转载请出自出处:http://eksliang.iteye.com/blog/2166699

nohup可以让你在脱机或者注销系统后,还能够让工作继续进行。他的语法如下

nohup [命令与参数] --在终端机前台工作

nohup [命令与参数] & --在终端机后台工作

但是这个命令需要注意的是,nohup并不支持bash的内置命令,所

- BusinessObjects Enterprise Java SDK

greemranqq

javaBOSAPCrystal Reports

最近项目用到oracle_ADF 从SAP/BO 上调用 水晶报表,资料比较少,我做一个简单的分享,给和我一样的新手 提供更多的便利。

首先,我是尝试用JAVA JSP 去访问的。

官方API:http://devlibrary.businessobjects.com/BusinessObjectsxi/en/en/BOE_SDK/boesdk_ja

- 系统负载剧变下的管控策略

iamzhongyong

高并发

假如目前的系统有100台机器,能够支撑每天1亿的点击量(这个就简单比喻一下),然后系统流量剧变了要,我如何应对,系统有那些策略可以处理,这里总结了一下之前的一些做法。

1、水平扩展

这个最容易理解,加机器,这样的话对于系统刚刚开始的伸缩性设计要求比较高,能够非常灵活的添加机器,来应对流量的变化。

2、系统分组

假如系统服务的业务不同,有优先级高的,有优先级低的,那就让不同的业务调用提前分组

- BitTorrent DHT 协议中文翻译

justjavac

bit

前言

做了一个磁力链接和BT种子的搜索引擎 {Magnet & Torrent},因此把 DHT 协议重新看了一遍。

BEP: 5Title: DHT ProtocolVersion: 3dec52cb3ae103ce22358e3894b31cad47a6f22bLast-Modified: Tue Apr 2 16:51:45 2013 -070

- Ubuntu下Java环境的搭建

macroli

java工作ubuntu

配置命令:

$sudo apt-get install ubuntu-restricted-extras

再运行如下命令:

$sudo apt-get install sun-java6-jdk

待安装完毕后选择默认Java.

$sudo update- alternatives --config java

安装过程提示选择,输入“2”即可,然后按回车键确定。

- js字符串转日期(兼容IE所有版本)

qiaolevip

TODateStringIE

/**

* 字符串转时间(yyyy-MM-dd HH:mm:ss)

* result (分钟)

*/

stringToDate : function(fDate){

var fullDate = fDate.split(" ")[0].split("-");

var fullTime = fDate.split("

- 【数据挖掘学习】关联规则算法Apriori的学习与SQL简单实现购物篮分析

superlxw1234

sql数据挖掘关联规则

关联规则挖掘用于寻找给定数据集中项之间的有趣的关联或相关关系。

关联规则揭示了数据项间的未知的依赖关系,根据所挖掘的关联关系,可以从一个数据对象的信息来推断另一个数据对象的信息。

例如购物篮分析。牛奶 ⇒ 面包 [支持度:3%,置信度:40%] 支持度3%:意味3%顾客同时购买牛奶和面包。 置信度40%:意味购买牛奶的顾客40%也购买面包。 规则的支持度和置信度是两个规则兴

- Spring 5.0 的系统需求,期待你的反馈

wiselyman

spring

Spring 5.0将在2016年发布。Spring5.0将支持JDK 9。

Spring 5.0的特性计划还在工作中,请保持关注,所以作者希望从使用者得到关于Spring 5.0系统需求方面的反馈。