图像增强序列——LIME: A Method for Low-light IMage Enhancement(LIME模型,2017CVPR)

1. 参考文献

2. 模型实现

% 论文: LIME: A Method for Low-light Image Enhancement

% 作者:Xiaojie Guo

% 链接:

% Author: HSW

% Date: 2018-04-27

clc;

close all;

clear;

addpath(genpath('removeHaze\'));

addpath(genpath('BM3D\'));

% img = imread('timg1.png');

img = imread('timg2.png');

% img = imread('timg3.png');

% img = imread('timg4.png');

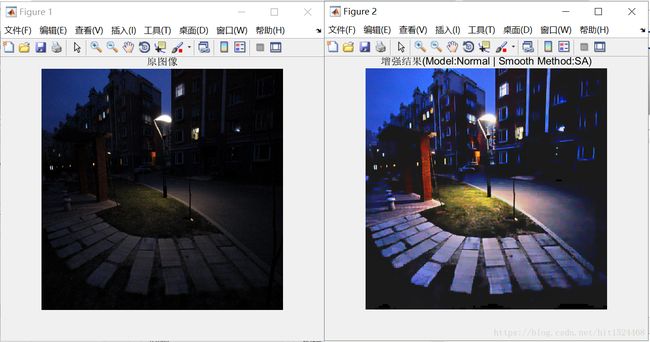

figure;

imshow(img, []);

title('原图像');

Model = 'Normal'; % 'Dehaze' / 'Normal'

method = 'SA';

denoiseFlag = 0; % = 0时表示不进行噪声处理; = 1时表示进行噪声处理

if size(img, 3) == 3

img_in = im2double(img);

img_out = Lime_enhance(img_in, Model, method, 0, 0);

figure;

imshow(img_out, []);

title(['增强结果(', 'Model:', Model, ' | Smooth Method:', method, ')']);

else

disp('LIME模型处理彩色图像');

end

2.1 LIME模型相关代码

function T = Illumination(L, method)

% Inputs:

% L:

% Outputs:

% T:

if strcmp(method, 'max_c') == 1

T = max(L, [], 3);

elseif strcmp(method, 'min_c') == 1

T = min(L, [], 3);

end

end

function T_out = Illumination_filter(T_in, method, ksize, sigma1, sigma2, alpha, sharpness, maxIter)

% Inputs:

% T_in:

% ksize: 窗口的大小为ksize x ksize

% method:

% sigma1:

% sigma2:

% alpha:

% sharpness:

% maxIter:

% Outputs:

% T_out:

%

% Author: HSW

% Date: 2018-04-27

%

if ~exist('method','var')

method = 'Max';

end

if strcmp(method, 'Max') == 1

if ~exist('ksize','var')

ksize = 5;

end

elseif strcmp(method, 'Mean') == 1

if ~exist('ksize','var')

ksize = 5;

end

elseif strcmp(method, 'BF') == 1

if ~exist('ksize','var')

ksize = 5;

end

if ~exist('sigma1','var')

sigma1 = 5;

end

if ~exist('sigma2','var')

sigma2 = 7;

end

elseif strcmp(method, 'TV') == 1

if ~exist('alpha','var')

alpha = 0.15;

end

if ~exist('maxIter','var')

maxIter = 50;

end

elseif strcmp(method, 'SA') == 1

if ~exist('alpha','var')

alpha = 0.15;

end

if ~exist('sigma1','var')

sigma1 = 5;

end

if ~exist('sharpness', 'var')

sharpness = 0.02;

end

if ~exist('maxIter','var')

maxIter = 4;

end

end

if strcmp(method, 'Max') == 1

% 局部极大值

T_out = filter_Max(T_in, ksize);

elseif strcmp(method, 'Mean') == 1

T_out = filter_Mean(T_in, ksize);

elseif strcmp(method, 'BF') == 1

% 双边滤波

T_out = filter_BF(T_in, ksize, sigma1, sigma2);

elseif strcmp(method, 'TV') == 1

T_out = filter_TV(T_in, alpha, maxIter);

elseif strcmp(method, 'SA') == 1

T_out = filter_SA(T_in, alpha, sigma1, sharpness, maxIter);

end

end

function T_out = filter_Max(T_in, ksize)

% Inputs:

% T_in:

% ksize:

% Outputs:

% T_out:

% Author: HSW

% Date: 2018-04-27

%

[m, n] = size(T_in);

hsize = floor(ksize / 2);

T_out = T_in;

for i = 1:m

for j = 1:n

patch = T_in(max(1, i - hsize):min(m, i + hsize), max(1, j - hsize):min(n, j + hsize));

T_out(i,j) = max(max(patch));

end

end

disp('run filter_max');

end

function T_out = filter_Mean(T_in, ksize)

% Inputs:

% T_in:

% ksize:

% Outputs:

% T_out:

% Author: HSW

% Date: 2018-04-27

%

[m, n] = size(T_in);

hsize = floor(ksize / 2);

T_out = T_in;

for i = 1:m

for j = 1:n

patch = T_in(max(1, i - hsize):min(m, i + hsize), max(1, j - hsize):min(n, j + hsize));

T_out(i,j) = mean(mean(patch));

end

end

disp('run filter_mean');

end

function T_out = filter_BF(T_in, ksize, sigma1, sigma2)

% Inputs:

% T_in:

% ksize:

% sigma1:

% sigma2:

% Outputs:

% T_out:

% Author: HSW

% Date: 2018-04-27

%

[m, n] = size(T_in);

hsize = floor(ksize / 2);

T_out = T_in;

xx = -hsize : hsize;

yy = -hsize : hsize;

kernel1 = zeros(ksize, ksize);

for ii = 1:ksize

for jj = 1:ksize

kernel1(ii, jj) = exp(-(xx(ii) * yy(jj) / sigma1)^2);

end

end

kernel1 = kernel1 ./ sum(sum(kernel1)); % 归一化

for i = hsize + 1:m - hsize

for j = hsize + 1:n - hsize

kernel2 = exp(-((T_in(i - hsize : i + hsize, j - hsize:j + hsize) - repmat(T_in(i,j), ksize, ksize)) ./ sigma2).^2);

kernel2 = kernel2 ./ sum(sum(kernel2));

T_out(i,j) = sum(sum(kernel1 .* kernel2 .* T_in(i - hsize : i + hsize, j - hsize : j + hsize) ./ sum(sum(kernel1 .* kernel2))));

end

end

end

function T_out = filter_TV(T_in, alpha, maxIter)

% Inputs:

% T_in:

% alpha:

% maxIter:

% Outputs:

% T_out:

% Author: HSW

% Date: 2018-04-27

%

epsilon = 0.00001;

dt = 0.1;

J = T_in;

for iter = 1:maxIter

DfJx=J([2:end end],:)-J; %函数关于X的一阶偏导(向后差分)

DbJx=J-J([1 1:end-1],:); %函数关于X的一阶偏导(向前差分)

DfJy=J(:,[2:end end])-J; %函数关于Y的一阶偏导(向后差分)

DbJy=J-J(:,[1 1:end-1]); %函数关于Y的一阶偏导(向前差分)

TempDJx=(epsilon+DfJx.*DfJx+((sign(DfJy)+sign(DbJy)).*min(abs(DfJy),abs(DbJy))./2).^2).^(1/2);%求梯度的模

TempDJy=(epsilon+DfJy.*DfJy+((sign(DfJx)+sign(DbJx)).*min(abs(DfJx),abs(DbJx))./2).^2).^(1/2);

DivJx=DfJx./(TempDJx + (TempDJx == 0) * epsilon);

DivJy=DfJy./(TempDJy + (TempDJy == 0) * epsilon);

%求散度

Div=DivJx-DivJx([1 1:end-1],:)+DivJy-DivJy(:,[1 1:end-1]);

J= J + dt * alpha* Div - dt * (J-T_in); %产生迭代

end

T_out = J;

end

function T_out = filter_SA(T_in, alpha, sigma, sharpness, maxIter)

% Inputs:

% T_in:

% alpha:

% sigma:

% maxIter:

% sharpness:

% Outputs:

% T_out:

% Author: HSW

% Date: 2018-04-27

%

% 论文描述的解法没有完全理解,就按照论文中的文献[17]的迭代法进行实现,只是不迭代更新Wh 和 Wv

T_out = tsmooth(T_in, alpha, sigma, sharpness, maxIter);

end

function S = tsmooth(I,lambda,sigma,sharpness,maxIter)

% 参考代码: https://blog.csdn.net/songzitea/article/details/12851723#

if (~exist('lambda','var'))

lambda=0.01;

end

if (~exist('sigma','var'))

sigma=3.0;

end

if (~exist('sharpness','var'))

sharpness = 0.02;

end

if (~exist('maxIter','var'))

maxIter=4;

end

I = im2double(I);

x = I;

sigma_iter = sigma;

lambda = lambda/2.0;

dec=2.0;

[wx, wy] = computeTextureWeights(x, sigma_iter, sharpness); % 与文献[17]不同,文献[17]每次迭代都改变wx, wy

for iter = 1:maxIter

% [wx, wy] = computeTextureWeights(x, sigma_iter, sharpness);

x = solveLinearEquation(I, wx, wy, lambda);

sigma_iter = sigma_iter/dec;

if sigma_iter < 0.5

sigma_iter = 0.5;

end

end

S = x;

end

function [retx, rety] = computeTextureWeights(fin, sigma,sharpness)

fx = diff(fin,1,2);

fx = padarray(fx, [0 1 0], 'post');

fy = diff(fin,1,1);

fy = padarray(fy, [1 0 0], 'post');

vareps_s = sharpness;

vareps = 0.001;

wto = max(sum(sqrt(fx.^2+fy.^2),3)/size(fin,3),vareps_s).^(-1);

fbin = lpfilter(fin, sigma);

gfx = diff(fbin,1,2);

gfx = padarray(gfx, [0 1], 'post');

gfy = diff(fbin,1,1);

gfy = padarray(gfy, [1 0], 'post');

wtbx = max(sum(abs(gfx),3)/size(fin,3),vareps).^(-1);

wtby = max(sum(abs(gfy),3)/size(fin,3),vareps).^(-1);

retx = wtbx.*wto;

rety = wtby.*wto;

retx(:,end) = 0;

rety(end,:) = 0;

end

function ret = conv2_sep(im, sigma)

ksize = bitor(round(5*sigma),1);

g = fspecial('gaussian', [1,ksize], sigma);

ret = conv2(im,g,'same');

ret = conv2(ret,g','same');

end

function FBImg = lpfilter(FImg, sigma)

FBImg = FImg;

for ic = 1:size(FBImg,3)

FBImg(:,:,ic) = conv2_sep(FImg(:,:,ic), sigma);

end

end

function OUT = solveLinearEquation(IN, wx, wy, lambda)

[r,c,ch] = size(IN);

k = r*c;

dx = -lambda*wx(:);

dy = -lambda*wy(:);

B(:,1) = dx;

B(:,2) = dy;

d = [-r,-1];

A = spdiags(B,d,k,k);

e = dx;

w = padarray(dx, r, 'pre'); w = w(1:end-r);

s = dy;

n = padarray(dy, 1, 'pre'); n = n(1:end-1);

D = 1-(e+w+s+n);

A = A + A' + spdiags(D, 0, k, k);

if exist('ichol','builtin')

L = ichol(A,struct('michol','on'));

OUT = IN;

for ii=1:ch

tin = IN(:,:,ii);

[tout, flag] = pcg(A, tin(:),0.1,100, L, L');

OUT(:,:,ii) = reshape(tout, r, c);

end

else

OUT = IN;

for ii=1:ch

tin = IN(:,:,ii);

tout = A\tin(:);

OUT(:,:,ii) = reshape(tout, r, c);

end

end

end

function img_out = Lime_enhance(img_in, Model, method, denoiseFlag, displayFlag)

% Inputs:

% Model: 'Normal' 论文公式(1)模型, 'Dehaze'论文公式(2)模型

% method: 'Max' / 'Mean' / 'BF' / 'TV' / 'SA'

% Outputs:

% img_out:

%

epsilon = 0.0001;

[m, n, dims] = size(img_in);

if strcmp(Model, 'Normal') == 1

% 估计光照亮度

T = Illumination(img_in, 'max_c'); % 亮通道

if displayFlag

figure;

imshow(T,[]);

title('T');

end

% 亮通道光滑

smoothT = Illumination_filter(T, method);

if displayFlag

figure;

imshow(smoothT, []);

title('smoothT');

end

% gama变换

gama = 0.8;

gamaT = smoothT.^gama;

if displayFlag

figure;

imshow(gamaT, []);

title('gamaT');

end

% I = img_in ./ repmat((gamaT + (gamaT == 0) * epsilon), [1, 1, 3]);

I = 1 - ((ones([m, n, dims]) - img_in) - repmat(0.95 * (1 - gamaT), [1, 1, 3])) ./ repmat((gamaT + (gamaT == 0) * epsilon), [1, 1, 3]);

I(I < 0) = 0;

I(I > 1) = 1;

if displayFlag

figure;

imshow(I, []);

title('未除噪增强图像');

end

% 进行噪声处理

if denoiseFlag == 1

I_noise = uint8(I .* 255);

I_YCbCr = rgb2ycbcr(I_noise);

Y = I_YCbCr(:, :, 1);

maxY = max(max(Y));

minY = min(min(Y));

% BM3D去噪

Y_denoise = BM3D(Y, 0, 5, 1, 1);

maxY_denoise = max(max(Y_denoise));

minY_denoise = min(min(Y_denoise));

Y_denoise = (double(Y_denoise) - double(minY_denoise)) ./ double(maxY_denoise - minY_denoise) .* double(maxY - minY) + double(minY);

I_YCbCr(:, :, 1) = Y_denoise;

I_denoise = ycbcr2rgb(I_YCbCr);

I_denoise = double(I_denoise) ./ 255.;

% 图像融合

I = double(I) .* double(repmat(gamaT, [1, 1, 3])) + double(I_denoise) .* double(repmat((1 - gamaT), [1, 1, 3]));

end

elseif strcmp(Model, 'Dehaze') == 1

img_in = 1 - img_in;

ksize = 5;

[img_in, I, J, T_est, T, A] = removeHaze(img_in,ksize);

I = 1 - I;

end

img_out = I;

end2.2 RemoveHaze文件夹代码

function T_colored = colorTransmission( T )

%COLORTRANSMISSION Summary of this function goes here

% Detailed explanation goes here

t = size(T);

T_colored = zeros(t(1), t(2), 3);

maxT = max(max(max(T)));

minT = min(min(min(T)));

avg = (maxT + minT)/2;

T_colored(:,:,1) = T > ( maxT - avg/2 );

T_colored(:,:,2) = ((minT+avg/2) < T) .* (T < (maxT-avg/2));

T_colored(:,:,3) = T < minT+avg/2;

end

function A = estimateA( I, J, numPixels )

%ESTIMATEA Summary of this function goes here

% Detailed explanation goes here

% Make a list of the brightest pixels

brightestJ = zeros(numPixels,3);

[x_dim y_dim] = size(J);

for i = 1:x_dim

for j = 1:y_dim

[minElement, index] = min(brightestJ(:,3));

if J(i,j) > minElement

brightestJ(index,:) = [i j J(i,j)];

end

end

end

% Find the highest intensity pixel from the original Image using the

% list calculated above

highestIntensity = zeros(1,3);

for i = 1:numPixels

x = brightestJ(i,1);

y = brightestJ(i,2);

intensity = sum(I(x,y,:));

if intensity > sum(highestIntensity)

highestIntensity = I(x,y,:);

end

end

% Set as the Atmosphere lighting

dimI = size(I);

if numel(dimI) == 3

A = zeros(x_dim,y_dim,3);

for a = 1:dimI(3)

A(:,:,a) = A(:,:,a) + highestIntensity(:,:,a);

end

else

A = zeros(x_dim,y_dim);

A(:,:) = A(:,:) + highestIntensity(:,:);

end

end

function [ T ] = generateLaplacian( I, T_est)

%GENERATELAPLACIAN2 Summary of this function goes here

% Detailed explanation goes here

dimI = size(I);

% Taking a box around the pixel

win_size = 1;

win_pixels = 9;

% As per equation in paper when computing the laplacian

% U is to be added to the window covariance

U = .000001 ./win_pixels.*eye(3);

windowIndicies = 1:dimI(1)*dimI(2);

windowIndicies = reshape(windowIndicies,dimI(1),dimI(2));

totalElements = win_pixels^2 * ( dimI(1) - 2 ) * ( dimI(2) - 2 );

indicies_x = ones(1,totalElements);

indicies_y = ones(1,totalElements);

elements = zeros(1,totalElements);

count = 0;

for i = (1+win_size):(dimI(2)-win_size)

for j = (1+win_size):(dimI(1)-win_size)

% Get the window around i and j

rangeI = i-win_size:i+win_size;

rangeJ = j-win_size:j+win_size;

window = I(rangeJ, rangeI,:);

% Convert to a vector

% each column representing a color

window_vector = reshape(window,win_pixels,3);

% Calculate the mean and difference

window_mean = mean(window_vector)';

diff = window_vector' - repmat(window_mean,1,win_pixels);

% both methods of computing covariant produce the same results

window_covariance = (diff*diff'/win_pixels)+U;

%window_covariance = (window_vector'*window_vector/win_pixels - window_mean*window_mean')+U;

% Compute the elements in the L matrix

% easier to just store these in a spares matrix

L_element = eye(win_pixels) - (1 + diff' * inv(window_covariance) * diff) ./ win_pixels;

L_element = (L_element(:))'; % reshape it to a single vector

% Calculate the cordinates in the L matrix that we are dealing

% with. [ coordinates required in a sparse matrix ]

% Step 1. Get the indicies of the current window

window_indicies = reshape(windowIndicies(rangeJ,rangeI),1,win_pixels);

% Step 2. Create all combinations of pixels

x = repmat(window_indicies,win_pixels,1);

y = x';

% reformat combination of pixels

x = (x(:))';

y = (y(:))';

indicies_x((count*(win_pixels^2) + 1):(count*(win_pixels^2)+(win_pixels^2))) = x;

indicies_y((count*(win_pixels^2) + 1):(count*(win_pixels^2)+(win_pixels^2))) = y;

elements((count*(win_pixels^2) + 1):(count*(win_pixels^2)+(win_pixels^2))) = L_element;

count = count + 1;

end

end

L = sparse(indicies_x,indicies_y,elements,dimI(1)*dimI(2),dimI(1)*dimI(2));

T = (L + .0001 .* speye(size(L))) \ T_est(:) .* .0001;

T = reshape(T, size(T_est));

end

function J = makeDarkChannel( I, patch_size )

% Assuming that this is RGB but overall not requiring it

[image_x image_y channels] = size(I);

J = zeros(image_x,image_y);

tmpPatch = double(zeros(2*floor(patch_size/2),2*floor(patch_size/2),channels));

I = padarray(I, [floor(patch_size/2) floor(patch_size/2)], 'symmetric');

% I think the size actually returns in order [y x ~ but doesn't really

% matter as long as order is kept

% padarray resizes the example 300x400 to 314x414.

% Use original image_x, image_y and add 2*floor(patch_size/2)

for i = 1:image_x

minX = i;

maxX = (i + 2*floor(patch_size/2));

for j = 1:image_y

minY = j;

maxY = (j + 2*floor(patch_size/2));

% copy all color channels over

tmpPatch = I(minX:maxX, minY:maxY,:);

J(i,j) = min(tmpPatch(:)); % find min across all channels

end

end

end

%function [I J T A L] = removeHaze( imageName, patch_size )

function [I I_out J T_est T A] = removeHaze( I, patch_size )

% Used to make image more realistic due to seeming unbelievable

% when there is no sense of depth

aerialPerspective = 0.95;

% Make grayscales to color

if numel(size(I)) == 2

[x y] = size(I);

tmpI = zeros(x,y,3);

for c = 1:3

tmpI(:,:,c) = I;

end

I = tmpI;

end

J = makeDarkChannel(I,patch_size);

% Section 4.4

% Estimate Atmosphere

% We first pick the top 0.1% bright- est pixels in the dark channel.

% These pixels are most haze- opaque (bounded by yellow lines in

% Figure 6(b)). Among these pixels, the pixels with highest intensity

% in the input image I is selected as the atmospheric light.

% TL;DR TAKE .1% of the brightest pixels

dimJ = size(J);

numBrightestPixels = ceil(0.001 * dimJ(1) * dimJ(2)); % Use the cieling to overestimate number needed

A = estimateA(I,J,numBrightestPixels);

% Section 4.1

% Estimate the Transmission Equation 12

T_est = 1 - aerialPerspective*makeDarkChannel(I./A,patch_size);

[T] = generateLaplacian(I,T_est);

dehazed = zeros(size(I));

% Equation 16

for c = 1:3

dehazed(:,:,c) = (I(:,:,c) - A(:,:,c))./(max(T, .1)) + A(:,:,c);

end

I_out = dehazed;

beep

end

2.3 BM3D文件夹相关代码

function img_out = BM3D(img_in, tran_mode, sigma, displayMidResult, displayProcess)

% 参考文献: 《Image denoising by sparse 3D transform-domain collaborative filtering》

% 《An Analysis and Implementation of the BM3D Image Denoising Method》

% Inputs:

% img_in: 噪声图像,必须为矩形方阵

% tran_mode: = 0, fft; = 1, dct; = 2, dwt, = 3, db1

% displayMidResult: 0/1

% displayProcess: 0/1

% Outputs:

% img_out: 去噪图像

% 代码下载链接: http://www.pudn.com/Download/item/id/2703664.html

%

%

if ~exist('tran_mode', 'var')

tran_mode = 0;

end

if ~exist('sigma', 'var')

sigma = 10;

end

if ~exist('displyaMidResult', 'var')

displayMidResult = 0;

end

if ~exist('displayProcess', 'var')

displayProcess = 0;

end

img_noise = img_in;

[row,col] = size(img_noise);

tic

% first step

kHard=8; % 块大小

pHard=4; % 块移动间隔

lambda_distHard=0;% 求相似的距离时,变换后,收缩的阈值

nHard=40; % 搜索窗口大小

NHard=28; % 最多相似块个数

tauHard=5000; % 最大的相似距离for fft

beta=2;

% tauHard=50000;% for dct

if(tran_mode==0) %fft

lambda2d=400;

lambda1d=500;

lambda2d_wie=50;

lambda1d_wie=500;

elseif(tran_mode == 1) %dct

lambda2d=50;

lambda1d=80;

lambda2d_wie=20;

lambda1d_wie=60;

elseif(tran_mode == 2) %dwt

lambda2d=50;

lambda1d=80;

lambda2d_wie=20;

lambda1d_wie=60;

end

%kaiser 窗,实际代码中可能没有用到。

kaiser_win=kaiser(kHard,1)*kaiser(kHard,1)';

%图像分块,并且做变换,为找相似块做准备

[block,tran_block,block2row_idx,block2col_idx]=im2block(img_noise,kHard,pHard,lambda_distHard,0);

% block number row:行方向上的 块的个数

bn_r=floor((row-kHard)/pHard)+1;

bn_c=floor((col-kHard)/pHard)+1;

%基础估计的图像

img_basic_sum=zeros(row,col);

img_basic_weight=zeros(row,col);

%显示处理进度

is_disp_process = displayProcess;

process_step_total = bn_r/10;

process_step_cnt = 0;

%基础估计

fprintf('BM3D: First Stage Start...\n');

%对每个块遍历

for i=1:1:bn_r

if((is_disp_process) &&(i>process_step_total))

process_step_total=process_step_total+bn_r/10;

process_step_cnt=process_step_cnt+1;

fprintf(' process:%d/10\n',process_step_cnt)

end

for j=1:1:bn_c

[sim_blk,sim_num,sim_blk_idx]=search_similar_block(i,j,block,tran_block,kHard,floor(nHard/pHard),...

bn_r,bn_c,tauHard,NHard);

%toc

% test fine_similar block

num_sim=size(sim_blk_idx,3);

%tic

%做3D变换,并且用阈值收缩

tran3d_blk_shrink=transform_3d(sim_blk,tran_mode,lambda2d,lambda1d);

%toc

NHard_P=nnz(tran3d_blk_shrink);%non_zero_num

if(NHard_P >1)

wHard_P=1/NHard_P;

else

wHard_P=1;

end

Wwin2D= kaiser(kHard, beta) * kaiser(kHard, beta)'; % Kaiser window used in the hard-thresholding part

wWien_P=Wwin2D*wHard_P;

%wHard_P=wHard_P*kaiser_win;% 要不要?

%tic

% 3D逆变换

blk_est=inv_transform_3d(tran3d_blk_shrink,tran_mode);

%pause

%可能是复数,虚部为0或接近于0,所以只取实部

%max(abs(imag(blk_est(:))))

blk_est=real(blk_est);

%toc

%tic

for k=1:sim_num

idx=sim_blk_idx(k);

ir=block2row_idx(idx);

jr=block2col_idx(idx);

%实在算不清了。利用提前保存好 块索引和左上角坐标的 对应关系

%ir=floor((idx-1)*pHard/col)+1;

%jr=(idx-1)*pHard-(i-1)*col+1;

img_basic_sum(ir:ir+kHard-1,jr:jr+kHard-1)=...

img_basic_sum(ir:ir+kHard-1,jr:jr+kHard-1)+wHard_P*blk_est(:,:,k);

img_basic_weight(ir:ir+kHard-1,jr:jr+kHard-1)=...

img_basic_weight(ir:ir+kHard-1,jr:jr+kHard-1)+wHard_P;

end

%toc

%pause

end

end

fprintf('BM3D: First Stage End...\n');

img_basic=img_basic_sum./img_basic_weight;

if displayMidResult

figure;

imshow(img_basic,[]);

title('BM3D:Fist Stage Result');

% psnr=20*log10(255/sqrt(mean((img_basic(:)-img(:)).^2)))

end

% second step

kWien=kHard;

pWien=pHard;

lambda_distWien=lambda_distHard;

nWien=nHard;%搜索窗口大小

NWien=NHard;%最多相似块个数

tauWien=tauHard;

sigma2=sigma*sigma;

[block_basic,tran_block_basic,block2row_idx_basic,block2col_idx_basic]=im2block(img_basic,kWien,pWien,lambda_distWien,0);

bn_r=floor((row-kWien)/pWien)+1;

bn_c=floor((col-kWien)/pWien)+1;

img_wien_sum=zeros(row,col);

img_wien_weight=zeros(row,col);

process_step_total=bn_r/10;

process_step_cnt=0;

fprintf('BM3D: Second Stage Start...\n');

for i=1:1:bn_r

if((is_disp_process) &&(i>process_step_total))

process_step_total=process_step_total+bn_r/10;

process_step_cnt=process_step_cnt+1;

fprintf(' process:%d/10\n',process_step_cnt)

end

for j=1:1:bn_c

[sim_blk_basic,sim_num,sim_blk_basic_idx]=search_similar_block(i,j,block_basic,tran_block_basic,kWien,floor(nWien/pWien),...

bn_r,bn_c,tauWien,NWien);

%对基础块进行3D变换,求得omega_P.

tran3d_blk_basic=transform_3d(sim_blk_basic,tran_mode,lambda2d_wie,lambda1d_wie);

omega_P=(tran3d_blk_basic.^2)./((tran3d_blk_basic.^2)+sigma2);

%用 基础块得到的相似块的索引,来找到 噪声块的相似块,并进行3D变换

tran3d_blk=transform_3d(block(:,:,sim_blk_basic_idx),tran_mode,lambda2d_wie,lambda1d_wie);

blk_est=inv_transform_3d(omega_P.*tran3d_blk,tran_mode);

%可能是复数,虚部为0或接近于0,所以只取实部

%max(abs(imag(blk_est(:))))

blk_est=real(blk_est);

NWien_P=nnz(omega_P); %IPOL文中8式中矩阵范数?应该如何求??

if(NWien_P >1)

wWien_P=1/(NWien_P);

else

wWien_P=1;

end

% wWien_P=wWien_P/sigma2;

for k=1:sim_num

idx=sim_blk_basic_idx(k);

ir=block2row_idx_basic(idx);

jr=block2col_idx_basic(idx);

img_wien_sum(ir:ir+kWien-1,jr:jr+kWien-1)=...

img_wien_sum(ir:ir+kWien-1,jr:jr+kWien-1)+wWien_P*blk_est(:,:,k);

img_wien_weight(ir:ir+kWien-1,jr:jr+kWien-1)=...

img_wien_weight(ir:ir+kWien-1,jr:jr+kWien-1)+wWien_P;

end

end

end

fprintf('BM3D: Second Stage End\n');

img_wien=img_wien_sum./img_wien_weight;

if displayMidResult

figure;

imshow(img_wien,[]);

title('BM3D: 去噪结果');

% psnr=20*log10(255/sqrt(mean((img_wien(:)-img(:)).^2)))

end

img_out = img_wien;

toc% 图像分块,并且做变换,为找相似块做准备

% k:块大小,p:块移动步长,lambda2D,delta 收缩阈值

% block 返回的块,transform_block 变换后的块

% block2row_idx,block2col_idx 为保存的 块索引 与 块左上角在图像中坐标的 对应关系

function [block,transform_block,block2row_idx,block2col_idx] =im2block(img,k,p,lambda2D,delta)

[row,col]=size(img);

%这个阈值该用什么公式呢??

thres=lambda2D*delta*sqrt(2*log(row*col));

% r_num:行方向 上 应该有 多少个块

r_num=floor((row-k)/p)+1;

c_num=floor((col-k)/p)+1;

block=zeros(k,k,r_num*c_num);

block2row_idx=[];

block2col_idx=[];

cnt=1;

for i=0:1:r_num-1

rs=1+i*p;

for j=0:1:c_num-1

cs=1+j*p;

block(:,:,cnt)=img(rs:rs+k-1,cs:cs+k-1);

block2row_idx(cnt)=rs;

block2col_idx(cnt)=cs;

%该用什么变换呢??

tr_b=fft2(block(:,:,cnt));

% tr_b=dct2(block(:,:,cnt));

idx=find(abs(tr_b) % 3D逆变换

function [blk_est]=inv_transform_3d(blk_tran3d,tran_mode)

global blk_tran1d_s;

global blk_2d_s;

[m,n,blk_num]=size(blk_tran3d);

blk_invtran1d=zeros(m,n,blk_num);

blk_est=zeros(m,n,blk_num);

if(tran_mode==0) %fft

for i=1:1:m

for j=1:1:n

blk_invtran1d(i,j,:)=ifft(blk_tran3d(i,j,:));

end

end

for i=1:1:blk_num

blk_est(:,:,i)=ifft2(blk_invtran1d(:,:,i));

end

elseif(tran_mode==1) %dct

for i=1:1:m

for j=1:1:n

blk_invtran1d(i,j,:)=idct(blk_tran3d(i,j,:));

end

end

for i=1:1:blk_num

blk_est(:,:,i)=idct2(blk_invtran1d(:,:,i));

end

elseif(tran_mode==2) %dwt

blk_num=length(blk_2d_s);

blk_c=waverec2(blk_tran3d,blk_tran1d_s,'haar');

blk_est=[];

for i=1:1:blk_num

blk_est(:,:,i)=waverec2(blk_c(:,i),blk_2d_s{i},'Bior1.5');

end

else

error('tran_mode error');

end% 搜索相似块

% ik,jk: 块的左上角 坐标。

%bn_r: block_num_row,分块之后,沿着行的方向上有多少个块。

%用 tran_block 来算距离,得到的sim_blk 却是来自block.

%np: 搜索窗口的大小/块移动步距。

% tau: 最大相似距离

% max_sim_num: 最多相似块 个数

function [sim_blk,sim_num,sim_blk_idx]=search_similar_block(ik,jk,block,tran_block,k,np,bn_r,bn_c,tau,max_sim_num)

% 搜索窗口的 左上角 和 右下角 的块的坐标。s,e:start,end.

in_s=max(ik-floor(np/2),1);

jn_s=max(jk-floor(np/2),1);

in_e=min(ik+floor(np/2),bn_r);

jn_e=min(jk+floor(np/2),bn_c);

% 当前参考块

ref_blk=tran_block(:,:,((ik-1)*bn_c+jk));

k2=k*k;

cnt=0;

%dist=[];

%blk_idx=[];

%如果参考块在图像的边缘,那它周围的搜索区域就不会是完整的n*n

%所以不能单纯的由cnt 反推出idx.

%for ii=in_s:1:in_e

% for jj=jn_s:1:jn_e

% cnt=cnt+1;

% %idx=ii*bn_c+jj;

% idx=(ii-1)*bn_c+(jj-1)+1;

% cur_blk=tran_block(:,:,idx);

% blk_idx(cnt)=idx;

% dist(cnt)=norm(cur_blk-ref_blk);% 只是比较大小,就没必要归一化了 /k2;

% end

%end

%下面找相似块的方法要比上面的快一些

ii=in_s:1:in_e;

jj=jn_s:1:jn_e;

[II,JJ]=meshgrid(ii,jj);

IDX=(II-1)*bn_c+JJ;

blk_idx=IDX(:);

cur_blk=tran_block(:,:,blk_idx);

cnt=size(cur_blk,3);

ref_blk_mat=repmat(ref_blk,[1,1,cnt]);

% shit! 要不要用norm ? 奇异值能衡量矩阵的相似程度?

% norm(cur_blk-ref_blk_mat);

delta_blk=cur_blk-ref_blk_mat;

dist=sum(sum(delta_blk.*delta_blk,1),2);

% 排序,找到最相似的块

[dist_sort,dist_idx]=sort(dist);

max_num=min(cnt,max_sim_num);%可能当前块中个数还没有max_sim_num多

if(dist_sort(max_num) % 阈值收缩,abs小于thres的 赋值 0

function [val]=thres_shrink(data,thres)

val=data;

idx=find(abs(data)% 3D变换,先进行 2D变换,用lambda2d阈值收缩,然后进行1D变换,

% lambda1d 阈值收缩。

function blk_tran3d=transform_3d(blk_3d,tran_mode,lambda2d,lambda1d)

global blk_tran1d_s;

global blk_2d_s;

[m,n,blk_num]=size(blk_3d);

%变换不同时,可能需要修改??

blk_2d_shrink=zeros(m,n,blk_num);

blk_1d_shrink=zeros(m,n,blk_num);

%(strcmp(tran_mode,'fft'))

if(tran_mode==0) %fft

for i=1:1:blk_num

blk_tran2d=fft2(blk_3d(:,:,i));

blk_2d_shrink(:,:,i)=thres_shrink(blk_tran2d,lambda2d);

end

for i=1:1:m

for j=1:1:n

blk_tran1d=fft(blk_2d_shrink(i,j,:));

blk_1d_shrink(i,j,:)=thres_shrink(blk_tran1d,lambda1d);

end

end

blk_tran3d=blk_1d_shrink;

%test 这是为了测试 还能否反变换回来。

%blk_invtran1d=zeros(m,n,blk_num);

%blk_est=zeros(m,n,blk_num);

%for i=1:1:m

% for j=1:1:n

% blk_invtran1d(i,j,:)=ifft(blk_tran3d(i,j,:));

% end

%end

%for i=1:1:blk_num

% blk_est(:,:,i)=ifft2(blk_invtran1d(:,:,i));

%end

elseif(tran_mode==1) %dct

for i=1:1:blk_num

blk_tran2d=dct2(blk_3d(:,:,i));

blk_2d_shrink(:,:,i)=thres_shrink(blk_tran2d,lambda2d);

end

for i=1:1:m

for j=1:1:n

blk_tran1d=dct(blk_2d_shrink(i,j,:));

blk_1d_shrink(i,j,:)=thres_shrink(blk_tran1d,lambda1d);

end

end

blk_tran3d=blk_1d_shrink;

elseif(tran_mode==2) %dwt

blk_2d_s={};

blk_2d_shrink=[];%zeros()

for i=1:1:blk_num

[blk_tran2d_c,blk_tran2d_s]=wavedec2(blk_3d(:,:,i),2,'Bior1.5');

blk_2d_shrink(:,i)=thres_shrink(blk_tran2d_c,lambda2d);

blk_2d_s{i}=blk_tran2d_s;

end

%这里应该用 wavedec.因为是对1维??

[blk_tran1d_c,blk_tran1d_s]=wavedec2(blk_2d_shrink,1,'haar');

blk_tran3d=thres_shrink(blk_tran1d_c,lambda1d);

% elseif(strcmp(tran_mode,'db1')) %还未实现

% blk_2d_s={};

% blk_2d_shrink=[];%zeros()

% for i=1:1:blk_num

% [blk_tran2d_cA,blk_tran2d_cH,blk_tran2d_cV,blk_tran2d_cD]=...

% dwt2(blk_3d(:,:,i),'db1');

% blk_2d_shrink(:,i)=thres_shrink(blk_tran2d_c,lambda2d);

% blk_2d_s{i}=blk_tran2d_s;

% end

% [blk_tran1d_c,blk_tran1d_s]=wavedec2(blk_2d_shrink,1,'haar');

% blk_tran3d=thres_shrink(blk_tran1d_c,lambda1d);

else

error('tran_mode error');

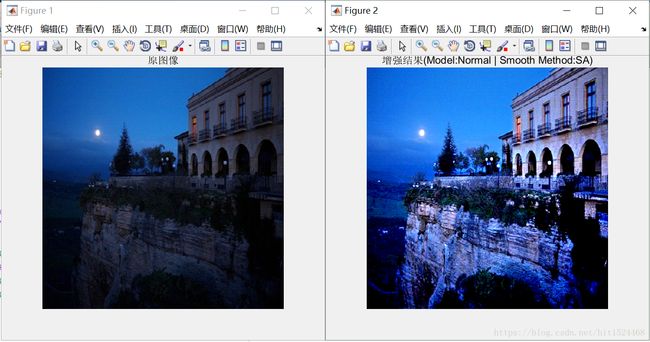

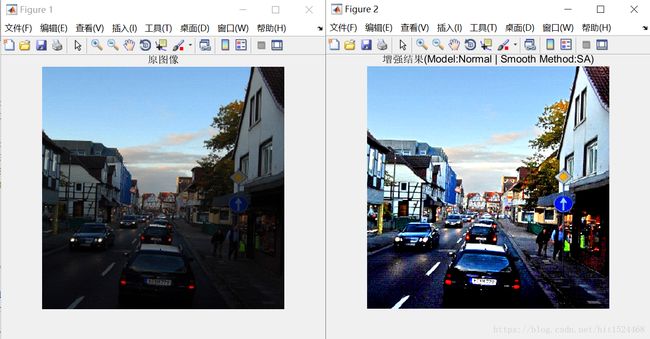

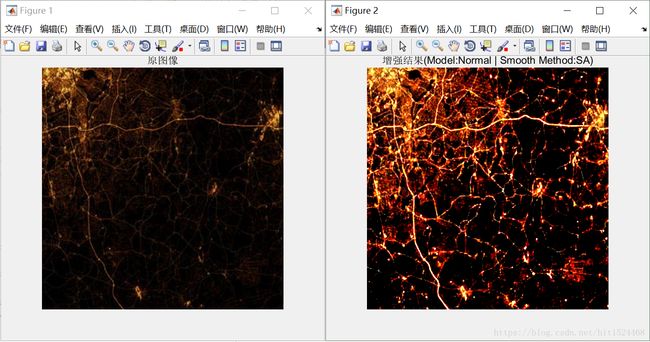

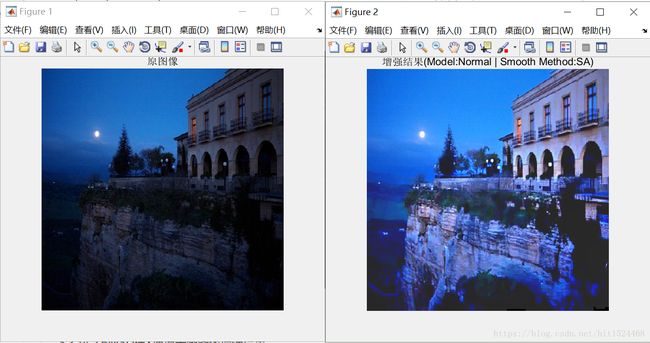

end3. 模型效果

3.1 不包含BM3D在Y通道去噪图像增强结果

3.2 包含BM3D在Y通道去噪图像增强结果