LongAdder原理解析

一般都是CAS对一个变量进行操作,但Doug Lea大神觉得不满足,又写了一个LongAdder

先看下传统的

AtomicLong的原理.png

再来看下LongAdder的

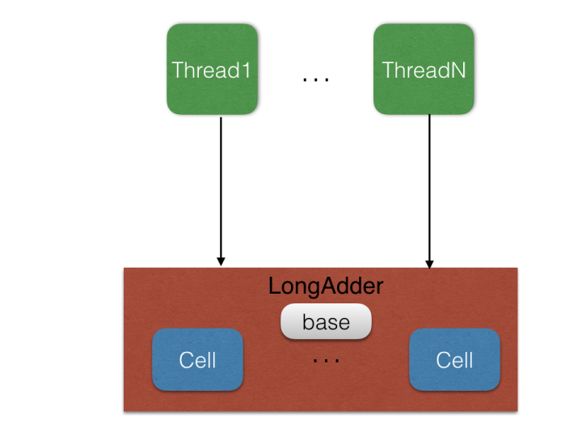

LongAdder原理图.png

即将一个变量进一步拆分到一个base数组中,减少资源竞争

@sun.misc.Contended static final class Cell {

volatile long value;

Cell(long x) { value = x; }

final boolean cas(long cmp, long val) {

return UNSAFE.compareAndSwapLong(this, valueOffset, cmp, val);

}

// Unsafe mechanics

private static final sun.misc.Unsafe UNSAFE;

private static final long valueOffset;

static {

try {

UNSAFE = sun.misc.Unsafe.getUnsafe();

Class ak = Cell.class;

valueOffset = UNSAFE.objectFieldOffset

(ak.getDeclaredField("value"));

} catch (Exception e) {

throw new Error(e);

}

}

}

- 类似于AtomicLong,利用CAS来更新变量

- @Contended避免value伪共享

将多个cell数组中的值加起来的和就类似于AtomicLong中的value

public long sum() {

Cell[] as = cells; Cell a;

long sum = base;

if (as != null) {

for (int i = 0; i < as.length; ++i) {

if ((a = as[i]) != null)

sum += a.value;

}

}

return sum;

}

increment()的调用链

java.util.concurrent.atomic.LongAdder.increment

->java.util.concurrent.atomic.LongAdder.add

多个线程操作的时候.png

add()方法如下

public void add(long x) {

Cell[] as; long b, v; int m; Cell a;

if ((as = cells) != null || !casBase(b = base, b + x)) {

boolean uncontended = true;

if (as == null || (m = as.length - 1) < 0 ||

(a = as[getProbe() & m]) == null ||

!(uncontended = a.cas(v = a.value, v + x)))

longAccumulate(x, null, uncontended);

}

}

第一次Cell数组为空,进入casBase()

final boolean casBase(long cmp, long val) {

return UNSAFE.compareAndSwapLong(this, BASE, cmp, val);

}

即原子更新,成功则直接返回,失败则说明出现并发了

if的三个判断

- 数组为空

- 或者数组长度小于1

- 或者位置上没有Cell对象,即getProbe()&m其实相当于hashMap里面的tab[i = (n - 1) & hash]

- 或者修改cell的值失败

才会最终进入到longAccumulate()方法中

小结

- 如果Cells表为空,尝试用CAS更新base字段,成功则退出;

- 如果Cells表为空,CAS更新base字段失败,出现竞争,uncontended为true,调用longAccumulate;

- 如果Cells表非空,但当前线程映射的槽为空,uncontended为true,调用longAccumulate;

- 如果Cells表非空,且前线程映射的槽非空,CAS更新Cell的值,成功则返回,否则,uncontended设为false,调用longAccumulate。

看到这里大概应该知道为什么LongAdder会比AtomicLong更高效了,没错,唯一会制约AtomicLong高效的原因是高并发,高并发意味着CAS的失败几率更高, 重试次数更多,越多线程重试,CAS失败几率又越高,变成恶性循环,AtomicLong效率降低。 那怎么解决? LongAdder给了我们一个非常容易想到的解决方案:减少并发,将单一value的更新压力分担到多个value中去,降低单个value的 “热度”,分段更新!!!

这样,线程数再多也会分担到多个value上去更新,只需要增加value就可以降低 value的 “热度” AtomicLong中的 恶性循环不就解决了吗? cells 就是这个 “段” cell中的value 就是存放更新值的, 这样,当我需要总数时,把cells 中的value都累加一下不就可以了么!!

在看看add方法中的代码,casBase方法可不可以不要,直接分段更新,上来就计算 索引位置,然后更新value?

不是不行,而是有所考虑的,因为,casBase操作等价于AtomicLong中的CAS操作,要知道,LongAdder这样的处理方式是有坏处的,分段操作必然带来空间上的浪费,可以空间换时间,但是,能不换就不换,空间时间都节约.

casBase操作保证了在低并发时,不会立即进入分支做分段更新操作,因为低并发时,casBase操作基本都会成功,只有并发高到一定程度了,才会进入分支

longAccumulate()方法如下

final void longAccumulate(long x, LongBinaryOperator fn,

boolean wasUncontended) {

int h;

if ((h = getProbe()) == 0) {

ThreadLocalRandom.current(); // force initialization

h = getProbe();

wasUncontended = true;

}

boolean collide = false; // True if last slot nonempty

for (;;) {

Cell[] as; Cell a; int n; long v;

if ((as = cells) != null && (n = as.length) > 0) {

if ((a = as[(n - 1) & h]) == null) {

if (cellsBusy == 0) { // Try to attach new Cell

Cell r = new Cell(x); // Optimistically create

if (cellsBusy == 0 && casCellsBusy()) {

boolean created = false;

try { // Recheck under lock

Cell[] rs; int m, j;

if ((rs = cells) != null &&

(m = rs.length) > 0 &&

rs[j = (m - 1) & h] == null) {

rs[j] = r;

created = true;

}

} finally {

cellsBusy = 0;

}

if (created)

break;

continue; // Slot is now non-empty

}

}

collide = false;

}

else if (!wasUncontended) // CAS already known to fail

wasUncontended = true; // Continue after rehash

else if (a.cas(v = a.value, ((fn == null) ? v + x :

fn.applyAsLong(v, x))))

break;

else if (n >= NCPU || cells != as)

collide = false; // At max size or stale

else if (!collide)

collide = true;

else if (cellsBusy == 0 && casCellsBusy()) {

try {

if (cells == as) { // Expand table unless stale

Cell[] rs = new Cell[n << 1];

for (int i = 0; i < n; ++i)

rs[i] = as[i];

cells = rs;

}

} finally {

cellsBusy = 0;

}

collide = false;

continue; // Retry with expanded table

}

h = advanceProbe(h);

}

else if (cellsBusy == 0 && cells == as && casCellsBusy()) {

boolean init = false;

try { // Initialize table

if (cells == as) {

Cell[] rs = new Cell[2];

rs[h & 1] = new Cell(x);

cells = rs;

init = true;

}

} finally {

cellsBusy = 0;

}

if (init)

break;

}

else if (casBase(v = base, ((fn == null) ? v + x :

fn.applyAsLong(v, x))))

break; // Fall back on using base

}

}

longAccumulate.png

- 如果Cells表为空,尝试获取锁之后初始化表(初始大小为2);

- 如果Cells表非空,对应的Cell为空,自旋锁未被占用,尝试获取锁,添加新的Cell;

- 如果Cells表非空,找到线程对应的Cell,尝试通过CAS更新该值;

- 如果Cells表非空,线程对应的Cell CAS更新失败,说明存在竞争,尝试获取自旋锁之后扩容,将cells数组扩大,降低每个cell的并发量后再试