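- Free GPT tools you can use directly online (bookmark with one click)

kkai人工智能

gpt

1. LuminAI (https://kk.zlrxjh.top): LuminAI is an advanced knowledge-enhanced language processing system that combines the Xingchen (星辰) big-data model with the Wenmai (文脉) deep model, aimed at technical development in natural language processing (NLP). The system shows strong semantic understanding and content generation and handles a wide variety of NLP tasks with ease. VisionAI has broad applications in NLP, covering machine translation, text summarization, sentiment analysis, question answering, and many other tasks. Through large amounts of text data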

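- One hardware component a day: the CPU

哲伦贼稳妥

One hardware component a day, IT, computer hardware, computer maintenance, hardware engineering, other

The CPU, short for central processing unit (Central Processing Unit), is one of the core components of a computer's hardware; it executes program instructions and processes data. It is effectively the computer's brain. Today's post compares the "brains" of desktops and laptops. Performance differences. Core count and frequency: desktop CPUs usually support more cores and higher clock frequencies, which gives them an edge in multithreaded work and multitasking. Performance headroom: laptop CPUs are constrained by cooling and power delivery, so their power draw is usually lower,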

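- How can we make large models smarter?

吗喽一只

Artificial intelligence, algorithms, machine learning

With the rapid development of AI, large models have shown unprecedented capabilities in many fields, yet they still face challenges in understanding, generalization, and adaptability. To make large models smarter, we can adopt the following strategies from the angles of algorithmic innovation, data quality and diversity, and model architecture optimization. I. Algorithmic innovation. Optimize the loss function: the loss function is central to optimization and directly affects the model's final performance. Large models need more finely designed loss functions to capture the complexity and subtle distinctions in the data. For example, combining task characteristics and data characteristics, design multi-task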

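- Research on a 6G-oriented core network architecture

宋罗世家技术屋

Computer engineering science and exploration column, network architecture

Abstract: By analyzing the 6G network vision and the challenges facing core network architecture, this paper derives the requirements for a 6G-oriented core network architecture and, on that basis, proposes an intelligent core network architecture with inclusive capabilities. It realizes a 6G core network of "connectivity + AI + computing power + intelligence + capability exposure" that can deploy network functions on demand according to scenario and service needs and guarantee on-demand deterministic service. By restructuring the four major network function entities, it achieves multi-task coordination, forms flexible user-plane processing logic, and realizes autonomous management and intelligent services with inclusive network capabilities. 01 Overview. Massive IoT connectivity (mass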

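- [Machine Learning] Naive Bayes

可口的冰可乐

Machine learning, probability theory

3. Naive Bayes. The naive Bayes algorithm (Naive Bayes) is a simple yet effective classification algorithm based on Bayes' theorem. It is "naive" in assuming that the features are mutually independent, that is, conditionally independent given the class. Although this assumption does not always hold in practice, sensible smoothing and data preprocessing still let it perform well on many tasks. Advantages. Fast: because naive Bayes only needs to compute simple probabilities, training and prediction are very fast. Suited to high-dimensional data: even with a large number of features, naive Bayes still performs well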
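To make the independence assumption and the smoothing mentioned in this excerpt concrete, here is a minimal sketch of a multinomial naive Bayes text classifier with Laplace (add-alpha) smoothing. It is not the article's code: the function names `train_naive_bayes` and `predict`, the `alpha` parameter, and the toy data in the usage note are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples, alpha=1.0):
    """Count classes and per-class tokens from (tokens, label) pairs."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in samples:
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return {
        "class_counts": class_counts,
        "word_counts": word_counts,
        "vocab": vocab,
        "alpha": alpha,  # smoothing strength (assumed default of 1.0)
    }


def predict(model, tokens):
    """Return the label with the highest log posterior for the tokens."""
    total_docs = sum(model["class_counts"].values())
    vocab_size = len(model["vocab"])
    alpha = model["alpha"]
    best_label, best_score = None, -math.inf
    for label, n_docs in model["class_counts"].items():
        counts = model["word_counts"][label]
        denom = sum(counts.values()) + alpha * vocab_size
        # Log prior plus a sum of per-token log likelihoods: the sum is
        # exactly where the "features are independent" assumption is used.
        score = math.log(n_docs / total_docs)
        for tok in tokens:
            score += math.log((counts[tok] + alpha) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

With a toy training set of two "spam" and two "ham" token lists, `predict(model, ["win", "money"])` picks the class whose smoothed token probabilities are larger; smoothing keeps an unseen token from driving a class probability to zero.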

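- Multiprogramming and time sharing

yanlingyun0210

Operating systems

Categories (three): multiprogramming, time sharing, simple batch processing, plus some other notes. 1 Multiprogramming. 1. Motivation: a single user usually cannot keep the cpu and the devices busy all the time. 2. Concept: several jobs reside in memory at once and, under the control of the supervisor program, run interleaved (taking turns). 3. Goal: raise cpu utilization and make full use of parallelism. 2 Time sharing (multitasking) (time slices). 1. Time-sharing system: an online, multi-user, interactive operating system (in effect an extension of multiprogramming) (every user gets service in real time) (unix is a common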

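- What is the feature modulation operation in deep learning computer vision?

Wils0nEdwards

Deep learning, computer vision, artificial intelligence

What is feature modulation (Feature Modulation)? In deep learning and computer vision, feature modulation is a technique for increasing a model's flexibility and expressive power, and it has become increasingly important across many tasks in recent years. By dynamically adjusting the features of a network's intermediate layers, it lets the model adapt its behavior to different contexts, inputs, or tasks. Core concept of feature modulation: the basic idea is to change the properties of the feature representation through some form of parameter adjustment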
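The excerpt stops before it names a concrete form of modulation. One widely used form is a per-channel scale and shift (often called FiLM-style modulation), sketched below in plain Python. This is an assumption about what the article goes on to describe, and the names `modulate` and `params_from_condition` are hypothetical.

```python
def modulate(features, gamma, beta):
    """Scale and shift each channel: out[c][i] = gamma[c] * x[c][i] + beta[c]."""
    return [
        [g * x + b for x in channel]
        for channel, g, b in zip(features, gamma, beta)
    ]


def params_from_condition(condition, w_gamma, w_beta):
    """Hypothetical conditioning step: derive per-channel (gamma, beta)
    linearly from a scalar condition. A condition of 0.0 yields the
    identity modulation (gamma = 1, beta = 0)."""
    gamma = [1.0 + w * condition for w in w_gamma]
    beta = [w * condition for w in w_beta]
    return gamma, beta
```

For example, `modulate([[1.0, 2.0], [3.0, 4.0]], [2.0, 0.5], [0.0, 1.0])` doubles the first channel and halves-then-shifts the second. In a real network `gamma` and `beta` would come from a learned layer rather than the fixed weights assumed here.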

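- "The Transformer Model for Natural Language Processing Explained"

黑色叉腰丶大魔王

Natural language processing, transformer, artificial intelligence

I. Introduction. In natural language processing, the arrival of the Transformer model was a major breakthrough. It abandons the traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures and relies entirely on attention, achieving excellent performance on machine translation, text generation, question answering, and many other tasks. This article explains the principles, structure, and applications of the Transformer model. II. Background of the Transformer model. Before the Transformer appeared, RNNs and their variants (such as LSTM and GRU) were natural language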

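- Google releases the Android 15 source code

CIb0la

System security, operations, programmer life

Google has released the Android 15 source code to the Android Open Source Project (AOSP). Android 15 will roll out to Pixel phones in the coming weeks and to compatible phones from Samsung, Motorola, OnePlus, Xiaomi, and other vendors in the coming months. New features in Android 15 include: simplified passkey sign-in, theft detection, improved multitasking on large-screen devices, app access restrictions, an enhanced TalkBack screen reader, and Gemini AI integration for audio descriptions of images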

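- HarmonyOS lightweight kernel (M core) source analysis series, part 12: events (Event)

OpenHarmony_小贾

OpenHarmony, HarmonyOS, HarmonyOS development, harmonyos, openharmony, HarmonyOS kernel, mobile development, embedded hardware, driver development

An event (Event) is an inter-task communication mechanism that can be used to synchronize tasks. In a multitasking environment, tasks often need to synchronize with one another, and each wait is a synchronization point. Events can provide one-to-many and many-to-many synchronization. This article analyzes the source code of the event module in the HarmonyOS lightweight kernel to build a thorough understanding of how events are used. The source code discussed here is based on the OpenHarmony LiteOS-M kernel and is available from the open-source site https://gitee.com/openharmony/kernel_liteos_m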
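As an analogy for the one-to-many synchronization described above, the sketch below uses Python's standard `threading.Event`: several waiting threads block until a single event is set. It does not use or imitate the LiteOS-M event API; the function names and the `run_demo` helper are assumptions for illustration only.

```python
import threading


def wait_then_record(name, event, results, lock):
    """Block until the event is set, then record that this task ran."""
    event.wait()
    with lock:
        results.append(name)


def run_demo(n_waiters=3):
    """One setter releases many waiters (one-to-many synchronization)."""
    event = threading.Event()
    results, lock = [], threading.Lock()
    threads = [
        threading.Thread(
            target=wait_then_record,
            args=(f"task{i}", event, results, lock),
        )
        for i in range(n_waiters)
    ]
    for t in threads:
        t.start()
    event.set()  # a single "write" wakes every waiting task
    for t in threads:
        t.join()
    return sorted(results)
```

`threading.Event` carries a single flag, so this only illustrates the "many tasks wait on one signal" idea, not per-bit event masks.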

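- Which platforms offer voice-over jobs, and which suit beginners?

配音新手圈

1. Ximalaya FM (喜马拉雅FM). Ximalaya started out as an audio platform, and you can take on tasks through its back end. For example, the platform has an audiobook production section, which is effectively a voice-over task board: click "Join now" and you will find many tasks you can do with your own voice. Download the app, register an account, log in, open the Creation Center under your profile, choose "I want to earn money", and find the audiobook platform. It lists a great many voice-over tasks, with large numbers of novels and articles needing narration; a task pays roughly 50~100,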

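- How to check running processes in Linux

IT孔乙己

linux, debian, operations

Every day, developers use various applications and run commands in the terminal. These applications may include a browser, a code editor, a terminal, a video conferencing app, or a music player. Every application you open and every command you run creates a process, or task. One great strength of Linux and of modern computers is that they support multitasking, so multiple programs can run at the same time. Have you ever wondered how to check all the programs running on your machine? This article is for you: I will show you how to list, manage, and kill on a Linux computer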

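- MIT6.824 course: MapReduce

余为民同志

6.824, mapreduce, distributed systems

MapReduce: simplified data processing on large clusters. Summary: MapReduce is a programming model and an associated implementation for processing and generating large data sets. Users specify a map function that processes a key/value pair (Key/Value) to produce a set of intermediate key/value pairs, and a reduce function that merges all intermediate values associated with the same intermediate key. Many real-world tasks can be expressed in this model; concrete examples are given in the paper. Programs written in this functional style can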
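The map and reduce roles described in this summary can be sketched as a single-process word count. This is only an in-memory illustration of the programming model, not the distributed implementation from the paper or the course labs; `run_mapreduce`, `map_fn`, and `reduce_fn` are names chosen for this sketch.

```python
from collections import defaultdict


def map_fn(doc_name, text):
    """Emit an intermediate (word, 1) pair for every word in the document."""
    for word in text.split():
        yield word.lower(), 1


def reduce_fn(key, values):
    """Merge all intermediate values that share the same key."""
    return sum(values)


def run_mapreduce(inputs, map_fn, reduce_fn):
    """Run map over every input pair, group by intermediate key, then reduce."""
    intermediate = defaultdict(list)
    for key, value in inputs:
        for ikey, ivalue in map_fn(key, value):
            intermediate[ikey].append(ivalue)
    return {
        ikey: reduce_fn(ikey, ivalues)
        for ikey, ivalues in intermediate.items()
    }
```

Calling `run_mapreduce([("a", "the cat"), ("b", "The dog")], map_fn, reduce_fn)` groups both occurrences of "the" under one key before reducing. In the real system the grouping step is the shuffle between map workers and reduce workers.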

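- Common commands

九妄_b2a1

Linux is a multi-user, multitasking operating system. In linux everything is a file (including directories). init 0 shuts down; init 6 reboots. / is a slash, \ a backslash, - a hyphen, _ an underscore. ls: ls lists the current directory; ls -a shows hidden files; ls -l shows details in list form; -h is used together with the l option; ls -lh converts sizes to readable values; ls *.txt lists only files in txt format (the wildcard acts as a filter); ls 1.* lists only files whose names begin with 1; ls [157] matches any one of the characters inside the brackets; ls ?.txt lists single-character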

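- 倩馨's wealth-energy diary, Day 63

诺宝的馨妈

Today I want to share some thoughts on micro-habits; building good micro-habits can add a lot of color to our lives. Tidy up promptly. Many people know this experience: you toss things aside after using them instead of putting them away, and when you actually need them you spend a long time searching. If we learn to sort our belongings and return each item to its place after every use, we save time and life becomes more orderly. Set reminders. Many people now live in multitasking mode, and it is easy to forget things with the slightest lapse of attention. The palest ink beats the best memory; whether with a pen or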

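- Lua coroutines (Coroutine)

z2014z

lua, programming languages

Lua coroutines (Coroutine). Definition: a Lua coroutine is similar to a thread: it has its own stack, local variables, and instruction pointer, while sharing global variables and most other things with other coroutines. A coroutine can be thought of as a special kind of thread whose execution can be suspended and resumed, which allows non-preemptive multitasking. Coroutines are a very powerful feature, but they are also complex to use. Difference between threads and coroutines: the main difference is that a program can run several threads at the same time, whereas coroutines must cooperate with one another to run. At any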
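The non-preemptive multitasking described here can be illustrated with Python generators, which, like Lua coroutines, run only until they voluntarily yield. This is an analogy rather than Lua code: `worker` and `run_round_robin` are hypothetical names, and Python's `yield` stands in for Lua's `coroutine.yield`.

```python
from collections import deque


def worker(name, steps, log):
    """A cooperative task: do one step, then hand control back."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # suspension point, chosen by the task itself


def run_round_robin(tasks):
    """Resume each task in turn until all of them have finished."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)  # resume the task until its next yield
        except StopIteration:
            continue  # task finished, do not reschedule it
        queue.append(task)
```

Only one task runs at a time, and a task that never yields would starve the others, which is the trade-off of cooperative scheduling compared with preemptive threads.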

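- [Preparing for the software qualification exam (Embedded Systems Designer)] 04: Embedded software architecture

折途想要敲代码

Exam preparation, architecture, embedded hardware, mcu, microcontrollers

Embedded operating systems. Embedded systems have the following characteristics: code must be small enough to run within limited storage. They are application-oriented and can be trimmed and ported. They serve specific domains and can support multitasking. They are highly reliable, respond promptly, and run independently without human intervention. They have strong real-time requirements and require solid-state storage. They must be deterministic and predictable before the system goes into service. This usually appears in multiple-choice questions. Built-in test: BIT (Built-In Test) can detect and localize faults. It comes in the following four kinds. Power-on BIT: when the system powers up, all hardware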

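- Rust: developing a Restful API service in detail

许野平

#Rust, software engineering, Web, rust, restful, Warp, Tokio, hyper

0. About asynchronous programming. 0.1 Understanding asynchronous mechanisms. Runtime efficiency matters a great deal for back-end programs. I used to think multithreading was the ultimate approach to back-end design; only later did I find that asynchronous mechanisms are the key to squeezing every bit of efficiency out of the CPU. At first I misunderstood asynchronous programming and assumed that a multithreaded architecture was asynchronous programming. I later realized that multithreading is just one of the means of achieving asynchrony. In fact, even a single thread can do asynchronous programming, which means a single thread can execute multiple tasks concurrently. Asynchronous programming is mainly concerned with non-blocking execution of tasks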
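The claim that a single thread can run several tasks concurrently can be checked with a small sketch. The article itself works in Rust with Tokio; the example below uses Python's `asyncio` purely as an illustration, and the `fetch` and `main` names and the sleep durations are assumptions.

```python
import asyncio
import threading


async def fetch(name, delay, log):
    """Simulate non-blocking I/O and record which thread ran each step."""
    log.append((name, "start", threading.get_ident()))
    await asyncio.sleep(delay)  # yields to the event loop instead of blocking
    log.append((name, "done", threading.get_ident()))
    return name


async def main():
    log = []
    # Both tasks are in flight at once, on one thread.
    results = await asyncio.gather(
        fetch("a", 0.05, log),
        fetch("b", 0.01, log),
    )
    return results, log
```

Running `asyncio.run(main())` shows both tasks starting before either finishes, and every log entry carries the same thread id: the concurrency comes from interleaving at `await` points, not from extra threads.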

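- Learning Rust commands

若者いChiang

rust, learning, programming languages

Learning rust commands. rustc: compiles a rust file; follow it with the file containing the main function. ## Produces an executable .exe file and a .pdb file: rustc main.rs. cargo: Cargo is Rust's build system and package manager. It handles many tasks for you, such as building code, downloading dependencies, and compiling those libraries. ## Check the version: cargo --version ## Quickly create the project hello_world: cargo new hello_world ## Compile an executable and a .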

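- Which cloud rendering platform for still renders is actually the best in 2024?

水月rusuo

Cloud rendering, graphics rendering

In 2024 every cloud rendering platform has been adjusting its still-render business. Below I summarize where each platform stands this year across four aspects: feature design, rendering speed, rendering price, and user experience, for reference. I. Feature comparison. Detailed feature list (columns: cloud rendering platform, technical level, basic features, advanced features, unique features, pain points). 炫云: ☆☆☆☆☆; one-click rendering, automatic result download, progress preview; simultaneous multi-task rendering; test renders from 0.01 yuan; freely selectable modes for cost-effectiveness or speed; distributed rendering; one-click AO/color channels; free self-service retrieval of the original scene (within 7 days)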

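- Learning integrated circuits: what is an RTOS (real-time operating system)?

limengshi138392

integrated circuit, learning, embedded hardware, IoT, microsoft, FPGA development

RTOS: real-time operating system. RTOS, short for Real Time Operating System, is an operating system designed to meet real-time control requirements. When external events occur or data arrives, it processes them fast enough to control a production process or respond to the system being handled within a specified time. The main characteristics of an RTOS include timely response, high reliability, multitasking, determinism, resource management, priority scheduling, interrupt handling, and time management. I. Main characteristics of an RTOS. 1. Timely response and high reliability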

- linux

何李21高职

linux

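Linux, in full GNU/Linux, is a free-to-use, freely distributed UNIX-like operating system whose kernel was first released by Linus Benedict Torvalds on October 5, 1991. It was mainly inspired by the ideas of Minix and Unix and is a POSIX-based, multi-user, multitasking operating system that supports multithreading and multiple CPUs. It runs the main Unix tools, applications, and network protocols, and supports both 32-bit and 64-bit hardware. Linux inherits Unix's network-centric design philosophy and is a stable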

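- Living a regular life

苜溯

My life has been very irregular lately; the procrastination is back and I have been rushing through my days. I missed my daily post twice in a row, falling asleep exhausted at night and waking the next morning regretting the unfinished task. It suddenly hit me that this cannot go on. Yesterday was my first day back at work. I wrote my article during the day, found time to prepare today's lessons, and read a good stretch of "寻找家园" before bed. I went to sleep relaxed, unlike usual, when every night I am anxious about all the tasks still undone. I am on duty this week and have to get up early every day. Over the National Day holiday I was busy every day, and of everything I had planned to do, not one thing got done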

- python爬虫爬取京东商品评价_京东商品评论爬取实战

weixin_39835158

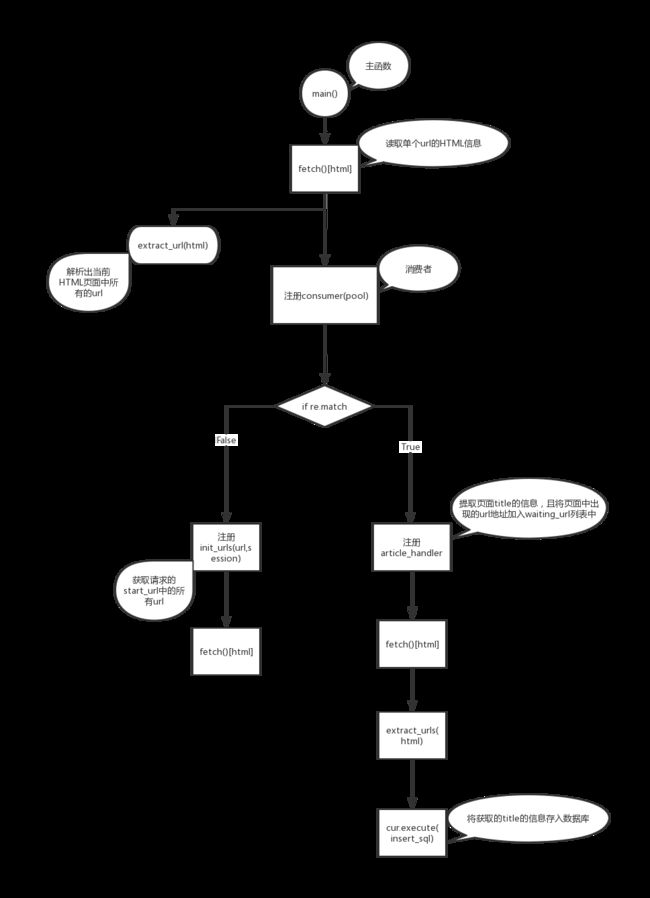

先说说为什么写这个小demo吧,说起来还真的算不上“项目”,之前有一个朋友面试,别人出了这么一道机试题,需求大概是这样紫滴:1.给定任意京东商品链接,将该商品评论信息拿下,存入csv或者数据库2.要求使用多任务来提高爬虫获取数据的效率3.代码简洁,规范,添加必要注释4.可以使用函数式编程,或者面向对象编程看到上面四个简单的需求,层次高的童鞋可能就看不下去了,因为太简单了,这里本人的目的是给初学爬虫

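- [Paper notes] Multi-Task Learning as a Bargaining Game

xhyu61

Machine learning, study notes, paper notes, paper reading, artificial intelligence, deep learning

Abstract: This paper treats the gradient combination step in multi-task learning as a bargaining game (bargaining game) in which the tasks negotiate an agreed-upon direction for the gradient update. Under certain conditions this problem has a unique solution (Nash Bargaining Solution), which can serve as a principled approach to multi-task learning. The paper proposes Nash-MTL and derives theoretical guarantees for its convergence. 1 Introduction. Most MTL optimization algorithms follow a common scheme: compute the gradients g of all tasks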

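- Python automated operations, day9: processes and threads

anhuoqiu1787

Operations, operating systems, python

Overview. We all know that windows is an operating system that supports multitasking. What does "multitasking" mean? Put simply, the operating system can run several tasks at once. For example, you browse the web, listen to MP3s, and rush an assignment in Word all at the same time; that is multitasking, with at least three tasks running simultaneously. Many other tasks also run quietly in the background without appearing on the desktop. Multi-core CPUs are now very common, but even the single-core CPUs of the past could multitask. Because a CPU executes code sequentially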

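- An introduction to Lua coroutines (coroutine), with pros, cons, and worked examples

乔丹搞IT

lua, very practical scripts, lua, programming languages

Code example: coroutines (coroutine) in Lua are a very powerful feature that lets a program multitask in a non-preemptive way. A coroutine resembles a thread, with its own stack, local variables, and instruction pointer, but shares global variables and other resources with other coroutines. Coroutines must cooperate to run: only one coroutine runs at any given time, and it is suspended only when necessary. Basic syntax and usage: to create a coroutine, use the coroutine.create function, which takes a function as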

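- Earn commission doing tasks at 10 yuan each (task platforms paying 10 yuan per task)

手机聊天员赚钱平台

Earn a 10-yuan commission per task? Many people now make money on their phones and look for task platforms; especially after being "educated" by assorted dubious money-making apps, they find that taking orders and doing tasks is simple and reliable. Many high-paying orders are attractive too, and a single one can earn tens of yuan. Here is a recommended paid-chat earning project: a legitimate paid-chat project on a major platform under NetEase Cloud, with no fees of any kind. There is a WeChat QR code below that you can scan to learn more, or you can click the link and contact us: https://www.jianshu.com/p/a8b7493d9f71 But many task platforms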

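- Ansible scripts: playbook

Jessica小戴

ansible, networking

Ansible scripts. I. playbook. 1. Components of a playbook. 1.1 tasks: each task is a module. 1.2 variables: store and pass data; they can be user-defined, global, or passed in from outside the script. 1.3 templates: used to generate configuration files and orchestrate multiple tasks. 1.4 handlers: actions triggered when certain conditions are met, typically used for restarts and similar operations. 1.5 roles: organize and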

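- Linux | Process pools explained: concurrent task handling with unnamed pipes (with implementation code)

koi li

Linux, linux, c++, algorithms, ubuntu, libraries, operations, servers

Swim in the sea of blood until the water turns blue. (何小鹏) 2024.8.31 Contents: I. Process pools. II. Core prerequisites for implementing a process pool with anonymous pipes: the four cases and five characteristics of pipes. III. Code implementation. IV. Implementation walkthrough: main(); 2. loadTask(); 3. channelInit(); question: why redirect the read end of the child process's pipe to standard input?; 4. ctrlProcess(); 5. channelClose(). I. Process pools. Multitasking is key to improving system performance and responsiveness.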

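- nginx proxy configuration for cross-origin requests from a web front end

刘正强

nginx, cms, Web

For the nginx proxy configuration, refer to the server section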

server {

listen 80;

server_name localhost;

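- spring study notes

caoyong

spring

I. Overview

a>. Core technologies: IOC and AOP

b>. Why development should target interfaces rather than implementations

Interfaces reduce the coupling between a component and the rest of the system. When the component no longer meets the system's needs, it can easily be swapped out without a major impact on the system as a whole

c>. The difficulty of programming to interfaces lies in how to initialize them (use the factory design pattern)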

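- Eclipse reports that the workspace is unavailable when opening it

0624chenhong

eclipse

When working on a project, you inevitably end up using the whole team's code or a workspace created by a previous colleague,

1. After switching accounts on the computer, Eclipse reports on startup that its directory is locked and cannot be accessed. Following the message leads to the directory G:\eclipse\configuration\org.eclipse.osgi\.manager, where the file .fileTableLock is reported as locked.

Fix: delete the .fileTableLock file,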

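- Is writing Javascript in an object-oriented style really necessary?

一炮送你回车库

JavaScript

Writing pages in object-oriented Javascript is popular these days; even pure-javascript mvc frameworks have appeared: ember

Note that this is an mvc framework at the javascript layer, not a j2ee mvc framework

My point is that javascript was never an object-oriented language, so object-oriented programs written in it feel somewhat awkward. When people bring up object orientation in js, the first thing they mention is reusability. So let me ask: how much of the js you write is actually reusable? With fu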

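- Iteration methods of the js array object

换个号韩国红果果

array

1. forEach: this method takes a function as its argument and applies it to every element

of the array; return statements in that function have no effect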

function square(num) {

print(num, num * num);

}

var nums = [1,2,3,4,5,6,7,8,9,10];

nums.forEach(square);

2. every: this method takes a function whose return value is a boolean

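- Understanding the Hibernate caching mechanism

归来朝歌

session, first-level cache, object persistence

With the session first-level cache in hibernate, the following situation can arise:

Problem description: I new an object and assign values to several of its fields, leaving some properties unassigned, and then call

the session.save() method. After the transaction commits, the following happens:

1: Fields that have a default value in the database end up empty

2: Since this is a persistent object, why can the object not see the default values at the end?

After debugging, the solution is as follows:

For problem 1, if you have set in the database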

- A collection of WebService invocation errors

darkranger

webservice

java.lang.NoClassDefFoundError: org/apache/commons/discovery/tools/DiscoverSingleton

An error occurs when calling the interface,

A simple WebService

import org.apache.axis.client.Call;import org.apache.axis.client.Service;

First, what is indispensable

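- Handling garbled Chinese text in JSP and Servlet

aijuans

Java Web

Handling garbled Chinese text in JSP and Servlet

A few days ago I studied some issues with garbled Chinese text in JSP and Servlet and wrote them up as a blog post; today I am updating it. It should now cover everyday encoding problems. I summarize them below in the hope of helping those who need it. I am still learning myself, so please bear with any shortcomings.

I. Garbled text on form submission:

Forms often carry Chinese text, so garbled characters are hard to avoid. A form can be submitted in two ways: get and post. So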

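- Six classic interview questions

atongyeye

Work, interviews

Preface: I am not good at communicating, so I often hit a wall in interviews. I have read plenty of interview guides online, and most are not very reliable. So I decided to write my own summary and draft personal answers based on my recent work, to have them ready when needed.

Here are the six most typical questions HR asks to learn about a candidate:

1 A brief self-introduction

This question mainly aims to establish two things: the candidate's background, and the candidate's ability to organize that background into suitable language.

My answer: (aimed at a technical interview; for an HR interview, you can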

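- contentResolver.query() parameters explained

百合不是茶

android, query() explained

Bookmarked from a csdn blog; the explanation is fairly detailed and worth a read for beginners. 1. Getting contact names

A simple example: this function retrieves the ID and NAME of every contact on the device.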


public void fetchAllContacts() {

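- How to resolve ora-00054: resource busy and acquire with nowait specified

bijian1013

oracle, database, kill, nowait

When a database user inserts, updates, or deletes data in a table, or adds a primary key or an index to a table, the error ora-00054: resource busy and acquire with nowait specified often appears. The main cause is that a transaction is still executing (or the resource is already locked by a transaction), so the operation fails.

1. The following statement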

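- Garbled text in web development

征客丶

spring, Web

In everything below, the front end uses the utf-8 character encoding

I. Receiving on the back end

1.1. Garbled text in get requests

In a get request, the parameters travel in the URL of the request rather than in a body;

Fix for the garbled text:

a. Configure the encoding in the web server: in tomcat, add URIEncoding="UTF-8" to the Connector;

1.2. Garbled text in post requests

In a post request, the parameters come in two parts,

1.2.1. url? parameters,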

- [Spark 16]: Spark SQL part 2, data sources and several ways to register tables

bit1129

spark

Spark SQL data sources and table schemas

case class

apply schema

parquet

json

JSON data source: prepare the source data

{"name":"Jack", "age": 12, "addr":{"city":"beijing&

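- Learning the JVM: tuning summary for -Xms -Xmx -Xmn -Xss

BlueSkator

-Xss, -Xmn, -Xms, -Xmx

Heap size settings. The maximum heap size in the JVM is limited in three ways: by the data model of the operating system (32-bit or 64-bit), by the available virtual memory, and by the available physical memory. On 32-bit systems the limit is generally 1.5G~2G; 64-bit operating systems impose no such limit on memory. On Windows Server 2003 with 3.5G of physical memory and JDK5.0, my tests showed a maximum setting of 1478m. Typical settings: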

java -Xmx355

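- jqGrid parameters explained (repost)

BreakingBad

jqGrid

jqGrid parameters explained. Categories:

Source code sharing

Personal notes, not for reference

Solving development problems 2012-05-09 20:29 84282 views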


jquery

服务器

parameters

function

ajax

string

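- Reading "研磨设计模式": code notes, proxy pattern (Proxy)

bylijinnan

java, design patterns

Note: this post is only for my own reference and understanding. For detailed analysis and the source code, please see the original author's blog at http://chjavach.iteye.com/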

import java.lang.reflect.InvocationHandler;

import java.lang.reflect.Method;

import java.lang.reflect.Proxy;

/*

* Below

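- Problems encountered when upgrading an app to iOS8

chenhbc

ios8, upgrade, iOS8

1. A very strange problem: the login screen has a check that jumps to the settings screen if a certain value does not exist. On systems before ios8 the jump works normally. On iOS8 the code has already executed into the next screen, but the interface does not transition; if the value has been set, however, the transition works normally. I struggled with this for more than two days. I had originally written the check in

-(void)viewWillAppear:(BOOL)animated

and the eventual fix was to move the check into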

-(void

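- What is the relationship between workflow and self-organization?

comsci

Design patterns, work

In current workflow systems, the nodes and the connections between them are drawn in advance according to actual management needs. This fixed model runs into many limits in practice. In particular, the dependencies between nodes are fixed and a node's processing ignores how the process as a whole is running, so the details and the whole are disconnected. We therefore propose a new idea: can a process be generated automatically through the self-organizing movement of its nodes? And what practical value would such a process have?

Here is a paper whose abstract reads: "For services in the grid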

- A new feature in Oracle11.2: the INSERT hint IGNORE_ROW_ON_DUPKEY_INDEX

daizj

oracle

The insert hint IGNORE_ROW_ON_DUPKEY_INDEX

Reposted from: http://space.itpub.net/18922393/viewspace-752123

In insert into tablea ...select * from tableb, a unique constraint violation causes the whole insert operation to fail. With the IGNORE_ROW_ON_DUPKEY_INDEX hint, unique

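- Binary trees: the heap

dieslrae

Binary trees

The heap discussed here is a complete binary tree in which every node is no smaller than its children; do not confuse it with the jvm heap. Because it is a complete binary tree, it can be built on an array. The rules for building the tree on an array are simple:

The parent index of a node is: (current index - 1)/2 (integer division)

The left child index of a node is: current index * 2 + 1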
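The index rules above translate directly into code. The sketch below adds the right-child rule (index * 2 + 2), which follows from the same array layout but is not stated in the excerpt, and a small checker for the "no node is smaller than its children" property. The function names are chosen for this illustration.

```python
def parent(i):
    """Parent index of node i (the root, index 0, has no parent)."""
    return (i - 1) // 2


def left(i):
    """Left child index of node i."""
    return 2 * i + 1


def right(i):
    """Right child index of node i (assumed from the same layout)."""
    return 2 * i + 2


def is_max_heap(items):
    """True if every node is no smaller than each of its children."""
    return all(items[parent(i)] >= items[i] for i in range(1, len(items)))
```

For the array `[9, 7, 8, 3, 5]`, node 1 (value 7) has children at indices 3 and 4, and `is_max_heap` returns `True`.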


- Learning C, part 8: structs

dcj3sjt126com

c

Why structs are needed; see the code

# include <stdio.h>

struct Student // define a student type with age, score, and sex; variables of this type can then be declared

{

int age;

float score;

char sex;

};

int main(void)

{

struct Student st = {80, 66.6,

- Installing golang on centos

dcj3sjt126com

centos

# Download the binary package from a mirror in China

wget -c http://www.golangtc.com/static/go/go1.4.1.linux-amd64.tar.gz

tar -C /usr/local -xzf go1.4.1.linux-amd64.tar.gz

# Add golang's bin directory to the global environment variables

cat >>/etc/profile<

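- 10. Performance tuning: monitoring: MySQL slow queries

frank1234

Performance tuning, MySQL, slow queries

1. Configure slow query logging

show variables where variable_name like 'slow%' ; -- show the default log path

Query result: -- may differ from machine to machine

slow_query_log_file=/var/lib/mysql/centos-slow.log

Edit the mysqld configuration file: /usr /my.cnf [usually at /etc/my.cnf; on this machine at /user/my.cn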

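- Getting the subclass name from a Java parent class

happyqing

java, this, parent class, subclass, class name

In an inheritance hierarchy, whether in the parent class or the subclass, this always refers to the instance of the class that was ultimately created with new, so in the parent class you can use this to obtain information about the subclass!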

package com.urthinker.module.test;

import org.junit.Test;

abstract class BaseDao<T> {

public void

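- The new Spring3.2 annotation @ControllerAdvice

jinnianshilongnian

@Controller

@ControllerAdvice is a new annotation introduced in spring3.2; as the name suggests, it roughly means controller enhancement. Let's first look at how @ControllerAdvice is implemented: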

@Target(ElementType.TYPE)

@Retention(RetentionPolicy.RUNTIME)

@Documented

@Component

public @interface Co

- Configuring multiple data sources in Java spring mvc

liuxihope

spring

Reposted from: http://www.itpub.net/thread-1906608-1-1.html

1. First, configure two databases

<bean id="dataSourceA" class="org.apache.commons.dbcp.BasicDataSource" destroy-method="close&quo

- Chapter 12: Ajax (part 2)

onestopweb

Ajax

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

- BW / Universe Mappings

blueoxygen

BO

BW Element

OLAP Universe Element

Cube Dimension

Class

Characteristic

A class with dimension and detail objects (Detail objects for key and description)

Hi

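- 11 mistakes experienced Java developers should watch out for

tomcat_oracle

java, multithreading, work, unit testing

#1. Not externalizing configuration properties into properties files or XML files. For example, not making the number of threads used by a batch job configurable in a properties file. Your batch program may run smoothly in both the DEV environment and the UAT (user acceptance testing) environment, but once it is deployed to PROD and run as a multithreaded program over larger data sets, it throws an IOException; the cause may be a different JDBC driver version, or the problem discussed in #2. If the thread count can be configured in a properties file, then making it a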

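- The pros and cons of promoting domestic operating systems

yananay

windows, linux, domestic operating systems

A trend has taken hold recently: getting rid of "foreign products". It started with applications, moved on to basic systems and databases, and has now reached operating systems. The reason is the "PRISM program", which finally made us recognize the risks of foreign products and start taking information security seriously. The operating system is the soul of the computer. Since it is the soul, for the sake of information security we naturally should use and promote domestic products. But is blind promotion necessarily right?

Let's start with information security. People have in fact been discussing it for a long time. Many years ago, it was rumored that a world-class network equipment manufacturer's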