利用word2vec词向量做textcnn的文本分类

思路如下:

- 读取数据

- 数据的y值处理

- 把文本做word2vec模型

- 文本分词

- 把分完的词做word2vec向量映射

- 建立神经网络模型,并训练

- 预测

直接上代码:

import pandas as pd

import numpy as np

import jieba

import re

import multiprocessing

from multiprocessing import Pool

from keras.utils import to_categorical

from keras.models import Model

from keras.layers import Dense, Embedding, Activation, merge, Input, Lambda, Reshape,BatchNormalization

from keras.layers import Conv1D, Flatten, Dropout, MaxPool1D, GlobalAveragePooling1D,SeparableConvolution1D

from keras import regularizers

from keras.layers.merge import concatenate

from sklearn.externals import joblib

import heapq

# 读取训练集和测试集数据

f=open(r'C:\Users\admin\Desktop\game_test\game\apptype_train.dat',encoding='utf-8')

sentimentlist = []

for line in f:

s = line.strip().split('\t')

sentimentlist.append(s)

f.close()

df_train=pd.DataFrame(sentimentlist,columns=['s_no','deal_code','text'])

# 训练集的数据处理

df_=df_train['deal_code'].str.split('|',expand=True)

df_.columns=['deal_code1','deal_code2']

df_2=pd.concat([df_train,df_],axis=1)

a=df_2[['s_no','deal_code1','text']]

a.columns=['s_no','deal_code2','text'] #a.rename(columns={'deal_code1':'deal_code2'}, inplace = True)

b=df_2[['s_no','deal_code2','text']]

df_3=pd.concat([a,b],axis=0)

df_train_end=df_3[df_3['deal_code2'].isnull().values==False]

# 读取测试集

f=open(r'C:\Users\admin\Desktop\game_test\game\app_desc.dat',encoding='utf-8')

sentimentlist = []

for line in f:

s = line.strip().split('\t')

sentimentlist.append(s)

f.close()

df_test=pd.DataFrame(sentimentlist,columns=['s_no','text'])

print('1:数据集读取成功')

# 标签表预处理(y值即label的映射,label的数量) eg:'你好':1 1:'你好'

label=list(set(df_train_end['deal_code2'].tolist()))

dig_lables = dict(enumerate(label))

lable_dig = dict((lable,dig) for dig, lable in dig_lables.items())

print('2:y值处理成功')

# 训练数据集标签预处理

df_train_end['标签_数字'] = df_train_end['deal_code2'].apply(lambda lable: lable_dig[lable])

# 文本分词

def seg_sentences(sentence):

sentence = re.sub(u"([^\u4e00-\u9fa5\u0030-\u0039\u0041-\u005a\u0061-\u007a])","",sentence)

sentence_seged = list(jieba.cut(sentence.strip()))

return sentence_seged

df_train_end['文本分词']=df_train_end['text'].apply(seg_sentences)

df_test['文本分词']=df_test['text'].apply(seg_sentences)

df_train_end['文本分词数量']=df_train_end['文本分词'].apply(lambda x: len(x))

print('3:分词成功')

# y值label的数量,以及one-hot y值的label数字

num_classes = len(dig_lables)

df_all=pd.concat([df_train_end['文本分词'],df_test['文本分词']])

train_lables = to_categorical(df_train_end['标签_数字'],num_classes=num_classes)

# word2vec词向量

from gensim.models import Word2Vec

import time

start_time = time.time()

model = Word2Vec(df_all, size=250, window=5, min_count=20, workers=4)

# word_vectors = model.wv 保存word2vec模型以及的词向量

model.save("word2vec.model")

model.wv.save_word2vec_format('output_vector_file.txt', binary=False)

end_time = time.time()

print("used time : %d s" % (end_time - start_time))

print('4:w2vmodel成功')

"""

把Word2vec向量转换成elmo向量的少部分代码,在本篇博客中可以忽略该注释

from gensim.models import KeyedVectors

models=KeyedVectors.load_word2vec_format(fname='output_vector_file.txt',binary=False)

words=models.vocab

with open(r'E:\python_data\hf\vocab.txt','w',encoding='utf-8') as f:

f.write(''+'\n')

f.write(''+'\n')

f.write(''+'\n') # bilm-tf 要求vocab有这三个符号,并且在最前面

for word in words:

f.write(word+'\n')

from gensim.models import Word2Vec

model = Word2Vec.load("word2vec.model")

"""

# 把词转换成word2vec的词向量

def output_vocab(vocab):

for k, v in vocab.items():

print(k)

def embedding_sentences(sentences,w2vModel):

all_vectors = []

embeddingDim = w2vModel.vector_size

embeddingUnknown = [0 for i in range(embeddingDim)]

for sentence in sentences:

this_vector = []

for word in sentence:

if word in w2vModel.wv.vocab:

this_vector.append(w2vModel[word])

else:

this_vector.append(embeddingUnknown)

all_vectors.append(this_vector)

return all_vectors

def padding_sentences(input_sentences, padding_token, padding_sentence_length = 200):

sentences = [sentence for sentence in input_sentences]

max_sentence_length = padding_sentence_length

l=[]

for sentence in sentences:

if len(sentence) > max_sentence_length:

sentence = sentence[:max_sentence_length]

l.append(sentence)

else:

sentence.extend([padding_token] * (max_sentence_length - len(sentence)))

l.append(sentence)

return (l, max_sentence_length)

# Get embedding vector

sentences, max_document_length = padding_sentences(df_train_end['文本分词'], '')

x = np.array(embedding_sentences(sentences,model))

print('5:padding_sentence完成')

def sepcnn(embedding_dims,max_len,num_class):

tensor_input = Input(shape=(max_len, embedding_dims))

cnn1 = SeparableConvolution1D(200, 3, padding='same', strides = 1, activation='relu',kernel_regularizer=regularizers.l1(0.00001))(tensor_input)

cnn1 = BatchNormalization()(cnn1)

cnn1 = MaxPool1D(pool_size=100)(cnn1)

cnn2 = SeparableConvolution1D(200, 4, padding='same', strides = 1, activation='relu',kernel_regularizer=regularizers.l1(0.00001))(tensor_input)

cnn2 = BatchNormalization()(cnn2)

cnn2 = MaxPool1D(pool_size=100)(cnn2)

cnn3 = SeparableConvolution1D(200, 5, padding='same', strides = 1, activation='relu',kernel_regularizer=regularizers.l1(0.00001))(tensor_input)

cnn3 = BatchNormalization()(cnn3)

cnn3 = MaxPool1D(pool_size=100)(cnn3)

cnn = concatenate([cnn1,cnn2,cnn3], axis=-1)

dropout = Dropout(0.2)(cnn)

flatten = Flatten()(dropout)

dense = Dense(512, activation='relu')(flatten)

dense = BatchNormalization()(dense)

dropout = Dropout(0.2)(dense)

tensor_output = Dense(num_class, activation='softmax')(dropout)

model = Model(inputs = tensor_input, outputs = tensor_output)

print(model.summary())

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

return model

model_sepcnn = sepcnn(embedding_dims=250,max_len=200,num_class=num_classes)

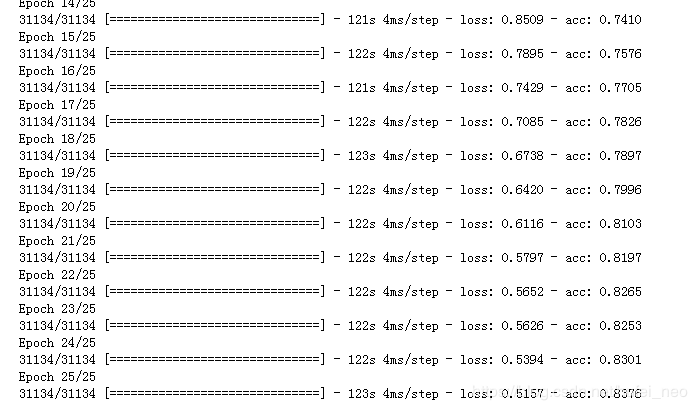

model_sepcnn.fit(x,train_lables,epochs=25, batch_size=512)

print('6:训练完成')

# 保存模型

joblib.dump(model_sepcnn, "train_model.m")

print('7:保存模型完成')

# 预测top2的测试集结果

sentences, max_document_length = padding_sentences(df_test['文本分词'], '')

x_test = np.array(embedding_sentences(sentences,model))

pred=model_sepcnn.predict(x_test)

c=[]

for i in range(len(pred)):

b=heapq.nlargest(2, range(len(pred[i])), pred[i].take)

c.append(b)

d=pd.DataFrame(c)

df_test['label1'] = [dig_lables[dig] for dig in d[0].tolist()]

df_test['label2'] = [dig_lables[dig] for dig in d[1].tolist()]

df_test.rename(columns={'s_no':'id'}, inplace = True)

df_test[['id','label1','label2']].to_csv(r'C:\Users\admin\Desktop\game_test\pre_test_1.csv',encoding='utf-8',index=False)

print('8:测试数据并导出完成')

"""

另一种获取预测top2的测试集结果的方法

sentences, max_document_length = padding_sentences(df_test['文本分词'], '')

x_test = embedding_sentences(sentences,model)

# 结果预测

def get_topk_lables(martix):

pred_result = model_sepcnn.predict(martix.reshape(1,200,200))[0]

ranks_result = sorted(enumerate(pred_result),key=lambda item: -item[1])

topk_index = [i[0] for i in ranks_result[:2]]

pred_result=[]

for i in topk_index:

pred_result.append(dig_lables.get(i))

return pred_result

#输出结果

result_test=[]

for i in range(len(x_test)):

pred = get_topk_lables(np.array(x_test[i]))

result_test.append(pred)

df_test['result']=result_test

df_test['label1']=df_test.result.apply(lambda x:x[0])

df_test['label2']=df_test.result.apply(lambda x:x[1])

df_test.rename(columns={'s_no':'id'}, inplace = True)

data_to_out=df_test[['id','label1','label2']]

data_to_out.to_csv(r'E:\python_data\hf\pre_test_8.csv',encoding='utf-8',index=False)

"""

可以看出最后收敛很慢了,epochs可以适当调小

总结(优化点):

- 从word2vec模型的参数优化(也可以把word2vec的模型转换成elmo向量)

- 神经网络架构的优化(参数的优化:包括卷积数,池化大小,dropout,dense层,epochs等等)