Using the ProxySQL Middleware for MySQL Read/Write Splitting and Application-Transparent Primary Failover

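Yesterday I set up read/write splitting with ProxySQL, but for real-world use that topology is still incomplete: if the primary node fails, the databases in the topology can no longer be used through ProxySQL. ProxySQL therefore also needs to switch to a new primary automatically when the current one fails.

MySQL Group Replication (MGR) can elect a new primary after the current one fails, but the application layer cannot be expected to change the IP address it connects to. Combining MGR with ProxySQL makes the switch to the new primary transparent to the application.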

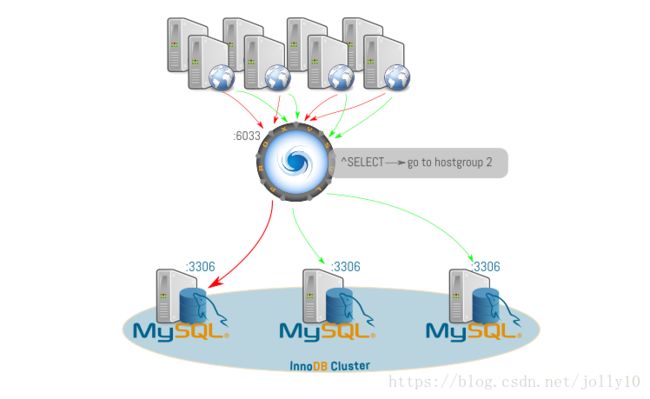

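The idea behind the diagram above: the three nodes form a group in multi-primary mode, and the application connects only to the ProxySQL middleware. ProxySQL decides which node to use based on the statement type (whether it is a SELECT): one node takes writes and two take reads. (All three are in fact writable; the split is enforced by ProxySQL.) If the default writable node goes down, a scheduler that ProxySQL runs periodically promotes one of the read nodes to be the writable node, which makes the primary failure transparent to the application.

Throughout this process the application needs no changes. The time from the failure being detected to connections pointing at the new primary and service returning to normal is on the order of seconds. The configuration is as follows:

1. Server overview: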

qht131 172.17.61.131 master1

qht132 172.17.61.132 master2

qht133 172.17.61.133 master3

qht134 172.17.61.134 proxysql

Group Replication topology in multi-primary mode:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | bb0dea82-58ed-11e8-94e5-000c29e8e89b | qht131 | 3306 | ONLINE |

| group_replication_applier | bb0dea82-58ed-11e8-94e5-000c29e8e90b | qht132 | 3306 | ONLINE |

| group_replication_applier | bb0dea82-58ed-11e8-94e5-000c29e8e91b | qht133 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

2. Create the accounts ProxySQL needs on the database side (skip this step if they already exist)

mysql> CREATE USER 'proxysql'@'%' IDENTIFIED BY 'proxysql';

Query OK, 0 rows affected (0.41 sec)

mysql> GRANT ALL ON * . * TO 'proxysql'@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> create user 'sbuser'@'%' IDENTIFIED BY 'sbpass';

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL ON * . * TO 'sbuser'@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.07 sec)

3. Create the functions and view that check MGR node status

As in the earlier post, run the SQL from the link below on an MGR primary node:

https://github.com/lefred/mysql_gr_routing_check/blob/master/addition_to_sys.sql

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id));

END$$

CREATE VIEW gr_member_routing_candidate_status AS SELECT

sys.gr_member_in_primary_partition() as viable_candidate,

IF( (SELECT (SELECT GROUP_CONCAT(variable_value) FROM

performance_schema.global_variables WHERE variable_name IN ('read_only',

'super_read_only')) != 'OFF,OFF'), 'YES', 'NO') as read_only,

sys.gr_applier_queue_length() as transactions_behind, Count_Transactions_in_queue as 'transactions_to_cert' from performance_schema.replication_group_member_stats;$$

DELIMITER ;

4. Add accounts in ProxySQL

mysql> INSERT INTO MySQL_users(username,password,default_hostgroup) VALUES ('proxysql','proxysql',1);

Query OK, 1 row affected (0.00 sec)

mysql> UPDATE global_variables SET variable_value='proxysql' where variable_name='mysql-monitor_username';

Query OK, 1 row affected (0.00 sec)

mysql> UPDATE global_variables SET variable_value='proxysql' where variable_name='mysql-monitor_password';

Query OK, 1 row affected (0.00 sec)

mysql> LOAD MYSQL SERVERS TO RUNTIME;

Query OK, 0 rows affected (0.23 sec)

mysql> SAVE MYSQL SERVERS TO DISK;

Query OK, 0 rows affected (0.08 sec)

[root@qht134 proxysql]# mysql -uproxysql -pproxysql -h 127.0.0.1 -P6033 -e"select @@hostname"

mysql: [Warning] Using a password on the command line interface can be insecure.

+------------+

| @@hostname |

+------------+

| qht131 |

+------------+

5. Configure ProxySQL

mysql> delete from mysql_servers;

Query OK, 6 rows affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(1,'172.17.61.131',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(1,'172.17.61.132',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(1,'172.17.61.133',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(2,'172.17.61.131',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(2,'172.17.61.132',3306);

Query OK, 1 row affected (0.00 sec)

mysql> insert into mysql_servers (hostgroup_id, hostname, port) values(2,'172.17.61.133',3306);

Query OK, 1 row affected (0.00 sec)

mysql> select * from mysql_servers ;

+--------------+---------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

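6 rows in set (0.00 sec)

hostgroup_id = 1 is the write group. All three nodes are listed in it, but because of the single-writer restriction described earlier, only one of them will stay ONLINE. hostgroup_id = 2 is the read group and contains every MGR node. For now every row is ONLINE; the status column will change once the scheduler is configured.

With this hostgroup layout, writes are meant to go to the ONLINE node in hostgroup 1 (the write node) and reads to the ONLINE nodes in hostgroup 2. Until the query rule in step 7 is added, all statements go to the user's default hostgroup, which is 1 here. Make sure no earlier ProxySQL read/write splitting rules are still in place. I configured some yesterday, so I delete them here to keep them from affecting the tests below.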

mysql> delete from mysql_query_rules;

Query OK, 2 rows affected (0.50 sec)

mysql> commit;

Query OK, 0 rows affected (0.00 sec)

Finally, load the global_variables, mysql_servers and mysql_users tables to RUNTIME, and then save them to DISK:

mysql> LOAD MYSQL VARIABLES TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> SAVE MYSQL VARIABLES TO DISK;

Query OK, 94 rows affected (0.02 sec)

mysql> LOAD MYSQL SERVERS TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> SAVE MYSQL SERVERS TO DISK;

Query OK, 0 rows affected (0.03 sec)

mysql> LOAD MYSQL USERS TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> SAVE MYSQL USERS TO DISK;

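Query OK, 0 rows affected (0.01 sec)

6. Configure the scheduler

First, download the scripts from GitHub at https://github.com/ZzzCrazyPig/proxysql_groupreplication_checker

The repository offers three scripts:

proxysql_groupreplication_checker.sh: for multi-primary mode; supports read/write splitting and failover, and allows several nodes to accept writes at the same time

gr_mw_mode_cheker.sh: for multi-primary mode; supports read/write splitting and failover, but only one node can accept writes at any given time

gr_sw_mode_checker.sh: for single-primary mode; supports read/write splitting and failover

My environment runs in multi-primary mode, so I chose proxysql_groupreplication_checker.sh.

Next, put proxysql_groupreplication_checker.sh in /var/lib/proxysql/ and make it executable (important).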

[root@qht134 ~]# chmod a+x /var/lib/proxysql/proxysql_groupreplication_checker.sh

Finally, insert the following row into ProxySQL's scheduler table, load it to RUNTIME so it takes effect, and save it to disk:

mysql> INSERT INTO scheduler(id,interval_ms,filename,arg1,arg2,arg3,arg4, arg5)

-> VALUES (1,'10000','/var/lib/proxysql/proxysql_groupreplication_checker.sh','1','2','1','0','/var/lib/proxysql/proxysql_groupreplication_checker.log');

Query OK, 1 row affected (0.00 sec)

mysql> LOAD SCHEDULER TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> SAVE SCHEDULER TO DISK;

Query OK, 0 rows affected (0.03 sec)

The scheduler columns:

active: 1 enables the scheduler to run the script

interval_ms: how often the script is invoked, in milliseconds (e.g. 5000 means every 5 s)

filename: path of the script file

arg1~arg5: the input parameters passed to the script

The parameters of proxysql_groupreplication_checker.sh are:

arg1 is the hostgroup_id for writes

arg2 is the hostgroup_id for reads

arg3 is the number of writers we want active at the same time

arg4 indicates whether the member acting as writer is also a candidate for reads

arg5 is the log file
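To make the effect of arg1 to arg4 concrete, here is a minimal Python sketch of the kind of decision the checker makes on each run. It is a simplified model written for this post, not the script's actual code: the names Node and plan_statuses are hypothetical, and the rule "pick the first healthy writable nodes in listed order" is an assumption that happens to match the mysql_servers outputs shown in this post.

```python
from dataclasses import dataclass


@dataclass
class Node:
    host: str
    viable: bool     # viable_candidate = 'YES' in the status view
    read_only: bool  # read_only = 'YES' in the status view


def plan_statuses(nodes, write_hg=1, read_hg=2, num_writers=1,
                  writer_is_reader=False):
    """Return {(hostgroup_id, host): status} for both hostgroups.

    write_hg, read_hg, num_writers and writer_is_reader stand in for
    arg1..arg4 of the scheduler entry (assumed simplified semantics).
    """
    # Assumption: the first healthy, writable nodes in listed order win.
    writers = [n.host for n in nodes
               if n.viable and not n.read_only][:num_writers]
    plan = {}
    for n in nodes:
        is_writer = n.host in writers
        plan[(write_hg, n.host)] = "ONLINE" if is_writer else "OFFLINE_SOFT"
        # A healthy node serves reads unless it is an active writer
        # and writers are excluded from reads (arg4 = 0).
        readable = n.viable and (writer_is_reader or not is_writer)
        plan[(read_hg, n.host)] = "ONLINE" if readable else "OFFLINE_SOFT"
    return plan


if __name__ == "__main__":
    nodes = [
        Node("172.17.61.131", viable=True, read_only=False),
        Node("172.17.61.132", viable=True, read_only=False),
        Node("172.17.61.133", viable=True, read_only=False),
    ]
    for key, status in sorted(plan_statuses(nodes).items()):
        print(key, status)
```

Marking the first node as read_only=True in this model moves the ONLINE row in hostgroup 1 to the second node, which is the behavior the failover test in step 8 relies on.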

Once the scheduler entry is loaded, the script analyzes the current environment. mysql_servers now shows that only qht131 is writable, while qht132 and qht133 serve reads.

mysql> select * from mysql_servers ;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

6 rows in set (0.00 sec)

Because I set the scheduler's arg4 to 0, the writable node is not used for reads. Let's try setting arg4 to 1:

mysql> update scheduler set arg4=1;

Query OK, 1 row affected (0.00 sec)

mysql> select * from scheduler;

+----+--------+-------------+--------------------------------------------------------+------+------+------+------+---------------------------------------------------------+---------+

| id | active | interval_ms | filename | arg1 | arg2 | arg3 | arg4 | arg5 | comment |

+----+--------+-------------+--------------------------------------------------------+------+------+------+------+---------------------------------------------------------+---------+

| 1 | 1 | 10000 | /var/lib/proxysql/proxysql_groupreplication_checker.sh | 1 | 2 | 1 | 1 | /var/lib/proxysql/proxysql_groupreplication_checker.log | |

+----+--------+-------------+--------------------------------------------------------+------+------+------+------+---------------------------------------------------------+---------+

1 row in set (0.00 sec)

mysql> SAVE SCHEDULER TO DISK;

Query OK, 0 rows affected (0.01 sec)

mysql> LOAD SCHEDULER TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

6 rows in set (0.00 sec)

With arg4 set to 1, qht131 serves reads as well as writes.

For the tests below, set arg4 back to 0:

mysql> update scheduler set arg4=0;

Query OK, 1 row affected (0.00 sec)

mysql> SAVE SCHEDULER TO DISK;

Query OK, 0 rows affected (0.02 sec)

mysql> LOAD SCHEDULER TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

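6 rows in set (0.00 sec)

The gr_member_routing_candidate_status view on each node reports whether that node is healthy. ProxySQL, through the scheduler script, reads this view to decide whether the node can be used.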

mysql> select * from sys.gr_member_routing_candidate_status\G

*************************** 1. row ***************************

viable_candidate: YES

read_only: NO

transactions_behind: 0

transactions_to_cert: 0

1 row in set (0.01 sec)
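The viable_candidate and transactions_behind columns come from the sys functions created in step 3. As a rough illustration of what those functions compute, the Python sketch below mirrors gr_member_in_primary_partition (a member is viable when it is ONLINE and fewer than half of the members are not ONLINE) and GTID_COUNT (the number of transactions in a GTID set). The Python function names are hypothetical, and the GTID parser assumes the classic untagged uuid:interval[:interval] format.

```python
def in_primary_partition(own_state, member_states):
    """Mirror of the viable_candidate check: the member itself must be
    ONLINE and fewer than half of all members may be in another state."""
    not_online = sum(1 for state in member_states if state != "ONLINE")
    return own_state == "ONLINE" and not_online < len(member_states) / 2


def gtid_count(gtid_set):
    """Count the transactions in a GTID set such as
    'uuid:1-5:7,uuid2:1-3' (assumes untagged GTIDs)."""
    total = 0
    for part in gtid_set.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        # The first field is the server UUID; the rest are intervals.
        for interval in part.split(":")[1:]:
            if "-" in interval:
                start, end = interval.split("-")
                total += int(end) - int(start) + 1
            else:
                total += 1
    return total


if __name__ == "__main__":
    members = ["ONLINE", "ONLINE", "ONLINE"]
    print(in_primary_partition("ONLINE", members))
    print(gtid_count("bb0dea82-58ed-11e8-94e5-000c29e8e89b:1-5:7"))
```

In the view, transactions_behind is this count applied to the received GTID set minus the executed GTID set, so 0 means the applier queue is empty.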

7. Set up read/write splitting:

mysql> insert into mysql_query_rules (active, match_pattern, destination_hostgroup, apply)

-> values (1,"^SELECT",2,1);

Query OK, 1 row affected (0.00 sec)

mysql> LOAD MYSQL QUERY RULES TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

mysql> SAVE MYSQL QUERY RULES TO DISK;

Query OK, 0 rows affected (0.03 sec)

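Rules are matched with regular expressions.

active controls whether the routing rule is enabled.

match_pattern is the regular expression to match, and destination_hostgroup is the hostgroup that matching statements are sent to; here SELECT statements go to group 2.

apply = 1 means that once this rule matches, no further rules are evaluated and the statement is forwarded immediately.

SELECT ... FOR UPDATE has to run on group 1, so add a rule for it. Rules are evaluated in ascending rule_id order, so this rule only takes effect if its rule_id is lower than that of the ^SELECT rule: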

insert into mysql_query_rules(active,match_pattern,destination_hostgroup,apply) values(1,'^SELECT.*FOR UPDATE$',1,1);
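Because apply = 1 stops evaluation at the first match, the relative order of the two rules matters. The Python sketch below is a simplified model of that behavior, not ProxySQL's implementation: the route function and the rule tuples are hypothetical, rules are tried in ascending rule_id order, and matching is case-insensitive (assumed to reflect ProxySQL's default CASELESS modifier).

```python
import re


def route(sql, rules, default_hostgroup=1):
    """Return the destination hostgroup for a statement.

    rules: iterable of (rule_id, match_pattern, destination_hostgroup, apply).
    """
    destination = default_hostgroup
    for _rule_id, pattern, hostgroup, apply in sorted(rules, key=lambda r: r[0]):
        if re.search(pattern, sql, re.IGNORECASE):
            destination = hostgroup
            if apply:
                break  # apply = 1: stop evaluating further rules
    return destination


# FOR UPDATE rule first: locking reads reach the write hostgroup.
GOOD_ORDER = [
    (1, r"^SELECT.*FOR UPDATE$", 1, 1),
    (2, r"^SELECT", 2, 1),
]

# ^SELECT first: it swallows every SELECT, including FOR UPDATE.
BAD_ORDER = [
    (1, r"^SELECT", 2, 1),
    (2, r"^SELECT.*FOR UPDATE$", 1, 1),
]

if __name__ == "__main__":
    locking_read = "SELECT * FROM t WHERE id = 1 FOR UPDATE"
    print(route(locking_read, GOOD_ORDER))
    print(route(locking_read, BAD_ORDER))
    print(route("select @@hostname", GOOD_ORDER))
    print(route("INSERT INTO t VALUES (1)", GOOD_ORDER))
```

Statements that match no rule fall back to the user's default_hostgroup, which is why writes reach group 1 without a rule of their own.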

Connecting to ProxySQL in a loop shows that, because the statement is a SELECT, the connections always land on qht132 and qht133:

[root@qht133 ~]# while true; do mysql -h 172.17.61.134 -u proxysql -pproxysql -P 6033 -e "select @@hostname, sleep(3)" 2>/dev/null; done

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht132 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht133 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht132 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht133 | 0 |

+------------+----------+

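8. Make failures transparent to the application:

With read/write splitting in place, qht131 is the writable node and qht132 and qht133 are the read nodes.

If qht131 becomes read-only, can the application write through another node without any change?

Manually switch qht131 to read-only mode: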

mysql> set global read_only=1;

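Query OK, 0 rows affected (0.00 sec)

Looking at mysql_servers, qht132 in group 1 was automatically set to ONLINE, and qht131 and qht133 in group 2 are now ONLINE. In other words, qht132 has become the writable node and the other two serve reads.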

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

6 rows in set (0.00 sec)

The looping test connection confirms that SELECT statements now go to qht131 and qht133.

[root@qht133 ~]# while true; do mysql -h 172.17.61.134 -u proxysql -pproxysql -P 6033 -e "select @@hostname, sleep(3)" 2>/dev/null; done

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht133 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht131 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht133 | 0 |

+------------+----------+

+------------+----------+

| @@hostname | sleep(3) |

+------------+----------+

| qht131 | 0 |

+------------+----------+

After qht131 is made writable again, mysql_servers returns to its previous state.

mysql> set global read_only=0;

Query OK, 0 rows affected (0.00 sec)

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

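6 rows in set (0.00 sec)

In a further test I stopped group replication on qht131 (stop group_replication), and mysql_servers switched to a new writable node as expected. Once qht131 rejoins the group, mysql_servers sets it back to ONLINE. The state while qht131 is out of the group: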

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

6 rows in set (0.00 sec)

Common errors:

mysql> select * from mysql_servers ;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | OFFLINE_HARD | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

All nodes went offline. The error log shows:

[2018-05-22 23:57:52] read node [hostgroup_id: 2, hostname: 172.17.61.133, port: 3306, isOK: 0] is not OK, we will set it's status to be 'OFFLINE_SOFT'

ERROR 1142 (42000) at line 1: SELECT command denied to user 'proxysql'@'qht134' for table 'gr_member_routing_candidate_status'

[2018-05-22 23:57:55] current write node [hostgroup_id: 2, hostname: 172.17.61.131, port: 3306, isOK: 0] is not OK, we need to do switch over

ERROR 1142 (42000) at line 1: SELECT command denied to user 'proxysql'@'qht134' for table 'gr_member_routing_candidate_status'

[2018-05-22 23:57:55] read node [hostgroup_id: 2, hostname: 172.17.61.132, port: 3306, isOK: 0] is not OK, we will set it's status to be 'OFFLINE_SOFT'

ERROR 1142 (42000) at line 1: SELECT command denied to user 'proxysql'@'qht134' for table 'gr_member_routing_candidate_status'

The error log points to a permission problem: the proxysql user does not have sufficient privileges to read the view.

Fix:
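When every node drops to OFFLINE at once, it helps to check whether the checker log is dominated by a single MySQL error. The sketch below is a small hypothetical helper (count_mysql_errors is not part of the checker script) that tallies the MySQL error codes found in log lines like the ones above.

```python
import re
from collections import Counter

# Matches client error lines such as:
#   ERROR 1142 (42000) at line 1: SELECT command denied ...
ERROR_RE = re.compile(r"^ERROR (\d+) \((\w+)\)")


def count_mysql_errors(lines):
    """Return a Counter mapping MySQL error code -> occurrences."""
    counts = Counter()
    for line in lines:
        match = ERROR_RE.match(line.strip())
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "[2018-05-22 23:57:52] read node [hostgroup_id: 2, hostname: "
        "172.17.61.133, port: 3306, isOK: 0] is not OK, we will set "
        "it's status to be 'OFFLINE_SOFT'",
        "ERROR 1142 (42000) at line 1: SELECT command denied to user "
        "'proxysql'@'qht134' for table 'gr_member_routing_candidate_status'",
    ]
    for code, n in count_mysql_errors(sample).items():
        print(f"error {code}: {n} occurrence(s)")
```

In practice the lines would be read from the log file passed as arg5 to the scheduler entry.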

mysql> GRANT ALL ON * . * TO 'proxysql'@'%';

mysql> flush privileges;

mysql> select * from mysql_servers;

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 1 | 172.17.61.131 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.132 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 1 | 172.17.61.133 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.131 | 3306 | OFFLINE_SOFT | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.132 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 2 | 172.17.61.133 | 3306 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+---------------+------+--------------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

6 rows in set (0.00 sec)

References:

http://lefred.be/content/ha-with-mysql-group-replication-and-proxysql/

https://blog.csdn.net/d6619309/article/details/54602556